Multi-frame image restoration for face recognition

MULTI-FRAME IMAGE RESTORATION FOR FACE RECOGNITION Frederick W.Wheeler,Xiaoming Liu,Peter H.Tu and Ralph T.Hoctor

Visualization and Computer Vision Lab

GE Global Research,Niskayuna,NY,USA

{wheeler,liux,tu,hoctor}@https://www.360docs.net/doc/03624513.html,

ABSTRACT

Face recognition at a distance is a challenging and important law-enforcement surveillance problem,with low image reso-lution and blur contributing to the dif?culties.We present a method for combining a sequence of video frames of a sub-ject in order to create a restored image of the face with re-duced blur.A generic Active Appearance Model of face shape and appearance is used for registration.By warping and av-eraging registered video frames,noise is reduced,allowing a Wiener?lter to deblur the face to a greater degree than can be achieved on a single video frame.This process is theoret-ically justi?ed and tested with real-world outdoor video us-ing a PTZ camera and a commercial face recognition engine. Improvement is demonstrated for both face recognition and authentication.

1.INTRODUCTION

Automatic face recognition at a distance is of growing impor-tance to many real-world law enforcement surveillance appli-cations.However,performance of existing face recognition systems is often inadequate due to the low-resolution of sub-ject probe images[1].Our present goal is to improve the accu-racy and extend the range of face recognition through multi-frame facial image restoration from video.

In surveillance systems,a subject is typically captured on video.Current commercial face recognition algorithms work on still images so face recognition applications generally ex-tract a single frame with a suitable view of the face.In a sense,this is throwing away a great deal of information.We expect to improve facial image resolution and face recogni-tion by exploiting the fact that the face is seen in many video frames,and combining those frames to make a single restored facial image.

The?eld of image super-resolution is concerned with us-ing multiple images or video frames of the same object or scene to make one image of superior quality[2,3,4].Quality This project was supported by award#2005-IJ-CX-K060awarded by the National Institute of Justice,Of?ce of Justice Programs,US Department of Justice.The opinions,?ndings,and conclusions or recommendations ex-pressed in this publication are those of the authors and do not necessarily re?ect the views of the Department of Justice.improvement can come from noise reduction through averag-ing,deblurring,and de-aliasing.It is also possible to improve image quality through modeling,or use of a statistical prior, though such methods are equally applicable and bene?cial to single image restoration[5].It is generally accepted that super-resolution is achieved when the restored image contains information above the Nyquist frequency of the individual ob-served images,and this can be achieved with signal process-ing.

In principle the faces can be super-resolved given accu-rate registration and an PSF that passes spatial frequencies above the Nyquist frequency.As an intermediate step in our progress in this area we demonstrate here improved facial res-olution and sharpness gained through registration,noise re-duction and classic Wiener image restoration.Our current baseline multi-frame restoration approach is described in this paper.Given video of an unknown subject we?t an Active Appearance Model(AAM)[6,7]to the face in each frame.A set of about10consecutive frames are then combined to pro-duce the restored image.A base frame of reference at twice the pixel resolution and the same orientation as the central video frame is de?ned.Each video frame is warped using bilinear interpolation to the base frame using the registration de?ned by the AAM.These warped frames are averaged and deblurred using a Wiener?lter[8,9].While this is techni-cally not super-resolution in the sense de?ned above,signi?-cant image deblurring is achieved and this baseline approach shows clear improvement in image quality.

To validate the bene?t of this technique we utilize the commercial face recognition package FaceIt R SDK ver.6.1 (Identix Inc.)with single video frames and restored images. Our goal is to determine the degree to which face recognition and veri?cation is improved by the image restoration process. Tests are performed using video collected in real-world out-door conditions in our surveillance testbed.

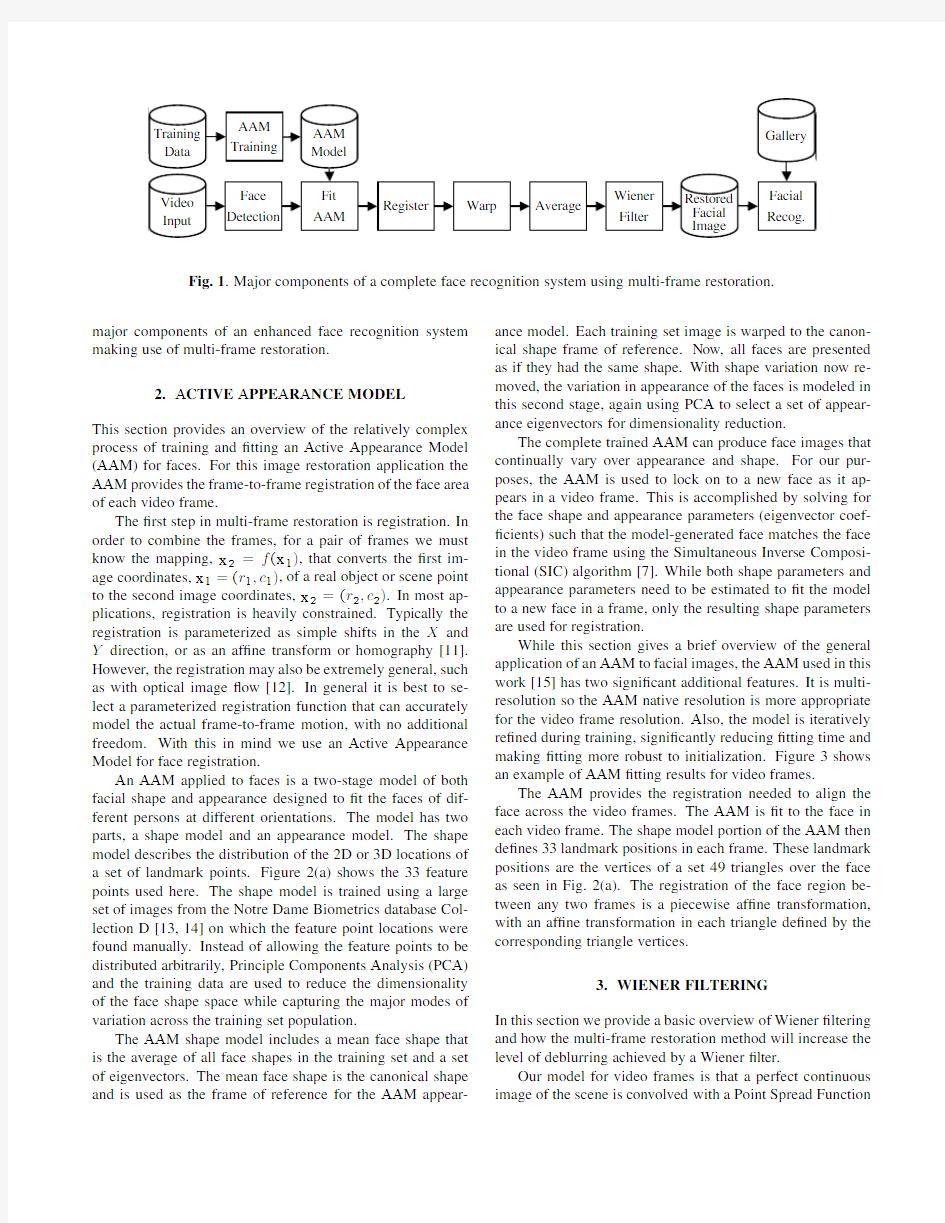

This restoration process may be used in both manual and on-line applications.Multi-frame restoration can be applied to restore video after a crime has been committed to aid recog-nition of perpetrators or witnesses.It can also be applied in an on-line system,where video is continually monitored,faces are detected[10],?tted,restored and sent to a face recogni-tion system.The system?ow diagram in Fig.1shows the

Fig.1.Major components of a complete face recognition system using multi-frame restoration.

major components of an enhanced face recognition system making use of multi-frame restoration.

2.ACTIVE APPEARANCE MODEL

This section provides an overview of the relatively complex process of training and?tting an Active Appearance Model (AAM)for faces.For this image restoration application the AAM provides the frame-to-frame registration of the face area of each video frame.

The?rst step in multi-frame restoration is registration.In order to combine the frames,for a pair of frames we must know the mapping,x2=f(x1),that converts the?rst im-age coordinates,x1=(r1,c1),of a real object or scene point to the second image coordinates,x2=(r2,c2).In most ap-plications,registration is heavily constrained.Typically the registration is parameterized as simple shifts in the X and Y direction,or as an af?ne transform or homography[11]. However,the registration may also be extremely general,such as with optical image?ow[12].In general it is best to se-lect a parameterized registration function that can accurately model the actual frame-to-frame motion,with no additional freedom.With this in mind we use an Active Appearance Model for face registration.

An AAM applied to faces is a two-stage model of both facial shape and appearance designed to?t the faces of dif-ferent persons at different orientations.The model has two parts,a shape model and an appearance model.The shape model describes the distribution of the2D or3D locations of a set of landmark points.Figure2(a)shows the33feature points used here.The shape model is trained using a large set of images from the Notre Dame Biometrics database Col-lection D[13,14]on which the feature point locations were found manually.Instead of allowing the feature points to be distributed arbitrarily,Principle Components Analysis(PCA) and the training data are used to reduce the dimensionality of the face shape space while capturing the major modes of variation across the training set population.

The AAM shape model includes a mean face shape that is the average of all face shapes in the training set and a set of eigenvectors.The mean face shape is the canonical shape and is used as the frame of reference for the AAM appear-ance model.Each training set image is warped to the canon-ical shape frame of reference.Now,all faces are presented as if they had the same shape.With shape variation now re-moved,the variation in appearance of the faces is modeled in this second stage,again using PCA to select a set of appear-ance eigenvectors for dimensionality reduction.

The complete trained AAM can produce face images that continually vary over appearance and shape.For our pur-poses,the AAM is used to lock on to a new face as it ap-pears in a video frame.This is accomplished by solving for the face shape and appearance parameters(eigenvector coef-?cients)such that the model-generated face matches the face in the video frame using the Simultaneous Inverse Composi-tional(SIC)algorithm[7].While both shape parameters and appearance parameters need to be estimated to?t the model to a new face in a frame,only the resulting shape parameters are used for registration.

While this section gives a brief overview of the general application of an AAM to facial images,the AAM used in this work[15]has two signi?cant additional features.It is multi-resolution so the AAM native resolution is more appropriate for the video frame resolution.Also,the model is iteratively re?ned during training,signi?cantly reducing?tting time and making?tting more robust to initialization.Figure3shows an example of AAM?tting results for video frames.

The AAM provides the registration needed to align the face across the video frames.The AAM is?t to the face in each video frame.The shape model portion of the AAM then de?nes33landmark positions in each frame.These landmark positions are the vertices of a set49triangles over the face as seen in Fig.2(a).The registration of the face region be-tween any two frames is a piecewise af?ne transformation, with an af?ne transformation in each triangle de?ned by the corresponding triangle vertices.

3.WIENER FILTERING

In this section we provide a basic overview of Wiener?ltering and how the multi-frame restoration method will increase the level of deblurring achieved by a Wiener?lter.

Our model for video frames is that a perfect continuous image of the scene is convolved with a Point Spread Function

(a)(b)(c)

Fig.2.(a)Face from video with 33AAM landmarks;(b)additional border landmarks;(c)blending

mask.

Fig.3.Faces from 8consecutive video frames and the ?tted AAM shape model.

(PSF),sampled on an image grid,corrupted by additive white Gaussian noise,and quantized.The PSF is responsible for the blur and it is our desire to reduce this blur.The additive noise will be the limiting factor in our ability to do this.As is typically done,we assume that the dominant source of noise is CCD electronic noise,and that the noise is i.i.d.additive Gaussian,and thus has a ?at spectrum.With all image signals represented in the spatial frequency domain,if the transform of the original image is I (ω1,ω2),the Optical Transfer Func-tion (OTF,the Fourier Transform of the PSF)is H (ω1,ω2)

and the additive Gaussian noise signal is ?N

(ω1,ω2),then the observed video frame is,

G (ω1,ω2)=H (ω1,ω2)I (ω1,ω2)+?N

(ω1,ω2)(1)

The Wiener ?lter is a classic method for single image de-blurring [8,9],providing the Minimum Mean Squared Error (MMSE)estimate of I (ω1,ω2),the non-blurred image given a noisy blurred observation,G (ω1,ω2).With no assumption made about the unknown image signal,the Wiener ?lter is,

?I

(ω1,ω2)=H ?(ω1,ω2)

|H (ω1,ω2)|2+K

G (ω1,ω2)

(2)

where H ?(ω1,ω2)is the complex conjugate of H (ω1,ω2).If parameter K is the noise to signal power ratio then we have

the MMSE Wiener ?lter.In practice K is adjusted to balance

noise ampli?cation and sharpening.If K is too large the im-age will not have high spatial frequencies restored to the full extent possible.If K is too small the restored image will be corrupted by ampli?ed high spatial frequency noise.As K goes to zero,and assuming H (ω1,ω2)>0,the Wiener ?lter approaches the ideal inverse ?lter,

?I

(ω1,ω2)=1

H (ω1,ω2)

G (ω1,ω2)

(3)

The inverse ?lter greatly ampli?es high-frequency noise and is generally not a well conditioned operation.

The effect of the Wiener ?lter on a blurred noisy image is to pass spatial frequencies that are not attenuated by the PSF and have a high SNR;to amplify spatial frequencies that are attenuated by the PSF and have a high SNR;and to attenuate spatial frequencies that have a low SNR.This is seen in 1-D in Fig.4for the case of a Gaussian shaped PSF and several val-ues for K .In Fig.4(a)is the spatial domain PSF with σ=2.For a Gaussian shaped PSF,the OTF is Gaussian shaped as well,shown in Fig.4(b)with the frequency variable ωnor-malized so that with spatial domain sampling at the integers,the Nyquist frequency is 0.5.The ideal inverse of the OTF in Fig.4(c)would amplify high-frequency noise an extreme

(a)(b)(c)(d)(e)

Fig.4.(a)spatial domain Gaussian PSF(h(x));(b)corresponding frequency domain OTF(H(ω));(c)unrealizable inverse of OTF(1/H(ω));(d)Wiener?lter for K=0.5,0.2,0.1,0.05(H?(ω)/(|H(ω)|2+K));(e)total response for each Wiener?lter (|H(ω)|2/(|H(ω)|2+K)).

amount.Figure4(d)shows the Wiener?lter for several val-ues of K.Notice how when K is reduced(less image noise) the higher spatial frequencies are more ampli?ed.Figure4(e) shows the product of the OTF and the Wiener?lter.This rep-resents the total system response.As K is reduced higher spatial frequencies are more strongly restored by the Wiener ?lter.For suf?ciently low K,the total response passband in Fig.4(e)is wider than the OTF in Fig.4(b)so the restored im-age will have greater apparent resolution and sharpness than the observed image.

This example shows how reducing image noise allows a Wiener?lter to restore high-spatial frequencies to a greater degree,improving resolution and sharpness.In the following section,the multi-frame method for reducing image noise is described.

4.MULTI-FRAME RESTORATION

Our baseline multi-frame restoration algorithm works by av-eraging the aligned face region of N consecutive video frames and applying a Wiener?lter[8,9]to the result.The frame averaging reduces additive image noise and the Wiener?l-ter deblurs the effect of the PSF.The Wiener?lter applied to the time averaged frame is able to reproduce the image at high spatial frequencies that were attenuated by the PSF more accurately than a Wiener?lter applied to a single video frame.Reproducing the high spatial frequencies more accu-rately means the restored image will have higher effective res-olution and more detail.The reason is that the image noise at these high spatial frequencies was reduced through the av-eraging process.Just as averaging N independent measure-ments of a value,each measurement corrupted by zero-mean additive Gaussian noise with varianceσ2gives an estimate of that value that has a variance ofσ2/N,averaging N regis-tered and warped images reduces the additive noise variance, and the appropriate value of K by a factor of1/N.

The AAM provides registration only for the portion of the face within the triangles.If only this region is used,the regis-tered frame mean will have a border that is at the edge of the face.This sharp discontinuity will result in strong rippling edge effects after deblurring.To mitigate this we extrapolate the registration by adding to the set of face landmarks to de-?ne an extended border region.The30new landmarks are simply positioned some?xed distance out from the estimated face edges,and form45new triangles at the border,seen in Fig.2(b).Registration will not be accurate in this border re-gion,however,we have found it to be suf?cient to eliminate restoration?lter artifacts caused by the discontinuity.

For the set of N video frames,a new base frame of refer-ence is created by selecting the middle video frame and dou-bling its pixel resolution.Each video frame is then warped to the base frame of reference.The registration function is piecewise af?ne,and bilinear interpolation is used.This aligns the face in each video frame.The aligned faces frames are then averaged to make a mean frame.

To deblur,a Wiener?lter is applied to the aligned face mean frame.For most installed surveillance cameras it is dif-?cult to determine the true PSF,so we assume a Gaussian shaped PSF with hand selected width,σ,and image noise to signal power ratio,K.For an on-line or repeatedly used sys-tem this would need to be done only once.Frame averaging allows reduction of parameter K by a factor of1/N and thus further ampli?cation of high spatial frequencies.Wiener?l-tering is performed in the frequency domain using the FFT. All other operations,registration,warping,and averaging,are performed in the spatial domain.

A sample restoration result appears in Fig.5.This?g-ure shows(a)the face from an original video frame,(b)that single frame restored with a Wiener?lter with K=0.1(the best result found by hand),(c)the result of multi-frame en-hancement using N=8consecutive frames using low-noise assumption K=0.01(the best result found by hand)and(d) the same single frame restored using the incorrect low-noise assumption K=0.01.With multi-frame enhancement,we restore higher spatial frequencies because K is lower in(c). When that same low value for K is used to attempt to restore high spatial frequencies in a single frame in(d),the result is poor and shows signi?cant artifacts because the single frame has more noise.The restored image in(c)is sharper and more detailed than the original frame and the Wiener?ltered origi-

(a)(b)(c)(d)

Fig.5.(a)Original video frame;(b)Wiener ?ltered single video frame (K =0.1);(c)Multi-frame restoration result (K =0.01);(d)Wiener ?ltered single video frame incorrectly using the same value of K as was used for the multi-frame restoration result (K =0.01).nal frame.

5.BLENDING

Outside of the face region modeled by the AAM,frame-to-frame registration is not determined.The multi-frame restora-tion technique improves the quality of the face region,but not the other regions of the image.To make a more pleasing ?nal

result,the restored face image,?I

,is blended with a ?ll image,I f .The ?ll image is the single middle unrestored video frame upsampled to match the pixel resolution of the restored image.The ?ll image is the source of the base frame of reference so it lines up perfectly with the restored face image.

A mask M is de?ned in the base frame that has value 1inside the face region and fades to zero outside of that region linearly with distance to the face region.This mask is used to blend the restored image with the ?ll image,I f using,

I (r,c )=M (r,c )?I

(r,c )+(1?M (r,c ))I f (r,c )(4)

Figure 2(c)shows an example of the mask image.The result in Fig.5(c)has been blended using this procedure.

The result after blending is an image with improved fa-cial resolution and a background that is at the original frame resolution,but is not distracting to a viewer and appears more natural to automatic face recognition algorithms.

6.EXPERIMENTAL RESULTS AND CONCLUSIONS To validate the restoration algorithm we have collected out-door video of 3test subjects using a GE CyberDome R

PTZ camera.The PTZ camera was zoomed at intervals to cap-ture video at different face resolutions,measured as eye dis-tance in pixels.A 700person gallery was created with 3good quality images of the test subjects and the “FA”image of the ?rst 697subjects in the FERET database [16].From

FAR (%)

F R R (%)

Fig.7.ROC performance for authentication with a watch-list improved with enhancement.Arrows indicate performance improvement due to multi-frame restoration.

the test video sequences we extracted original frames and cre-ated multi-frame restored facial images from the surrounding set of N =10frames.Figure 6shows the rank 1–5recog-nition counts and rates for the original frames and enhanced images.The results are grouped by face resolution and also combined.We see a noticeable trend of improvement,espe-cially for small original face resolutions.Even this straight-forward multi-frame restoration process bene?ts recognition under these dif?cult conditions.

The original and enhanced face images were also tested in veri?cation mode against the 700person gallery used as a watch-list.Figure 7shows the False Recognition Rate (FRR)vs.False Alarm Rate (FAR)operational performance for eye distances of 37and 29pixels in the original video,where we

Eye Dist.483729241917all Num.Probes243624182115138 Enhanced no yes no yes no yes no yes no yes no yes no yes Rank-116182626161581145147179 Rank-2192027281616101156157886 Rank-3202027301718111266158291 Rank-4202127321819111276258595 Rank-5212128331819121278368999 Rank-167%75%72%72%67%63%44%61%19%24%7%27%51%57% Rank-279%83%75%78%67%67%56%61%24%29%7%33%57%62% Rank-383%83%75%83%71%75%61%67%29%29%7%33%59%66% Rank-483%88%75%89%75%79%61%67%33%29%13%33%62%69% Rank-588%88%78%92%75%79%67%67%33%38%20%40%64%72% Fig.6.Rank recognition counts and rate(%),with and without multi-frame restoration,grouped by eye distance(pixels)in the original video frames.

saw the most signi?cant improvement.

As we develop methods for face recognition from video by combining and preprocessing multiple-frames we present our initial baseline algorithm and encouraging results on dif-?cult real-world face video.

7.REFERENCES

[1]D.M.Blackburn,J.M.Bone,and P.J.Phillips,FRVT

2000Evaluation Report,February2001.

[2]Subhasis Chaudhuri,Ed.,Super-Resolution Imaging,

Kluwer Academic Publishers,3rd edition,2001.

[3]K.J.Ray Liu,Moon Gi Kang,and Subhasis Chaudhuri,

Eds.,IEEE Signal Processing Magazine,Special edi-tion:Super-Resolution Image Reconstruction,vol.20, no.3,IEEE,May2003.

[4]Sean Borman and Robert Stevenson,“Super resolu-

tion enhancement of low-resolution image sequences;

a comprehensive review with directions for future re-

search,”Research report,University of Notre Dame, July1998.

[5]Simon Baker and Takeo Kanade,“Limits on super-

resolution and how to break them,”IEEE Transactions on Pattern Analysis and Machine Intelligence,vol.24, no.9,pp.1167–1183,September2002.

[6]T.Cootes,D.Cooper,C.Tylor,and J.Graham,“A

trainable method of parametric shape description,”in Proc.2nd British Machine Vision Conference.Septem-ber1991,pp.54–61,Springer.

[7]S.Baker and I.Matthews,“Lucas-Kanade20years on:

A unifying framework,”International Journal of Com-

puter Vision,vol.56,no.3,pp.221–255,March2004.

[8]Anil K.Jain,Fundamentals of Digital Image Process-

ing,Prentice Hall,1989.

[9]Rafael C.Gonzalez and Paul Wintz,Digital Image Pro-

cessing,Addison-Wesley Publishing Co.,2nd edition, 1987.

[10]H.Schneiderman,“Learning a restricted bayesian net-

work for object detection,”in Proceedings of the IEEE Conference on Computer Vision and Pattern Recogni-tion,2004.

[11]Richard Hartley and Andrew Zisserman,Multiple View

Geometry in Computer Vision,Cambridge University Press,2000.

[12]Simon Baker and Takeo Kanade,“Super resolution op-

tical?ow,”Tech.Rep.CMU-RI-TR-99-36,Robotics In-stitute,Carnegie Mellon University,Pittsburgh,PA,Oc-tober1999.

[13]K.Chang,K.W.Bowyer,and P.J.Flynn,“Face recogni-

tion using2D and3D facial data,”in ACM Workshop on Multimodal User Authentication,December2003,pp.

25–32.

[14]P.J.Flynn,K.W.Bowyer,and P.J.Phillips,“Assess-

ment of time dependency in face recognition:An initial study,”in Audio and Video-Based Biometric Person Au-thentication,2003,pp.44–51.

[15]Xiaoming Liu,Peter Tu,and Frederick Wheeler,“Face

model?tting on low resolution images,”in submitted to British Machine Vision Conference,2006.

[16]P.J.Phillips,H.Moon,P.J.Rauss,and S.Rizvi,“The

FERET evaluation methodology for face recognition al-gorithms,”IEEE Transactions on Pattern Analysis and Machine Intelligence,vol.22,no.10,October2000.

机器视觉与图像处理方法

图像处理及识别技术在机器人路径规划中的一种应用 摘要:目前,随着计算机和通讯技术的发展,在智能机器人系统中,环境感知与定位、路径规划和运动控制等功能模块趋向于分布式的解决方案。机器人路径规划问题是智能机器人研究中的重要组成部分,路径规划系统可以分为环境信息的感知与识别、路径规划以及机器人的运动控制三部分,这三部分可以并行执行,提高机器人路径规划系统的稳定性和实时性。在感知环节,视觉处理是关键。本文主要对机器人的路径规划研究基于图像识别技术,研究了图像处理及识别技术在路径规划中是如何应用的,机器人将采集到的环境地图信息发送给计算机终端,计算机对图像进行分析处理与识别,将结果反馈给机器人,并给机器人发送任务信息,机器人根据接收到的信息做出相应的操作。 关键词:图像识别;图像处理;机器人;路径规划 ABSTRACT:At present, with the development of computer and communication technology, each module, such as environment sensing, direction deciding, route planning and movement controlling moduel in the system of intelligent robot, is resolved respectively. Robot path planning is an part of intelligent robot study. The path planning system can be divided into three parts: environmental information perception and recognition, path planning and motion controlling. The three parts can be executed in parallel to improve the stability of the robot path planning system. As for environment sensing, vision Proeessing is key faetor. The robot path planning of this paper is based on image recognition technology. The image processing and recognition technology is studied in the path planning is how to apply, Robots will sent collected environment map information to the computer terminal, then computer analysis and recognize those image information. After that computer will feedback the result to the robot and send the task information. The robot will act according to the received information. Keywords: image recognition,image processing, robot,path planning

Matlab 图像处理相关函数命令大全

Matlab 图像处理相关函数命令大全 一、通用函数: colorbar 显示彩色条 语法:colorbar \ colorbar('vert') \ colorbar('horiz') \ colorbar(h) \ h=colorbar(...) \ colorbar(...,'peer',axes_handle) getimage 从坐标轴取得图像数据 语法:A=getimage(h) \ [x,y,A]=getimage(h) \ [...,A,flag]=getimage(h) \ [...]=getimage imshow 显示图像 语法:imshow(I,n) \ imshow(I,[low high]) \ imshow(BW) \ imshow(X,map) \ imshow(RGB)\ imshow(...,display_option) \ imshow(x,y,A,...) \ imshow filename \ h=imshow(...) montage 在矩形框中同时显示多幅图像 语法:montage(I) \ montage(BW) \ montage(X,map) \ montage(RGB) \ h=montage(...) immovie 创建多帧索引图的电影动画 语法:mov=immovie(X,map) \ mov=immovie(RGB) subimage 在一副图中显示多个图像 语法:subimage(X,map) \ subimage(I) \ subimage(BW) \ subimage(RGB) \ subimage(x,y,...) \ subimage(...) truesize 调整图像显示尺寸 语法:truesize(fig,[mrows mcols]) \ truesize(fig)

高中函数图像大全

指数函数 概念:一般地,函数y=a^x(a>0,且a≠1)叫做指数函数,其中x 是自变量,函数的定义域是R。 注意:⒈指数函数对外形要求严格,前系数要为1,否则不能为指数函数。 ⒉指数函数的定义仅是形式定义。 指数函数的图像与性质: 规律:1. 当两个指数函数中的a互为倒数时,两个函数关于y轴对称,但这两个函数都不具有奇偶性。

2.当a>1时,底数越大,图像上升的越快,在y轴的右侧,图像越靠近y轴; 当0<a<1时,底数越小,图像下降的越快,在y轴的左侧,图像越靠近y轴。 在y轴右边“底大图高”;在y轴左边“底大图低”。

3.四字口诀:“大增小减”。即:当a>1时,图像在R上是增函 数;当0<a<1时,图像在R上是减函数。 4. 指数函数既不是奇函数也不是偶函数。 比较幂式大小的方法: 1.当底数相同时,则利用指数函数的单调性进行比较; 2.当底数中含有字母时要注意分类讨论; 3.当底数不同,指数也不同时,则需要引入中间量进行比较; 4.对多个数进行比较,可用0或1作为中间量进行比较 底数的平移: 在指数上加上一个数,图像会向左平移;减去一个数,图像会向右平移。 在f(X)后加上一个数,图像会向上平移;减去一个数,图像会向下平移。

对数函数 1.对数函数的概念 由于指数函数y=a x 在定义域(-∞,+∞)上是单调函数,所以它存在反函数, 我们把指数函数y=a x (a >0,a≠1)的反函数称为对数函数,并记为y=log a x(a >0,a≠1). 因为指数函数y=a x 的定义域为(-∞,+∞),值域为(0,+∞),所以对数函数y=log a x 的定义域为(0,+∞),值域为(-∞,+∞). 2.对数函数的图像与性质 对数函数与指数函数互为反函数,因此它们的图像对称于直线y=x. 据此即可以画出对数函数的图像,并推知它的性质. 为了研究对数函数y=log a x(a >0,a≠1)的性质,我们在同一直角坐标系中作出函数 y=log 2x ,y=log 10x ,y=log 10x,y=log 2 1x,y=log 10 1x 的草图

高一数学函数总结大全

一次函数 一、定义与定义式: 自变量x和因变量y有如下关系: y=kx+b 则此时称y是x的一次函数。 特别地,当b=0时,y是x的正比例函数。 即:y=kx (k为常数,k≠0) 二、一次函数的性质: 1.y的变化值与对应的x的变化值成正比例,比值为k 即:y=kx+b (k为任意不为零的实数b取任何实数) 2.当x=0时,b为函数在y轴上的截距。 三、一次函数的图像及性质: 1.作法与图形:通过如下3个步骤 (1)列表; (2)描点; (3)连线,可以作出一次函数的图像——一条直线。因此,作一次函数的图像只需知道2点,并连成直线即可。(通常找函数图像与x轴和y轴的交点) 2.性质:(1)在一次函数上的任意一点P(x,y),都满足等式:y=kx+b。(2)一次函数与y轴交点的坐标总是(0,b),与x轴总是交于(-b/k,0)正比例函数的图像总是过原点。

3.k,b与函数图像所在象限: 当k>0时,直线必通过一、三象限,y随x的增大而增大; 当k<0时,直线必通过二、四象限,y随x的增大而减小。 当b>0时,直线必通过一、二象限; 当b=0时,直线通过原点 当b<0时,直线必通过三、四象限。 特别地,当b=O时,直线通过原点O(0,0)表示的是正比例函数的图像。 这时,当k>0时,直线只通过一、三象限;当k<0时,直线只通过二、四象限。 四、确定一次函数的表达式: 已知点A(x1,y1);B(x2,y2),请确定过点A、B的一次函数的表达式。 (1)设一次函数的表达式(也叫解析式)为y=kx+b。 (2)因为在一次函数上的任意一点P(x,y),都满足等式y=kx+b。所以可以列出2个方程:y1=kx1+b …… ①和y2=kx2+b …… ② (3)解这个二元一次方程,得到k,b的值。 (4)最后得到一次函数的表达式。 五、一次函数在生活中的应用: 1.当时间t一定,距离s是速度v的一次函数。s=vt。 2.当水池抽水速度f一定,水池中水量g是抽水时间t的一次函数。设水池中原有水量S。g=S-ft。

图像处理的流行的几种方法

一般来说,图像识别就是按照图像地外貌特征,把图像进行分类.图像识别地研究首先要考虑地当然是图像地预处理,随着小波变换地发展,其已经成为图像识别中非常重要地图像预处理方案,小波变换在信号分析识别领域得到了广泛应用. 现流行地算法主要还有有神经网络算法和霍夫变换.神经网络地方法,利用神经网络进行图像地分类,而且可以跟其他地技术相互融合.个人收集整理勿做商业用途 一神经网络算法 人工神经网络(,简写为)也简称为神经网络()或称作连接模型(),它是一种模范动物神经网络行为特征,进行分布式并行信息处理地算法数学模型.这种网络依靠系统地复杂程度,通过调整内部大量节点之间相互连接地关系,从而达到处理信息地目地.个人收集整理勿做商业用途 在神经网络理论地基础上形成了神经网络算法,其基本地原理就是利用神经网络地学习和记忆功能,让神经网络学习各个模式识别中大量地训练样本,用以记住各个模式类别中地样本特征,然后在识别待识样本时,神经网络回忆起之前记住地各个模式类别地特征并将他们逐个于样本特征比较,从而确定样本所属地模式类别.他不需要给出有关模式地经验知识和判别函数,通过自身地学习机制形成决策区域,网络地特性由拓扑结构神经元特性决定,利用状态信息对不同状态地信息逐一训练获得某种映射,但该方法过分依赖特征向量地选取.许多神经网络都可用于数字识别,如多层神经网络用于数字识别:为尽可能全面描述数字图像地特征,从很多不同地角度抽取相应地特征,如结构特征、统计特征,对单一识别网络,其输入向量地维数往往又不能过高.但如果所选取地特征去抽取向量地各分量不具备足够地代表性,将很难取得较好地识别效果.因此神经网络地设计是识别地关键.个人收集整理勿做商业用途 神经网络在图像识别地应用跟图像分割一样,可以分为两大类: 第一类是基于像素数据地神经网络算法,基于像素地神经网络算法是用高维地原始图像数据作为神经网络训练样本.目前有很多神经网络算法是基于像素进行图像分割地,神经网络,前向反馈自适应神经网络,其他还有模糊神经网络、神经网络、神经网络、细胞神经网络等.个人收集整理勿做商业用途 第二类是基于特征数据地神经网络算法.此类算法中,神经网络是作为特征聚类器,有很多神经网络被研究人员运用,如神经网络、模糊神经网络、神经网络、自适应神经网络、细胞神经网络和神经网络.个人收集整理勿做商业用途 例如神经网络地方法在人脸识别上比其他类别地方法有独到地优势,它具有自学习、自适应能力,特别是它地自学能力在模式识别方面表现尤为突出.神经网络方法可以通过学习地过程来获得其他方法难以实现地关于人脸识别规律和规则地隐性表达.但该方法可能存在训练时间长、收敛速度慢地缺点.个人收集整理勿做商业用途 二小波变换 小波理论兴起于上世纪年代中期,并迅速发展成为数学、物理、天文、生物多个学科地重要分析工具之一;其具有良好地时、频局域分析能力,对一维有界变差函数类地“最优”逼近性能,多分辨分析概念地引入以及快速算法地存在,是小波理论迅猛发展地重要原因.小波分析地巨大成功尤其表现在信号处理、图像压缩等应用领域.小波变换是一种非常优秀地、具有较强时、频局部分析功能地非平稳信号分析方法,近年来已在应用数序和信号处理有很大地发展,并取得了较好地应用效果.在频域里提取信号里地相关信息,通过伸缩和平移算法,对信号进行多尺度分类和分析,达到高频处时间细分、低频处频率细分、适应时频信号分解地要求.小波变换在图像识别地应用,包括图形去噪、图像增强、图像融合、图像压缩、图像分解和图像边缘检测等.小波变换在生物特征识别方面(例如掌纹特征提取和识别)同样得到了成功应用,部分研究结果表明在生物特征识别方面效果优于、、傅里叶变换等方

MATLAB图像处理函数大全

Matlab图像处理函数大全 目录 图像增强 (3) 1. 直方图均衡化的Matlab 实现 (3) 1.1 imhist 函数 (3) 1.2 imcontour 函数 (3) 1.3 imadjust 函数 (3) 1.4 histeq 函数 (4) 2. 噪声及其噪声的Matlab 实现 (4) 3. 图像滤波的Matlab 实现 (4) 3.1 conv2 函数 (4) 3.2 conv 函数 (5) 3.3 filter2函数 (5) 3.4 fspecial 函数 (6) 4. 彩色增强的Matlab 实现 (6) 4.1 imfilter函数 (6) 图像的变换 (6) 1. 离散傅立叶变换的Matlab 实现 (6) 2. 离散余弦变换的Matlab 实现 (7) 2.1. dct2 函数 (7) 2.2. dict2 函数 (8) 2.3. dctmtx函数 (8) 3. 图像小波变换的Matlab 实现 (8) 3.1 一维小波变换的Matlab 实现 (8) 3.2 二维小波变换的Matlab 实现 (9) 图像处理工具箱 (11) 1. 图像和图像数据 (11) 2. 图像处理工具箱所支持的图像类型 (12) 2.1 真彩色图像 (12) 2.2 索引色图像 (13) 2.3 灰度图像 (14) 2.4 二值图像 (14) 2.5 图像序列 (14) 3. MATLAB图像类型转换 (14) 4. 图像文件的读写和查询 (15) 4.1 图形图像文件的读取 (15) 4.2 图形图像文件的写入 (16) 4.3 图形图像文件信息的查询imfinfo()函数 (16) 5. 图像文件的显示 (16) 5.1 索引图像及其显示 (16) 5.2 灰度图像及其显示 (16) 5.3 RGB 图像及其显示 (17)

实验一图像处理基本操作

实验一图像处理基本操作 一、 实验目的 1、熟悉并掌握在MATLAB中进行图像类型转换及图像处理的基本操作。 2、熟练掌握图像处理中的常用数学变换。 二、实验设备 1、计算机1台 2、MATLAB软件1套 3、实验图片 三、实验原理 1、数字图像的表示和类别 一幅图像可以被定义为一个二维函数f(x,y),其中x和y是空间(平面)坐标,f在坐标(x,y)处的幅度称为图像在该点的亮度。灰度是用来表示黑白图像亮度的一个术语,而彩色图像是由若干个二维图像组合形成的。例如,在RGB彩色系统中,一幅彩色图像是由三幅独立的分量图像(红、绿、蓝)组成的。因此,许多为黑白图像处理开发的技术也适用于彩色图像处理,方法是分别处理三幅独立的分量图像即可。 图像关于x和y坐标以及幅度连续。要将这样的一幅图像转化为数字形式,就要求数字化坐标和幅度。将坐标值数字化称为取样,将幅度数字化称为量化。采样和量化的过程如图1所示。因此,当f的x、y分量和幅度都是有限且离散的量时,称该图像为数字图像。 作为MATLAB基本数据类型的数组十分适于表达图像,矩阵的元素和图像的像素之间有着十分自然的对应关系。 图1 图像的采样和量化 图1 采样和量化的过程 根据图像数据矩阵解释方法的不同,MATLAB把其处理为4类: ?亮度图像(Intensity images) ?二值图像(Binary images) ?索引图像(Indexed images) ? RGB图像(RGB images) (1) 亮度图像 一幅亮度图像是一个数据矩阵,其归一化的取值表示亮度。若亮度图像的像素都是uint8类型或uint16类型,则它们的整数值范围分别是[0,255]和[0,65536]。若图像是double 类型,则像素取值就是浮点数。规定双精度double型归一化亮度图像的取值范围是[0 1]。 (2) 二值图像 一幅二值图像是一个取值只有0和1的逻辑数组。而一幅取值只包含0和1的uint8

MATLAB图像处理相关函数

一、通用函数: colorbar显示彩色条 语法:colorbar \ colorbar('vert') \ colorbar('horiz') \ colorbar(h) \ h=colorbar(...) \ colorbar(...,'peer',axes_handle) getimage 从坐标轴取得图像数据 语法:A=getimage(h) \ [x,y,A]=getimage(h) \ [...,A,flag]=getimage(h) \ [...]=getimage imshow 显示图像 语法:imshow(I,n) \ imshow(I,[low high]) \ imshow(BW) \ imshow(X,map) \ imshow(RGB)\ imshow(...,display_option) \ imshow(x,y,A,...) \ imshow filename \ h=imshow(...) montage 在矩形框中同时显示多幅图像 语法:montage(I) \ montage(BW) \ montage(X,map) \ montage(RGB) \ h=montage(...) immovie 创建多帧索引图的电影动画 语法:mov=immovie(X,map) \ mov=immovie(RGB) subimage 在一副图中显示多个图像 语法:subimage(X,map) \ subimage(I) \ subimage(BW) \ subimage(RGB) \ subimage(x,y,...) \ subimage(...) truesize 调整图像显示尺寸 语法:truesize(fig,[mrows mcols]) \ truesize(fig) warp 将图像显示到纹理映射表面 语法:warp(X,map) \ warp(I ,n) \ warp(z,...) warp(x,y,z,...) \ h=warp(...) zoom 缩放图像 语法:zoom on \ zoom off \ zoom out \ zoom reset \ zoom \ zoom xon \ zoom yon\ zoom(factor) \ zoom(fig,option) 二、图像文件I/O函数命令 imfinfo 返回图形图像文件信息 语法:info=imfinfo(filename,fmt) \ info=imfinfo(filename) imread 从图像文件中读取(载入)图像 语法:A=imread(filename,fmt) \ [X,map]=imread(filename,fmt) \

实训三图像频域处理基本操作

实训三:图像频域处理基本操作 一:实验的目的 1:掌握基本的离散傅里叶变换操作,熟悉命令fftn, fftshift,ifftn。 2:对图像text.png进行图像特征识别操作。 二:实验指导: 1.通过MATLAB的Help文档,学习Image Processing Toolbox中关于图像变换的内容。 2.通过MATLAB的Help文档,查询命令fftn, fftshift,ifftn的用法。 3. 用MATLAB生成一个矩形连续函数并得到它的傅里叶变换的频谱。

4.对图像text.png中的字母a完成特征识别操作。

一 bw = imread('text.png'); a = bw(32:45,88:98); imview(bw); imshow(bw); figure, imshow(a); C = real(ifft2(fft2(bw) .* fft2(rot90(a,2),256,256))); figure, imshow(C,[]) max(C(:)) thresh = 60; figure, imshow(C > thresh) ans = 68

N=100 f=zeros(500,500); f(60:180,30:400)=1; subplot(221),imshow(f) subplot(221),imshow(f,'notruesize') F1=fft2(f,N,N),

F1=fft2(f,N,N), F2=fftshift(abs(F1)); F3=abs(log(1+F2)); subplot(222), imshow(F3,[]) imshow(F3,[]); f1=imrotate(f,45,'bicubic') subplot(223),imshow(f1); F21=fft2(f1,N,N); F22=abs((log(1+F22)); F22=abs((log(1+F21))); F23=abs(log(1+F22)); subplot(224), imshow(F23,[])

图像处理方法

i=imread('D:\00001.jpg'); >> j=rgb2gray(i); >> warning off >> imshow(j); >> u=edge(j,'roberts'); >> v=edge(j,'sobel'); >> w=edge(j,'canny'); >> x=edge(j,'prewitt'); >> y=edge(j,'log'); >> h=fspecial('gaussian',5); >> z=edge(j,'zerocross',[],h); >> subplot(2,4,1),imshow(j) >> subplot(2,4,2),imshow(u) >> subplot(2,4,3),imshow(v) >> subplot(2,4,4),imshow(w) >> subplot(2,4,5),imshow(x) >> subplot(2,4,6),imshow(y) >> subplot(2,4,7),imshow(z)

>> %phi:地理纬度lambda:地理经度delta:赤纬omega:时角lx 影子长,ly 杆长 >> data=xlsread('D:\附件1-3.xls','附件1'); >> X = data(:,2); >> Y = data(:,3); >> [x,y]=meshgrid(X,Y); %生成计算网格 >> fxy = sqrt(x.^2+y.^2); >> %[Dx,Dy] = gradient(fxy); >> Dx = x./fxy; >> Dy = y./fxy; >> quiver(X,Y,Dx,Dy); %用矢量绘图函数绘出梯度矢量大小分布>> hold on >> contour(X,Y,fxy); %与梯度值对应,绘出原函数的等值线图

MATLAB中图像函数大全 详解及例子

图像处理函数详解——strel 功能:用于膨胀腐蚀及开闭运算等操作的结构元素对象(本论坛随即对膨胀腐蚀等操作进行讲解)。 用法:SE=strel(shape,parameters) 创建由指定形状shape对应的结构元素。其中shape的种类有 arbitrary' 'pair' 'diamond' 'periodicline' 'disk' 'rectangle' 'line' 'square' 'octagon 参数parameters一般控制SE的大小。 例子: se1=strel('square',6) %创建6*6的正方形 se2=strel('line',10,45) %创建直线长度10,角度45 se3=strel('disk',15) %创建圆盘半径15 se4=strel('ball',15,5) %创建椭圆体,半径15,高度5

图像处理函数详解——roipoly 功能:用于选择图像中的多边形区域。 用法:BW=roipoly(I,c,r) BW=roipoly(I) BW=roipoly(x,y,I,xi,yi) [BW,xi,yi]=roipoly(...) [x,y,BW,xi,yi]=roipoly(...) BW=roipoly(I,c,r)表示用向量c、r指定多边形各点的X、Y坐标。BW选中的区域为1,其他部分的值为0. BW=roipoly(I)表示建立交互式的处理界面。 BW=roipoly(x,y,I,xi,yi)表示向量x和y建立非默认的坐标系,然后在指定的坐标系下选择由向量xi,yi指定的多边形区域。 例子:I=imread('eight.tif'); c=[222272300270221194]; r=[21217512112175]; BW=roipoly(I,c,r); imshow(I)

envi图像处理基本操作

使用ENVI进行图像处理 主要介绍利用envi进行图像处理的基本操作,主要分为图像合成、图像裁减、图像校正、图像镶嵌、图像融合、图像增强。 分辨率:空间分辨率、波谱分辨率、时间分辨率、辐射分辨率。咱们平时所说的分辨率是指?怎么理解? 1、图像合成 对于多光谱影像,当我们要得到彩色影像时,需要进行图像合成,产生一个与自然界颜色一致的真彩色(假彩色)图像。 对于不同类型的影像需要不同的波段进行合成,如中巴CCD影像共5个波段,一般选择2、4、3进行合成。(为什么不选择其他波段?重影/不是真彩色)。SOPT5影像共7个波段,一般选择7、4、3三个波段。 操作过程以中巴资源卫星影像为例 中巴资源卫星影像共有五个波段,选择2、4、3三个波段对R、G、B赋值进行赋值。 在ENVI中的操作如下: (1)file→open image file→打开2、3、4三个波段,选择RGB,分别将2、4、3赋予RGB。(2)在#1窗口file---〉save image as-→image file。 (3)在主菜单中将合成的文件存为tiff格式(file-→save file as-→tiff/geotiff) 即可得到我们需要的彩色图像。 2、图像裁减 有时如果处理较大的图像比较困难,需要我们进行裁减,以方便处理。如在上海出差时使用的P6、SOPT5,图幅太大不能直接校正需要裁减。 裁减图像,首先制作AOI文件再根据AOI进行裁减。一般分为两种:指定范围裁减、不指定范围裁减。 不指定范围裁减在ENVI中的操作如下: (1)首先将感兴趣区存为AOI文件 file→open image file打开原图像→选择IMAGE窗口菜单overlay→region of interesting 选择划定感兴趣区的窗口如scroll,从ROI_Type菜单选择ROI的类型如Rectangle,在窗口中选出需要选择的区域。在ROI窗口file→Save ROIs将感兴趣区存为ROI文件。

图像处理基本方法

图像处理的基本步骤 针对不同的目的,图像处理的方法不经相同。大体包括图像预处理和图像识别两大模块。 一、图像预处理: 结合识别复杂环境下的成熟黄瓜进行阐述,具体步骤如下: · 图像预处理阶段的流程图 对以上的图像流程进行详细的补充说明: 图像预处理的概念: 将每一个文字图像分检出来交给识别模块识别,这一过程称为图像预处理。 图像装换和图像分割以及区域形态学处理都是属于图像处理的基本内容之一。 图像转换:方法:对原图像进行灰度化处理生成灰度矩阵——降低运算速度(有具体的公式和方程),中值滤波去噪声——去除色彩和光照的影响等等。 图像分割:传统方法:基于阈值分割、基于梯度分割、基于边缘检测分割和基于区域图像割等方法。脉冲耦合神经网络 (PCNN)是针对复杂环境下 图像采集 图像采集中注意采集的方法、工具进行介绍。目的是怎样获取有代表性的样本。(包括天气、相机的位置等) 对采集的图像进行特征分析 目标的颜色和周围环境的颜色是否存在干涉的问题、平整度影响相机的拍摄效果、形状 图像转换 图像分割 区域形态学处理

的有效分割方法,分割的时候如果将一个数字图像输入PCNN,则能基于空间邻近性和亮度相似性将图像像素分组,在基于窗口的图像处理应用中具有很好的性能。 区域形态学处理:对PCNN分割结果后还存在噪声的情况下,对剩余的噪声进行分析,归类属于哪一种噪声。是孤立噪声还是黏连噪声。采用区域面积统计法可以消除孤立噪声。对于黏连噪声,可以采用先腐蚀切断黏连部分,再膨胀复原目标对象,在进行面积阙值去噪,通过前景空洞填充目标,最后通过形态学运算,二值图像形成众多独立的区域,进行各连通区域标识,利于区域几何特征的提取。 二、图像识别: 针对预处理图像提取 目标特征 建立LS SVM分类器 得到结果 图像识别流程图 提取目标特征:目标特征就是的研究对象的典型特点,可以包括几何特征和纹理特征。 对于几何特征采用的方法:采用LS-SVM支持向量机对几何特征参数进行处理,通过分析各个参数的分布区间来将目标和周围背景区分开,找出其中具有能区分功能的决定性的几何特征参数。 纹理特征方法:纹理特征中的几个参数可以作为最小二乘支持向量机的辅助特征参数,提高模型的精准度。 最小二乘支持向量机介绍:首先选择非线性映射将样本从原空间映射到特征空间,以解决原空间中线性不可分问题,在此高维空间中把最优决策问题转化为等式约束条件,构造最优决策函数,并引入拉格朗日乘子求解最优化问题,对各个变量求偏微分。 LS SVM分类器:对于p种特征选择q个图像连通区域,作为训练样本。依

一些图像处理函数用法(精华)

一些图像处理函数用法(精华) imshow imshow是用来显示图片的,如 I = imread('moon.tif'); figure,imshow(I); 而有时为了数据处理,要把读取的图片信息转化为更高的精度, I = double(imread('moon.tif')); 为了保证精度,经过了运算的图像矩阵I其数据类型会从unit8型变成double型。如果直接运行imshow(I),我们会发现显示的是一个白色的图像。这是因为imshow()显示图像时对double型是认为在0~1范围内,即大于1时都是显示为白色,而imshow显示uint8型时是0~255范围。而经过运算的范围在0-255之间的double型数据就被不正常得显示为白色图像了。 有两个解决方法: 1、imshow(I/256); -----------将图像矩阵转化到0-1之间 2、imshow(I,[]); -----------自动调整数据的范围以便于显示 从实验结果看两种方法都解决了问题,但是从显示的图像看,第二种方法显示的图像明暗黑白对比的强烈些!图像为y,为何用imshow(uint8(y))和imshow(y,[])时的图像显示结果不同? 回答: imshow(uint8(y))是按照256级灰度显示y得绝对数据。0表示黑色,255表示白色,y中大于255的值强制为255。 imshow(y,[]),将y中的最小值看作0(black),最大值看作255(white) -->增加图像的对比度。 所以两者不同。 padarray 功能:填充图像或填充数组。 用法:B = padarray(A,padsize,padval,direction) A为输入图像,B为填充后的图像, padsize给出了给出了填充的行数和列数,通常用[r c]来表示, padval和direction分别表示填充方法和方向。 它们的具体值和描述如下: padval:'symmetric' 表示图像大小通过围绕边界进行镜像反射来扩展; 'replicate' 表示图像大小通过复制外边界中的值来扩展; 'circular' 图像大小通过将图像看成是一个二维周期函数的一个周期来进行扩展。 direction:'pre' 表示在每一维的第一个元素前填充; 'post' 表示在每一维的最后一个元素后填充;

遥感卫星图像处理方法

北京揽宇方圆信息技术有限公司 遥感卫星图像处理方法 随着遥感技术的快速发展,获得了大量的遥感影像数据,如何从这些影像中提取人们感兴趣的对象已成为人们越来越关注的问题。但是传统的方法不能满足人们已有获取手段的需要,另外GIS的快速发展为人们提供了强大的地理数据管理平台,GIS数据库包括了大量空间数据和属性数据,以及未被人们发现的存在于这些数据中的知识。将GIS技术引入遥感图像的分类过程,用来辅助进行遥感图像分类,可进一步提高了图像处理的精度和效率。如何从GIS数据库中挖掘这些数据并加以充分利用是人们最关心的问题。GIS支持下的遥感图像分析特别强调RS和GIS的集成,引进空间数据挖掘和知识发现(SDM&KDD)技术,支持遥感影像的分类,达到较好的结果,专家系统表明了该方法是高效的手段。 遥感图像的边缘特征提取观察一幅图像首先感受到的是图像的总体边缘特征,它是构成图像形状的基本要素,是图像性质的重要表现形式之一,是图像特征的重要组成部分。提取和检测边缘特征是图像特征提取的重要一环,也是解决图像处理中许多复杂问题的一条重要的途径。遥感图像的边缘特征提取是对遥感图像上的明显地物边缘特征进行提取与识别的处理过程。目前解决图像特征检测/定位问题的技术还不是很完善,从图像结构的观点来看,主要是要解决三个问题:①要找出重要的图像灰度特征;②要抑制不必要的细节和噪声;③要保证定位精度图。遥感图像的边缘特征提取的算子很多,最常用的算子如Sobel算子、Log算子、Canny算子等。 1)图像精校正 由于卫星成像时受采样角度、成像高度及卫星姿态等客观因素的影响,造成原始图像非线性变形,必须经过几何精校正,才能满足工作精度要求一般采用几何模型配合常规控制点法对进行几何校正。 在校正时利用地面控制点(GCP),通过坐标转换函数,把各控制点从地理空间投影到图像空间上去。几何校正的精度直接取决于地面控制点选取的精度、分布和数量。因此,地面控制点的选择必须满足一定的条件,即:地面控制点应当均匀地分布在图像内;地面控制点应当在图像上有明显的、精确的定位识别标志,如公路、铁路交叉点、河流叉口、农田界线等,以保证空间配准的精度;地面控制点要有一定的数量保证。地面控制点选好后,再选择不同的校正算子和插值法进行计算,同时,还对地面控制点(GCPS)进行误差分析,使得其精度满足要求为止。最后将校正好的图像与地形图进行对比,考察校正效果。 2)波段组合及融合 对卫星数据的全色及多光谱波段进行融合。包括选取最佳波段,从多种分辨率融合方法中选取最佳方法进行全色波段和多光谱波段融合,使得图像既有高的空间分辨率和纹理特性,又有丰富的光谱信息,从而达到影像地图信息丰富、视觉效果好、质量高的目的。 3)图像镶嵌

数字图像处理复习习题库

复习习题库: 简答题 1.将M幅图像相加求平均可以起到消除噪声的效果,用一个n×n的模板进行平滑滤波也可以起到消除噪声的效果,试比较这两种方法的消噪效果。 将M幅图像相加求平均利用了M幅图像中同一位置的M个像素的平均值,用一个n*n的模板进行平滑滤波利用了同一幅图像中的n*n个像素的平均值。因为参与的像素个数越多,消除噪声的能力越强,所以如果M>n*n,则前者消除噪声的效果较好,反之则后者消除噪声的效果较好。 2.图像锐化与图像平滑有何区别与联系? 图象锐化是用于增强边缘,导致高频分量增强,会使图像清晰;图象平滑用于去噪,对图像高频分量即图像边缘会有影响。都属于图象增强,改善图像效果。 3.比较均值滤波和中值滤波对图像的椒盐噪声和高斯噪声抑制过程中的优势,并说明其原因。 对于椒盐噪声,中值滤波效果比均值滤波效果好。其原因为:椒盐噪声是幅值近似相等但随机分布在不同位置上,图像中有干净点也有污染点。中值滤波是选择适当的点来替代污染点的值,所以处理效果好。因为噪声的均值不为0,所以均值滤波不能很好地去除噪声点。对于高斯噪声,均值滤波效果比中值滤波效果好。其原因是:高斯噪声是幅值近似正态分布,但分布在每点像素上。因为图像中的每点都是污染点,所中值滤波选不到合适的干净点。因为正态分布的均值为0,所以根据统计数学,均值可以消除噪声。 4.伪彩色增强和假彩色增强有何异同点? 伪彩色增强是对一幅灰度图象经过三种变换得到三幅图象,进行彩色合成得到一幅彩色图像;假彩色增强则是对一幅彩色图像进行处理得到与原图象不同的彩色图像;主要差异在于处理对象不同。相同点是利用人眼对彩色的分辨能力高于灰度分辨能力的特点,将目标用人眼敏感的颜色表示。 5.图像几何失真校正的主要步骤。 (1)空间变换:对图像平面上的像素进行重新排列以恢复原空间关系; (2)灰度插值:对空间变换后的像素赋予相应的灰度值以恢复原位置的灰度值。 6.梯度法与Laplacian算子检测边缘的异同点? 梯度算子和Laplacian检测边缘对应的模板分别为 -1 -1 1 1 1 1 -4 1 1 (梯度算子)(Laplacian算子)

试验一图像处理基本操作

图像处理基本操作 姓名:王泗凯学号:2012045043 日期:2014.3.10 1.实验目的和内容 为了得到我们想得到的图像效果以及为图像的后续处理做好准备,我们需要对原图像进行一些基本处理操作,以下是对原图像进行一些基本处理的内容和具体步骤。 1.1.设置ENVI 打开ENVI在File下的Preferences里面就是ENVI的基本设置 图1.1-1:设置绘图选项图1.1-2:设置默认显示参数

图1.1-3:设置页面单位和内存分配 图1.1-4:ENVI 系统设置窗口 1.2.打开图像,显示单波段图像、显示彩色图像 打开图像:File -> Open Image File 选择7个单波段图像打开 在窗口中选择Gray Scale ,然后选择想要打开的波段,例如打开第四波段,得到下图所示单波段图像;在窗口中选择RGB Color ,然后进行波段组合,若显示真彩色图像则选择3,2,1三个波段,此处打开真彩色图像,结果如图。 图1.2-1:单波段图像(波段4) 图1.2-2:RGB 合成显示(3,2,1) 1.3.窗口连接 先在Display #1中打开一幅图像(如图1.2-1),再新建一个窗口Display #2打开另一图像(如图1.2-2),在Display #1内右击选择Link Display 得到如图1.3-1所示窗口,即可连接Display #1和Display #2,连接后如图1.3-2。

图1.3-1图像窗口连接 图1.3-2图像连接后显示 1.4.图像格式转换(自行定义转入转出格式) 点击Display #1窗口上的file中的Save Image As,然后选择Image File弹出图1.4-1所示窗口,在Output File Type中即可选择图像要转换成的格式。

数字图像处理-图像去噪方法

图像去噪方法 一、引言 图像信号在产生、传输和记录的过程中,经常会受到各种噪声的干扰,噪声可以理解为妨碍人的视觉器官或系统传感器对所接收图像源信 息进行理解或分析的各种元素。噪声对图像的输入、采集、处理的各个环节以及最终输出结果都会产生一定影响。图像去噪是数字图像处理中的重要环节和步骤。去噪效果的好坏直接影响到后续的图像处理工作如图像分割、边缘检测等。一般数字图像系统中的常见噪声主要有:高斯噪声(主要由阻性元器件内部产生)、椒盐噪声(主要是图像切割引起的黑图像上的白点噪声或光电转换过程中产生的泊松噪声)等。我们平常使用的滤波方法一般有均值滤波、中值滤波和小波滤波,他们分别对某种噪声的滤除有较好的效果。对图像进行去噪已成为图像处理中极其重要的内容。 二、常见的噪声 1、高斯噪声:主要有阻性元器件内部产生。 2、椒盐噪声:主要是图像切割引起的黑图像上的白点噪声或光电转换过程中产生泊松噪声。 3、量化噪声:此类噪声与输入图像信号无关,是量化过程存在量化误差,再反映到接收端而产生,其大小显示出数字图像和原始图像差异。 一般数字图像系统中的常见噪声主要有高斯噪声和椒盐噪声等,减少噪声的方法可以在图像空间域或在图像频率域完成。在空间域对图像处理主要有均值滤波算法和中值滤波算法.图像频率域去噪方法

是对图像进行某种变换,将图像从空间域转换到频率域,对频率域中的变换系数进行处理,再进行反变换将图像从频率域转换到空间域来达到去除图像噪声的目的。将图像从空间转换到变换域的变换方法很多,常用的有傅立叶变换、小波变换等。 三、去噪常用的方法 1、均值滤波 均值滤波也称为线性滤波,其采用的主要方法为邻域平均法。其基本原理是用均值替代原图像中的各个像素值,即对待处理的当前像素点(x,y),选择一个模板,该模板由其近邻的若干像素组成,求模板中所有像素的均值,再把该均值赋予当前像素点(x,y),作为处理后图像在 f?sf(x,y),其中,s为模板,M为该点上的灰度g(x,y),即g x,y=1 M 该模板中包含当前像素在内的像素总个数。这种算法简单,处理速度快,但它的主要缺点是在降低噪声的同时使图像产生模糊,特别是在边缘和细节处。而且邻域越大,在去噪能力增强的同时模糊程度越严重。

Matlab图像处理函数汇总

1、图像的变换 ①fft2:fft2函数用于数字图像的二维傅立叶变换,如:i=imread('104_8.tif'); j=fft2(i); ②ifft2::ifft2函数用于数字图像的二维傅立叶反变换,如: i=imread('104_8.tif'); j=fft2(i); k=ifft2(j); 2、模拟噪声生成函数和预定义滤波器 ①imnoise:用于对图像生成模拟噪声,如: i=imread('104_8.tif'); j=imnoise(i,'gaussian',0,0.02);%模拟高斯噪声 ②fspecial:用于产生预定义滤波器,如: h=fspecial('sobel');%sobel水平边缘增强滤波器 h=fspecial('gaussian');%高斯低通滤波器 h=fspecial('laplacian');%拉普拉斯滤波器 h=fspecial('log');%高斯拉普拉斯(LoG)滤波器 h=fspecial('average');%均值滤波器 2、图像的增强 ①直方图:imhist函数用于数字图像的直方图显示,如: i=imread('104_8.tif'); imhist(i); ②直方图均化:histeq函数用于数字图像的直方图均化,如: i=imread('104_8.tif'); j=histeq(i); ③对比度调整:imadjust函数用于数字图像的对比度调整,如:i=imread('104_8.tif'); j=imadjust(i,[0.3,0.7],[]); ④对数变换:log函数用于数字图像的对数变换,如: i=imread('104_8.tif'); j=double(i);