Soft Learning Vector Quantization and Clustering Algorithms Based on Ordered Weighted Aggregation Operators

Nicolaos B. Karayiannis, Member, IEEE

Abstract—This paper presents the development and investigates the properties of ordered weighted learning vector quantization (LVQ) and clustering algorithms. These algorithms are developed by using gradient descent to minimize reformulation functions based on aggregation operators. An axiomatic approach provides conditions for selecting aggregation operators that lead to admissible reformulation functions. Minimization of admissible reformulation functions based on ordered weighted aggregation operators produces a family of soft LVQ and clustering algorithms, which includes fuzzy LVQ and clustering algorithms as special cases. The proposed LVQ and clustering algorithms are used to perform segmentation of magnetic resonance (MR) images of the brain. The diagnostic value of the segmented MR images provides the basis for evaluating a variety of ordered weighted LVQ and clustering algorithms.

Index Terms—Aggregation operator, clustering, image segmentation, learning vector quantization, magnetic resonance (MR) imaging, ordered weighted aggregation, reformulation, reformulation function.

I. INTRODUCTION

CONSIDER the set [...] feature vectors from an [...] feature vectors to [...]-dimensional Euclidean space [...] also referred to as the codebook. Codebook design can be performed by clustering algorithms, which are typically developed to solve a constrained minimization problem involving two sets of unknowns, namely the membership functions that assign feature vectors to clusters and the prototypes. The solution of this problem is often determined using alternating optimization [1]. These clustering techniques include the crisp [...]-means (FCM) [1], generalized fuzzy [...]

Axiom A3: The function [...] and [...] of [...] represent the membership functions of the aggregated fuzzy sets and, as such, their values are typically restricted to the interval [0,1]. This is reflected by the additional axiomatic requirement often imposed on aggregation operators for fuzzy sets, according to which the function [...] and [...] are not necessarily upper-bounded by one. Moreover, the boundary conditions mentioned above are satisfied by any function [...] must be symmetric under any permutation of its arguments, that is, [...] in [...] are considered to be equally important to the aggregation operator, a property that is desirable but not necessary for the development of soft LVQ and clustering algorithms.

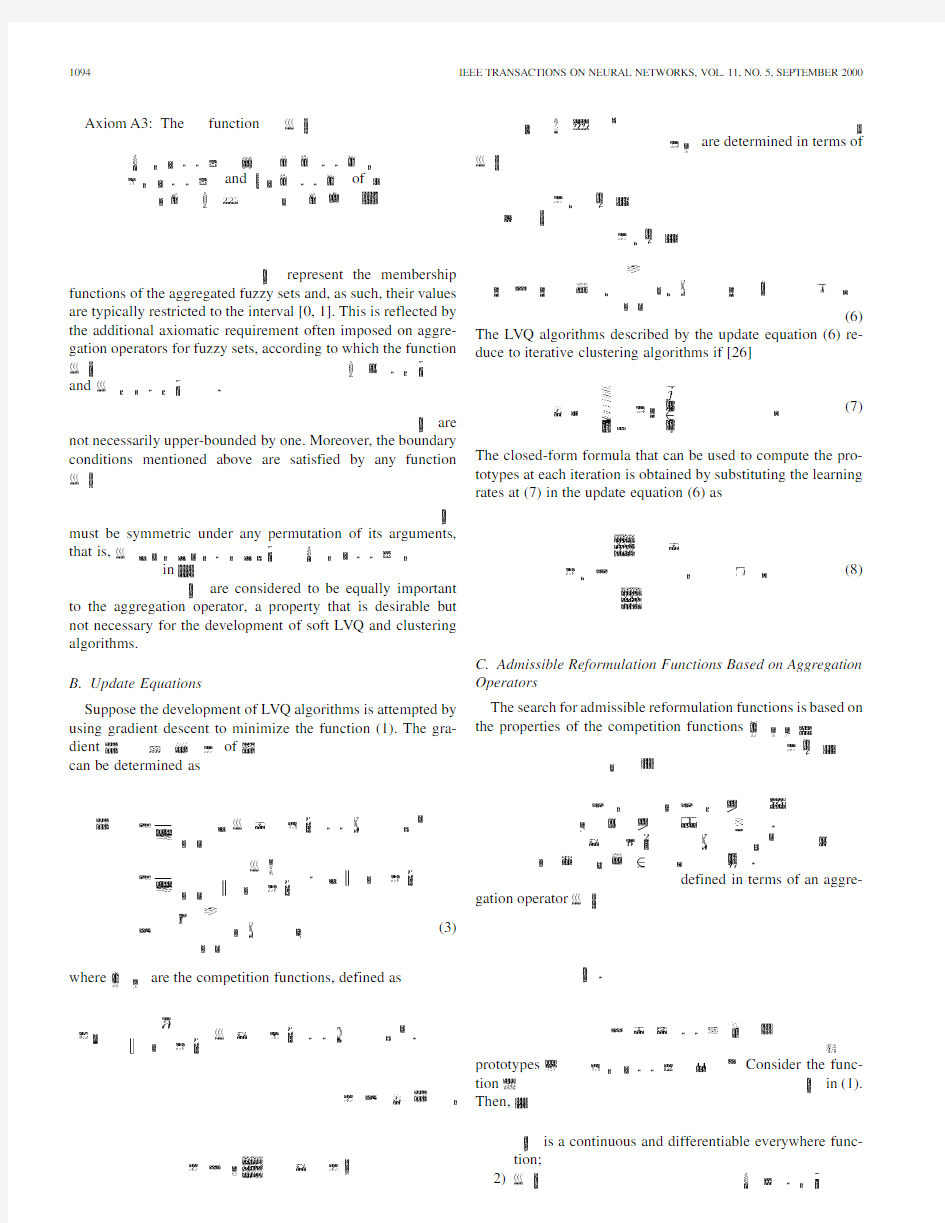

B. Update Equations

Suppose the development of LVQ algorithms is attempted by using gradient descent to minimize the function (1). The gradient [...] of [...] can be determined as

[...] (3)

where [...] are the competition functions, defined as [...] are determined in terms of

[...] (6)

The LVQ algorithms described by the update equation (6) reduce to iterative clustering algorithms if [26]

[...] (7)

The closed-form formula that can be used to compute the prototypes at each iteration is obtained by substituting the learning rates at (7) in the update equation (6) as

[...] (8)
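The reduction from the update equation (6) to the closed-form formula (8) can be checked numerically. The following is a minimal sketch under stated assumptions, not the paper's exact equations: it assumes the batch update has the form v_j <- v_j + eta_j * sum_i alpha_ij (x_i - v_j) and that the learning rates in (7) are eta_j = 1 / sum_i alpha_ij. The function names and the random placeholder values used for the competition functions alpha_ij are hypothetical.

```python
import numpy as np


def lvq_batch_update(X, V, alpha, eta):
    """One batch step v_j <- v_j + eta_j * sum_i alpha_ij (x_i - v_j) (assumed form)."""
    # diff[i, j, :] = x_i - v_j
    diff = X[:, None, :] - V[None, :, :]
    # step[j, :] = sum_i alpha[i, j] * diff[i, j, :]
    step = np.einsum("ij,ijk->jk", alpha, diff)
    return V + eta[:, None] * step


def closed_form_prototypes(X, alpha):
    """Weighted mean v_j = sum_i alpha_ij x_i / sum_i alpha_ij."""
    return (alpha.T @ X) / alpha.sum(axis=0)[:, None]


rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))                  # 50 feature vectors in R^3
V = rng.normal(size=(4, 3))                   # 4 prototypes
alpha = rng.uniform(0.1, 1.0, size=(50, 4))   # placeholder competition values

# Assumed learning rates: inverse of the summed competition values per prototype.
eta = 1.0 / alpha.sum(axis=0)

V_step = lvq_batch_update(X, V, alpha, eta)
V_closed = closed_form_prototypes(X, alpha)
print(np.allclose(V_step, V_closed))
```

With this choice of learning rates the previous prototype cancels out of the update, so each new prototype is a weighted mean of the feature vectors and no longer depends on a step size.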

C. Admissible Reformulation Functions Based on Aggregation Operators

The search for admissible reformulation functions is based on the properties of the competition functions [...] defined in terms of an aggregation operator [...] prototypes [...] Consider the function [...] in (1). Then, [...] is a continuous and differentiable everywhere function;

2)

KARAYIANNIS:SOFT LEARNING VECTOR QUANTIZATION AND CLUSTERING ALGORITHMS1095

3)

4)

for

[...] used to construct reformulation functions of the form (1) must be an aggregation operator in accordance with the axiomatic requirements A1–A3. Nevertheless, Theorem 1 indicates that not all aggregation operators lead to admissible reformulation functions. The subset of aggregation operators that can be used to construct reformulation functions of the form (1) consists of those satisfying the fourth condition of Theorem 1.

III. REFORMULATION FUNCTIONS BASED ON ORDERED WEIGHTED AGGREGATION OPERATORS

A broad family of aggregation operators is composed of ordered weighted operators [21], [27]–[29], [31]. Consider the function [...] and the weights [...] Any function of the form (9) is an admissible reformulation function if the functions [...] and the weights [...] prototypes [...] Consider the function

[...] (10)

where [...] ordered in ascending order, that is, [...] and the weights [...] is an admissible reformulation function of the first (second) kind in accordance with the axiomatic requirements R1–R3 if [...] are continuous and differentiable everywhere functions satisfying [...] and [...] are both monotonically decreasing (increasing) functions of [...] is a monotonically increasing (decreasing) function of [...] and [...]

Proof: The proof of this theorem is presented in Appendix B.

It can easily be verified that the function [...] is not affected by their permutation. Thus, the function (10) satisfies the auxiliary axiomatic requirement for aggregation operators in addition to the basic Axioms A1–A3.

A. The Ordered Weighted Generalized Mean

The development of soft LVQ and clustering algorithms can be accomplished by considering ordered weighted aggregation operators of the form (10) corresponding to [...] and [...] Theorem 2 requires that [...] be a monotonically increasing function of [...], which is true if [...]; this last inequality is valid if [...] corresponding to [...] corresponding to

[...] (11)

with [...] denotes the ordered weighted generalized mean of [...]

[...] (13)
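To make the ordered weighted generalized mean concrete, the sketch below assumes the common form (sum_j w_j d_(j)^p)^(1/p), where d_(1) <= ... <= d_(c) are the arguments sorted in ascending order, the weights are nonnegative and sum to one, and p is a nonzero exponent. The exponent name `p`, the function name, and the sample values are illustrative assumptions and are not the paper's notation in (12).

```python
import numpy as np


def ow_generalized_mean(values, weights, p):
    """Ordered weighted generalized mean of positive values (assumed form)."""
    values = np.asarray(values, dtype=float)
    weights = np.asarray(weights, dtype=float)
    if values.shape != weights.shape:
        raise ValueError("values and weights must have the same shape")
    if p == 0 or np.any(values <= 0):
        raise ValueError("this sketch needs p != 0 and strictly positive values")
    ordered = np.sort(values)  # ascending order, as assumed in the text
    return float(np.sum(weights * ordered ** p) ** (1.0 / p))


d = [4.0, 1.0, 9.0]
uniform = [1 / 3, 1 / 3, 1 / 3]

# Uniform weights: the ordering no longer matters (plain generalized mean).
generalized_mean = float(np.mean(np.asarray(d) ** -1.0) ** -1.0)
owgm_uniform = ow_generalized_mean(d, uniform, p=-1.0)

# All weight on the smallest or the largest argument gives the min or the max.
owgm_min = ow_generalized_mean(d, [1.0, 0.0, 0.0], p=-1.0)
owgm_max = ow_generalized_mean(d, [0.0, 0.0, 1.0], p=-1.0)

# p = 1 gives the ordered weighted mean: 0.5*1 + 0.3*4 + 0.2*9.
owm = ow_generalized_mean(d, [0.5, 0.3, 0.2], p=1.0)
```

Because the arguments are sorted before the weights are applied, each weight is attached to a rank rather than to a particular argument, which is why the result does not change when the arguments are permuted.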

If [...], the ordered weighted generalized mean (12) differs from the ordered weighted mean (13) unless [...] the weight vector [...] then [...] coincides with the generalized mean or unweighted [...] associated with a weight vector

IEEE TRANSACTIONS ON NEURAL NETWORKS, VOL. 11, NO. 5, SEPTEMBER 2000

that

[...] (14)

By definition, [...] The values of [...] assuming descending ordering of the arguments [...] measures the degree of "orness" of the corresponding ordered weighted aggregation operator. In the formulation considered in this paper, it is assumed that the arguments [...] are ordered in ascending order. In such a case, [...] if [...] Since [...] and [...] decreases from one and approaches zero as the aggregation operator [...] to one [...] can be used to measure the emphasis placed by the weights [...] which corresponds to [...] defined in (14) takes values in the interval [1/2, 1]. This can be proven using the results of the following proposition.
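The behavior of such a measure can be illustrated with a short sketch. It assumes the measure is Yager's orness formula adapted to ascending ordering, rho(w) = (1/(c-1)) * sum_j (c-j) w_j, which equals one when all weight sits on the smallest argument and zero when it sits on the largest. This formula, the function name, and the condition that the weights be non-increasing are assumptions made for illustration and may differ from the definition in (14).

```python
import numpy as np


def orness_ascending(weights):
    """Assumed measure: (1/(c-1)) * sum_j (c-j) * w_j for ascending-ordered arguments."""
    w = np.asarray(weights, dtype=float)
    c = w.size
    if c < 2:
        raise ValueError("at least two weights are required")
    if np.any(w < 0) or not np.isclose(w.sum(), 1.0):
        raise ValueError("weights must be nonnegative and sum to one")
    positions = np.arange(c - 1, -1, -1)  # c-1, c-2, ..., 0
    return float(positions @ w) / (c - 1)


rho_min = orness_ascending([1.0, 0.0, 0.0, 0.0])          # all weight on the minimum
rho_max = orness_ascending([0.0, 0.0, 0.0, 1.0])          # all weight on the maximum
rho_uniform = orness_ascending([0.25, 0.25, 0.25, 0.25])  # unweighted case

# Numerical check on random non-increasing weight vectors (assumed condition).
rng = np.random.default_rng(1)
rho_samples = []
for _ in range(200):
    w = np.sort(rng.random(5))[::-1].copy()
    w /= w.sum()
    rho_samples.append(orness_ascending(w))
```

Under these assumptions the uniform weight vector gives exactly 1/2, and every sampled non-increasing weight vector falls between 1/2 and 1, consistent with the interval stated in the text.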

Proposition 1: Consider the weight vector [...] then [...] in (14) [...] that obtained for [...] can be obtained by writing (14) as [...] then [...]

According to Proposition 1,

[...] (17)

with the equality holding for [...]

[...] is the percentage of weights marked for elimination. Since the weights [...] denotes the integer part of [...] for [...], the weights defined in (19) form the weight vector [...] In this case, the ordered weighted generalized mean (12) coincides with the generalized mean. For [...], the weights defined in (19) form the weight vector [...] defined in (14) can be computed as [...], which corresponds to [...] while the upper bound, which corresponds to [...] is the percentage of weights marked for elimination. Since the weights [...] For [...], then the weights

defined in (21) form the weight vector [...] defined in (14) can be computed as [...] is not a function of [...] increases above zero, [...] takes values above 2/3. The upper bound [...], which reduces the ordered weighted generalized mean (12) to the minimum of [...]

This is the form of the membership functions of the minimum FCM algorithm [15].

If [...] then (32) becomes [...] then [...]-partitions [1]. In fact, this constraint was used in the development of the FCM and entropy-constrained fuzzy clustering algorithms [9], [16].
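For reference, the constraint used in the development of the FCM algorithm requires the memberships of each feature vector to sum to one across all clusters. The sketch below uses the standard FCM membership formula, u_ij proportional to (||x_i - v_j||^2)^(1/(1-m)) with fuzzifier m > 1, normalized over the prototypes. It is the textbook FCM expression, not a reconstruction of the paper's equation (32); the function name and the sample data are illustrative.

```python
import numpy as np


def fcm_memberships(X, V, m=2.0):
    """Standard FCM memberships; every row sums to one over the prototypes."""
    if m <= 1.0:
        raise ValueError("the fuzzifier m must be greater than one")
    # d2[i, j] = squared Euclidean distance between x_i and v_j
    d2 = ((X[:, None, :] - V[None, :, :]) ** 2).sum(axis=2)
    d2 = np.maximum(d2, 1e-12)  # guard against a zero distance
    u = d2 ** (1.0 / (1.0 - m))
    return u / u.sum(axis=1, keepdims=True)


rng = np.random.default_rng(2)
X = rng.normal(size=(20, 3))   # 20 feature vectors in R^3
V = rng.normal(size=(4, 3))    # 4 prototypes
U = fcm_memberships(X, V, m=2.0)
row_sums = U.sum(axis=1)
```

Each row of `U` sums to one, which is the constraint referred to above. Membership functions that do not satisfy it are the ones the text associates with soft LVQ and clustering algorithms.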

The [...] do not satisfy the constraint [...]-partitions. Such weight vectors lead to soft LVQ and clustering algorithms. For any set of weights, the values of [...] or [...] if [...] Since [...] and [...]

According to Proposition 2, [...]

The constraint (35) satisfied by the membership functions defined in (32) indicates that ordered weighted clustering algorithms can also be derived by using alternating optimization to solve the constrained minimization problem [...] and [...] the [...] is a [...] the definition of [...] by (41) indicates that [...] It can also be verified that the ordered membership functions defined in (41) satisfy [...] then (42) gives [...]-partitions that provided the basis for the development of the FCM algorithm [1]. According to (42), the proposed formulation produces a variety of algorithms whose behavior and performance can be tailored to the application at hand by selecting sets of weights [...]

[...] which allows them to search for near-optimum partitions in a subspace of all admissible partitions that is not necessarily restricted to a small neighborhood centered at the initial partition. Although the initialization of soft LVQ and clustering algorithms has little or no effect on their performance on simple data sets [13], [14], the application of such algorithms in more challenging problems indicated that their initialization has a rather significant effect on their performance.

A. Initialization of Soft LVQ and Clustering Algorithms

Ordered weighted LVQ and clustering algorithms were initialized in the experiments presented in this paper by a prototype splitting procedure that begins with a single prototype and designs a codebook containing [...] is split to create a codebook of size 2 containing a new prototype and an updated version of the original prototype [...] iterations the codebook contains the prototypes [...] formed by the indexes of the feature vectors represented by the prototype [...] the prototype [...] is split if [...] The new prototype is obtained by moving the original prototype toward [...] the new prototype [...] with [...] is fixed during the learning process and the learning rates are computed at each iteration as [...] and the value of [...]

Segmentation of MR images is formulated to exploit the differences among local values of the T1, T2, and Flair relaxation parameters. The values of these parameters represent the intensity levels (pixels) of a set of three images, namely the T1-weighted, T2-weighted, and Flair-weighted images. Let [...] and [...] be the pixel values of the T1-weighted, T2-weighted, and Flair-weighted images, respectively, at a certain location. The relaxation parameter values [...] and [...] can be combined to form the vector [...] vector [...] can be represented in the segmentation process by [...] and [...] with [...]
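Forming one three-dimensional feature vector per pixel location from the T1-weighted, T2-weighted, and Flair-weighted images can be sketched as follows. This is a minimal illustration that assumes the three images are co-registered arrays of identical shape; the function name and the tiny synthetic images are hypothetical, and any intensity scaling used in the experiments is not reproduced here.

```python
import numpy as np


def stack_mr_channels(t1, t2, flair):
    """Return an (H*W, 3) array whose rows are [T1, T2, Flair] pixel values."""
    t1, t2, flair = (np.asarray(a, dtype=float) for a in (t1, t2, flair))
    if not (t1.shape == t2.shape == flair.shape):
        raise ValueError("the three images must have the same shape")
    # Stack along a new last axis, then flatten the spatial dimensions.
    return np.stack([t1, t2, flair], axis=-1).reshape(-1, 3)


# Tiny synthetic 2 x 2 images standing in for real MR slices.
t1 = np.array([[10, 20], [30, 40]])
t2 = np.array([[11, 21], [31, 41]])
flair = np.array([[12, 22], [32, 42]])

features = stack_mr_channels(t1, t2, flair)
```

Each row of `features` can then be treated as one feature vector by an LVQ or clustering algorithm, and reshaping the resulting labels back to the image shape gives the segmented image.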


Fig. 2. Segmented MR images produced by ordered weighted clustering algorithms corresponding to the weight sets (a) S [...]


Fig. 3. Segmented MR images produced by ordered weighted LVQ algorithms corresponding to the weight sets (a) S [...]
