Learning classifiers when the training data is not IID

Learning Classi?ers When The Training Data Is Not IID

Murat Dundar,Balaji Krishnapuram,Jinbo Bi,R.Bharat Rao

Computer Aided Diagnosis&Therapy Group,Siemens Medical Solutions,

51Valley Stream Parkway,Malvern,PA-19355,USA

{murat.dundar,balaji.krishnapuram,jinbo.bi,bharat.rao}@https://www.360docs.net/doc/7713588297.html,

Abstract

Most methods for classi?er design assume that the

training samples are drawn independently and iden-

tically from an unknown data generating distribu-

tion,although this assumption is violated in several

real life problems.Relaxing this i.i.d.assumption,

we consider algorithms from the statistics litera-

ture for the more realistic situation where batches

or sub-groups of training samples may have inter-

nal correlations,although the samples from dif-

ferent batches may be considered to be uncorre-

lated.Next,we propose simpler(more ef?cient)

variants that scale well to large datasets;theoretical

results from the literature are provided to support

their validity.Experimental results from real-life

computer aided diagnosis(CAD)problems indi-

cate that relaxing the i.i.d.assumption leads to sta-

tistically signi?cant improvements in the accuracy

of the learned classi?er.Surprisingly,the simpler

algorithm proposed here is experimentally found to

be even more accurate than the original version.

1Introduction

Most classi?er-learning algorithms assume that the training data is independently and identically distributed.For ex-ample,support vector machine(SVM),back-propagation for Neural Networks,and many other common algorithms im-plicitly make this assumption as part of their derivation.Nev-ertheless,this assumption is commonly violated in many real-life problems where sub-groups of samples exhibit a high de-gree of correlation amongst both features and labels.

In this paper we:(a)experimentally demonstrate that ac-counting for the correlations in real-world training data leads to statistically signi?cant improvements in accuracy;(b)pro-pose simpler algorithms that are computationally faster than previous statistical methods and(c)provide links to theoreti-cal analysis to establish the validity of our algorithm.

1.1Motivating example:CAD

Although overlooked because of the dominance of algorithms that learn from i.i.d.data,sample correlations are ubiqui-tous in the real world.The machine learning community fre-quently ignores the non-i.i.d.nature of data,simply because we do not appreciate the bene?ts of modeling these corre-lations,and the ease with which this can be accomplished algorithmically.For motivation,consider computer aided di-agnosis(CAD)applications where the goal is to detect struc-tures of interest to physicians in medical images:e.g.to iden-tify potentially malignant tumors in CT scans,X-ray images, etc.In an almost universal paradigm for CAD algorithms, this problem is addressed by a3stage system:identi?cation of potentially unhealthy candidate regions of interest(ROI) from a medical image,computation of descriptive features for each candidate,and classi?cation of each candidate(e.g. normal or diseased)based on its features.Often many candi-date ROI point to the same underlying anatomical structure at slightly different spatial locations.

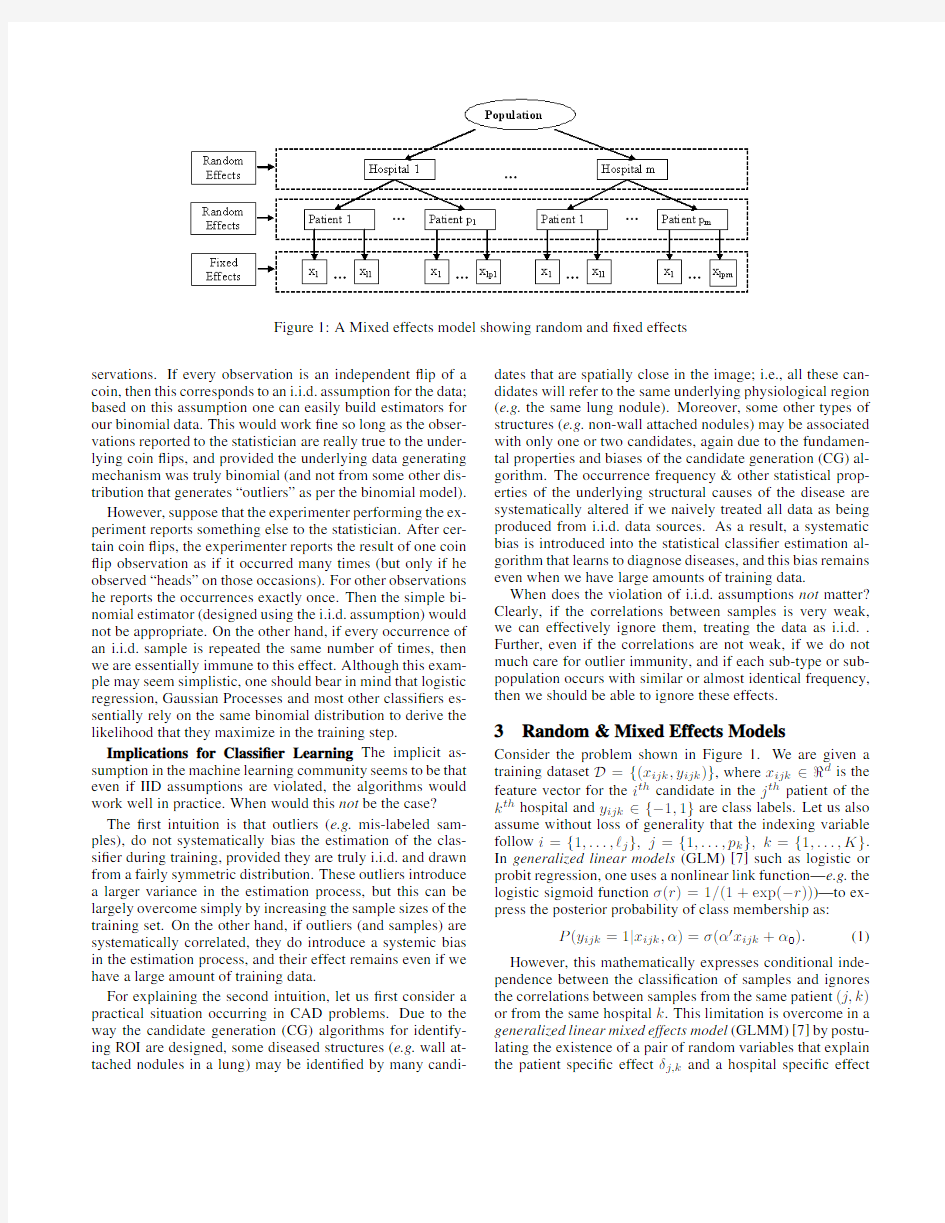

Under this paradigm,correlations clearly exist among both the features and the labels of candidates that refer to the same underlying structure,image,patient,imaging system,doc-tor/nurse,hospital etc.Clearly,the candidate ROI acquired from a set of patient images cannot be assumed to be IID. Multiple levels of hierarchical correlations are commonly ob-served in most real world datasets(see Figure1).

1.2Relationship to Previous Work

Largely ignored in the machine learning and data mining lit-erature,the statistics and epidemiology communities have de-veloped a rich literature to account for the effect of correlated samples.Perhaps the most well known and relevant mod-els for our purposes are the random effects model(REM)[3], and the generalized linear mixed effects models(GLMM)[7]. These models have been mainly studied from the point of view of explanatory data analysis(e.g.what is the effect of smoking on the risk of lung cancer?)not from the point of view of predictive modeling,i.e.accurate classi?cation of un-seen test samples.Further,these algorithms tend to be com-putationally impractical for large scale datasets that are com-monly encountered in commercial data mining applications. In this paper,we propose a simple modi?cation of existing al-gorithms that is computationally cheap,yet our experiments indicate that our approach is as effective as GLMMs in terms of improving classi?cation accuracy.

2Intuition:Impact of Sample Correlations Simpli?ed thought experiment Consider the estimation of the odds of heads for a biased coin,based on a set of ob-

Figure 1:A Mixed effects model showing random and ?xed effects

servations.If every observation is an independent ?ip of a coin,then this corresponds to an i.i.d.assumption for the data;based on this assumption one can easily build estimators for our binomial data.This would work ?ne so long as the obser-vations reported to the statistician are really true to the under-lying coin ?ips,and provided the underlying data generating mechanism was truly binomial (and not from some other dis-tribution that generates “outliers”as per the binomial model).However,suppose that the experimenter performing the ex-periment reports something else to the statistician.After cer-tain coin ?ips,the experimenter reports the result of one coin ?ip observation as if it occurred many times (but only if he observed “heads”on those occasions).For other observations he reports the occurrences exactly once.Then the simple bi-nomial estimator (designed using the i.i.d.assumption)would not be appropriate.On the other hand,if every occurrence of an i.i.d.sample is repeated the same number of times,then we are essentially immune to this effect.Although this exam-ple may seem simplistic,one should bear in mind that logistic regression,Gaussian Processes and most other classi?ers es-sentially rely on the same binomial distribution to derive the likelihood that they maximize in the training step.

Implications for Classi?er Learning The implicit as-sumption in the machine learning community seems to be that even if IID assumptions are violated,the algorithms would work well in practice.When would this not be the case?The ?rst intuition is that outliers (e.g.mis-labeled sam-ples),do not systematically bias the estimation of the clas-si?er during training,provided they are truly i.i.d.and drawn from a fairly symmetric distribution.These outliers introduce a larger variance in the estimation process,but this can be largely overcome simply by increasing the sample sizes of the training set.On the other hand,if outliers (and samples)are systematically correlated,they do introduce a systemic bias in the estimation process,and their effect remains even if we have a large amount of training data.

For explaining the second intuition,let us ?rst consider a practical situation occurring in CAD problems.Due to the way the candidate generation (CG)algorithms for identify-ing ROI are designed,some diseased structures (e.g.wall at-tached nodules in a lung)may be identi?ed by many candi-dates that are spatially close in the image;i.e.,all these can-didates will refer to the same underlying physiological region (e.g.the same lung nodule).Moreover,some other types of structures (e.g.non-wall attached nodules)may be associated with only one or two candidates,again due to the fundamen-tal properties and biases of the candidate generation (CG)al-gorithm.The occurrence frequency &other statistical prop-erties of the underlying structural causes of the disease are systematically altered if we naively treated all data as being produced from i.i.d.data sources.As a result,a systematic bias is introduced into the statistical classi?er estimation al-gorithm that learns to diagnose diseases,and this bias remains even when we have large amounts of training data.

When does the violation of i.i.d.assumptions not matter?Clearly,if the correlations between samples is very weak,we can effectively ignore them,treating the data as i.i.d..Further,even if the correlations are not weak,if we do not much care for outlier immunity,and if each sub-type or sub-population occurs with similar or almost identical frequency,then we should be able to ignore these effects.

3Random &Mixed Effects Models

Consider the problem shown in Figure 1.We are given a training dataset D ={(x ijk ,y ijk )},where x ijk ∈?d is the feature vector for the i th candidate in the j th patient of the k th hospital and y ijk ∈{?1,1}are class labels.Let us also assume without loss of generality that the indexing variable follow i ={1,...,?j },j ={1,...,p k },k ={1,...,K }.In generalized linear models (GLM)[7]such as logistic or probit regression,one uses a nonlinear link function—e.g.the logistic sigmoid function σ(r )=1/(1+exp(?r )))—to ex-press the posterior probability of class membership as:

P (y ijk =1|x ijk ,α)=σ(α′x ijk +α0).

(1)

However,this mathematically expresses conditional inde-pendence between the classi?cation of samples and ignores the correlations between samples from the same patient (j,k )or from the same hospital k .This limitation is overcome in a generalized linear mixed effects model (GLMM)[7]by postu-lating the existence of a pair of random variables that explain the patient speci?c effect δj,k and a hospital speci?c effect

δk.During training,one not only estimates the?xed effect parameters of the classi?erα,but also the distribution of the random effects p(δk,δj,k|D).The class prediction becomes:

P(y ijk=1|x ijk,α)=

σ(α′x ijk+α0+δk+δj,k)p(δk,δj,k)dδk dδj,k.(2)

In Bayesian terms,classi?cation of a new test sample x with a previously trained GLM involves marginalization over the posterior of the classi?er’s?xed effect parameters:

E[P(y=1|x)]= σ(α′x+α0)p(α,α0|D)dαdα0.(3)

The extension to?nd the classi?cation decision on a test sam-ple in a GLMM is quite straight-forward:

E[P(y ijk=1|x ijk)]=

σ(α′x ijk+α0+δk+δj,k)p(α,α0,δk,δj,k|D)

dαdα0dδk dδj,k.(4) Notice that the classi?cation predictions of sets of samples from the same patient(j,k)or from the same hospital k are no longer independent.During the testing phase,if one wants to classify samples from a new patient or a new hospital not seen during training(so the appropriate random effects are not available),one may still use the GLM approach,but rely on a marginalized version of the posterior distributions learnt for the GLMM:

E[P(y=1|x)]= σ(α′x+α0)p(α,α0,δk,δj,k|D)

dαdα0dδk dδj,k.(5) A note of explanation may be useful to explain the above equation.The integral in(5)is overδk andδj,k,for all j and k,i.e.we are marginalizing over all the random effects in order to obtain p(α,α0|D)and then relying on(2).Clearly, training a GLMM amounts to the estimation of the posterior distribution,p(α,α0,δk,δj,k|D).

3.1Avoiding Bayesian integrals

Since the exact posterior can not be determined in analytic form,the calculation of the above integral for classifying ev-ery test sample can prove computationally dif?cult.In prac-tice,we can use Markov chain monte carlo(MCMC)meth-ods but they tend to be too slow for data mining applica-tions.We can also use approximate strategies for computing Bayesian posteriors such as the Laplace approximation,vari-ational methods or expectation propagation(EP).However, as we see using the following lemma(adapted from a differ-ent context[5]),if this posterior is approximated by a sym-metric distribution(e.g.Gaussian)and we are only interested in the strict classi?cation decision rather than the posterior class membership probability,then a remarkable result holds: The GLMM classi?cation involving a Bayesian integration can be replaced by a point classi?er.

First we de?ne some convenient notation(which will use bold font to distinguish it from the rest of the paper).Let us denote the vector combiningα,α0and all the random effect variables as

w=[α′,α0,δ1,δ2,...,δK,δ11,...,δp

K,K

]′.

Let us de?ne the augmented vector combining the fea-ture vector x,the unit scalar1and a vector0of zeros—whose length corresponds to the number of random effect variables—as x=[x′,1,0′]′.Next,observe that if one only requires a hard classi?cation(as in the SVM literature) the sign(?)function is to be used for the link.Finally,note that the classi?cation decision in(5)can be expressed as: E[P(y=1|x)]= sign(w′x)p(w|D)d w.

Lemma1For any sample x,the hard classi?cation decision of a Point Classi?er f PC(x, w)=sign( w′x)is identical to that of a Bayesian Voting Classi?cation

f BVC(x,q)=sign sign(w′x)q(w)d w (6)

if q(w)=q(w| w)is a symmetric distribution with respect to w,that is,if q(w| w)=q( w| w),where w≡2 w?w is the symmetric re?ection of w about w.

Proof:For every w in the domain of the integral, w is also in the domain of the integral.Since w=2 w?w,we see that w′x+ w′x=2 w′x.Three cases have to be considered: Case1:When sign(w′x)=?sign( w′x),or w′x= w′x=0,the total contribution from w and w to the integral is0,since

sign(w′x)q(w| w)+sign( w′x)q( w| w)=0. Case2:When sign(w′x)=sign( w′x),since w′x+ w′x=

2 w′x,it follows that sign( w′x)=sign(w′x)=

sign( w′x).Thus,

sign sign(w′x)q(w| w)+sign( w′x)q( w| w)

=sign( w′x). Case3:When w′x=0, w′x=0or w′x=0, w′x=0, we have again from w′x+ w′x=2 w′x that sign sign(w′x)q(w| w)+sign( w′x)q( w| w)

=sign( w′x). Unless w=0(in which case the classi?er in unde?ned), case2or3will occur at least some times.Hence proved. Assumptions&Limitations of this approach:This Lemma suggests that one may simply use point classi?ers and avoid Bayesian integration for linear hyper-plane classi?ers.How-ever,this is only true if our objective is only to obtain the classi?cation:if we used some other link function to obtain a soft-classi?cation then the above lemma can not be extended for our purposes exactly(it may still approximate the result). Secondly,the lemma only holds for symmetric posterior distributions p(α,α0,δk,δj,k|D).However,in practice,most approximate Bayesian methods would also use a Gaussian

approximation in any case,so it is not a huge limitation.Fi-nally,although any symmetric distribution may be approxi-

mated by a point classi?er at its mean,the estimation of the mean is still required.However,for computing a Laplace ap-proximation to the posterior,one simply chooses to approxi-

mate the mean by the mode of the posterior distribution:the mode can be obtained simply by maximizing the posterior during maximum a posteriori(MAP)estimation.This is com-

putationally expedient&works reasonably well in practice. 4Proposed Algorithm

4.1Augmenting Feature Vectors(AFV)

In the previous section we showed that full Bayesian inte-

gration is not required and a suitably chosen point classi?er would achieve an identical classi?cation decision.In this sec-tion,we make practical recommendations about how to ob-

tain these equivalent point classi?ers with very little effort, using already existing algorithms in an original way.

We propose the following strategy for building linear clas-

si?ers in a way that uses the known(or postulated)correla-tions between the samples.First,for all the training samples, construct augmented feature vectors with additional features

corresponding to the indicator functions for the patient&hos-pital identity.In other words,to the original feature vector of a sample x,append a vector that is composed of zero in all

locations except the location of the patient-id,and another corresponding to the location of the hospital-id,and augment the feature vector with the auxiliary features by,ˉx=[x′?x′]′. Next use this augmented feature vector to train a classi-?er using any standard training algorithm such as Fisher’s Discriminant or SVMs.This classi?er will classify samples

based not only on their features,but also based on the ID of the hospital and the patient.Viewed differently,the classi?er in this augmented feature space not only attempts to explain how the original features predict the class membership,but it also simultaneously assigns a patient and hospital speci?c “random-effect”explanation to de-correlate the training data. During the test phase,for new patients/hospitals,the ran-dom effects may simply be ignored(implicitly this is what we used in the lemma in the previous section).In this paper we implement the proposed approach for Fisher’s Discriminant.

4.2Fisher’s Discriminant

In this section we adopt the convex programming formulation

of Fisher’s Discriminant(FD)presented in[8],

min

α,α0,ξ

L(ξ|cξ)+L(α|cα)

s.t.ξi+y i=α′x i+α0

e′ξC=0,C∈{±}

(7)

where L(z)≡?log p(z)be the negative log likelihood associated with the probability density p.The constraint ξi+y i=α′x i+α0?i,i=(1,2,...,?)where?is the total number of labeled samples,pulls the output for each sample to its class label while the constraints e′ξC=0,C∈{±} ensure that the average output for each class is the label,i.e. without loss of generality the between class scattering is?xed to be two.The?rst term in the objective function minimizes the within class scattering whereas the second term penalizes modelsαthat are a priori unlikely.

Setting L(ξ|cξ)= ξ 2,L(α|cα)= α 2,i.e.assuming a zero mean Gaussian density model with unit variance for p(ξ|cξ)and p(α|cξ)and using the augmented feature vectors we obtain the following optimization problem.Fisher’s Dis-criminant with Augmented Feature Vectors(FD-AFV): min

α,α0,ξ,δ,γ

ˉcξ+ ξ+ 2+ˉcξ? ξ? 2+ˉcα α 2+ˉcδ δ 2 s.t.ξijk+y ijk=α′x ijk+δ′?x ijk+α0

e′ξC=0,C∈{±}

where for the?rst set of constraints,k runs from1to K,j from1to p k for each k,i from1to?jk for each pair of(j,k),ξC is a vector ofξijk corresponding to class C andδis the vector of model parameters for the auxiliary features.

4.3Parameter Estimation

Depending on the sensitivity of the candidate generation mechanism,a representative training dataset might have on the order of few thousand candidates of which only few are positive making the training data very large and unbalanced between classes.To account for the unbalanced nature of the data we used different tuning parameters,ˉcξ+,ˉcξ?for the positive and negative classes.To estimate these andˉcα,ˉcδ, we?rst coarsely tune each parameter independently and de-termine a range of values for that parameter.Then for each parameter we consider a discrete set of three values.We use 10-fold cross validation on the training set as a performance measure to?nd the optimum set of parameters.

5Experimental Studies

For the experiments in this section,we compare three tech-niques:naive Fisher’s Discriminant(FD),FD-AFV,and GLMM(implemented using approximate expectation propa-gation inference).We compare FD-AFV and GLMM against FD to see if these algorithms yield statistically signi?cant im-provements in the accuracy of the classi?er.We also study if the computationally much less expensive FD-AFV is compa-rable to GLMM in terms of classi?er sensitivity.

For most CAD problems,it is important to keep the num-ber of false positives per volume at a reasonable level,since each candidate that is marked as positive by the classi?er will then be visually inspected by a physician.For the projects described below,we focus our attention on the region of the Receiver Operating Characteristics(ROC)curve with fewer than5false positives per volume.This roughly corresponds to90%speci?city for both datasets.

5.1Experiment1:Colon Cancer

Problem Description:Colorectal cancer is the third most common cancer in both men and women.It is estimated that in2004,nearly147,000cases of colon and rectal cancer will be diagnosed in the US,and more than56,730people would die from colon cancer[4].While there is wide consensus that screening patients is effective in decreasing advanced disease, only44%of the eligible population undergoes any colorectal cancer screening.There are many factors for this,key being: patient comfort,bowel preparation and cost.

Non-invasive virtual colonoscopy derived from computer tomographic(CT)images of the colon holds great promise as a screening method for colorectal cancer,particularly if CAD tools are developed to facilitate the ef?ciency of radiol-ogists’efforts in detecting lesions.In over90%of the cases colon cancer progressed rapidly is from local(polyp adeno-mas)to advanced stages(colorectal cancer),which has very poor survival rates.However,identifying(and removing)le-sions(polyp)when still in a local stage of the disease,has very high survival rates[1],hence early diagnosis is critical. This is a challenging learning problem that requires the use of a random effects model due to two reasons.First, the sizes of polyps(positive examples)vary from1mm to all the way up to60mm:as the size of a polyp gets larger, the number of candidates identifying it increases(these can-didates are highly correlated).Second,the data is collected from152patients across seven different sites.Factors such as patient anatomy/preparation,physician practice and scan-ner type vary across different patients and hospitals—the data from the same patient/hospital is correlated.

Dataset:The database of high-resolution CT images used in this study were obtained from NYU Medical Center,Cleve-land Clinic Foundation,and?ve EU sites in Vienna,Belgium, Notre Dame,Muenster and Rome.The275patients were randomly partitioned into training(n=152patients,with126 polyps among15596candidates)and test(n=123patients, with104polyps among12984candidates)groups.The test group was sequestered and only used to evaluate the perfor-mance of the?nal system.A combined total of48features are extracted for each candidate.

Experimental Results:The ROC curves obtained for the three techniques on the test data are shown in Figure2.To better visualize the differences among the curves,the en-larged views corresponding to regions of clinical signi?cance are plotted.We performed pair-wise analysis of the three curves to see if the difference between each pair for the area under the ROC-curve is statistically signi?cant(p values com-puted using the technique described in[2]).Statistical anal-ysis indicates that FD-AFV is more accurate than FD with a p-value of0.01,GLMM will be more accurate than FD with a p-value of0.07and FD-AFV will be more accurate than GLMM with a p-value of0.18.The run times for each algo-rithm are shown in Table1.

5.2Experiment2:Pulmonary Embolism

Data Sources and Domain Description

Pulmonary embolism(PE),a potentially life-threatening con-dition,is a result of underlying venous thromboembolic dis-ease.An early and accurate diagnosis is the key to survival. Computed tomography angiography(CTA)has merged as an accurate diagnostic tool for PE.However,there are hundreds of CT slices in each CTA study.Manual reading is laborious, time consuming and complicated by various PE look-alikes (false positives)including respiratory motion artifact,?ow-related artifact,streak artifact,partial volume artifact,stair step artifact,lymph nodes,vascular bifurcation among many others[6],[10].Several Computer-Aided Detection(CAD) systems are developed to assist radiologists in this process by

Figure2:Comparing FD,FD-AFV on the Colon Testing data, p F D

F D?AF V

=0.01,p F D

GLMM

=0.07,p GLMM

F D?AF V

=0.18.

Table1:Comparison of training times in seconds for FD,FD-AFV and GLMM for PE and Colon training data

FD526

FD-AFV728

GLMM518329

Figure3:Comparing FD,FD-AFV on the PE Testing data,

p F D

F D?AF V =0.08,p F D

GLMM

=0.25,p GLMM

F D?AF V

=0.38.

that FD-AFV will be more sensitive than FD with a p-value of 0.08,GLMM will be more sensitive than FD with a p-value of0.25and FD-AFV will be more sensitive than GLMM with a p-value of0.38.Even though statistical signi?cance can not be proved here(a p-value less than0.05is required),a p-value of0.08clearly favors FD-AFV over FD.Note also that the ROC curve corresponding to FD-AFV clearly dominates that of FD in the region of clinical interest.When the ROC curves for FD-AFV and GLMM are compared we see that the difference is not signi?cant.However,using the proposed augmented feature vector formulation we were able account for the random effects with much less of computational effort than is required for GLMM.

6Conclusion

Summary:The basic message of this paper is:there is a standard i.i.d.assumption that is implicit in the derivation of most classi?er-training algortihms.By allowing the classi?er to explain the data based on both the random effects(common to many samples)and the?xed effects speci?c to a each sam-ple,the learning algorithm can achieve better performance.If training data is limited,fully Bayesian integration via MCMC algorithms can help improve accuracy slightly,but signi?-ciant gains can be reaped without even that effort.All that a practitioner needs to do is to create a categorical indicator variable for each random effect.

Contributions:Generalized Linear Mixed effects models have been extensively studied in the statistics and epidemi-ology communities.The main contribution of this paper is to highlight the problem and solutions to the Machine learn-ing&Data Mining community:in our experiments,both GLMMs and the proposed approach improve the classi?ca-tion accuracy as compared to ignoring the inter-sample cor-relations.Our secondary contribution is to propose a very simple and extremely ef?cient solution that can scale well to very large data mining problems,unlike the current statistics approach to GLMMs that are computationally infeasible for the large datasets.Despite the computational speedup,the classi?cation accuracy of the proposed method was no worse than that of GLMMs in our real-life experiments. Applicability:Although we describes our approach in a medical setting in order to make it more concrete,the algo-rithm is completely general and is capable of handling any hi-erarchical correlations structure among the training samples. Though our experiments focussed on Fisher’s Discriminants in this study,the proposed model can be incorporated into any linear discriminant function based classi?er. References

[1]L.Bogoni,P.Cathier,M.Dundar,A.Jerebko,https://www.360docs.net/doc/7713588297.html,kare,

J.Liang,S.Periaswamy,M.Baker,and M.Macari.Cad for colonography:A tool to address a growing need.

British Journal of Radiology,78:57–62,2005.

[2]J.A.Hanley and B.J.McNeil.A method of comparing

the areas under receiver operating characteristic curves derived from the same cases.Radiology,148:839–843, 1983.

[3]H.Ishwaran.Inference for the random effects in

bayesian generalized linear mixed models.In ASA Pro-ceedings of the Bayesian Statistical Science Section, pages1–10,2000.

[4] D.Jemal,R.Tiwari,T.Murray, A.Ghafoor,

A.Saumuels,E.Ward,E.Feuer,and M.Thun.Can-

cer statistics,2004.

[5] B.Krishnapuram,L. C.M.Figueiredo,and

A.Hartemink.Sparse multinomial logistic regres-

sion:Fast algorithms and generalization bounds.

IEEE Transactions on Pattern Analysis and Machine Intelligence(PAMI),27:957968,2005.

[6]Y.Masutani,H.MacMahon,and https://www.360docs.net/doc/7713588297.html,puterized

detection of pulmonary embolism in spiral ct angiogra-phy based on volumetric image analysis.IEEE Trans-actions on Medical Imaging,21:1517–1523,2002. [7]P.McCullagh and J.A.Nelder.Generalized Linear

Models.Chapman&Hall/CRC,1989.

[8]S.Mika,G.R¨a tsch,and K.-R.M¨u ller.A mathematical

programming approach to the kernel?sher algorithm.

In NIPS,pages591–597,2000.

[9]M.Quist,H.Bouma,C.V.Kuijk,O.V.Delden,and

https://www.360docs.net/doc/7713588297.html,puter aided detection of pulmonary

embolism on multi-detector ct,2004.

[10]C.Wittram,M.Maher,A.Yoo,M.Kalra,O.Jo-Anne,

M.Shepard,and T.McLoud.Ct angiography of pul-monary embolism:Diagnostic criteria and causes of misdiagnosis,2004.

[11]C.Zhou,L.M.Hadjiiski,B.Sahiner,H.-P.Chan,S.Pa-

tel,P.Cascade,E.A.Kazerooni,and https://www.360docs.net/doc/7713588297.html,put-erized detection of pulmonary embolism in3D com-puted tomographic(CT)images:vessel tracking and segmentation techniques.In Medical Imaging2003: Image Processing.Edited by Sonka,Milan;Fitzpatrick, J.Michael.Proceedings of the SPIE,Volume5032,pp.

1613-1620(2003).,pages1613–1620,May2003.

知识管理和信息管理之间的联系和区别_[全文]

引言:明确知识管理和信息管理之间的关系对两者的理论研究和实际应用都有重要意义。从本质上看,知识管理既是在信息管理基础上的继承和进一步发展,也是对信息管理的扬弃,两者虽有一定区别,但更重要的是两者之间的联系。 知识管理和信息管理之间的联系和区别 By AMT 宋亮 相对知识管理而言,信息管理已有了较长的发展历史,尽管人们对信息管理的理解各异,定义多样,至今尚未取得共识,但由于信息对科学研究、管理和决策的重要作用,信息管理一词一度成为研究的热点。知识管理这一概念最近几年才提出,而今知识管理与创新已成为企业、国家和社会发展的核心,重要原因就在于知识已取代资本成为企业成长的根本动力和竞争力的主要源泉。 信息管理与知识管理的关系如何?有人将知识管理与信息管理对立起来,强调将两者区分开;也有不少人认为知识管理就是信息管理;多数人则认为,信息管理与知识管理虽然存在较大的差异,但两者关系密切,正是因为知识和信息本身的密切关系。明确这两者的关系对两者的理论研究和实际应用都有重要意义。从本质上看,知识管理既是在信息管理基础上的继承和进一步发展,也是对信息管理的扬弃,两者虽有一定区别,但更重要的是两者之间的联系。 一、信息管理与知识管理之概念 1、信息管理 “信息管理”这个术语自本世纪70年代在国外提出以来,使用频率越来越高。关于“信息管理”的概念,国外也存在多种不同的解释。1>.人们公认的信息管理概念可以总结如下:信息管理是实观组织目录、满足组织的要求,解决组织的环境问题而对信息资源进行开发、规划、控制、集成、利用的一种战略管理。同时认为信息管理的发展分为三个时期:以图书馆工作为基础的传统管理时期,以信息系统为特征的技术管理时期和以信息资源管理为特征的资源管理时期。 2、知识管理 由于知识管理是管理领域的新生事物,所以目前还没有一个被大家广泛认可的定义。Karl E Sverby从认识论的角度对知识管理进行了定义,认为知识管理是“利用组织的无形资产创造价值的艺术”。日本东京一桥大学著名知识学教授野中郁次郎认为知识分为显性知识和隐性知识,显性知识是已经总结好的被基本接受的正式知识,以数字化形式存在或者可直接数字化,易于传播;隐性知识是尚未从员工头脑中总结出来或者未被基本接受的非正式知识,是基于直觉、主观认识、和信仰的经验性知识。显性知识比较容易共享,但是创新的根本来源是隐性知识。野中郁次郎在此基础上提出了知识创新的SECI模型:其中社会化(Socialization),即通过共享经验产生新的意会性知识的过程;外化(Externalization),即把隐性知识表达出来成为显性知识的过程;组合(Combination),即显性知识组合形成更复杂更系统的显性知识体系的过程;内化(Internalization),即把显性知识转变为隐性知识,成为企业的个人与团体的实际能力的过程。

when 和while的用法区别

when 和while的用法区别 两者的区别如下: ①when是at or during the time that, 既指时间点,也可指一段时间; while是during the time that,只指一段时间,因此when引导的时间状语从句中的动词可以是终止性动词,也可以是延续性动词,而while从句中的动词必须是延续性动词。 ②when 说明从句的动作和主句的动作可以是同时,也可以是先后发生;while 则强调主句的动作在从句动作的发生的过程中或主从句两个动作同时发生。 ③由when引导的时间状语从句,主句用过去进行时,从句应用一般过去时;如果从句和主句的动作同时发生,两句都用过去进行时的时候,多用while引导,如: a. When the teacher came in, we were talking. 当此句改变主从句的位置时,则为: While we were talking, the teacher came in. b. They were singing while we were dancing. ④when和while 还可作并列连词。when表示“在那时”;while表示“而,却”,表对照关系。如: a. The children were running to move the bag of rice when they heard the sound of a motor bike. 孩子们正要跑过去搬开那袋米,这时他们听到了摩托车的声音。 b. He is strong while his brother is weak. 他长得很结实,而他弟弟却很瘦弱。 一。引导时间状语从句时,WHILE连接的是时间段,而WHEN连接的多是时间点 例如What does your father do while your mother is cooking? What does your mother do when you come back? 二,WHILE可以连接两个并列的句子,而WHEN不可以 例如I was trying my best to finish my work while my sister was whtching TV 三,WHEN是特殊疑问词,对时间进行提问,WHILE不是。 例如,When were you bron? 续性动词和短暂性动词 英语中的动词,是学习中的重点,又是难点。英语中的动词有多种分类法。根据其有无含义,动词可分为实义动词和助动词;根据动词所表示的是动作还是状态,可以分为行为动词和状态动词;根据动词所表示的动作能否延缓,分为延续性动词和终止性动词。 可以表示持续的行为或状态的动词,叫做“延续性动词”,也叫“持续性动词”,如:be, keep, have, like, study, live, etc. 有的表示短暂、瞬间性的动词,叫做“终止性动词”,也可叫“短暂性动词”,或“瞬间性动词”,如die, join, leave, become, return, reach, etc.

when和while区别及专项练习---含答案

when和while用法区别专项练习 讲解三例句: 1. The girls are dancing while the boys are singing. 2. Kangkang’s mother is cooking when he gets home. 3. When/While Kangkang’s mother is cooking, he gets home. 一、用when或者while填空 Margo was talking on the phone, her sister walked in. we visited the school, the children were playing games. · Sarah was at the barber’s, I was going to class. I saw Carlos, he was wearing a green shirt. Allen was cleaning his room, the phone rang. Rita bought her new dog; it was wearing a little coat. 7. He was driving along ________ suddenly a woman appeared. 8. _____ Jake was waiting at the door, an old woman called to him. 9. He was reading a book ______suddenly the telephone rang. 10. ______ it began to rain, they were playing chess. [ 二、用所给动词适当形式填空 11. While Jake __________ (look) for customers, he _______ (see) a woman. 12. They __________ (play) football on the playground when it _____ (begin) to rain. 13. A strange box ________ (arrive) while we _________ (talk). 14. John ____________ (sleep) when someone __________ (steal) his car. 15. Father still (sleep) when I (get) up yesterday morning. 16. Grandma (cook) breakfast while I (wash) my face this morning. 17. Mother (sweep) the floor when I (leave) home. ~ 18. I (read) a history book when someone (knock ) at the door. 19. Mary and Alice are busy (do) their homework. 20. The teacher asked us (keep) the windows closed. 21. I followed it (see) where it was going. 22. The students (play) basketball on the playground from 3 to 4 yesterday afternoon. / 三、完成下面句子,词数不限 1.飞机在伦敦起飞时正在下雨。 It when the plane in London. 2.你记得汶川大地震时你在做什么吗 Do you remember what you when Wenchuan Earthquake . 3.当铃声想起的时候,我们正在操场上玩得很开心。 We on the playground when the bell . 4.当妈妈下班回家时,你在做什么 % when Mum from work 5.当我在做作业时,有人敲门。 I was doing my homework, someone

信息管理与知识管理典型例题讲解

信息管理与知识管理典型例题讲解 1.()就是从事信息分析,运用专门的专业或技术去解决问题、提出想法,以及创造新产品和服务的工作。 2.共享行为的产生与行为的效果取决于多种因素乃至人们的心理活动过程,()可以解决分享行为中的许多心理因素及社会影响。 3.从总体来看,目前的信息管理正处()阶段。 4.知识员工最主要的特点是()。 5.从数量上来讲,是知识员工的主体,从职业发展角度来看,这是知识员工职业发展的起点的知识员工类型是()。 6.人类在经历了原始经济、农业经济和工业经济三个阶段后,目前正进入第四个阶段——(),一种全新的经济形态时代。 1.对信息管理而言,( )是人类信息活动产生和发展的源动力,信息需求的不断 变化和增长与社会信息稀缺的矛盾是信息管理产生的内在机制,这是信息管理的实质所在。 2.()就是从事信息分析,运用专门的专业或技术去解决问题、提出想法,以及创造新产品和服务的工作。 3.下列不属于信息收集的特点() 4.信息管理的核心技术是() 5.下列不属于信息的空间状态() 6.从网络信息服务的深度来看,网络信息服务可以分 1.知识管理与信息管理的关系的()观点是指,知识管理在历史上曾被视为信息管理的一个阶段,近年来才从信息管理中孵化出来,成为一个新的管理领域。 2.著名的Delicious就是一项叫做社会化书签的网络服务。截至2008年8月,美味书签已经拥有()万注册用户和160万的URL书签。 3.激励的最终目的是()

4.经济的全球一体化是知识管理出现的() 5.存储介质看,磁介质和光介质出现之后,信息的记录和传播越来越() 6.下列不属于信息的作用的选项() 1.社会的信息化和社会化信息网络的发展从几方面影响着各类用户的信息需求。() 2.个性化信息服务的根本就是“()”,根据不同用户的行为、兴趣、爱好和习惯,为用户搜索、组织、选择、推荐更具针对性的信息服务。 3.丰田的案例表明:加入( )的供应商确实能够更快地获取知识和信息,从而促动了生产水平和能力的提高。 4.不属于信息资源管理的形成与发展需具备三个条件() 5.下列不属于文献性的信息是() 6.下列不属于()职业特点划分的

when和while的用法解析、练习题及答案(附总结表格)

when和while的用法解析、练习题及答案(附总结表格) 一、讲解三例句: 1. The girls are dancing while the boys are singing. 2. Lucy’s mother is cooking when she gets home. 3. When/While Lucy’s mother is cooking, she gets home. 二、用when或者while填空 1.______ Margo was talking on the phone, her sister walked in. 2.______ we visited the school, the children were playing games. 3.______ Sarah was at the barber’s, I was going to class. 4.______ I saw Carlos, he was wearing a green shirt. 5.______ Allen was cleaning his room, the phone rang. 6.______ Rita bought her new dog; it was wearing a little coat. 7. He was driving along ________ suddenly a woman appeared. 8. _____ Jake was waiting at the door, an old woman called to him. 9. ______ it began to rain, they were playing chess. 10. She saw a taxi coming ______ the woman was waiting under the streetlight. 三、语法 while和when都是表示同时,到底句子中是用when还是while主要看主句和 从句中所使用 的动词是短暂性动作(瞬时动词)还是持续性动作。 1、若主句表示一个短暂性动作,而从句表示的是一个持续性动作时,用 When/While。如: He fell asleep when while he was reading. 他看书时睡着了。

when和while的区别是

Period____8______ in Unit ___6_________ (Period_____20_____Week 4-6)Subject _Grammar (B) Teaching aims To use the Past Continuous Tense with while and when correctly Teaching interesting points of this class: The differences of when and while in Past Continuous Tense Teaching steps: I. Cooperation and intercourse 1. Check the preparation 2. Discuss in groups II. Question and explanation Check the exercises on page 101, and explain the differences between when and while. when和while的区别是: when只能用于一般时态 while可以用于进行时态 when conj. 在...的时候, 当…的时候 when 在绝大多数情况下,所引导的从句中,应该使用非延续性动词(也叫瞬间动词) 例如:I'll call you when I get there. 我一到那里就给你打电话 I was about to go out when the telephone rang.我刚要出门,电话铃就响了。 但是,when 却可以be 连用。 例如:I lived in this village when I was a boy. 当我还是个孩子的时候我住在这个村庄里。 When I was young, I was sick all the time. 在我小时候我总是生病 while 当...的时候 While he was eating, I asked him to lend me $2. 当他正在吃饭时,我请他借给我二美元。While I read, she sang. 我看书时,她在唱歌。 while 的这种用法一般都和延续性动词连用 while 可以表示“对比‘,这样用有的语法书认为是并列连词 Some people like coffee, while others like tea. 有些人喜欢咖啡, 而有些人喜欢茶。 as 当...之时,一边......一边....... I slipped on the ice as I ran home. 我跑回家时在冰上滑了一跤 He dropped the glass as he stood up. 他站起来时,把杯子摔了。 She sang As she worked. 她一边工作一边唱歌。 III. Rumination and evaluation when, as, while这三个词都可以引出时间状语从句,它们的差别是:when 从句表示某时刻或一段时间as 从句表示进展过程,while 只表示一段时间 When he left the house, I was sitting in the garden. 当他离开家时,我正在院子里坐着。 When he arrived home, it was just nine o'clock.

国内外知识共享理论和实践述评

●储节旺,方千平(安徽大学 管理学院,安徽 合肥 230039) 国内外知识共享理论和实践述评 3 摘 要:知识共享是知识管理的重要环节之一。本文评析了知识共享的内涵、类型、过程、模式、实践。对知识共享内涵的认识有信息技术、沟通、组织学习、市场、系统等角度;对知识共享的类型的认识有共享的主体、共享的知识类型等角度;对知识共享的过程的认识有交流、知识主体等角度;知识共享的模式包括SEC I 模式、行动—结果的模式、基于信息传递的模式、正式和非正式的模式。对知识共享的实践主要总结了一些著名公司的做法和成绩。 关键词:知识共享;知识管理;知识共享模式;理论研究 Abstract:Knowledge sharing is the i m portant link in the knowledge manage ment chain .This paper revie ws the meaning,type,p r ocess,model and p ractice of knowledge sharing .The meaning of knowledge sharing is su mma 2rized fr om the pers pectives of I T,communicati on,organizati on learning,market and syste m.The type of knowl 2edge sharing is su mmarized fr om the pers pectives of the main body and the type of knowledge sharing .The p r ocess of knowledge sharing is su mmarized fr om the pers pectives of communicati on and the main body of knowledge .The 2model of knowledge sharing is su mmarized fr om the pers pectives of SEC I,acti on -result,inf or mati on -based deliv 2ery and for mal and inf or mal type .The p ractice of knowledge sharing is su mmarized in s ome famous cor porati ons ’method of work and their achieve ments . Keywords:knowledge sharing;knowledge manage ment;model of knowledge sharing;theoretical study 3本文为国家社会科学基金项目“国内外知识管理理论发展与学科体系构建研究”(项目编号:06CT Q009)及安徽大学人才建设计划项目“企业应对危机的知识管理问题研究”研究成果之一。 知识管理的目标是为了有效地开发利用知识,实现知识的价值,其前提与基础是知识的获取与积累,其核心是知识的交流与学习,其追求的目标是知识的吸收与创造,从而最终达到充分利用知识获得效益、获得先机、获得竞争优势的目的。在这个过程中最根本的前提是知识的存在,包括知识的质与量。而如何拥有足够质与量的知识在于知识的积累与共享,因为个体的经验、知识和智慧都是相对有限的,而一个组织团体的知识是相对无限的,如何使一个组织的所有成员交流共享知识是知识管理达到最佳状态的关键。知识共享是发挥知识价值最大化的有效途径,知识共享是知识管理的基点,是知识管理的优势所在。因此,知识共享的理论研究和实施运营都显得十分必要。 保罗?S ?迈耶斯于1997年详细介绍了适合于知识共享的组织系统的设计方案[1]。自此以后,专家学者们开始从不同角度对知识共享展开研究,研究领域从企业不断扩 大到政府、教育等社会组织。有关知识共享的研究逐渐成为知识管理的重点。从笔者掌握的知识共享研究的相关文献来看,其研究内容主要体现在知识共享的定义类型、过程、模式等方面。 1 知识共享的定义 由于知识内涵的开放性和知识分类的复杂性,专家学者们对知识共享的内涵理解也很难形成一致的看法,但他们还是从不同的视角对知识共享的内涵提出了许多颇有见地的观点。 111 观点简述 1)信息技术角度。Ne well 及Musen 从信息技术的角 度来理解知识共享,认为必须厘清知识库中符号和知识之间存在的差异。知识库本身并不足以用来捕捉知识,而是赋予知识库意义的解释过程。因此,对知识共享而言必须包括理性判断与推理机制,才能赋予知识库生命[2]。 2)信息沟通角度。Hendriks 和Botkin 等人认为知识 共享是一种人与人之间的联系和沟通的过程。 3)组织学习角度。Senge 认为,知识共享不仅仅是一 方将信息传给另一方,还包含愿意帮助另一方了解信息的内涵并从中学习,进而转化为另一方的信息内容,并发展

WHEN与WHILE用法区别

WHEN与WHILE用法区别 when, while这三个词都有"当……时候"之意,但用法有所不同,使用时要特别注意。 ①when意为"在……时刻或时期",它可兼指"时间点"与"时间段",所引导的从句的动词既可以是终止性动词,也可是持续性动词。如: When I got home, he was having supper.我到家时,他正在吃饭。 When I was young, I liked dancing.我年轻时喜欢跳舞。 ②while只指"时间段",不指"时间点",从句的动词只限于持续性动词。如:While I slept, a thief broke in.在我睡觉时,盗贼闯了进来。 辨析 ①when从句与主句动作先后发生时,不能与while互换。如: When he has finished his work, he takes a short rest.每当他做完工作后,总要稍稍休息一下。(when = after) When I got to the cinema, the film had already begun.当我到电影院时,电影已经开始了。(when=before) ②when从句动词为终止性动词时,不能由while替换。如: When he came yesterday, we were playing basketball.昨天他来时,我们正在打篮球。 ③当从句的谓语是表动作的延续性动词时,when, while才有可能互相替代。如:While / When we were still laughing, the teacher came in.正当我们仍在大声嬉笑时,老师进来了。 ④当从句的谓语动词是终止性动词,而且主句的谓语动词也是终止性动词 时,when可和as通用,而且用as比用when在时间上更为紧凑,有"正当这时"的含义。如: He came just as (or when) I reached the door.我刚到门那儿,他就来了。 ⑤从句的谓语动词如表示状态时,通常用while。如: We must strike while the iron is hot.我们应该趁热打铁。 ⑥while和when都可以用作并列连词。

(完整版)信息管理与知识管理

信息管理与知识管理 ()是第一生产力,科学技术 ()是物质系统运动的本质特征,是物质系统运动的方式、运动的状态和运动的有序性信息按信息的加工和集约程度分()N次信息源 不是阻碍企业技术创新的主要因素主要包括()市场需求薄弱 不属于 E.M.Trauth博士在20世纪70年代首次提出信息管理的概念,他将信息管理分内容为()网络信息管理 不属于W.Jones将个人信息分为三类()个人思考的 不属于信息的经济价值的特点()永久性 不属于信息的社会价值特征的实()广泛性 不属于信息资源管理的形成与发展需具备三个条件()信息内容 存储介质看,磁介质和光介质出现之后,信息的记录和传播越来越()方便快捷 国外信息管理诞生的源头主要有()个1个 即信息是否帮助个人获得更好的发展,指的是()个人发展 社会的信息化和社会化信息网络的发展从几方面影响着各类用户的信息需求。()2个 社会价值的来源不包括()社会观念 图书馆层面的障碍因素不去包括:()书籍复杂无法分类 下列不属于信息收集的过程的()不提供信息收集的成果 下列不属于信息收集的特点()不定性

下列不属于用户在信息获取过程中的模糊性主要表现()用户无法准确表达需求 下列哪种方式不能做出信息商品()口口相传 下列现象不是五官感知信息为()电波 下列属于个人价值的来源()个人需求 信息information”一词来源于哪里()拉丁 信息管理的核心技术是()信息的存储与处理 技术信息管理观念是专业技术人员的(基本要素 信息认知能力是专业技术人员的(基本素质) 信息是通信的内容。控制论的创始人之一维纳认为,信息是人们在适应(外部) 世界并且使之反作用于世界的过程中信息收集的对象是(信息源)由于()是一种主体形式的经济成分,它们发展必将会导致社会运行的信息化。(信息经济) 在网络环境下,用户信息需求的存在形式有()4种 判断题 “效益”一词在经济领域内出现,效益递增或效益递减也是经济学领域的常用词。(对) “知识经济”是一个“直接建立在知识和信息的生产、分配和使用之上的经济”,是相对于“以物质为基础的经济”而言的一种新型的富有生命力的经济形态。(对) 百度知道模块是统计百度知道中标题包含该关键词的问题,然后由问

while、when和as的用法区别

as when while 的区别和用法 as when while的用法 一、as的意思是“正当……时候”,它既可表示一个具体的时间点,也可以表示一段时间。as可表示主句和从句的动作同时发生或同时持续,即“点点重合”“线线重合”;又可表示一个动作发生在另一个动作的持续过程中,即“点线重合”, 但不能表示两个动作一前一后发生。如果主句和从句的谓语动词都表示持续性的动作,二者均可用进行时,也可以一个用进行时,一个用一般时或者都用一般时。 1、As I got on the bus,he got off. 我上车,他下车。(点点重合)两个动作都是非延续性的 2、He was writing as I was reading. 我看书时,他在写字。(线线重合)两个动作都是延续性的 3、The students were talking as the teacher came in. 老师进来时,学生们正在讲话。(点线重合)前一个动作是延续性的,而后一个动作时非延续性的 二、while的意思是“在……同时(at the same time that )”“在……期间(for as long as, during the time that)”。从while的本身词义来看,它只能表示一段时间,不能表示具体的时间点。在时间上可以是“线线重合”或“点线重合”,但不能表示“点点重合”。例如: 1、He was watching TV while she was cooking. 她做饭时,他在看电视。(线线重合) 2、He was waiting for me while I was working. 我工作的时候,他正等着我。(线线重合) 3、He asked me a question while I was speaking. 我在讲话时,他问了我一个问题。(点线重合)

知识管理案例之一Marconi的知识共享

知识管理案例之一——Marconi的知识共享 https://www.360docs.net/doc/7713588297.html,./ewkArticles/Category111/Article14375.htm 来源:.cio. 翻译特约撰稿人:王晨 2003-6-6 投稿 Marconi进入了一场并购狂潮,在3年的时间内先后收购了10家通信公司,随之而来的便是严峻的挑战:这个30亿美元通信设备的生产巨头如何保证它的技术支持人员了解最新收购的技术并在中向客户快速而准确的提供答案呢?Marconi如何培养熟悉公司所有产品的新人员? Marconi的技术支持人员(遍布全球14个呼叫中心的500个工程师)每个月要回复大约10,000个有关公司产品的问题。在并购之前,技术支持人员依赖于公司的外部网(Extranet)TacticsOnline,他们和客户在那里可以查询最近的问题和文本文档。当新的技术支持人员和产品加入公司以后,Marconi希望用一个更加全面的知识管理系统来补充。而新加盟公司的工程师却不愿将他们一直支持的产品的知识共享。“他们觉得他们的知识就象一X安全的毯子,确保他们不会失去工作”,负责管理服务技术与研发的主管DaveBreit说,“进行并购之后,最为重要的就是避免知识囤积,而要使它们共享”。 与此同时,Marconi还想通过把更多的产品和系统信息呈现给客户以及缩短客户的长度来提高客户服务部门的效率。“我们希望在提供客户自助服务的Web页和增加技术支持人员之间进行平衡”,Breit说,“我们还希望能够为我们的一线工程师(直接与客户打交道的)更加迅速的提供更多的信息,使得他们能够更快的解决客户的问题”。 建立知识管理的基础 当Marconi1998年春开始评估知识管理技术时,在技术支持人员中共享知识已经不是什么新鲜的概念了。技术支持人员已经习惯了在3到4人的团队中工作,以作战室的方式集合起来解决客户的技术问题。而且一年前,Marconi已经开始将技术支持人员季度奖的一部分与他们提交给TacticsOnline的知识多少、他们参与指导培训其他技术支持人员的情况进行挂钩。“每位技术服务人员需要教授两次培训课,写10条FAQ,才能获得全奖”,Breit说,“当新公司加入以后,新的技术支持人员将实行同样的奖金计划,这个办法使我们建立了一个相当开放的知识共享的环境”。 为了增强TacticsOnline,Marconi从ServiceWareTechnologies那里选择了软件,部分是因为他们的技术很容易与公司的排障客户关系管理系统(RemedyCRMSystem)集成,技术支持人员用这个系统来记录客户请求,跟踪客户交流。另外,Breit指出,Marconi希望它的技术支持人员能够利用已有的产品信息的Oracle数据库。 Breit的部门花了六个月的时间实施新系统并培训技术支持人员。这个被称为知识库(KnowledgeBase)的系统可以与公司的客户关系管理系统相连接,由强大的Oracle数据库所支持。Marconi客户与产品集成的观点为技术服务人员提供了全面的交流历史纪录。例如,技术服务人员可以将制造者加入数据库,立即接过上一位技术服务人员与客户进行交流。 在第一线 TacticsOnline补足了这个新系统。“知识库中的数据是关于我们不同产品线的特定的排障技巧和线索”,知识管理系统的管理员ZehraDemiral说,“而TacticsOnline更多的则是客户进入我

2019年温州一般公需科目《信息管理与知识管理》模拟题

2019年温州一般公需科目《信息管理与知识管理》模拟题 一、单项选择题(共30小题,每小题2分) 2.知识产权的时间起点就是从科研成果正式发表和公布的时间,但有期限,就是当事人去世()周年以内权利是保全的。 A、三十 B、四十 C、五十 D、六十 3.()国务院发布《中华人民共和国计算机信息系统安全保护条例》。 A、2005年 B、2000年 C、1997年 D、1994年 4.专利申请时,如果审察员认为人类现有的技术手段不可能实现申请者所描述的结果而不给予授权,这体现了()。 A、技术性 B、实用性 C、创造性 D、新颖性 5.根据本讲,参加课题的研究策略不包括()。 A、量力而行 B、逐步击破 C、整体推进 D、有限目标 6.本讲认为,传阅保密文件一律采取()。 A、横向传递 B、纵向传递 C、登记方式 D、直传方式 7.本讲提到,高达()的终端安全事件是由于配置不当造成。 A、15% B、35% C、65% D、95% 8.本讲认为知识产权制度本质特征是()。 A、反对垄断 B、带动创业 C、激励竞争 D、鼓励创新 9.本讲指出,以下不是促进基本 公共服务均等化的是()。 A、互联网+教育 B、互联网+医 疗 C、互联网+文化 D、互联网+工 业 10.根据()的不同,实验可以分 为定性实验、定量实验、结构分析实 验。 A、实验方式 B、实验在科研中 所起作用C、实验结果性质D、实验 场所 11.本讲认为,大数据的门槛包含 两个方面,首先是数量,其次是()。 A、实时性 B、复杂性 C、结构化 D、非结构化 12.2007年9月首届()在葡萄牙里 斯本召开。大会由作为欧盟轮值主席 国葡萄牙的科学技术及高等教育部 主办,由欧洲科学基金会和美国研究 诚信办公室共同组织。 A、世界科研诚信大会 B、世界学术诚信大会 C、世界科研技术大会 D、诺贝尔颁奖大会 13.()是2001年7月15日发现 的网络蠕虫病毒,感染非常厉害,能 够将网络蠕虫、计算机病毒、木马程 序合为一体,控制你的计算机权限, 为所欲为。 A、求职信病毒 B、熊猫烧香病 毒 C、红色代码病毒 D、逻辑炸弹 14.本讲提到,()是创新的基础。 A、技术 B、资本 C、人才 D、知 识 15.知识产权保护中需要多方协 作,()除外。 A、普通老百姓 B、国家 C、单位 D、科研人员 17.世界知识产权日是每年的()。 A、4月23日 B、4月24日 C、4月25日 D、4月26日 18.2009年教育部在《关于严肃 处理高等学校学术不端行为的通知》 中采用列举的方式将学术不端行为 细化为针对所有研究领域的()大类行 为。 A、3 B、5 C、7 D、9 19.美国公民没有以下哪个证件 ()。 A、护照 B、驾驶证 C、身份证 D、社会保障号 20.关于学术期刊下列说法正确 的是()。 A、学术期刊要求刊发的都是第 一手资料B、学术期刊不要求原发 C、在选择期刊时没有固定的套 式 D、对论文的专业性没有限制 22.知识产权的经济价值(),其 遭遇的侵权程度()。 A、越大,越低 B、越大,越高 C、越小,越高 D、越大,不变 23.()是一项用来表述课题研究 进展及结果的报告形式。 A、开题报告 B、文献综述 C、课题报告 D、序论 25.1998年,()发布《电子出版 物管理暂行规定》。

过去进行时、when和while引导时 间状语从句的区别

过去进行时过去进行时表示过去某一时刻或者某段时间正在进行或发生的动作,常和表过去的时间状语连用,如: 1. I was doing my homework at this time yesterday. 昨天的这个时候我正在做作业。 2. They were waiting for you yesterday. 他们昨天一直在等你。 3. He was cooking in the kitchen at 12 o'clock yesterday. 昨天12点,他正在厨房烧饭。 过去进行时的构成: 肯定形式:主语+was/were+V-ing 否定形式:主语+was not (wasn't)/were not (weren't)+V-ing 疑问形式:Was/Were+主语+V-ing。 基本用法: 1. 过去进行时表示过去某一段时间或某一时刻正在进行的动作。常与之连用的时间状语有,at that time/moment, (at) this time yesterday (last night/Sunday/week…), at+点钟+yesterday (last night / Sunday…),when sb. did sth.等时间状语从句,如: 1)What were you doing at 7p.m. yesterday? 昨天晚上七点你在干什么? 2)I first met Mary three years ago. She was working at a radio shop at the time. 我第一次遇到玛丽是在三年前,当时她在一家无线电商店工作。 3)I was cooking when she knocked at the door. 她敲门时我正在做饭。 2. when后通常用表示暂短性动词,while后通常用表示持续性动词,而while所引导的状语从句中,谓语动词常用进行时态,如: When the car exploded I was walking past it. = While I was walking past the car it exploded. 3. when用作并列连词时,主句常用进行时态,从句则用一般过去时,表示主句动作发生的过程中,另一个意想不到的动作发生了。如: I was walking in the street when someone called me. 我正在街上走时突然有人喊我。 4. when作并列连词,表示“(这时)突然”之意时,第一个并列分句用过去进行时,when引导的并列分句用一般过去时。如:

完善知识管理理论构建知识共享系统_王玉晶

完善知识管理理论构建知识共享系统 王玉晶 【摘 要】知识共享是知识管理的一个重要组成部分。本文从知识管理的概念入手,指出了知识共享在知识管理中的重要性和特殊地位,分析了企业中进行知识共享存在的障碍与不利因素,从建立知识共享技术支撑体系、构建知识共享组织结构、营造知识共享文化、完善知识共享激励机制等方面深入探讨了如何提高企业知识共享程度,加强企业竞争力。 【关键词】知识管理 知识共享 体系 Abstract:Kno wledge sharing is an important component of the kno wledge management.This paper obtained from the kno wledge management concept,has pointed out the importance and the special status of knowledge sharing in the knowledge manage ment,and analyzed the barrier and th e disadvantage factor of knowledge sharing in the enterprise.This article pointed out that the knowledge sharing should be established technical support system,built knowledge sharing structure,created a kno wledge sharing culture,and improved knowledge sharing incentive mechanism. Key words:kno wledge sharing knowledge management system 当今社会,企业竞争力已不局限于仅仅依赖其规模、信息和技术,而更注重其创新和应变能力。知识管理就是在知识经济大背景下,以当代信息技术为依托,着力于知识的开发和利用、积累和创新,帮助人们共享信息,进而对扩大了的知识资本进行有效运营,最终通过集体智慧提高企业创新和应变能力的一种全新的信息管理理论与方法。而创新本身,无论是技术创新还是管理创新,其实质就是一种新知识的创造,它要求企业内部各个部门之间以及各员工之间,企业内部与外部之间进行广泛的知识交流与共享。所以知识共享一直是知识管理的一个重要组成部分。它是发挥知识的效用,实现知识价值的必由之路,是实现知识共享的前提,是走向知识共享世界的先导。理解知识共享的内涵,分析企业知识共享存在的障碍与不利因素并提出解决的方案,对完善组织中的知识共享机制具有重要意义。 1 知识共享的含义 关于知识共享的概念,由于标准、角度的不同,学者对知识共享的界定也有所不同。知识共享是指个体知识、组织知识通过各种交流手段为组织中其他成员所共享。同时,通过知识创新,实现组织的知识增值。对知识共享的认识应从知识共享的对象———知识 内容,知识共享的手段———知识网络、会议及团队学习,知识共享的主体———个人、团队及组织这三个层面考虑。其中,人是知识共享的主体,文化是知识共享的背景,信息技术是知识共享的重要工具。 2 知识共享障碍因素分析 2.1 技术应用障碍 知识管理作为一种全新的管理模式,如果缺乏应有的技术支撑,将难以发挥其应有的效力,最终失之于形式,流于空泛。许多企业物质技术基础薄弱,缺少有效的计算机网络和通信系统,很多员工不知道到哪里去寻找所需要的知识。存在于员工头脑中的隐性知识难以明确的被他人观察、了解,也无法用言语表达或者只能用言语进行部分表达。因此,薄弱的技术基础不能够帮助人们跨越时间、空间和知识的数量及质量的限制,从而不能为有效地实现知识共享提供强有力的技术支持。 2.2 组织结构缺陷 传统的组织结构管理层次多,信息传递速度缓慢,信息衰退或信息失真现象非常严重,员工之间没有超越命令以外的直接接触和交流,无法实现面对面的互动式交流,无法突破岗位对个人的约束。多层管理结构森严,越级的知识传递几乎不可能实现;员工之间 27 RESEARCH ES I N LIBRARY SCIENCE DOI:10.15941/https://www.360docs.net/doc/7713588297.html, ki.issn1001-0424.2008.05.027