Hardware support for flexible distributed shared memory

Hardware Support for Flexible Distributed Shared Memory

Steven K. Reinhardt,1 Robert W. Pfile,2 and David A. Wood3

Abstract

Workstation-based parallel systems are attractive due to their low cost and competitive uniprocessor performance. However, supporting a cache-coherent global address space on these systems involves signifi-cant overheads. We examine two approaches to coping with these overheads. First, DSM-specific hardware can be added to the off-the-shelf component base to reduce overheads. Second, application-specific coher-ence protocols can avoid some overheads by exploiting programmer (or compiler) knowledge of an applica-tion’s communication patterns. To explore the interaction between these approaches, we simulated four designs that add DSM acceleration hardware to a collection of off-the-shelf workstation nodes. Three of the designs support user-level software coherence protocols, enabling application-specific protocol optimiza-tions. To verify the feasibility of our hardware approach, we constructed a prototype of the simplest design.

Measured speedups from the prototype match simulation results closely.

We find that even with aggressive DSM hardware support, custom protocols can provide significant speedups for some applications. In addition, the custom protocols are generally effective at reducing the impact of other overheads, including those due to less aggressive hardware support and larger network laten-cies. However, for three of our benchmarks, the additional hardware acceleration provided by our most aggressive design avoids the need to develop more efficient custom protocols.

Index terms:parallel systems, distributed shared memory, cache coherence protocols, fine-grain cache coher-ence, coherence protocol optimization, workstation clusters.

1 Introduction

Technological and economic trends make it increasingly cost-effective to assemble distributed-memory parallel systems from off-the-shelf workstations and networks [3]. Workstations (or, equivalently, high-end personal computers) use the same high-performance microprocessors found in larger systems—but at a far lower cost per processor, thanks to their much greater market volume. More recently, vendors have begun to advertise high-bandwidth, low-latency switched networks targeted specifically at the cluster market. We expect this trend to continue and for the bandwidth and latency characteristics of cluster networks to approach those of dedicated parallel system interconnects [8, 19].

In spite of these trends, custom parallel systems still hold an advantage over off-the-shelf clus-ters of workstations in their ability to provide a low-overhead, globally coherent shared address space. Custom-built distributed shared memory (DSM) systems such as MIT’s Alewife [2], Stan-

1. EECS Dept., The University of Michigan, 1301 Beal Ave., Ann Arbor, MI 48109-212

2. stever@https://www.360docs.net/doc/0516413348.html,.

2. Yago Systems, 795 Vaqueros Ave., Sunnyvale, CA 94086. pfile@https://www.360docs.net/doc/0516413348.html,.

3. CS Dept., University of Wisconsin–Madison, 1210 W. Dayton St., Madison, WI 53706-1685. david@https://www.360docs.net/doc/0516413348.html,. Copyright ? 1998 IEEE. Published in IEEE Transactions on Computers, vol. 47, no. 10, Oct. 1998. Personal use of this material is permitted.

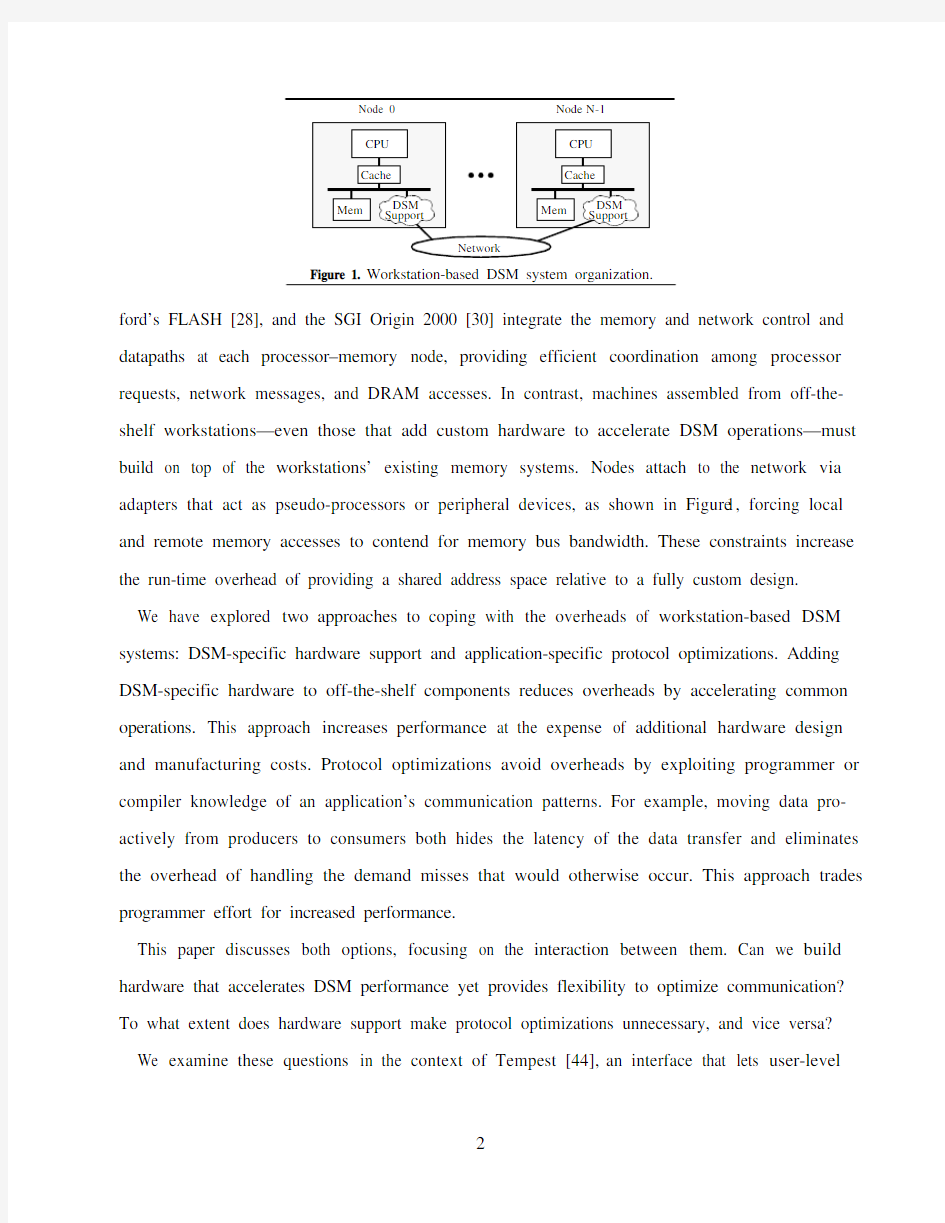

ford’s FLASH [28], and the SGI Origin 2000 [30] integrate the memory and network control and datapaths at each processor–memory node, providing efficient coordination among processor requests, network messages, and DRAM accesses. In contrast, machines assembled from off-the-shelf workstations—even those that add custom hardware to accelerate DSM operations—must build on top of the workstations’ existing memory systems. Nodes attach to the network via adapters that act as pseudo-processors or peripheral devices, as shown in Figure 1, forcing local and remote memory accesses to contend for memory bus bandwidth. These constraints increase the run-time overhead of providing a shared address space relative to a fully custom design.

We have explored two approaches to coping with the overheads of workstation-based DSM systems: DSM-specific hardware support and application-specific protocol optimizations. Adding DSM-specific hardware to off-the-shelf components reduces overheads by accelerating common operations. This approach increases performance at the expense of additional hardware design and manufacturing costs. Protocol optimizations avoid overheads by exploiting programmer or compiler knowledge of an application’s communication patterns. For example, moving data pro-actively from producers to consumers both hides the latency of the data transfer and eliminates the overhead of handling the demand misses that would otherwise occur. This approach trades programmer effort for increased performance.

This paper discusses both options, focusing on the interaction between them. Can we build hardware that accelerates DSM performance yet provides flexibility to optimize communication?To what extent does hardware support make protocol optimizations unnecessary, and vice versa?

We examine these questions in the context of Tempest [44], an interface that lets user-level

Node N-1Node 0

Figure 1. Workstation-based DSM system organization.

(unprivileged) software manage coherence at a fine granularity within a distributed shared address space. By default, applications use a standard software protocol that provides sequentially consistent shared memory, the consistency model generally preferred by most programmers for its simplicity [24]. However, for performance-critical data structures and execution phases, this default protocol may be replaced with arbitrary, potentially application-specific custom protocols, much like an expert programmer might recode a small performance-critical function in assembly language.1 Although there are many approaches to optimizing shared-memory communication, Tempest uniquely allows programmers and compilers to adapt or reimplement the shared-memory abstraction to match a specific data structure or sharing pattern. This flexibility gives direct mes-sage-level control over communication without abandoning traditional shared-memory features such as pointer-based data structures and dynamic, transparent data replication.

Three Tempest-compatible system designs—Typhoon, Typhoon-1, and Typhoon-0—demon-strate one general approach to adding flexible DSM hardware to a cluster of workstations. These designs use logically similar components, but differ in the extent to which these components are integrated and customized for DSM operations. Using simulation, we compare the performance of both standard and custom-protocol versions of our benchmarks on these systems against a baseline hardware-supported DSM design (similar to Simple COMA [21]) that does not allow user protocol customization. While custom protocols provide less relative improvement over stan-dard shared memory on more aggressive hardware, two benchmarks still show dramatic gains—86% and 384%—on the most aggressive Tempest system (Typhoon), outperforming the Simple COMA system by nearly the same amount. Custom protocols also effectively hide the higher overheads of less aggressive hardware; they decrease the performance gap between Typhoon-1 and Typhoon from 222% to 11% and the gap between Typhoon-0 and Typhoon from 427% to 22%. We expand on previous results [44, 45] by examining the effect of network latency and pro-tocol-processor speed; we find that custom protocols are generally effective at reducing perfor-

1. Similarly, just as past improvements in compiler technology have reduced the need to code in assembly language for performance, we hope that future compilers will target Tempest to provide similar levels of optimization without expert programmer involvement [11].

mance sensitivity to these parameters as well. However, Typhoon executes the standard versions of three of our benchmarks nearly as quickly as the custom-protocol versions, and faster than the custom-protocol versions on the less aggressive systems. In these cases, the additional hardware acceleration avoids the need to develop more efficient custom protocols.

To demonstrate the feasibility of our designs, we built a prototype of the simplest system (Typhoon-0). We describe this prototype briefly and report performance measurements. Simula-tion results match measured prototype speedups within 6%.

The remainder of the paper is organized as follows. The next section reviews the Tempest model for fine-grain distributed shared memory, then discusses the benchmarks used in this study and the protocol optimizations that were applied to improve their performance. Section3 describes the three Tempest designs, while Section4 reports on their simulated performance. Section5 reports on the Typhoon-0 prototype. Section6 discusses related work, and Section7 summarizes our conclusions and indicates directions for future work.

2 The Tempest interface

The Tempest interface provides a set of primitive mechanisms that allow user-level software—e.g., compilers and programmers—to construct coherent shared address spaces on distributed-memory systems [44]. Tempest’s flexibility derives from its separation of system-provided mech-anisms from user-provided coherence policies (protocols). Tempest also provides portability by presenting mechanisms in an implementation-independent fashion. This work exploits Tempest’s portability by using optimized applications originally developed for an all-software implementa-tion on a generic message-passing machine [50] to compare the performance of three different hardware-accelerated platforms.

Tempest provides four mechanisms—two types of messaging (active messages and virtual channels), virtual memory management, and fine-grain memory access control—to a set of con-ventional processes, one per processor–memory node, executing a common program text. Tem-pest’s active messages, derived from the Berkeley model [55], allow the sender to specify a handler function that executes on arrival at the destination node. This user-supplied handler con-

sumes the message’s data—e.g., by depositing it in memory—and (if necessary) signals its arrival. Virtual channels implement memory-to-memory communication, potentially providing higher bandwidth for longer messages. Virtual memory management allows users to manage a portion of their virtual address space on each node. Users achieve global shared addressing by mapping the same virtual page into physical memory on multiple nodes, much like conventional shared virtual memory systems [32]. Fine-grain memory access control helps maintain coherence between these multiple copies by allowing users to tag small (e.g., 32-byte), aligned memory blocks as Invalid, ReadOnly, or Writable. Any memory access that conflicts with the referenced block’s tag—i.e., any access to an Invalid block or a store to a ReadOnly block—suspends the com-putation and invokes a user-level handler function. Users may specify different sets of handlers for different virtual memory pages; thus multiple protocols may coexist peacefully, each manag-ing a distinct set of shared pages. A complete specification of Tempest is available in a technical report [40].

2.1 Standard shared memory using Tempest

Tempest’s mechanisms are sufficient to develop protocol software that transparently supports standard shared-memory applications. Stache, the default Tempest protocol included with the standard Tempest run-time library, is an example of such a protocol. The Stache library includes a memory allocation function, a page fault handler, and a set of access fault and active message handlers.

The memory allocation function, called directly by the application, allocates shared pages in the user-managed portion of the virtual address space. Stache assigns each page to a home node, which provides physical memory for the page’s primary copy and manages coherence for the page’s blocks. Processors on the home node access the primary copy directly via the global virtual address. Applications can specify a page’s home node explicitly or use a round-robin or first-touch policy. For benchmarks that have an explicit serial initialization phase, the first-touch pol-icy migrates the page to the node that first references it during the parallel phase [33].

Non-home nodes allocate memory for shared pages on demand via the page fault handler. Ini-

tially, these pages contain no valid data, so all their blocks are tagged Invalid. A reference to one of these Invalid blocks invokes an access fault handler that sends an active message requesting the block’s data to the home node. The message handler on the home node performs any necessary protocol actions, then responds with the data. Finally, the response message’s handler on the requesting node writes the data into the stache page, modifies the access tag, and resumes the local computation.

The Stache handlers maintain a sequentially consistent shared-memory model using a single-writer invalidation-based full-map protocol. A compile-time parameter sets the coherence granu-larity, which can be any power-of-two multiple of the target platform’s access control block size. In practice, we maintain several precompiled versions of the Stache library supporting a range of block sizes.

2.2 Optimizing communication using Tempest

The Stache protocol effectively supports applications written to a standard, sequentially consis-tent shared-memory programming model. However, the real power of Tempest lies in the oppor-tunity to optimize performance using customized coherence protocols tailored to specific data structures and specific phases within an application. Tempest also aids the optimization process itself by enabling extended coherence protocols that collect profiling information [34, 57]. This information drives high-level tools that help programmers identify and understand performance bottlenecks that may benefit from custom protocols. Unlike systems that provide relaxed memory consistency models [1, 18], the effects of these custom protocols are limited to programmer-spec-ified memory regions and execution phases; the remainder of the program sees a conventional sequentially consistent shared memory.

At its simplest, Tempest’s flexibility lets users select from a menu of available shared-memory protocols, as in Munin [10]. Programmers or compilers can further optimize performance by developing custom, application-specific protocols that optimize coherence traffic based on knowledge of an application’s synchronization and sharing patterns. For example, a programmer might exploit a producer-consumer pattern in some inner loop, employing a protocol that batches

the producer’s updates into highly efficient bulk messages. Such protocols can often use applica-tion-specific knowledge to identify updated values without run-time overhead. This optimization does not change the shared-memory view of the data, but only the manner in which the producer and consumer copies are kept coherent. Thus, custom protocols typically do not require non-triv-ial modification of the original shared-memory source code. The custom protocols themselves currently require significant development effort; however, we seek to remedy this situation through software reuse, high-level tools for protocol development [12], and automatic compiler application of optimized protocols [11].

Although Tempest custom protocols use message passing to communicate between nodes, they directly support the higher-level shared-memory abstraction. In contrast, other systems that seek to integrate message passing and shared memory treat user-level message passing as a comple-mentary alternative to—rather than a fundamental building block for—shared-memory communi-cation [22, 27, 56]. To optimize communication in these systems, critical portions of the program must be rewritten in a message-passing style. Of course, if desired, Tempest programmers can also dispense with shared memory and use messages directly—for example, to implement syn-chronization primitives.

This section briefly describes the Tempest optimizations applied to six scientific benchmarks—Appbt, Barnes, DSMC, EM3D, moldyn, and unstructured—both to indicate typical optimizations and to provide background for following sections where these benchmarks are used to evaluate system designs. In each case, the programmer started with an optimized, standard shared-memory parallel program and further improved its communication behavior by developing custom proto-cols for critical data structures. Again, although these changes were performed manually at the lowest level, we expect future development tools to aid or automate this process. The original papers reporting on these optimizations (Falsafi et al. [16] for Appbt, Barnes, and EM3D, and Mukherjee et al. [37] for DSMC, moldyn, and unstructured) detail the applications and the evolu-tionary optimization process.

Appbt is a computational fluid dynamics code from the NAS Parallel Benchmarks [4], parallel-

ized by Burger and Mehta [9]. Each processor works on a subcube of a three-dimensional matrix, sharing values along the subcube faces with its neighbors. In the original version, processors spin on counters to determine when each column of a neighbor’s face is available, then fetch the data via demand misses. The Tempest-optimized version combines the communication and synchroni-zation for each column into a single producer-initiated message. Approximately 100 lines of code were added or modified, a small fraction of the roughly 7,000 lines in the original program. Most changes were repetitive replacements of synchronization statements. The custom protocol itself required about 750 lines of C.

Barnes, from the SPLASH benchmark suite [51], simulates the evolution of an N-body gravita-tional system in discrete time steps. The primary data structure is an oct-tree whose interior nodes represent regions of three-dimensional space; the leaves are the bodies located in the region repre-sented by their parent node. Computation alternates between two phases: rebuilding the tree to reflect new body positions, and traversing the tree to calculate the forces on each body and update its position. The standard shared-memory version we use incorporates several optimizations not in the original SPLASH code [16]. The Tempest-optimized version splits the body structure into three parts and applies a different protocol to each: read-only fields (e.g., the body’s mass) use the default protocol, fields accessed only by the current owner use a custom migratory protocol, and the read-write position field uses a custom update protocol. Because the sharing pattern is dynamic, the updates are performed indirectly through the home node. Because the body data structure is split into multiple structures, source changes for Barnes were more widespread than for the other applications, making it difficult to quantify their scope.

DSMC simulates colliding gas particles in a three-dimensional space. Each processor is respon-sible for the particles within a fixed region of space. When a particle moves between regions, the source processor writes the particle’s data into a buffer associated with the destination processor. Because the original version of the program performs well, we applied only one simple Tempest optimization: processors write particles into the destination processor’s buffer using explicit mes-sages instead of shared-memory writes. In addition to eliminating coherence overhead for the

buffers, the atomic message handlers allow nodes to issue their writes concurrently without barri-ers or locking. This optimization modified only two lines in the original program. The remote-write protocol requires approximately 150 lines of code.

EM3D models electromagnetic wave propagation through three-dimensional objects by itera-tively updating the values at each node of a graph as a function of its neighbors’ values [13]. Each processor owns a contiguous subset of the graph nodes and updates only the nodes it owns. Where a graph edge connects nodes owned by different processors, each processor must fetch new values for the non-local nodes on every iteration. The standard shared memory version amortizes this overhead by placing multiple values in a cache block. This optimization modifies the graph node data structure, replacing the embedded value with a pointer into a packed value array. The Tem-pest version uses a custom deferred update protocol: each node sends all of the updated values required by a particular consumer in a single message over a virtual channel. The set of updates is static, but input dependent; the protocol determines the communication pattern by running a mod-ified version of the Stache protocol in the first iteration. Graph nodes are fetched on demand, but the protocol records the addresses and processors involved. The second and subsequent iterations use the optimized protocol. Interfacing this custom protocol to the original program changed five lines of code. The custom protocol itself comprises over 1,000 lines of C. However, this value overstates the protocol’s complexity somewhat; this code represents one of the earliest custom protocols, and was written without the aid of experience or protocol development tools.

Moldyn models force interactions between molecules. There are two main computation steps: the first identifies molecule pairs that interact significantly, and the second iterates over these pairs to compute forces and update the involved molecules’ state. Most of the communication occurs in the second step, where each processor may generate a number of updates for a given molecule. The original version accumulates each processor’s updates in a local array, then merges the updates in a synchronous, pipelined reduction phase. The Tempest version takes this optimiza-tion to its logical conclusion, using virtual channels to move array sections from node to node. This optimization changes only one line of the original code. The protocol itself involves less than

200 lines of C.

Unstructured iterates over a static, input-dependent mesh to calculate forces on a rigid body. The code partitions the mesh nodes across the processors. Unlike EM3D, processors may update as well as examine the values of mesh nodes owned by other processors. As in moldyn, the origi-nal version accumulates each processor’s updates locally, then merges the updates via a separate reduction step. However, this reduction is less efficient than in moldyn because each processor updates a small fraction of the mesh nodes and each update involves only a small amount of com-putation. The custom protocol sets up virtual channels connecting processors that share edges and sends updates across these channels. Each processor then performs the reduction for its nodes locally. This optimization added five lines in the body of the program. The reduction protocol involves just over six hundred lines of C. A second custom protocol, similar to the one used in EM3D, propagates new node values from the owner to the referencing processors. This optimiza-tion modified 12 lines in the body of the program. The protocol itself is less than 600 lines of C.

2.3 Summary

By allowing user-level software to manage coherence, Tempest enables aggressive communi-cation optimization within a shared address space. These optimizations typically require very few changes to the body of the application, because shared global data structures—for example, the pointer-based graph in EM3D—can be left intact.1Unfortunately, the optimizations described here involved significant protocol development effort. We believe that the magnitude of these efforts is due to our lack of experience and the intentionally low level of the Tempest interface, and will be dramatically reduced through software reuse, protocol development tools [12], and compiler support for protocol generation and selection [5, 11, 15]. Nevertheless, because Tempest enables a nearly unlimited spectrum of communication optimizations, it provides a good environ-ment for investigating the interaction of these optimizations with hardware support.

3 Hardware support for Tempest

To examine the impact of hardware support on both standard and Tempest-optimized shared-1. The leaf structure in Barnes is a notable exception, where the desire to apply different protocols to the structure’s fields conflicts with Tempest’s page-granularity protocol binding.

memory applications, we compare the performance of three system designs that add varying lev-els of hardware acceleration to a cluster of workstations. This section describes these three sys-tems briefly. The following section (Section4) analyzes the simulated execution of the benchmarks of Section2.2 on these systems.

The three systems we study—Typhoon, Typhoon-1, and Typhoon-0—share the organization depicted in Figure1. The DSM support hardware, represented by the “cloud” in Figure1, consists of three components: logic implementing Tempest’s fine-grain access control, a network inter-face, and a processor to execute protocol code (message and access fault handlers). Tempest’s vir-tual memory management is supported by address translation hardware on the main CPU.

All three systems use a custom device at each node to implement some of these components; they differ in the number of components integrated into this device, as depicted by the shaded rectangles in Figure2. Components not included in the custom device are implemented using off-the-shelf parts. These different integration strategies represent different trade-offs between perfor-mance and hardware design simplicity. Integrating a component into a custom device increases the device’s design time and design and manufacturing costs.1 However, separating components into discrete devices significantly increases the overhead of communicating between them. Typhoon provides the highest performance—at the highest cost—by integrating all three compo-nents on a single custom device.2 Typhoon-1 replaces Typhoon’s integrated processor with an off-the-shelf CPU, leaving the network interface and access control logic together in custom hard-ware. Typhoon-0 achieves the simplest design by splitting all three components across separate devices, two of which—the protocol processor and the network interface—can be purchased off the shelf. A relatively simple access control device is Typhoon-0’s only custom component. Typhoon-0’s simplicity allowed us to construct a prototype implementation, which we report on in Section5.

1. In sufficient volume, the increase in the custom component’s cost may be offset by the system-level reduction in package count and board space.

2. Our focus on workstation-based systems precludes integrating components, such as the memory controller, that are already an integral part of the base workstation system.

The rest of this section describes the systems more fully. The first subsection covers common features; following subsections describe Typhoon, Typhoon-1, and Typhoon-0 in turn. Additional details can be found in previous publications [41, 44, 45].

3.1 Common features

To focus on the impact of the organizational differences in our designs, we assume similar tech-nology for the three common components—the protocol processor, network interface, and access control logic.

The protocol processor is a general-purpose CPU. For convenience, the protocol processor uses the same instruction set as the primary (computation) processor. User-level protocol software run-ning on the processor communicates directly with the access control logic and network interface via memory-mapped registers.

The network interface is a pair of memory-mapped hardware queues, one in each direction.Sending a message requires writing a header word indicating the destination node and message length, followed by the message data, into the send queue. A message is received by reading words out of the receive queue. A separate signal indicates when a message is waiting at the head of the receive queue. Credit-based flow control prevents deadlock on the hardware queues. To relieve protocol software from dealing with flow control, the run-time library buffers messages in main memory when the network overflows.

Tempest’s active messages map directly to the interface: the first word of each message is used for the receive handler’s program counter, and the handler reads the remainder of the message from the hardware queue. The messaging library implements Tempest’s virtual channels in a

Typhoon

Typhoon-1Typhoon-0

Figure 2. Component integration diagram.

protocol

processor

network

interface access control

straightforward fashion on top of the active message layer.

The access control logic monitors memory accesses by snooping on the memory bus and enforces access control semantics using the signals intended for local bus-based coherence. On every bus transaction caused by a processor cache miss, the device checks its on-board tag store in parallel with the main memory access. If the access conflicts with the tag, the device inhibits the memory controller’s response (as if to perform a cache-to-cache transfer) and suspends the access. If the access does not conflict with the tag, the device allows the memory controller to respond. In the case of a read access to a ReadOnly block, the device asserts the “shared” bus sig-nal to force the processor cache to load the block in a non-exclusive state. A subsequent write to the block will cause the processor to initiate an invalidation operation on the bus, which the device can then detect and suspend.

Once a block is loaded into the processor’s cache, accesses that hit cannot be snooped; these hits must be guaranteed not to conflict with the access tag. For this reason, tag changes that decrease the accessibility of a block (e.g., from Writable or ReadOnly to Invalid) require a bus transaction to invalidate any copies that may be in the hardware caches. When a block is initially Writable, the bus transaction also retrieves an up-to-date copy, because the data could be modified in a hardware cache.

A shadow space [7, 22, 54] allows direct manipulation of access control tags from Tempest’s user-level protocol software. A shadow space is a physical address range as large as, and at a fixed offset from, the machine’s physical memory address range. Accesses to a shadow-space location are handled by the access control logic and interpreted as operations on the corresponding real memory location. The operating system allows a process to manipulate access control tags for its own data pages by mapping the corresponding shadow space pages into the process’s virtual space. These shadow mappings prevent unauthorized accesses and implicitly translate authorized accesses to physical addresses.

3.2 Typhoon

Typhoon [44] combines the network interface, access control logic, and protocol processor on a

Figure 3. A Typhoon node [44].

single device (see Figure3). Typhoon’s full integration enables two categories of optimizations: those that enhance communication between the processor and other components, and those that take advantage of network interface/access control synergy.

Low-overhead, customized communication paths between the processor and the other compo-nents enable efficient invocation and execution of protocol handlers. Event dispatch logic pro-vides memory-mapped registers containing the program counter and arguments for the next handler to be executed, transparently arbitrating between access fault and message handlers. If no events are pending, the dispatch register defers responding to reads, stalling the processor until an event occurs. While a handler executes, it enjoys single-cycle access to access control and net-work interface registers.

The access control logic combines tag enforcement and fault dispatch using a reverse transla-tion lookaside buffer (RTLB). The RTLB caches per-page entries indexed by physical page num-ber. Each entry contains the page’s access tags, fault handler addresses, protocol data pointer, and the corresponding virtual page number. Each bus transaction is checked against the tags stored in the RTLB. On an access fault, the RTLB latches the appropriate handler address, the data pointer, and the virtual address of the faulting access. The RTLB derives its name from the “reverse”translation of physical bus addresses to the virtual addresses required by the user-level access

fault handlers.

Integration of the network interface and access control logic allows efficient implementation of some common DSM operations. For example, in a single-writer protocol, a writing node must atomically give up write access and send the modified data to the next reader or writer. In this case, the Typhoon device issues a single read-for-ownership bus transaction that both invalidates cached copies (as required to downgrade the access tag) and fetches the current copy into the net-work send queue. Typhoon also accelerates receive operations using the block buffer(BB in Figure3). The block buffer has address tags, like a cache, and participates in the local bus coher-ence protocol. When receiving data for a previously Invalid block, the block buffer stores the arriving data, sets the address tag to match the destination block’s address, and sets the local pro-tocol state to indicate exclusive ownership. (Note that the block buffer cannot safely assert owner-ship without the assurance that no other local cached copies exist, which comes from its knowledge that the block was previously tagged Invalid.) Until the block buffer replaces the data, it will satisfy subsequent requests for the block (possibly including the retry of the faulting access that initiated the block fetch) as cache-to-cache transfers without writing the data to main mem-ory. The block transfer unit manages these combined block operations, which are invoked via writes to the shadow space.

3.3 Typhoon-1: off-the-shelf protocol processor

Typhoon-1 simplifies the Typhoon design by replacing Typhoon’s integrated protocol proces-sor with a general-purpose off-the-shelf CPU. This section discusses our motivation and methods for this separation of components. Because Typhoon-0 also uses an off-the-shelf protocol proces-sor, the contents of this section apply to that design as well (unless stated otherwise).

In addition to reducing the custom device’s design complexity and manufacturing cost, using a general-purpose CPU for protocol processing has two advantages. First, because the integrated processor will face die size constraints and incur design delay due to component integration and testing, an off-the-shelf processor will almost certainly have higher raw performance. (However, the integrated processor’s effective performance can be increased by optimizing the microarchi-

tecture for protocol handling, as in FLASH [28].) Section4.4 investigates the performance impact of a slower integrated processor. Second, a general-purpose protocol processor may be used for the application’s primary computation when there are no protocol events to handle. Similarly, any processor on a symmetric multiprocessor node may serve as the protocol processor. Falsafi and Wood [17] show that dynamically scheduling protocol processing tasks across all available pro-cessors is often more efficient than dedicating a protocol processor. Both Typhoon-1 and Typhoon-0 are capable of supporting this dynamic model. However, we assume a dedicated pro-tocol processor in this study to provide a more direct performance comparison with Typhoon.

Of course, the primary drawback to a separate, off-the-shelf protocol processor is the increased overhead of communicating with the network interface and access control logic. Accesses to these other components must cross the memory bus, which is both much slower than an on-chip inter-connect and subject to contention. This change in connectivity relative to Typhoon led to two changes in the interface between the processor and the other components.

First, we use a novel cacheable control register technique to accelerate dispatch of event han-dlers. A cacheable control register is a device register accessed using the local bus cache coher-ence protocol. When the register is read, the device responds with a cache block of data. Whenever the contents of the register change, the device issues a bus transaction to invalidate the cached copy. Both Typhoon-1 and Typhoon-0 use a cacheable control register called the dispatch register to transfer event information to the protocol processor. Because the dispatch register is a full cache block, it supplies both event notification and all available event parameters (e.g., the address of a block access fault) in a single bus transaction. Because the dispatch register’s con-tents are stored in the protocol processor’s cache until invalidated by the device, the processor can poll continuously while consuming bus bandwidth only when an event occurs.

Second, because the overhead of sending an event notification across the memory bus domi-nates handler invocation latency, we streamline the access control logic—shifting some access fault processing to software—without incurring a significant performance penalty. Specifically, the access control hardware maintains only the two-bit access tags for all of physical memory in

Figure 4. A Typhoon-1 node.

an on-board SRAM array. One megabyte of SRAM is sufficient to tag 128Mbytes of physical memory at a 32-byte granularity. A software-managed inverted page table in main memory stores the other per-page information found in each Typhoon RTLB entry: the virtual page number, fault handler addresses, and protocol data pointer. On a block access fault, software obtains the physi-cal page number from the hardware, indexes the inverted page table, determines the handler address, and forms the arguments. Because all fault information is transferred by the cacheable dispatch register in a single bus operation, and because the hardware formats this data to eliminate software shifting and masking, only eight instructions are needed from the detection of a block access fault to the invocation of the appropriate Tempest user handler. Assuming cache hits, these eight instructions require ten cycles on the Ross HyperSPARC.

In all other respects—other than the performance of inter-chip communication—the combina-tion of Typhoon-1’s protocol processor and custom device, shown in Figure4, behave as the inte-grated Typhoon device. As in Typhoon, Typhoon-1 leverages access control/network interface integration, in cooperation with the block transfer unit and block buffer, to support combined block transfer and access tag change operations.

3.4 Typhoon-0: off-the-shelf network interface

Typhoon-0 further simplifies Typhoon-1’s custom hardware device by replacing the integrated

network interface with a separate, off-the-shelf network adapter, as shown in Figure 5. Just as Typhoon-1 leverages high-performance general-purpose CPUs, the Typhoon-0 design also capi-talizes on emerging low-latency commercial networks such as Myricom’s Myrinet [8] and DEC’s Memory Channel [19]. The custom device contains only fine-grain access control logic. Section 5describes an FPGA-based prototype of Typhoon-0 that demonstrates the feasibility and relative simplicity of this design.

Of course, the lack of network interface/access control integration increases Typhoon-0’s over-heads relative to Typhoon and Typhoon-1. These increases occur primarily in the areas of handler dispatch and combined block transfer/access tag change operations.

Although the custom device’s dispatch register provides all the information required to invoke an access fault handler, it cannot do the same for message handlers. To limit the protocol proces-sor’s polling check to a single cacheable location, a dispatch register status bit reflects the state of the network interface’s message arrival interrupt line, as shown in Figure 5. Unlike Typhoon and Typhoon-1, however, the processor must access the NI explicitly to fetch the message handler’s program counter.

The combined block transfer and access tag change operations—handled by the block transfer unit and block buffer in Typhoon and Typhoon-1—require software sequencing in Typhoon-0.These operations must be performed carefully (and awkwardly) to avoid race conditions that lead to lost writes or undetected accesses to invalid data. For example, to revoke write access on a

Figure 5. A Typhoon-0 node.1Bus Interface

to memory bus

addr 2

data Cache

NI Cache AC

compute CPU

protocol CPU Mem Tag SRAM

Block Buffer Status Regs from NI to network

block and send the modified data, the processor first initiates the tag change via a write to the access control device’s shadow space. The device performs a read-invalidate bus transaction to invalidate cached copies and fetch the current version, which is copied into the device’s block buffer.1 The processor then synchronizes with the device, using an uncached load, to guarantee that the transaction has completed. Finally, the processor copies the contents of the block buffer to the network interface. Similarly, when receiving data for a previously Invalid block, the processor first writes the data to memory via an uncached alias which bypasses the tag check, then changes the access tag.

4 Performance evaluation

This section compares the performance of these three designs via simulation. We first describe the simulation parameters and methodology. Section4.1 presents results for a simple microbench-mark and a set of application macrobenchmarks. Section4.3 examines the impact of network latency on application performance, and Section4.4 examines the impact of integrating a lower-performance protocol processor in Typhoon.

The nodes of the simulated systems are based on the technology used for the Typhoon-0 proto-type. Nodes are based on the Sun SPARCStation20. Processors are modeled after the dual-issue Ross HyperSPARC [46] clocked at 200MHz with a 1Mbyte direct-mapped data cache. The instruction cache is not modeled; all instruction references are treated as hits.

A 50MHz MBus connects the processor(s), memory, access control and network interface devices within each node. The MBus is a 64-bit, multiplexed address/data bus that maintains coherence on 32-byte blocks using a MOESI protocol [52]. On a cache miss, main memory returns the critical doubleword 140ns (7 bus cycles or 28 CPU cycles) after the bus request is issued, with the remaining doublewords following in consecutive cycles. Miss detection, proces-sor/bus clock synchronization, and bus arbitration add 11-14 CPU cycles to the total miss latency. The simulation accounts fully for occupancy, contention, and arbitration delays; the model is suf-

1. Typhoon-0’s block buffer holds only data fetched by these read-invalidate transactions, so it does not have address tags as in Typhoon and Typhoon-1. Typhoon-0’s block buffer is implemented as a cacheable register, so the processor can read the entire contents in a single bus transaction.

ficiently detailed and accurate that the same simulator was used for initial functional design of the Typhoon-0 prototype’s access control device.

On a block access fault, the access control logic inhibits the memory controller and gives the requesting processor an MBus relinquish and retry response, forcing the processor to rearbitrate for the bus. The access control device masks the arbiter to keep the processor off the bus until the access can be completed [31]. Although this technique cannot be implemented on an unmodified SPARCstation20, its performance is representative of more recent systems which support deferred responses, either explicitly (like the Intel P6 [20]) or using a split-transaction bus. Timing parameters for the Typhoon-0 and Typhoon-1 access control devices are taken from the FPGA-based Typhoon-0 prototype implementation described in Section5. To equalize the com-parison with Typhoon-0 and Typhoon-1, which store all the access tags on the access control device, the Typhoon RTLB is assumed to be large enough to map all the active shared pages on each node. Because simulation limits the size of the data sets, it is unlikely that replacement over-heads due to a finite RTLB would affect the results significantly.

The network interface queues transfer up to 64 bits per cycle, at the bus clock rate in Typhoon-1 and Typhoon-0 and at the protocol processor clock rate in Typhoon. Each node may have at most four messages outstanding to each other node. The simulated Typhoon-0’s network interface is modeled after the CM-5 NI, with a message arrival signal that feeds the access control device’s dispatch register.

Network contention is modeled at the interfaces, but not internally. As a result, the network wire latency, measured from the transmission of the tail from the sending network interface to the arrival of the head at the receiving interface, is constant. To emphasize the performance impact of DSM support, the default latency is a fairly aggressive 0.5μs (100processor cycles). Although current off-the-shelf networks are typically slower, the last few years have seen rapid performance advances in commercial system-area networks [8, 19]. We believe that networks with this level of performance will be available in the near future. Section4.3 examines the overall performance impact of higher-latency networks.

step7与wincc flexible仿真

使用Wincc Flexible与PLCSIM进行联机调试是可行的,但是前提条件是安装Wincc Flexible时必须选择集成在Step7中,下面就介绍一下如何进行两者的通讯。 Step1:在Step7中建立一个项目,并编写需要的程序,如下图所示: 为了演示的方便,我们建立了一个起停程序,如下图所示: Step2:回到Simatic Manager中,在项目树中我们建立一个Simatic HMI Station的对象,如果Wincc Flexible已经被安装且在安装时选择集成在Step7中的话,系统会调用Wincc Flexible程序,如下图所示:

为方便演示,我们这里选择TP270 6寸的屏。 确定后系统需要加载一些程序,加载后的Simatic Manager界面如下图所示:

Step3:双击Simatic HMI Station下Wincc Flexible RT,如同在Wincc Flexible软件下一样的操作,进行画面的编辑与通讯的连接的设定,如果您安装的Wincc Flexible软件为多语言版本,那么通过上述步骤建立而运行的Wincc Flexible界面就会形成英语版,请在打开的Wincc Flexible软件菜单Options-〉Settings……中设置如下图所示即可。 将项目树下通讯,连接设置成如下图所示: 根据我们先前编写的起停程序,这里只需要使用两个M变量与一个Q变量即可。将通讯,变量设置成如下图所示:

将画面连接变量,根据本文演示制作如下画面: 现在我们就完成了基本的步骤。

Step4:模拟演示,运行PLCSIM,并下载先前完成的程序。 建立M区以及Q区模拟,试运行,证实Step7程序没有出错。 接下来在Wincc Flexible中启动运行系统(如果不需要与PLCSIM联机调试,那么需要运行带仿真器的运行系统),此时就可以联机模拟了。 本例中的联机模拟程序运行如下图所示:

STC89C52单片机详细介绍

STC89C52是一种带8K字节闪烁可编程可檫除只读存储器(FPEROM-Flash Programable and Erasable Read Only Memory )的低电压,高性能COMOS8的微处理器,俗称单片机。该器件采用ATMEL 搞密度非易失存储器制造技术制造,与工业标准的MCS-51指令集和输出管脚相兼容。 单片机总控制电路如下图4—1: 图4—1单片机总控制电路 1.时钟电路 STC89C52内部有一个用于构成振荡器的高增益反相放大器,引

脚RXD和TXD分别是此放大器的输入端和输出端。时钟可以由内部方式产生或外部方式产生。内部方式的时钟电路如图4—2(a) 所示,在RXD和TXD引脚上外接定时元件,内部振荡器就产生自激振荡。定时元件通常采用石英晶体和电容组成的并联谐振回路。晶体振荡频率可以在1.2~12MHz之间选择,电容值在5~30pF之间选择,电容值的大小可对频率起微调的作用。 外部方式的时钟电路如图4—2(b)所示,RXD接地,TXD接外部振荡器。对外部振荡信号无特殊要求,只要求保证脉冲宽度,一般采用频率低于12MHz的方波信号。片内时钟发生器把振荡频率两分频,产生一个两相时钟P1和P2,供单片机使用。 示,RXD接地,TXD接外部振荡器。对外部振荡信号无特殊要求,只要求保证脉冲宽度,一般采用频率低于12MHz的方波信号。片内时钟发生器把振荡频率两分频,产生一个两相时钟P1和P2,供单片机使用。 RXD接地,TXD接外部振荡器。对外部振荡信号无特殊要求,只要求保证脉冲宽度,一般采用频率低于12MHz的方波信号。片内时钟发生器把振荡频率两分频,产生一个两相时钟P1和P2,供单片机使用。

标准物质与标准样品

1 概述 在国际上标准物质和标准样品英文名称均为“Reference Materials”,由 ISO/REMCO组织负责这一工作。在我国计量系统将“Reference Materials”叫为“标准物质”,而标准化系统叫为“标准样品”。实际上二者有很多相同之处,同时也有一些微小差异(见表1)。 2 标准物质的分类与分级 2.1 标准物质的分类 根据中华人民共和国计量法的子法—标准物质管理办法(1987年7月10日国家计量局发布)中第二条之规定:用于统一量值的标准物质,包括化学成分标准物质、物理特性与物理化学特性测量标准物质和工程技术特性测量标准物质。按其标准物质的属性和应用领域可分成十三大类,即:

l 钢铁成分分析标准物质; l 有色金属及金属中气体成分分析标准物质; l 建材成分分析标准物质; l 核材料成分分析与放射性测量标准物质; l 高分子材料特性测量标准物质; l 化工产品成分分析标准物质; l 地质矿产成分分析标准物质; l 环境化学分析标准物质; l 临床化学分析与药品成分分析标准物质; l 食品成分分析标准物质; l 煤炭石油成分分析和物理特性测量标准物质; l 工程技术特性测量标准物质; l 物理特性与物理化学特性测量标准物质。 2.2 标准物质的分级 我国将标准物质分为一级与二级,它们都符合“有证标准物质”的定义。 2.2.1 一级标准物质 一级标准物质是用绝对测量法或两种以上不同原理的准确可靠的方法定值,若只有一种定值方法可采取多个实验室合作定值。它的不确定度具有国内最高水平,均匀性良好,在不确定度范围之内,并且稳定性在一年以上,具有符合标准物质技术规范要求的包装形式。一级标准物质由国务院计量行政部门批准、颁布并授权生产,它的代号是以国家级标准物质的汉语拼音中“Guo”“Biao”“Wu”三个字的字头“GBW”表示。 2.2.2 二级标准物质 二级标准物质是用与一级标准物质进行比较测量的方法或一级标准物质的定值方

关于国内工程保理业务介绍

关于国内工程保理业务介绍 根据我行最新国内保理业务管理办法,现将本业务作如下介绍: 一、本业务涉及到的几个定义 (一)国内保理业务是指境内卖方将其向境内买方销售货物或提供服务所产生的应收账款转让给我行,由我行为卖方提供应收账款管理、保理融资及为买方提供信用风险担保的综合性金融业务。其中,我行为卖方提供的应收账款管理和保理融资服务为卖方保理,为买方提供的信用风险担保服务为买方保理。 工程保理是指工程承包商或施工方将从事工程建设服务形成 的应收账款转让给卖方保理行,由保理行提供综合性保理服务。(二)卖方(供应商、债权人):对所提供的货物或服务出具发票 的当事方,即保理业务的申请人。 (三)买方(采购商、债务人):对由提供货物或服务所产生的应 收账款负有付款责任的当事方。 (四)应收账款:本办法所称应收账款指卖方或权利人因提供货物、服务或设施而获得的要求买方或义务人付款的权利,包括现有的金钱债权及其产生的收益,包括但不限于应收账款本金、利息、违约金、损害赔偿金,以及担保权利、保险权益等所有主债权、从债权以及与主债权相关的其他权益,但不包括因票据或其他有价证券而产生的付款请求权。 (四)应收账款发票实有金额:是指发票金额扣除销售方已回笼货款后的余额。

(五)保理监管专户:指卖方保理行为卖方开立的用于办理保理项下应收账款收支业务的专用账户。该账户用途包括:收取保理项下应收账款;收取其他用以归还我行保理融资的款项;支付超过保理融资余额以外的应收账款等。卖方不能通过应收账款专户以网上银行或自助设备等形式开展任何业务。 二、保理业务分类 (一)按是否对保理融资具有追索权,我行国内保理分为有追索权保理和无追索权保理。 有追索权保理是指卖方保理行向卖方提供保理项下融资后,若买方在约定期限内不能足额偿付应收账款,卖方保理行有权按照合同约定向卖方追索未偿还融资款的保理业务;无追索权保理是指卖方保理行向卖方提供保理项下融资后,若买方因财务或资信原因在约定期限内不能足额偿付应收账款,卖方保理行无权向卖方追索未偿还融资款。 三、应收账款的范围、条件及转让方式 (一)可办理国内保理业务的应收账款范围: 1、因向企事业法人销售商品、提供服务、设施而形成的应收 账款; 2、由地市级(含)以上政府的采购部门统一组织的政府采购 行为而形成的应收账款; 3、由军队军级(含)以上单位的采购部门统一组织的军队采 购行为而形成的应收账款;

国际标准物质介绍

一、NIST标准物质: 1.资源介绍 美国NIST是世界上开展标准物质研制最早的机构。NIST的前身为美国国家标准局,成立于1901年,是设在美国商务部科技管理局下的非管理机构,负责国家层面上的计量基础研究。NIST在研究权威化学及物理测量技术的同时,还提供以NIST为商标的各类标准参考物质(SRM,Standard Reference Material),该商标以使用权威测量方法和技术而闻名。目前,NIST共提供1300多种标准参考物质,形成了世界领先的、较为完善的标准物质体系,在保证美国国内化学测量量值的有效溯源及分析测量结果的可靠性方面发挥了重要的支撑作用。总体来讲,美国NIST与其它美国商业标准物质生产者的关系是:NIST作为国家计量院将其研究重点放在高端标准物质及测量标准的研究上,建立国家的高端量值溯源体系,商业标准物质将其量值通过NTRM、校准等各种形式溯源至NIST,以改善标准物质的供应状况,满足不同层次用户对标准物质日益增长的需求。 NIST在采纳由ISO制定的国际计量学通用基本术语中对CRM、RM的定义外,又将其标准物质分为SRM(标准参考物质)、RM(标准物质)及NTRM(可溯源至NIST的标准物质)。对它们分别解释如下: NIST SRM:符合NIST规定的附加定值准则,带有证书(物理特性类)或分析证书(化学成分类)并在证书上提供其定值结果及相关使用信息的有证标准物质(CRM)。该类标准物质必须含有认定值(Certified value),同时可提供参考值和信息值; NIST RM:由NIST发布的、带有研究报告而不是证书的标准物质。该类标准物质除了符合ISO对RM的定义,还可能符合ISO对CRM的定义。该类标准物质必须含有参考值,同时可提供信息值; NTRM:可以通过很好建立的溯源链溯源至NIST化学测量标准的商业标准物质。溯源链的建立必须符合由NIST确定的系列标准和草案。通过管理授权,NTRM也可等价于CRM。NTRM模式目前在气体分析用标准物质和光度分析用标准物质方面得到了很好的应用; 在标准物质所提供的特性量值方面,有以下三类: NIST认定值:具有最高准确度、所有已知或可疑偏差来源均已经过充分研究或

winccfleXible系统函数

WinCC Flexible 系统函数 报警 ClearAlarmBuffer 应用 删除HMI设备报警缓冲区中的报警。 说明 尚未确认的报警也被删除。 语法 ClearAlarmBuffer (Alarm class number) 在脚本中是否可用:有 (ClearAlarmBuffer) 参数 Alarm class number 确定要从报警缓冲区中删除的报警: 0 (hmiAll) = 所有报警/事件 1 (hmiAlarms) = 错误 2 (hmiEvents) = 警告 3 (hmiSystem) = 系统事件 4 (hmiS7Diagnosis) = S7 诊断事件 可组态的对象 对象事件 变量数值改变超出上限低于下限 功能键(全局)释放按下 功能键(局部)释放按下 画面已加载已清除 数据记录溢出报警记录溢出 检查跟踪记录可用内存很少可用内存极少 画面对象按下 释放 单击 切换(或者拨动开关)打开 断开 启用 取消激活 时序表到期 报警缓冲区溢出

ClearAlarmBufferProtoolLegacy 应用 该系统函数用来确保兼容性。 它具有与系统函数“ClearAlarmBuffer”相同的功能,但使用旧的ProTool编号方式。语法 ClearAlarmBufferProtoolLegacy (Alarm class number) 在脚本中是否可用:有 (ClearAlarmBufferProtoolLegacy) 参数 Alarm class number 将要删除其消息的报警类别号: -1 (hmiAllProtoolLegacy) = 所有报警/事件 0 (hmiAlarmsProtoolLegacy) = 错误 1 (hmiEventsProtoolLegacy) = 警告 2 (hmiSystemProtoolLegacy) = 系统事件 3 (hmiS7DiagnosisProtoolLegacy) = S7 诊断事件 可组态的对象 对象事件 变量数值改变超出上限低于下限 功能键(全局)释放按下 功能键(局部)释放按下 画面已加载已清除 变量记录溢出报警记录溢出 检查跟踪记录可用内存很少可用内存极少 画面对象按下 释放 单击 切换(或者拨动开关)打开 断开 启用 取消激活 时序表到期 报警缓冲区溢出 SetAlarmReportMode 应用 确定是否将报警自动报告到打印机上。 语法 SetAlarmReportMode (Mode) 在脚本中是否可用:有 (SetAlarmReportMode) 参数 Mode

STC89C52单片机用户手册

STC89C52RC单片机介绍 STC89C52RC单片机是宏晶科技推出的新一代高速/低功耗/超强抗干扰的单片机,指令代码完全兼容传统8051单片机,12时钟/机器周期和6时钟/机器周期可以任意选择。 主要特性如下: 增强型8051单片机,6时钟/机器周期和12时钟/机器周期可以任意选择,指令代码完全兼容传统8051. 工作电压:~(5V单片机)/~(3V单片机) 工作频率范围:0~40MHz,相当于普通8051的0~80MHz,实际工作频率可达48MHz 用户应用程序空间为8K字节 片上集成512字节RAM 通用I/O口(32个),复位后为:P1/P2/P3/P4是准双向口/弱上拉,P0口是漏极开路输出,作为总线扩展用时,不用加上拉电阻,作为 I/O口用时,需加上拉电阻。 ISP(在系统可编程)/IAP(在应用可编程),无需专用编程器,无需专用仿真器,可通过串口(RxD/,TxD/)直接下载用户程序,数秒 即可完成一片 具有EEPROM功能 具有看门狗功能 共3个16位定时器/计数器。即定时器T0、T1、T2 外部中断4路,下降沿中断或低电平触发电路,Power Down模式可由外部中断低电平触发中断方式唤醒 通用异步串行口(UART),还可用定时器软件实现多个UART 工作温度范围:-40~+85℃(工业级)/0~75℃(商业级) PDIP封装 STC89C52RC单片机的工作模式 掉电模式:典型功耗<μA,可由外部中断唤醒,中断返回后,继续执行

原程序 空闲模式:典型功耗2mA 正常工作模式:典型功耗4Ma~7mA 掉电模式可由外部中断唤醒,适用于水表、气表等电池供电系统及便携设备 STC89C52RC引脚图 STC89C52RC引脚功能说明 VCC(40引脚):电源电压 VSS(20引脚):接地 P0端口(~,39~32引脚):P0口是一个漏极开路的8位双向I/O口。作为输出端口,每个引脚能驱动8个TTL负载,对端口P0写入“1”时,可以作为高阻抗输入。

标准物质GBWGBWEBWGSB的区别

标准物质GBW、GBW(E)、BW、GSB的区别 1 概述 在国际上标准物质和标准样品英文名称均为“Reference Materials”,由 ISO/REMCO组织负责这一工作。在我国计量系统将“Reference Materials”叫为“标准物质”,而标准化系统叫为“标准样品”。实际上二者有很多相同之处,同时也有一些微小差异(见表1)。 CAS号(CAS Registry Number或称CAS Number, CAS Rn, CAS #),又称CAS登录号,是某种物质(化合物、高分子材料、生物序列(Biological sequences)、混合物或合金)的唯一的数字识别号码。美国化学会的下设组织化学文摘服务社 (Chemical Abstracts Service, CAS)负责为每一种出现在文献中的物质分配一个CAS号,其目的是为了避免化学物质有多种名称的麻烦,使数据库的检索更为方便。 2 标准物质的分类与分级 2.1 标准物质的分类 根据中华人民共和国计量法的子法—标准物质管理办法(1987年7月10日国家计量局发布)中第二条之规定:用于统一量值的标准物质,包括化学成分标准物质、物理特性与物理化学特性测量标准物质和工程技术特性测量标准物质。按其标准物质的属性和应用领域可分成十三大类, 2.2 标准物质的分级 我国将标准物质分为一级与二级,它们都符合“有证标准物质”的定义。 2.2.1 一级标准物质

一级标准物质又称国家一级标准物质,是由国家权威机构审定的标准物质。例如,美国国家标准局的SRM标准物质,英国的BAS标准物质,联邦德国的BAM标准物质,我国的GBW标准物质等。一级标准物质一般用绝对测量法或其它准确可靠的方法确定物质的含量,准确定为本国最高水平。我国国家技术监督局规定的国家一级标准物质应具备的基本条件为:①用绝对测量法或两种以上不同原理的准确可靠的测量方法进行定值,也可由多个实验室用准确可靠的方法协作定值;②定值的准确度应具有国内最高水平;③应具有国家统一编号的标准物质证书;④稳定时间应在1年以上;⑤均匀性应保证在定值的精度范围内; ⑥具有规定的合格包装形式。一般说来,一级标准物质应具有0.3-1%的准确度。一级标准物质由国务院计量行政部门批准、颁布并授权生产,它的代号是以国家级标准物质的汉语拼音中“Guo”“Biao”“Wu”三个字的字头“GBW”表示。 2.2.2 二级标准物质 二级标准物质是用与一级标准物质进行比较测量的方法或一级标准物质的定值方法定值,其不确定度和均匀性未达到一级标准物质的水平,稳定性在半年以上,能满足一般测量的需要,包装形式符合标准物质技术规范的要求。二级标准物质由国务院计量行政部门批准、颁布并授权生产,它的代号是以国家级标准物质的汉语拼音中“Guo”“Biao”“Wu”三个字的字头“GBW”加上二级的汉语拼音中“Er”字的字头“E”并以小括号括起来――GBW(E)。 GBW和GBW(E)是总局批准的国家级标准物质,属于有证标准物质。BW是中国计量院学报标准物质,未通过总局批准,没有取得有证标准物质号. 2.3 标准物质的编号 一种标准物质对应一个编号。当该标准物质停止生产或停止使用时,该编号不再用于其他标准物质,该标准物质恢复生产和使用仍启用原编号。 准物质目录编辑顺序一致)。第三位数是标准物质的小类号,每大类标准物质分为1-9个小类。第四-五位是同一小类标准物质中按审批的时间先后顺序排列的顺序号。最后一位是标准物质的产生批号,用英文小写字母表示,排于标准物质编号的最后一位,生产的第一批标准物质用a表示,第二批用b表示,批号顺序与英文字母顺序一致。GBW07101a超基性岩,表示地质类中岩石小类第一个批准的标准物质,首批产品。 如:GBW02102b铁黄铜,表示有色金属类中铜合金小类第二个批准的标准物质,第二批产品。 2.3.2 二级标准物质 二级标准物质的编号是以二级标准物质代号“GBW(E)”冠于编号前部,编号的前两位数是标准物质的大类号,第三、四、五、六位数为该大类标准物质的顺序号。生产批号同一级标准物质生产批号,如GBW(E)110007a表示煤炭石油成分分析和物理特性测量标准物质类的第7顺序号,第一批生产的煤炭物理性质和化学成分分析标准物质。 2.4 标准物质的分类编号 标准物质的分类编号,见表2。

winccflexible系统函数

WinCC Flexible 系统函数报警 ClearAlarmBuffer 应用 删除HMI设备报警缓冲区中的报警。 说明 尚未确认的报警也被删除。 语法 ClearAlarmBuffer (Alarm class number) 在脚本中是否可用:有 (ClearAlarmBuffer) 参数 Alarm class number 确定要从报警缓冲区中删除的报警: 0 (hmiAll) = 所有报警/事件 1 (hmiAlarms) = 错误 2 (hmiEvents) = 警告 3 (hmiSystem) = 系统事件 4 (hmiS7Diagnosis) = S7 诊断事件 可组态的对象 对象事件 变量数值改变超出上限低于下限 功能键(全局)释放按下 功能键(局部)释放按下 画面已加载已清除 数据记录溢出报警记录溢出 检查跟踪记录可用内存很少可用内存极少 画面对象按下 释放 单击 切换(或者拨动开关)打开 断开 启用 取消激活 时序表到期 报警缓冲区溢出 ClearAlarmBufferProtoolLegacy 应用

该系统函数用来确保兼容性。 它具有与系统函数“ClearAlarmBuffer”相同的功能,但使用旧的ProTool编号方式。语法 ClearAlarmBufferProtoolLegacy (Alarm class number) 在脚本中是否可用:有 (ClearAlarmBufferProtoolLegacy) 参数 Alarm class number 将要删除其消息的报警类别号: -1 (hmiAllProtoolLegacy) = 所有报警/事件 0 (hmiAlarmsProtoolLegacy) = 错误 1 (hmiEventsProtoolLegacy) = 警告 2 (hmiSystemProtoolLegacy) = 系统事件 3 (hmiS7DiagnosisProtoolLegacy) = S7 诊断事件 可组态的对象 对象事件 变量数值改变超出上限低于下限 功能键(全局)释放按下 功能键(局部)释放按下 画面已加载已清除 变量记录溢出报警记录溢出 检查跟踪记录可用内存很少可用内存极少 画面对象按下 释放 单击 切换(或者拨动开关)打开 断开 启用 取消激活 时序表到期 报警缓冲区溢出 SetAlarmReportMode 应用 确定是否将报警自动报告到打印机上。 语法 SetAlarmReportMode (Mode) 在脚本中是否可用:有 (SetAlarmReportMode) 参数 Mode 确定报警是否自动报告到打印机上: 0 (hmiDisnablePrinting) = 报表关闭:报警不自动打印。

STC89C52RC单片机手册范本

STC89C52单片机用户手册 [键入作者姓名] [选取日期]

STC89C52RC单片机介绍 STC89C52RC单片机是宏晶科技推出的新一代高速/低功耗/超强抗干扰的单片机,指令代码完全兼容传统8051单片机,12时钟/机器周期和6时钟/机器周期可以任意选择。 主要特性如下: 1.增强型8051单片机,6时钟/机器周期和12时钟/机器周期可以任 意选择,指令代码完全兼容传统8051. 2.工作电压:5.5V~ 3.3V(5V单片机)/3.8V~2.0V(3V单片机) 3.工作频率范围:0~40MHz,相当于普通8051的0~80MHz,实 际工作频率可达48MHz 4.用户应用程序空间为8K字节 5.片上集成512字节RAM 6.通用I/O口(32个),复位后为:P1/P2/P3/P4是准双向口/弱上 拉,P0口是漏极开路输出,作为总线扩展用时,不用加上拉电阻, 作为I/O口用时,需加上拉电阻。 7.ISP(在系统可编程)/IAP(在应用可编程),无需专用编程器,无 需专用仿真器,可通过串口(RxD/P3.0,TxD/P3.1)直接下载用 户程序,数秒即可完成一片 8.具有EEPROM功能 9.具有看门狗功能 10.共3个16位定时器/计数器。即定时器T0、T1、T2 11.外部中断4路,下降沿中断或低电平触发电路,Power Down模式

可由外部中断低电平触发中断方式唤醒 12.通用异步串行口(UART),还可用定时器软件实现多个UART 13.工作温度范围:-40~+85℃(工业级)/0~75℃(商业级) 14.PDIP封装 STC89C52RC单片机的工作模式 ●掉电模式:典型功耗<0.1μA,可由外部中断唤醒,中断返回后,继续执行原 程序 ●空闲模式:典型功耗2mA ●正常工作模式:典型功耗4Ma~7mA ●掉电模式可由外部中断唤醒,适用于水表、气表等电池供电系统及便携设备

使用WinCCFlexible2008SP4创建的项目(精)

常问问题 02/2015 使用WinCC Flexible 2008 SP4创建的项目如何移植到博途软件中? 移植, WinCC Flexible 2008 SP4, WinCC V13 C o p y r i g h t S i e m e n s A G C o p y r i g h t y e a r A l l r i g h t s r e s e r v e d 目录 1 基本介绍 (3 1.1 软件环境及安装要求 .......................................................................... 3 1.1.1 软件环境 ............................................................................................ 3 1.1.2 安装要求 ............................................................................................ 3 2 操作过程.. (4 2.1 设备类型在博途软件中包含 ................................................................ 4 2.1.1 移植过程 ............................................................................................ 4 2.2 设备类型在博途软件中不包含 ............................................................ 6 2.2.1 移植过程 .. (6 3 常见错误及解决方法 (8 3.1 项目版本不符 ..................................................................................... 8 3.2

STC89C52单片机学习开发板介绍

STC89C52单片机学习开发板介绍 全套配置: 1 .全新增强STC89C5 2 1个【RAM512字节比AT89S52多256个字节FLASH8K】 2 .优质USB数据线 1条【只需此线就能完成供电、通信、烧录程序、仿真等功能,简洁方便实验,不需要USB 转串口和串口线,所有电脑都适用】 3 .八位排线 4条【最多可带4个8*8 LED点阵,从而组合玩16*16的LED点阵】 4 .单P杜邦线 8条【方便接LED点阵等】 5 .红色短路帽 19个【已装在开发箱板上面,短路帽都是各功能的接口,方便取用】 6 .实验时钟电池座及电池 1PCS 7 .DVD光盘 1张【光盘具体内容请看页面下方,光盘资料截图】 8 .全新多功能折叠箱抗压抗摔经久耐磨 1个【市场没有卖,专用保护您爱板的折叠式箱子,所有配件都可以放入】 9 .8*8(红+绿)双色点阵模块 1片【可以玩各种各样的图片和文字,两种颜色变换显示】 10.全新真彩屏SD卡集成模块 1个【请注意:不包含SD卡,需要自己另外配】 晶振【1个方便您做实验用】 12.全新高速高矩进口步进电机 1个【价格元/个】 13.全新直流电机 1个【价值元/ 个】 14.全新红外接收头 1个【价格元/ 个】 15.全新红外遥控器(送纽扣电池) 1个【价格元/个】 16.全新18B20温度检测 1个【价格元/只】 17.光敏热敏模块 1个(已经集成在板子上)【新增功能】 液晶屏 1个 配件参照图:

温馨提示:四点关键介绍,这对您今后学习51是很有帮助的) 1.板子上各模块是否独立市场上现在很多实验板,绝大部分都没有采用模块化设计,所有的元器件密 密麻麻的挤在一块小板上,各个模块之间PCB布线连接,看上去不用接排线,方便了使用者,事实上是为了降低硬件成本,难以解决各个模块之间的互相干扰,除了自带的例程之外,几乎无法再做任何扩展,更谈不上自由组合发挥了,这样对于后继的学习非常不利。几年前的实验板,基本上都是这种结构的。可见这种设计是非常过时和陈旧的,有很多弊端,即便价格再便宜也不值得选购。 HC6800是采用最新设计理念,实验板各功能模块完全独立,互不干扰,功能模块之间用排线快速连接。 一方面可以锻炼动手能力,同时可加强初学者对实验板硬件的认识,熟悉电路,快速入门;另一方面,因为各功能模块均独立设计,将来大家学习到更高级的AVR,PIC,甚至ARM的时候,都只

标准物质标准样品概述

标准物质/标准样品概述 1.定义简述:标准物质/标准样品是具有准确量值的测量标准,它在化学测量,生物测量,工程测量与物理测量领域得到了广泛的应用。按照“国际通用计量学基本术语”和“国际标准化组织指南30”标准物质定义: (1) 标准物质(Reference Material,RM)具有一种或多种足够均匀和很好确定了的特性值,用以校准设备,评价测量方法或给材料赋值的材料或物质。 (2) 有证标准物质(Certified Reference Material CRM)附有证书的标准物质,其一种或多种特性值用建立了溯源性的程序确定,使之可溯源到准确复现的用于表示该特性值的计量单位,而且每个标准值都附有给定置信水平的不确定度。 (3) 基准标准物质(Primary Reference Material PRM) 2.标准物质/标准样品的用途简述 (1) 校准仪器:常用的光谱、色谱等仪器在使用前需要使用标准物质对仪器进行检定,检查仪器的各项指标,如灵敏度、分辨率、稳定性等是否达到要求。在使用时用标准物质绘制标准曲线校准仪器,测试过程中修正分析结果。 (2) 评价方法:用标准物质考察一些分析方法的可靠性。 (3) 质量控制:分析过程中同时分析控制样品,通过控制样品的分析结果考察操作过程的正确性 3.标准物质/标准样品分类简述: (1) 化学标样:形态:屑状;销售状态:单瓶销售;用途:用于化学方法分析,例如:CS分析仪,湿法化学分析。 (2) 光谱套标标样:形态:块状;销售状态:成套销售(多以5块或5块以上作为一套标样);用途:用于光谱仪器工作曲线的建立。又称仪器标准化标样。 (3) 光谱高低标标样:形态:块状;销售状态:成套销售(多以2块或3块作为一套标样);用途:在光谱仪开机后,使用工作曲线之前,对工作曲线的飘移进行校正,保证工作曲线的工作状态。国外对于这种类似作用的标样成为:SUS标准样品,SUS标样特点是成分均匀稳定,但不做精确定值,不建议使用在单点校正方面,只用于仪器工作曲线飘移的校正。 (4) 光谱单块校正标样:形态:块状;销售状态:单块出售;用途:在工作曲线校正完成的前提下,针对某一种未知产品成分进行校正,又称仪器类型标准化标样。 4.标样牌号与标样编号及成分的关系 牌号:代表某一类产品的名字,是一个成分范围,每个牌号都有其对应的成分范围,在这个成分牌号下面有许多标样。 标样编号:是标样的名字,标样编号与每一个标样是一一对应的。

国家实用标准物质技术要求规范

国家药品标准物质技术规 第一章总则 第一条为保证国家药品标准物质的质量,规药品标准物质的研制工作,根据《国家药品标准物质管理办法》,制定本技术规。 第二条本技术规适用于中国食品药品检定研究院(以下简称中检院)研究、制备、标定、审核、供应的国家药品标准物质。 第三条国家药品标准物质系指供药品质量标准中理化测试及生物方法试验用,具有确定特性,用以校准设备、评价测量方法或给供试药品定性或赋值的物质。 (一)理化检测用国家药品标准物质系指用于药品质量标准中物理和化学测试用,具有确定特性,用以鉴别、检查、含量测定、校准设备的对照品,按用途分为下列四类: 1. 含量测定用化学对照品:系指具有确定的量值,用于测定药品中特定成分含量的标准物质。 2. 鉴别或杂质检查用化学对照品:系指具有特定化学性质,用于鉴别或确定药品某些特定成分的标准物质。 3. 对照药材/对照提取物:系指用于鉴别中药材或中成药中某一类成分或组分的对照物质。 4. 校正仪器/系统适用性试验用对照品:系指具有特定

化学性质用于校正检测仪器或供系统适用性实验用的标准物质。 (二)生物检测用国家药品标准物质系指用于生物制品效价、活性、含量测定或其特性鉴别、检查的生物标准品或生物参考物质,可分为生物标准品和生物参考品。 1.生物标准品系指用国际生物标准品标定的,或由我国自行研制的(尚无国际生物标准品者)用于定量测定某一制品效价或毒性的标准物质,其生物学活性以国际单位(IU)或以单位(U)表示。 2.生物参考品系指用国际生物参考品标定的,或由我国自行研制的(尚无国际生物参考品者)用于微生物(或其产物)的定性鉴定或疾病诊断的生物试剂、生物材料或特异性抗血清;或指用于定量检测某些制品的生物效价的参考物质,如用于麻疹活疫苗滴度或类毒素絮状单位测定的参考品,其效价以特定活性单位表示,不以国际单位(IU)表示。 第二章国家药品标准物质的制备 第四条在建立新的国家药品标准物质时,研制部门应提交研制申请,标准物质管理处负责评估研制的必要性。新增标准物质应遵循适用性、代表性与易获得性的原则,研制申请获得批准后研制部门方可进行制备与标定。 第五条除特殊情况外,理化检测用国家药品标准物质

STC89C52RC单片机特点

STC89C52RC 单片机介绍 STC89C52RC 单片机是宏晶科技推出的新一代高速/低功耗/超强抗干扰的单片机,指令代码完全兼容传统8051 单片机,12 时钟/机器周期和 6 时钟/机器周期可以任意选择。 主要特性如下: 1. 增强型8051 单片机,6 时钟/机器周期和12 时钟/机器周期可以任意选择,指令代码完全兼容传统 8051. 2. 工作电压:5.5V~ 3.3V<5V 单片机)/3.8V~2.0V<3V 单片机) 3. 工作频率范围:0~40MHz,相当于普通 8051 的 0~80MHz,实际工作频率可达 48MHz 4. 用户应用程序空间为 8K 字节 5. 片上集成 512 字节 RAM 6. 通用I/O 口<32 个)复位后为:,P1/P2/P3/P4 是准双向口/弱上拉,P0 口是漏极开路输出,作为总线扩展用时,不用加上拉电阻,作为I/O 口用时,需加上拉电阻。 7. ISP<在系统可编程)/IAP<在应用可编程),无需专用编程器,无需专用仿真器,可通过串口 STC89C52F单片机介绍 STC89C52F单片机是宏晶科技推出的新一代高速 /低功耗/超强抗干扰的单片机,指令代码完全兼容传统8051单片机,12时钟/机器周期和6时钟/机器周期可以任意选择。 主要特性如下: * 增强型8051单片机,6时钟/机器周期和12时钟/机器周期可以任意选择,指令代码完全兼容传统8051. * 工作电压:5.5V?3.3V (5V单片机)/3.8V?2.0V (3V单片机) * 工作频率范围:0?40MHz相当于普通8051的0?80MHz实际工作频率可达48MHz *用户应用程序空间为8K字节 * 片上集成512字节RAM * 通用I/O 口(32个),复位后为:P1/P2/P3/P4是准双向口 /弱上拉,P0 口是漏极开路输出,作为总线扩展用时,不用加上拉电阻,作为I/O 口 用时,需加上拉电阻。 * ISP (在系统可编程)/IAP (在应用可编程),无需专用编程器,无需专用仿真器,可通过串口( RxD/P3.0,TxD/P3.1 )直接下载用户程序,数秒 即可完成一片 * 具有 EEPROM能 *具有看门狗功能 * 共3个16位定时器/计数器。即定时器T0、T1、T2 * 外部中断4路,下降沿中断或低电平触发电路,Power Down模式可由外部中断低电平触发中断方式唤醒 * 通用异步串行口( UART,还可用定时器软件实现多个 UART * 工作温度范围:-40?+85C(工业级)/0?75C(商业级) * PDIP封装 STC89C52F单片机的工作模式 *掉电模式:典型功耗<0.1吩,可由外部中断唤醒,中断返回后,继续执行原程序 标准物质的特性 标准物质最主要的是三大基本特性:均匀性、稳定性、溯源性构成了标准物质三个基本要素,其具体特性有: 1.量具作用:标准物质可以作为标准计量的量具,进行化学计量的 量值传递。 2.特性量值的复现性:每一种标准物质都具有一定的化学成分或物 理特性,保存和复现这些特性量值与物理的性质有关,与物质的数量和形状无关。 3.自身的消耗性:标准物质不同于技术标准、计量器具,是实物标 准,在进行比对和量值传递过程中要逐渐消耗。 4.标准物质品种多:物质的多种性和化学测量过程中的复杂性决定 了标准物质品种多,就冶金标准物质就达几百种,同一种元素的组分就可跨越几个数量级。 5.比对性:标准物质大多采用绝对法比对标准值,常采用几个、十 几个实验室共同比对的方法来确定标准物质的标准值。高一级标准物质可以作为低一级标准物质的比对参照物,标准物质都是作为“比对参照物”发挥其标准的作用。 6.特定的管理要求:标准物质其种类不同,对储存、运输、保管和 使用都有不同的特点要求,才能保证标准物质的标准作用和标准值的准确度,否则就会降低和失去标准物质的标准作用。 7.可溯源性:其溯源性是通过具有规定的不确定度的连续比较链, 使得测量结果或标准的量值能够与规定的参考基准,通常是国家 基准或国际基准联系起来的特性,标准物质作为实现准确一致的测量标准,在实际测量中,用不同级别的标准物质,按准确度由低到高逐级进行量值追溯,直到国际基本单位,这一过程称为量值的“溯源过程”。反之,从国际基本单位逐级由高到低进行量值传递,至实际工作中的现场应用,这一过程称为量值的“传递过程”。通过标准物质进行量值的传递和追溯,构成了一个完整的量值传递和溯源体系。 标准物质研制报告编写规则 1. 范围 本规范规定了国家标准物质研制报告的编写要求、内容和格式,适用于申报国家一级、二级标准物质定级评审的研制报告。 2. 引用文献 JJF1005-2005《标准物质常用术语及定义》 JJG1006-1994《一级标准物质技术规范》 JJF1071-2000 《国家计量校准规范编写规则》 使用本规范时,应注意使用上述引用文献的现行有效版本。 3. 术语和定义 3.1 标准物质(RM)Reference material (RM) 具有一种或多种足够均匀和很好地确定了的特性,用以校准测量装置、评价测量方法或给材料赋值的一种材料或物质。 3.2 有证标准物质(CRM)Certified reference material (CRM) 附有证书的标准物质,其一种或多种特性量值用建立了溯源性的程序确定,使之可溯源到准确复现的表示该特性值的测量单位,每一种鉴定的特性量值都附有给定置信水平的不确定度。 注:在我国,有证标准物质必须经过国家计量行政部门的审批、颁布。 3.3 定值Characterization 对与标准物质预期用途有关的一个或多个物理、化学、生物或工程技术等方面的特性量值的测定。 3.4 均匀性Homogeneity 与物质的一种或多种特性相关的具有相同结构或组成的状态。通过测量取自不同包装单元(如:瓶、包等)或取自同一包装单元的、特定大小的样品,测量结果落在规定不确定度范围内,则可认为标准物质对指定的特性量是均匀的。 3.5 稳定性Stability 在特定的时间范围和贮存条件下,标准物质的特性量值保持在规定范围内的能力。 3.6 溯源性Traceability 通过一条具有规定不确定度的不间断的比较链,使测量结果或测量标准的值能够与规定的参考标准,通常是与国家测量标准或国际测量标准联系起来的特性。 4. 报告编写要求 4.1一般要求 标准物质研制报告(以下简称“报告”)是描述标准物质研制的全过程,并评价结果的重要技术文件,在标准物质的定级评审时,作为技术依据提交给相关评审机构,因此,报告应提供标准物质研制过程和数据分析的充分信息。 研制者应将研制工作中采用的方法、技术路线和创造性工作体现在报告中,写出研制的特色。 报告应作为标准物质研究的重要技术档案保存。 4.1.1报告的内容应科学、完整、易读及数据准确。 基于Step7和WinccFlexible联合仿真教程目录 0 项目要求:..................................................................... .............................................. 2 1 项目分析与规 划: .................................................................... .................................... 2 2 系统IO口分配:..................................................................... ..................................... 2 3 系统接线原理 图: .................................................................... .................................... 2 4 系统控制方式规划:..................................................................... .. (2) 5 系统硬件选择与组态...................................................................... . (3) 6 PLC程序设计...................................................................... ........................................ 19 7 触摸屏通讯设置、画面设计与变量控制....................................................................... 25 8 项目仿真测 试 .....................................................................STC89C52单片机用户手册

标准物质特性

标准物质的申报

基于Step7和WinccFlexible联合仿真教程