Rapid Object Detection using a Boosted cascade of simple features

A CCEPTED C ONFERENCE ON C OMPUTER V ISION AND P ATTERN R ECOGNITION2001 Rapid Object Detection using a Boosted Cascade of Simple

Features

Paul Viola Michael Jones viola@https://www.360docs.net/doc/7110167247.html, mjones@https://www.360docs.net/doc/7110167247.html, Mitsubishi Electric Research Labs Compaq CRL 201Broadway,8th FL One Cambridge Center

Cambridge,MA02139Cambridge,MA02142

Abstract

This paper describes a machine learning approach for vi-sual object detection which is capable of processing images extremely rapidly and achieving high detection rates.This work is distinguished by three key contributions.The?rst is the introduction of a new image representation called the “Integral Image”which allows the features used by our de-tector to be computed very quickly.The second is a learning algorithm,based on AdaBoost,which selects a small num-ber of critical visual features from a larger set and yields extremely ef?cient classi?ers[6].The third contribution is a method for combining increasingly more complex classi-?ers in a“cascade”which allows background regions of the image to be quickly discarded while spending more compu-tation on promising object-like regions.The cascade can be viewed as an object speci?c focus-of-attention mechanism which unlike previous approaches provides statistical guar-antees that discarded regions are unlikely to contain the ob-ject of interest.In the domain of face detection the system yields detection rates comparable to the best previous https://www.360docs.net/doc/7110167247.html,ed in real-time applications,the detector runs at 15frames per second without resorting to image differenc-ing or skin color detection.

1.Introduction

This paper brings together new algorithms and insights to construct a framework for robust and extremely rapid object detection.This framework is demonstrated on,and in part motivated by,the task of face detection.Toward this end we have constructed a frontal face detection system which achieves detection and false positive rates which are equiv-alent to the best published results[16,12,15,11,1].This face detection system is most clearly distinguished from previous approaches in its ability to detect faces extremely rapidly.Operating on384by288pixel images,faces are de-

tected at15frames per second on a conventional700MHz Intel Pentium III.In other face detection systems,auxiliary information,such as image differences in video sequences, or pixel color in color images,have been used to achieve high frame rates.Our system achieves high frame rates working only with the information present in a single grey scale image.These alternative sources of information can also be integrated with our system to achieve even higher frame rates.

There are three main contributions of our object detec-tion framework.We will introduce each of these ideas brie?y below and then describe them in detail in subsequent sections.

The?rst contribution of this paper is a new image repre-sentation called an integral image that allows for very fast feature evaluation.Motivated in part by the work of Papa-georgiou et al.our detection system does not work directly with image intensities[10].Like these authors we use a set of features which are reminiscent of Haar Basis func-tions(though we will also use related?lters which are more complex than Haar?lters).In order to compute these fea-tures very rapidly at many scales we introduce the integral image representation for images.The integral image can be computed from an image using a few operations per pixel.

Once computed,any one of these Harr-like features can be computed at any scale or location in constant time.

The second contribution of this paper is a method for constructing a classi?er by selecting a small number of im-portant features using AdaBoost[6].Within any image sub-window the total number of Harr-like features is very large, far larger than the number of pixels.In order to ensure fast classi?cation,the learning process must exclude a large ma-jority of the available features,and focus on a small set of critical features.Motivated by the work of Tieu and Viola, feature selection is achieved through a simple modi?cation of the AdaBoost procedure:the weak learner is constrained so that each weak classi?er returned can depend on only a 1

single feature[2].As a result each stage of the boosting process,which selects a new weak classi?er,can be viewed as a feature selection process.AdaBoost provides an effec-tive learning algorithm and strong bounds on generalization performance[13,9,10].

The third major contribution of this paper is a method for combining successively more complex classi?ers in a cascade structure which dramatically increases the speed of the detector by focusing attention on promising regions of the image.The notion behind focus of attention approaches is that it is often possible to rapidly determine where in an image an object might occur[17,8,1].More complex pro-cessing is reserved only for these promising regions.The key measure of such an approach is the“false negative”rate of the attentional process.It must be the case that all,or almost all,object instances are selected by the attentional ?lter.

We will describe a process for training an extremely sim-ple and ef?cient classi?er which can be used as a“super-vised”focus of attention operator.The term supervised refers to the fact that the attentional operator is trained to detect examples of a particular class.In the domain of face detection it is possible to achieve fewer than1%false neg-atives and40%false positives using a classi?er constructed from two Harr-like features.The effect of this?lter is to reduce by over one half the number of locations where the ?nal detector must be evaluated.

Those sub-windows which are not rejected by the initial classi?er are processed by a sequence of classi?ers,each slightly more complex than the last.If any classi?er rejects the sub-window,no further processing is performed.The structure of the cascaded detection process is essentially that of a degenerate decision tree,and as such is related to the work of Geman and colleagues[1,4].

An extremely fast face detector will have broad prac-tical applications.These include user interfaces,image databases,and teleconferencing.In applications where rapid frame-rates are not necessary,our system will allow for signi?cant additional post-processing and analysis.In addition our system can be implemented on a wide range of small low power devices,including hand-helds and embed-ded processors.In our lab we have implemented this face detector on the Compaq iPaq handheld and have achieved detection at two frames per second(this device has a low power200mips Strong Arm processor which lacks?oating point hardware).

The remainder of the paper describes our contributions and a number of experimental results,including a detailed description of our experimental methodology.Discussion of closely related work takes place at the end of each sec-tion.

2.Features

Our object detection procedure classi?es images based on the value of simple features.There are many motivations

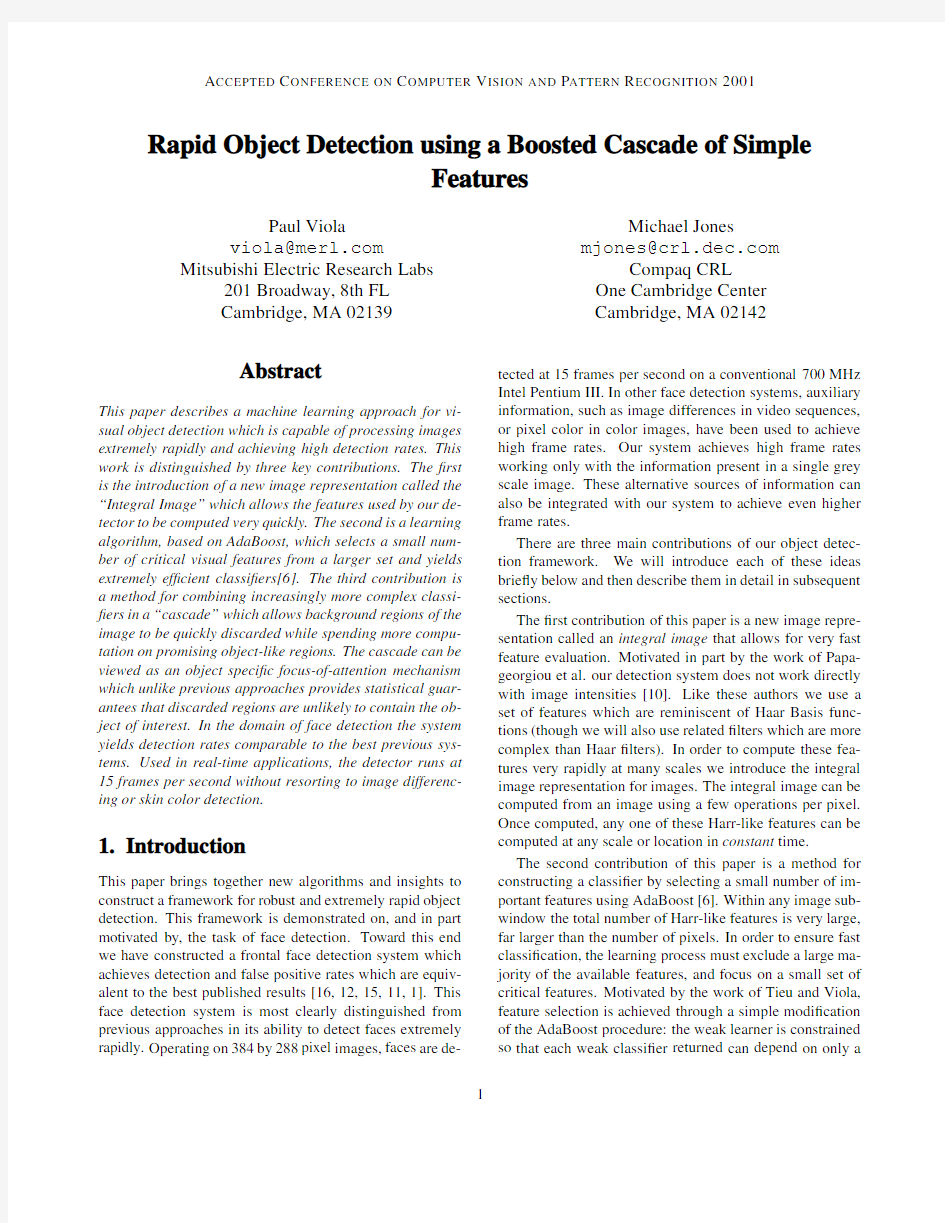

Figure1:Example rectangle features shown relative to the enclosing detection window.The sum of the pixels which lie within the white rectangles are subtracted from the sum of pixels in the grey rectangles.Two-rectangle features are shown in(A)and(B).Figure(C)shows a three-rectangle feature,and(D)a four-rectangle feature.

for using features rather than the pixels directly.The most common reason is that features can act to encode ad-hoc domain knowledge that is dif?cult to learn using a?nite quantity of training data.For this system there is also a second critical motivation for features:the feature based system operates much faster than a pixel-based system.

The simple features used are reminiscent of Haar basis functions which have been used by Papageorgiou et al.[10]. More speci?cally,we use three kinds of features.The value of a two-rectangle feature is the difference between the sum of the pixels within two rectangular regions.The regions have the same size and shape and are horizontally or ver-tically adjacent(see Figure1).A three-rectangle feature computes the sum within two outside rectangles subtracted from the sum in a center rectangle.Finally a four-rectangle feature computes the difference between diagonal pairs of rectangles.

Given that the base resolution of the detector is24x24, the exhaustive set of rectangle features is quite large,over 180,000.Note that unlike the Haar basis,the set of rectan-gle features is overcomplete1.

2.1.Integral Image

Rectangle features can be computed very rapidly using an intermediate representation for the image which we call the integral image.2The integral image at location contains the sum of the pixels above and to the left of,inclusive:

Figure2:The sum of the pixels within rectangle can be computed with four array references.The value of the inte-gral image at location1is the sum of the pixels in rectangle .The value at location2is,at location3is, and at location4is.The sum within can be computed as.

where is the integral image and is the origi-nal https://www.360docs.net/doc/7110167247.html,ing the following pair of recurrences:

(1)

(2) (where is the cumulative row sum,, and)the integral image can be computed in one pass over the original image.

Using the integral image any rectangular sum can be computed in four array references(see Figure2).Clearly the difference between two rectangular sums can be com-puted in eight references.Since the two-rectangle features de?ned above involve adjacent rectangular sums they can be computed in six array references,eight in the case of the three-rectangle features,and nine for four-rectangle fea-tures.

2.2.Feature Discussion

Rectangle features are somewhat primitive when compared with alternatives such as steerable?lters[5,7].Steerable?l-ters,and their relatives,are excellent for the detailed analy-sis of boundaries,image compression,and texture analysis. In contrast rectangle features,while sensitive to the pres-ence of edges,bars,and other simple image structure,are quite coarse.Unlike steerable?lters the only orientations available are vertical,horizontal,and diagonal.The set of rectangle features do however provide a rich image repre-sentation which supports effective learning.In conjunction with the integral image,the ef?ciency of the rectangle fea-ture set provides ample compensation for their limited?ex-ibility.

3.Learning Classi?cation Functions Given a feature set and a training set of positive and neg-ative images,any number of machine learning approaches

could be used to learn a classi?cation function.In our sys-tem a variant of AdaBoost is used both to select a small set of features and train the classi?er[6].In its original form, the AdaBoost learning algorithm is used to boost the clas-si?cation performance of a simple(sometimes called weak) learning algorithm.There are a number of formal guaran-tees provided by the AdaBoost learning procedure.Freund and Schapire proved that the training error of the strong classi?er approaches zero exponentially in the number of rounds.More importantly a number of results were later proved about generalization performance[14].The key insight is that generalization performance is related to the margin of the examples,and that AdaBoost achieves large margins rapidly.

Recall that there are over180,000rectangle features as-sociated with each image sub-window,a number far larger than the number of pixels.Even though each feature can be computed very ef?ciently,computing the complete set is prohibitively expensive.Our hypothesis,which is borne out by experiment,is that a very small number of these features can be combined to form an effective classi?er.The main challenge is to?nd these features.

In support of this goal,the weak learning algorithm is designed to select the single rectangle feature which best separates the positive and negative examples(this is similar to the approach of[2]in the domain of image database re-trieval).For each feature,the weak learner determines the optimal threshold classi?cation function,such that the min-imum number of examples are misclassi?ed.A weak clas-si?er thus consists of a feature,a threshold and

a parity indicating the direction of the inequality sign:

if

otherwise

Here is a24x24pixel sub-window of an image.See Ta-ble1for a summary of the boosting process.

In practice no single feature can perform the classi?ca-tion task with low error.Features which are selected in early rounds of the boosting process had error rates between0.1 and0.3.Features selected in later rounds,as the task be-comes more dif?cult,yield error rates between0.4and0.5.

3.1.Learning Discussion

Many general feature selection procedures have been pro-posed(see chapter8of[18]for a review).Our?nal appli-cation demanded a very aggressive approach which would discard the vast majority of features.For a similar recogni-tion problem Papageorgiou et al.proposed a scheme for fea-ture selection based on feature variance[10].They demon-strated good results selecting37features out of a total1734 features.

Roth et al.propose a feature selection process based on the Winnow exponential perceptron learning rule[11].

The Winnow learning process converges to a solution where many of these weights are zero.Nevertheless a very large

3

Given example images where

for negative and positive examples respec-tively.

Initialize weights for respec-

tively,where and are the number of negatives and

positives respectively.

For:

1.Normalize the weights,

.

The?nal strong classi?er is:

Table1:The AdaBoost algorithm for classi?er learn-ing.Each round of boosting selects one feature from the 180,000potential features.

number of features are retained(perhaps a few hundred or thousand).

3.2.Learning Results

While details on the training and performance of the?nal system are presented in Section5,several simple results merit discussion.Initial experiments demonstrated that a frontal face classi?er constructed from200features yields a detection rate of95%with a false positive rate of1in 14084.These results are compelling,but not suf?cient for many real-world tasks.In terms of computation,this clas-si?er is probably faster than any other published system, requiring0.7seconds to scan an384by288pixel image. Unfortunately,the most straightforward technique for im-proving detection performance,adding features to the clas-si?er,directly increases computation time.

For the task of face detection,the initial rectangle fea-tures selected by AdaBoost are meaningful and easily inter-preted.The?rst feature selected seems to focus on the prop-erty that the region of the eyes is often darker than the

region

Figure3:The?rst and second features selected by Ad-aBoost.The two features are shown in the top row and then overlayed on a typical training face in the bottom row.The ?rst feature measures the difference in intensity between the region of the eyes and a region across the upper cheeks.The feature capitalizes on the observation that the eye region is often darker than the cheeks.The second feature compares the intensities in the eye regions to the intensity across the bridge of the nose.

of the nose and cheeks(see Figure3).This feature is rel-atively large in comparison with the detection sub-window, and should be somewhat insensitive to size and location of the face.The second feature selected relies on the property that the eyes are darker than the bridge of the nose.

4.The Attentional Cascade

This section describes an algorithm for constructing a cas-cade of classi?ers which achieves increased detection per-formance while radically reducing computation time.The key insight is that smaller,and therefore more ef?cient, boosted classi?ers can be constructed which reject many of the negative sub-windows while detecting almost all posi-tive instances(i.e.the threshold of a boosted classi?er can be adjusted so that the false negative rate is close to zero).

Simpler classi?ers are used to reject the majority of sub-windows before more complex classi?ers are called upon to achieve low false positive rates.

The overall form of the detection process is that of a de-generate decision tree,what we call a“cascade”(see Fig-ure4).A positive result from the?rst classi?er triggers the evaluation of a second classi?er which has also been ad-justed to achieve very high detection rates.A positive result from the second classi?er triggers a third classi?er,and so on.A negative outcome at any point leads to the immediate rejection of the sub-window.

Stages in the cascade are constructed by training clas-si?ers using AdaBoost and then adjusting the threshold to minimize false negatives.Note that the default AdaBoost threshold is designed to yield a low error rate on the train-ing data.In general a lower threshold yields higher detec-4

Reject Sub?window

Figure4:Schematic depiction of a the detection cascade.

A series of classi?ers are applied to every sub-window.The initial classi?er eliminates a large number of negative exam-ples with very little processing.Subsequent layers eliminate additional negatives but require additional computation.Af-ter several stages of processing the number of sub-windows have been reduced radically.Further processing can take any form such as additional stages of the cascade(as in our detection system)or an alternative detection system.

tion rates and higher false positive rates.

For example an excellent?rst stage classi?er can be con-structed from a two-feature strong classi?er by reducing the threshold to minimize false negatives.Measured against a validation training set,the threshold can be adjusted to de-tect100%of the faces with a false positive rate of40%.See Figure3for a description of the two features used in this classi?er.

Computation of the two feature classi?er amounts to about60microprocessor instructions.It seems hard to imagine that any simpler?lter could achieve higher rejec-tion rates.By comparison,scanning a simple image tem-plate,or a single layer perceptron,would require at least20 times as many operations per sub-window.

The structure of the cascade re?ects the fact that within any single image an overwhelming majority of sub-windows are negative.As such,the cascade attempts to re-ject as many negatives as possible at the earliest stage pos-sible.While a positive instance will trigger the evaluation of every classi?er in the cascade,this is an exceedingly rare event.

Much like a decision tree,subsequent classi?ers are trained using those examples which pass through all the previous stages.As a result,the second classi?er faces a more dif?cult task than the?rst.The examples which make it through the?rst stage are“harder”than typical exam-ples.The more dif?cult examples faced by deeper classi-?ers push the entire receiver operating characteristic(ROC) curve downward.At a given detection rate,deeper classi-?ers have correspondingly higher false positive rates.4.1.Training a Cascade of Classi?ers

The cascade training process involves two types of trade-offs.In most cases classi?ers with more features will achieve higher detection rates and lower false positive rates. At the same time classi?ers with more features require more time to compute.In principle one could de?ne an optimiza-tion framework in which:i)the number of classi?er stages, ii)the number of features in each stage,and iii)the thresh-old of each stage,are traded off in order to minimize the expected number of evaluated features.Unfortunately?nd-ing this optimum is a tremendously dif?cult problem.

In practice a very simple framework is used to produce an effective classi?er which is highly ef?cient.Each stage in the cascade reduces the false positive rate and decreases the detection rate.A target is selected for the minimum reduction in false positives and the maximum decrease in detection.Each stage is trained by adding features until the target detection and false positives rates are met(these rates are determined by testing the detector on a validation set). Stages are added until the overall target for false positive and detection rate is met.

4.2.Detector Cascade Discussion

The complete face detection cascade has38stages with over 6000features.Nevertheless the cascade structure results in fast average detection times.On a dif?cult dataset,con-taining507faces and75million sub-windows,faces are detected using an average of10feature evaluations per sub-window.In comparison,this system is about15times faster than an implementation of the detection system constructed by Rowley et al.3[12]

A notion similar to the cascade appears in the face de-tection system described by Rowley et al.in which two de-tection networks are used[12].Rowley et https://www.360docs.net/doc/7110167247.html,ed a faster yet less accurate network to prescreen the image in order to ?nd candidate regions for a slower more accurate network. Though it is dif?cult to determine exactly,it appears that Rowley et al.’s two network face system is the fastest exist-ing face detector.4

The structure of the cascaded detection process is es-sentially that of a degenerate decision tree,and as such is related to the work of Amit and Geman[1].Unlike tech-niques which use a?xed detector,Amit and Geman propose an alternative point of view where unusual co-occurrences of simple image features are used to trigger the evaluation of a more complex detection process.In this way the full detection process need not be evaluated at many of the po-tential image locations and scales.While this basic insight

is very valuable,in their implementation it is necessary to ?rst evaluate some feature detector at every location.These features are then grouped to?nd unusual co-occurrences.In practice,since the form of our detector and the features that it uses are extremely ef?cient,the amortized cost of evalu-ating our detector at every scale and location is much faster than?nding and grouping edges throughout the image.

In recent work Fleuret and Geman have presented a face detection technique which relies on a“chain”of tests in or-der to signify the presence of a face at a particular scale and location[4].The image properties measured by Fleuret and Geman,disjunctions of?ne scale edges,are quite different than rectangle features which are simple,exist at all scales, and are somewhat interpretable.The two approaches also differ radically in their learning philosophy.The motivation for Fleuret and Geman’s learning process is density estima-tion and density discrimination,while our detector is purely discriminative.Finally the false positive rate of Fleuret and Geman’s approach appears to be higher than that of previ-ous approaches like Rowley et al.and this approach.Un-fortunately the paper does not report quantitative results of this kind.The included example images each have between 2and10false positives.

5Results

A38layer cascaded classi?er was trained to detect frontal upright faces.To train the detector,a set of face and non-face training images were used.The face training set con-sisted of4916hand labeled faces scaled and aligned to a base resolution of24by24pixels.The faces were ex-tracted from images downloaded during a random crawl of the world wide web.Some typical face examples are shown in Figure5.The non-face subwindows used to train the detector come from9544images which were manually in-spected and found to not contain any faces.There are about 350million subwindows within these non-face images.

The number of features in the?rst?ve layers of the de-tector is1,10,25,25and50features respectively.The remaining layers have increasingly more features.The total number of features in all layers is6061.

Each classi?er in the cascade was trained with the4916 training faces(plus their vertical mirror images for a total of9832training faces)and10,000non-face sub-windows (also of size24by24pixels)using the Adaboost training procedure.For the initial one feature classi?er,the non-face training examples were collected by selecting random sub-windows from a set of9544images which did not con-tain faces.The non-face examples used to train subsequent layers were obtained by scanning the partial cascade across the non-face images and collecting false positives.A max-imum of10000such non-face sub-windows were collected for each layer.

Speed of the Final

Detector Figure5:Example of frontal upright face images used for training.

The speed of the cascaded detector is directly related to the number of features evaluated per scanned sub-window. Evaluated on the MIT+CMU test set[12],an average of10 features out of a total of6061are evaluated per sub-window. This is possible because a large majority of sub-windows are rejected by the?rst or second layer in the cascade.On a700Mhz Pentium III processor,the face detector can pro-cess a384by288pixel image in about.067seconds(us-ing a starting scale of1.25and a step size of1.5described below).This is roughly15times faster than the Rowley-Baluja-Kanade detector[12]and about600times faster than the Schneiderman-Kanade detector[15].

Image Processing

All example sub-windows used for training were vari-ance normalized to minimize the effect of different light-ing conditions.Normalization is therefore necessary during detection as well.The variance of an image sub-window can be computed quickly using a pair of integral images. Recall that

Detector False detections

316595

76.1%91.4%92.1%93.9%

81.1%92.1%93.1%93.7%

83.2%--90.1%

----

--(94.8%)-

Table2:Detection rates for various numbers of false positives on the MIT+CMU test set containing130images and507 faces.

scale with the same cost.Good results were obtained using a set of scales a factor of1.25apart.

The detector is also scanned across location.Subsequent locations are obtained by shifting the window some number of pixels.This shifting process is affected by the scale of the detector:if the current scale is the window is shifted by,where is the rounding operation.

The choice of affects both the speed of the detector as well as accuracy.The results we present are for. We can achieve a signi?cant speedup by setting

with only a slight decrease in accuracy.

Integration of Multiple Detections

Since the?nal detector is insensitive to small changes in translation and scale,multiple detections will usually occur around each face in a scanned image.The same is often true of some types of false positives.In practice it often makes sense to return one?nal detection per face.Toward this end it is useful to postprocess the detected sub-windows in order to combine overlapping detections into a single detection.

In these experiments detections are combined in a very simple fashion.The set of detections are?rst partitioned into disjoint subsets.Two detections are in the same subset if their bounding regions overlap.Each partition yields a single?nal detection.The corners of the?nal bounding region are the average of the corners of all detections in the set.

Experiments on a Real-World Test Set

We tested our system on the MIT+CMU frontal face test set[12].This set consists of130images with507labeled frontal faces.A ROC curve showing the performance of our detector on this test set is shown in Figure6.To create the ROC curve the threshold of the?nal layer classi?er is ad-justed from to.Adjusting the threshold to

will yield a detection rate of0.0and a false positive rate of0.0.Adjusting the threshold to,however,increases both the detection rate and false positive rate,but only to a certain point.Neither rate can be higher than the rate of the detection cascade minus the?nal layer.In effect,a thresh-old of is equivalent to removing that layer.Further increasing the detection and false positive rates requires de-creasing the threshold of the next classi?er in the cascade.

Thus,in order to construct a complete ROC curve,classi?er layers are removed.We use the number of false positives as opposed to the rate of false positives for the x-axis of the ROC curve to facilitate comparison with other systems.To compute the false positive rate,simply divide by the total number of sub-windows scanned.In our experiments,the number of sub-windows scanned is75,081,800.

Unfortunately,most previous published results on face detection have only included a single operating regime(i.e.

single point on the ROC curve).To make comparison with our detector easier we have listed our detection rate for the false positive rates reported by the other systems.Table2 lists the detection rate for various numbers of false detec-tions for our system as well as other published systems.For the Rowley-Baluja-Kanade results[12],a number of differ-ent versions of their detector were tested yielding a number of different results they are all listed in under the same head-ing.For the Roth-Yang-Ahuja detector[11],they reported their result on the MIT+CMU test set minus5images con-taining line drawn faces removed.

Figure7shows the output of our face detector on some test images from the MIT+CMU test set.

A simple voting scheme to further improve results

In table2we also show results from running three de-tectors(the38layer one described above plus two similarly trained detectors)and outputting the majority vote of the three detectors.This improves the detection rate as well as eliminating more false positives.The improvement would be greater if the detectors were more independent.The cor-relation of their errors results in a modest improvement over the best single detector.

6Conclusions

We have presented an approach for object detection which minimizes computation time while achieving high detection accuracy.The approach was used to construct a face de-tection system which is approximately15faster than any previous approach.

This paper brings together new algorithms,representa-tions,and insights which are quite generic and may well 7

Figure 7:Output of our face detector on a number of test images from the MIT+CMU test

set.

Figure 6:ROC curve for our face detector on the MIT+CMU test set.The detector was run using a step size of 1.0and starting scale of 1.0(75,081,800sub-windows scanned).

have broader application in computer vision and image pro-cessing.

Finally this paper presents a set of detailed experiments on a dif?cult face detection dataset which has been widely studied.This dataset includes faces under a very wide range of conditions including:illumination,scale,pose,and cam-era variation.Experiments on such a large and complex dataset are dif?cult and time consuming.Nevertheless sys-tems which work under these conditions are unlikely to be brittle or limited to a single set of conditions.More impor-tantly conclusions drawn from this dataset are unlikely to be experimental artifacts.

References

[1]Y .Amit,D.Geman,and K.Wilder.Joint induction of shape

features and tree classi?ers,1997.[2]Anonymous.Anonymous.In Anonymous ,2000.

[3] F.Crow.Summed-area tables for texture mapping.In

Proceedings of SIGGRAPH ,volume 18(3),pages 207–212,1984.[4] F.Fleuret and D.Geman.Coarse-to-?ne face detection.Int.

https://www.360docs.net/doc/7110167247.html,puter Vision ,2001.[5]William T.Freeman and Edward H.Adelson.The design

and use of steerable ?lters.IEEE Transactions on Pattern Analysis and Machine Intelligence ,13(9):891–906,1991.[6]Yoav Freund and Robert E.Schapire.A decision-theoretic

generalization of on-line learning and an application to boosting.In Computational Learning Theory:Eurocolt ’95,pages 23–37.Springer-Verlag,1995.[7]H.Greenspan,S.Belongie,R.Gooodman,P.Perona,S.Rak-shit,and C.Anderson.Overcomplete steerable pyramid ?l-ters and rotation invariance.In Proceedings of the IEEE Con-ference on Computer Vision and Pattern Recognition ,1994.

[8]L.Itti,C.Koch,and E.Niebur.A model of saliency-based

visual attention for rapid scene analysis.IEEE Patt.Anal.Mach.Intell.,20(11):1254–1259,November 1998.[9]Edgar Osuna,Robert Freund,and Federico Girosi.Training

support vector machines:an application to face detection.In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition ,1997.[10] C.Papageorgiou,M.Oren,and T.Poggio.A general frame-work for object detection.In International Conference on Computer Vision ,1998.[11] D.Roth,M.Yang,and N.Ahuja.A snowbased face detector.

In Neural Information Processing 12,2000.[12]H.Rowley,S.Baluja,and T.Kanade.Neural network-based

face detection.In IEEE Patt.Anal.Mach.Intell.,volume 20,pages 22–38,1998.[13]R.E.Schapire,Y .Freund,P.Bartlett,and W.S.Lee.Boost-ing the margin:a new explanation for the effectiveness of voting methods.Ann.Stat.,26(5):1651–1686,1998.[14]Robert E.Schapire,Yoav Freund,Peter Bartlett,and

Wee Sun Lee.Boosting the margin:A new explanation for the effectiveness of voting methods.In Proceedings of the Fourteenth International Conference on Machine Learning ,1997.[15]H.Schneiderman and T.Kanade.A statistical method for 3D

object detection applied to faces and cars.In International Conference on Computer Vision ,2000.

8

[16]K.Sung and T.Poggio.Example-based learning for view-

based face detection.In IEEE Patt.Anal.Mach.Intell.,vol-

ume20,pages39–51,1998.

[17]J.K.Tsotsos,S.M.Culhane,W.Y.K.Wai,https://www.360docs.net/doc/7110167247.html,i,N.Davis,

and F.Nu?o.Modeling visual-attention via selective tun-

ing.Arti?cial Intelligence Journal,78(1-2):507–545,Octo-

ber1995.

[18]Andrew Webb.Statistical Pattern Recognition.Oxford Uni-

versity Press,New York,1999.

9

最小二乘法及其应用..

最小二乘法及其应用 1. 引言 最小二乘法在19世纪初发明后,很快得到欧洲一些国家的天文学家和测地学家的广泛关注。据不完全统计,自1805年至1864年的60年间,有关最小二乘法的研究论文达256篇,一些百科全书包括1837年出版的大不列颠百科全书第7版,亦收入有关方法的介绍。同时,误差的分布是“正态”的,也立刻得到天文学家的关注及大量经验的支持。如贝塞尔( F. W. Bessel, 1784—1846)对几百颗星球作了三组观测,并比较了按照正态规律在给定范围内的理论误差值和实际值,对比表明它们非常接近一致。拉普拉斯在1810年也给出了正态规律的一个新的理论推导并写入其《分析概论》中。正态分布作为一种统计模型,在19世纪极为流行,一些学者甚至把19世纪的数理统计学称为正态分布的统治时代。在其影响下,最小二乘法也脱出测量数据意义之外而发展成为一个包罗极大,应用及其广泛的统计模型。到20世纪正态小样本理论充分发展后,高斯研究成果的影响更加显著。最小二乘法不仅是19世纪最重要的统计方法,而且还可以称为数理统计学之灵魂。相关回归分析、方差分析和线性模型理论等数理统计学的几大分支都以最小二乘法为理论基础。正如美国统计学家斯蒂格勒( S. M. Stigler)所说,“最小二乘法之于数理统计学犹如微积分之于数学”。最小二乘法是参数回归的最基本得方法所以研究最小二乘法原理及其应用对于统计的学习有很重要的意义。 2. 最小二乘法 所谓最小二乘法就是:选择参数10,b b ,使得全部观测的残差平方和最小. 用数学公式表示为: 21022)()(m in i i i i i x b b Y Y Y e --=-=∑∑∑∧ 为了说明这个方法,先解释一下最小二乘原理,以一元线性回归方程为例. i i i x B B Y μ++=10 (一元线性回归方程)

数据库死锁问题总结

数据库死锁问题总结 1、死锁(Deadlock) 所谓死锁:是指两个或两个以上的进程在执行过程中,因争夺资源而造 成的一种互相等待的现象,若无外力作用,它们都将无法推进下去。此时称系 统处于死锁状态或系统产生了死锁,这些永远在互相等待的进程称为死锁进程。由于资源占用是互斥的,当某个进程提出申请资源后,使得有关进程在无外力 协助下,永远分配不到必需的资源而无法继续运行,这就产生了一种特殊现象 死锁。一种情形,此时执行程序中两个或多个线程发生永久堵塞(等待),每 个线程都在等待被其他线程占用并堵塞了的资源。例如,如果线程A锁住了记 录1并等待记录2,而线程B锁住了记录2并等待记录1,这样两个线程就发 生了死锁现象。计算机系统中,如果系统的资源分配策略不当,更常见的可能是 程序员写的程序有错误等,则会导致进程因竞争资源不当而产生死锁的现象。 锁有多种实现方式,比如意向锁,共享-排他锁,锁表,树形协议,时间戳协 议等等。锁还有多种粒度,比如可以在表上加锁,也可以在记录上加锁。(回滚 一个,让另一个进程顺利进行) 产生死锁的原因主要是: (1)系统资源不足。 (2)进程运行推进的顺序不合适。 (3)资源分配不当等。 如果系统资源充足,进程的资源请求都能够得到满足,死锁出现的可能 性就很低,否则就会因争夺有限的资源而陷入死锁。其次,进程运行推进顺序 与速度不同,也可能产生死锁。 产生死锁的四个必要条件: (1)互斥条件:一个资源每次只能被一个进程使用。 (2)请求与保持条件:一个进程因请求资源而阻塞时,对已获得的资源保持不放。 破解:静态分配(分配全部资源) (3)不剥夺条件:进程已获得的资源,在末使用完之前,不能强行剥夺。 破解:可剥夺 (4)循环等待条件:若干进程之间形成一种头尾相接的循环等待资源关系。 破解:有序分配 这四个条件是死锁的必要条件,只要系统发生死锁,这些条件必然成立,而只要上述条件之一不满足,就不会发生死锁。 死锁的预防和解除:

我的java基础题和答案详解

If语句相关训练 1. (标识符命名)下面几个变量中,那些是对的那些是错的错的请说明理由(CDF) A. ILoveJava B. $20 C. learn@java D. E. Hello_World F. 2tigers 答:标识符中不能有@,不能含有点号,开头只能是字母和$ 2. (Java 程序的编译与运行)假设有如下程序: package public class HelloWorld{ public static void main(String args[]){ "Hello World"); } } 问: 1)假设这个代码存在文件中,那这个程序能够编译通过为什么 如果编译不通过,应该如何改进 答:不能,含有public的类文件名必须要和类名一致;应将改写成 2)假设这个.java 文件放在C:\javafile\目录下,CLASSPATH=.,则生成的.class 文件应该放在什么目录下如何运行 答:.class应该存放在C:\javafile\目录下 3. (if 语句)读入一个整数,判断其是奇数还是偶数 public class Test { int n; If(n%2==0){ 是偶数”); }else{ 是奇数”); } } 4. (操作符)有如下代码: int a = 5; int b = (a++) + (--a) +(++a); 问执行完之后,b 的结果是多少 答:16 解析a先把5赋值给b让后再自增1相当于(b=5+(--6)+(++5))

5. (基本类型的运算)一家商场在举行打折促销,所有商品都进行8 折优惠。一 位程序员把这个逻辑写成: short price = ...; (操作符)有如下代码: a = (a>b)a:b; 请问这段代码完成了什么功能。 答:这段代码的作用是取最大值,当a>b成立时,a=a;当a>b不成立时,a=b; 8. (if 语句)读入一个整数,表示一个人的年龄。如果小于6 岁,则输出“儿童”,6 岁到13 岁,输出“少儿”;14 岁到18 岁,输出“青少年”;18 岁到35 岁,输出“青年”;35 岁到50 岁,输出“中年”;50 岁以上输出“中老年”。 答:public class AgeTest { public static void main(String[] args) { int n=12; if(n<6){

1、曲线拟合及其应用综述

曲线拟合及其应用综述 摘要:本文首先分析了曲线拟合方法的背景及在各个领域中的应用,然后详细介绍了曲线拟合方法的基本原理及实现方法,并结合一个具体实例,分析了曲线拟合方法在柴油机故障诊断中的应用,最后对全文内容进行了总结,并对曲线拟合方法的发展进行了思考和展望。 关键词:曲线拟合最小二乘法故障模式识别柴油机故障诊断 1背景及应用 在科学技术的许多领域中,常常需要根据实际测试所得到的一系列数据,求出它们的函数关系。理论上讲,可以根据插值原则构造n 次多项式Pn(x),使得Pn(x)在各测试点的数据正好通过实测点。可是, 在一般情况下,我们为了尽量反映实际情况而采集了很多样点,造成了插值多项式Pn(x)的次数很高,这不仅增大了计算量,而且影响了函数的逼近程度;再就是由于插值多项式经过每一实测样点,这样就会保留测量误差,从而影响逼近函数的精度,不易反映实际的函数关系。因此,我们一般根据已知实际测试样点,找出被测试量之间的函数关系,使得找出的近似函数曲线能够充分反映实际测试量之间的关系,这就是曲线拟合。 曲线拟合技术在图像处理、逆向工程、计算机辅助设计以及测试数据的处理显示及故障模式诊断等领域中都得到了广泛的应用。 2 基本原理 2.1 曲线拟合的定义 解决曲线拟合问题常用的方法有很多,总体上可以分为两大类:一类是有理论模型的曲线拟合,也就是由与数据的背景资料规律相适应的解析表达式约束的曲线拟合;另一类是无理论模型的曲线拟合,也就是由几何方法或神经网络的拓扑结构确定数据关系的曲线拟合。 2.2 曲线拟合的方法 解决曲线拟合问题常用的方法有很多,总体上可以分为两大类:一类是有理论模型的曲线拟合,也就是由与数据的背景资料规律相适应的解析表达式约束的曲线拟合;另一类是无理论模型的曲线拟合,也就是由几何方法或神经网络的拓扑结构确定数据关系的曲线拟合。 2.2.1 有理论模型的曲线拟合 有理论模型的曲线拟合适用于处理有一定背景资料、规律性较强的拟合问题。通过实验或者观测得到的数据对(x i,y i)(i=1,2, …,n),可以用与背景资料规律相适应的解析表达式y=f(x,c)来反映x、y之间的依赖关系,y=f(x,c)称为拟合的理论模型,式中c=c0,c1,…c n是待定参数。当c在f中线性出现时,称为线性模型,否则称为非线性模型。有许多衡量拟合优度的标准,最常用的方法是最小二乘法。 2.2.1.1 线性模型的曲线拟合 线性模型中与背景资料相适应的解析表达式为: ε β β+ + =x y 1 (1) 式中,β0,β1未知参数,ε服从N(0,σ2)。 将n个实验点分别带入表达式(1)得到: i i i x yε β β+ + = 1 (2) 式中i=1,2,…n,ε1, ε2,…, εn相互独立并且服从N(0,σ2)。 根据最小二乘原理,拟合得到的参数应使曲线与试验点之间的误差的平方和达到最小,也就是使如下的目标函数达到最小: 2 1 1 ) ( i i n i i x y Jε β β- - - =∑ = (3) 将试验点数据点入之后,求目标函数的最大值问题就变成了求取使目标函数对待求参数的偏导数为零时的参数值问题,即: ) ( 2 1 1 = - - - - = ? ?∑ = i i n i i x y J ε β β β (4)

死锁问题解决方法

Sqlcode -244 死锁问题解决 版本说明 事件日期作者说明 创建09年4月16日Alan 创建文档 一、分析产生死锁的原因 这个问题通常是因为锁表产生的。要么是多个用户同时访问数据库导致该问题,要么是因为某个进程死了以后资源未释放导致的。 如果是前一种情况,可以考虑将数据库表的锁级别改为行锁,来减少撞锁的机会;或在应用程序中,用set lock mode wait 3这样的语句,在撞锁后等待若干秒重试。 如果是后一种情况,可以在数据库端用onstat -g ses/onstat -g sql/onstat -k等命令找出锁表的进程,用onmode -z命令结束进程;如果不行,就需要重新启动数据库来释放资源。 二、方法一 onmode -u 将数据库服务器强行进入单用户模式,来释放被锁的表。注意:生产环境不适合。 三、方法二 1、onstat -k |grep HDR+X 说明:HDR+X为排他锁,HDR 头,X 互斥。返回信息里面的owner项是正持有锁的线程的共享内存地址。 2、onstat -u |grep c60a363c 说明:c60a363c为1中查到的owner内容。sessid是会话标识符编号。 3、onstat -g ses 20287 说明:20287为2中查到的sessid内容。Pid为与此会话的前端关联的进程标识符。 4、onstat -g sql 20287

说明:20287为2中查到的sessid内容。通过上面的命令可以查看执行的sql语句。 5、ps -ef |grep 409918 说明:409918为4中查到的pid内容。由此,我们可以得到锁表的进程。可以根据锁表进程的重要程度采取相应的处理方法。对于重要且该进程可以自动重联数据库的进程,可以用onmode -z sessid的方法杀掉锁表session。否则也可以直接杀掉锁表的进程 kill -9 pid。 四、避免锁表频繁发生的方法 4.1将页锁改为行锁 1、执行下面sql语句可以查询当前库中所有为页锁的表名: select tabname from systables where locklevel='P' and tabid > 99 2、执行下面语句将页锁改为行锁 alter table tabname lock mode(row) 4.2统计更新 UPDATE STATISTICS; 4.3修改数据库配置onconfig OPTCOMPIND参数帮助优化程序为应用选择合适的访问方法。 ?如果OPTCOMPIND等于0,优化程序给予现存索引优先权,即使在表扫描比较快时。 ?如果OPTCOMPIND设置为1,给定查询的隔离级设置为Repeatable Read时,优化程序才使用索引。 ?如果OPTCOMPIND等于2,优化程序选择基于开销选择查询方式。,即使表扫描可以临时锁定整个表。 *建议设置:OPTCOMPIND 0 # To hint the optimizer 五、起停informix数据库 停掉informix数据库 onmode -ky 启动informix数据库 oninit 注意千万别加-i参数,这样会初始化表空间,造成数据完全丢失且无法挽回。

精选30道Java笔试题解答

都是一些非常非常基础的题,是我最近参加各大IT公司笔试后靠记忆记下来的,经过整理献给与我一样参加各大IT校园招聘的同学们,纯考Java基础功底,老手们就不用进来了,免得笑话我们这些未出校门的孩纸们,但是IT公司就喜欢考这些基础的东西,所以为了能进大公司就~~~当复习期末考吧。花了不少时间整理,在整理过程中也学到了很多东西,请大家认真对待每一题~~~ 下面都是我自己的答案非官方,仅供参考,如果有疑问或错误请一定要提出来,大家一起进步啦~~~ 1. 下面哪些是Thread类的方法() A start() B run() C exit() D getPriority() 答案:ABD 解析:看Java API docs吧:https://www.360docs.net/doc/7110167247.html,/javase/7/docs/api/,exit()是System类的方法,如System.exit(0)。 2. 下面关于https://www.360docs.net/doc/7110167247.html,ng.Exception类的说法正确的是() A 继承自Throwable B Serialable CD 不记得,反正不正确 答案:A 解析:Java异常的基类为https://www.360docs.net/doc/7110167247.html,ng.Throwable,https://www.360docs.net/doc/7110167247.html,ng.Error和https://www.360docs.net/doc/7110167247.html,ng.Exception继承Throwable,RuntimeException和其它的Exception等继承Exception,具体的RuntimeException继承RuntimeException。扩展:错误和异常的区别(Error vs Exception) 1) https://www.360docs.net/doc/7110167247.html,ng.Error: Throwable的子类,用于标记严重错误。合理的应用程序不应该去try/catch这种错误。绝大多数的错误都是非正常的,就根本不该出现的。 https://www.360docs.net/doc/7110167247.html,ng.Exception: Throwable的子类,用于指示一种合理的程序想去catch的条件。即它仅仅是一种程序运行条件,而非严重错误,并且鼓励用户程序去catch它。 2) Error和RuntimeException及其子类都是未检查的异常(unchecked exceptions),而所有其他的Exception 类都是检查了的异常(checked exceptions). checked exceptions: 通常是从一个可以恢复的程序中抛出来的,并且最好能够从这种异常中使用程序恢复。比如FileNotFoundException, ParseException等。 unchecked exceptions: 通常是如果一切正常的话本不该发生的异常,但是的确发生了。比如ArrayIndexOutOfBoundException, ClassCastException等。从语言本身的角度讲,程序不该去catch这类异常,虽然能够从诸如RuntimeException这样的异常中catch并恢复,但是并不鼓励终端程序员这么做,因为完全没要必要。因为这类错误本身就是bug,应该被修复,出现此类错误时程序就应该立即停止执行。因此, 面对Errors和unchecked exceptions应该让程序自动终止执行,程序员不该做诸如try/catch这样的事情,而是应该查明原因,修改代码逻辑。 RuntimeException:RuntimeException体系包括错误的类型转换、数组越界访问和试图访问空指针等等。

最小二乘法原理及应用【文献综述】

毕业论文文献综述 信息与计算科学 最小二乘法的原理及应用 一、国内外状况 国际统计学会第56届大会于2007年8月22-29日在美丽的大西洋海滨城市、葡萄牙首都里斯本如期召开。应大会组委会的邀请,以会长李德水为团长的中国统计学会代表团一行29人注册参加了这次大会。北京市统计学会、山东省统计学会,分别组团参加了这次大会。中国统计界(不含港澳台地区)共有58名代表参加了这次盛会。本届大会的特邀论文会议共涉及94个主题,每个主题一般至少有3-5位代表做学术演讲和讨论。通过对大会论文按研究内容进行归纳,特邀论文大致可以分为四类:即数理统计,经济、社会统计和官方统计,统计教育和统计应用。 数理统计方面。数理统计作为统计科学的一个重要部分,特别是随机过程和回归分析依然展现着古老理论的活力,一直受到统计界的重视并吸引着众多的研究者。本届大会也不例外。 二、进展情况 数理统计学19世纪的数理统计学史, 就是最小二乘法向各个应用领域拓展的历史席卷了统计大部分应用的几个分支——相关回归分析, 方差分析和线性模型理论等, 其灵魂都在于最小二乘法; 不少近代的统计学研究是在此法的基础上衍生出来, 作为其进一步发展或纠正其不足之处而采取的对策, 这包括回归分析中一系列修正最小二乘法而导致的估计方法。 数理统计学的发展大致可分 3 个时期。① 20 世纪以前。这个时期又可分成两段,大致上可以把高斯和勒让德关于最小二乘法用于观测数据的误差分析的工作作为分界线,前段属萌芽时期,基本上没有超出描述性统计量的范围。后一阶段可算作是数理统计学的幼年阶段。首先,强调了推断的地位,而摆脱了单纯描述的性质。由于高斯等的工作揭示了最小二乘法的重要性,学者们普遍认为,在实际问题中遇见的几乎所有的连续变量,都可以满意地用最小二乘法来刻画。这种观点使关于最小二乘法得到了深入的发展,②20世纪初到第二次世界大战结束。这是数理统计学蓬勃发展达到成熟的时期。许多重要的基本观点和方法,以及数理统计学的主要分支学科,都是在这个时期建立和发展起来的。这个时期的成就,包含了至今仍在广泛使用的大多数统计方法。在其发展中,以英国统计学家、生物学家费希尔为代表的英国学派起了主导作用。③战后时期。这一时期中,数理统计学在应用和理论两方面继续获得很大的进展。

【VIP专享】Java中String类的方法详解

Java中String类的方法详解 JJava中String 类的方法及说明 String : 字符串类型 一、构造函数 String(byte[ ]bytes ):通过byte数组构造字符串对象。 String(char[ ]value ):通过char数组构造字符串对象。 String(Sting original ):构造一个original的副本。即:拷贝一个original。 String(StringBuffer buffer ):通过StringBuffer数组构造字符串对象。例如: byte[] b = {'a','b','c','d','e','f','g','h','i','j'}; char[] c = {'0','1','2','3','4','5','6','7','8','9'}; String sb = new String(b); //abcdefghij String sb_sub = new String(b,3/*offset*/,2/*length*/); //de String sc = new String(c); //0123456789 String sc_sub = new String(c,3,2); //34 String sb_copy = new String(sb); //abcdefghij System.out.println("sb:"+sb); System.out.println("sb_sub:"+sb_sub); System.out.println("sc:"+sc); System.out.println("sc_sub:"+sc_sub); System.out.println("sb_copy:"+sb_copy); 输出结果:sb:abcdefghij sb_sub:de sc:0123456789 sc_sub:34 sb_copy:abcdefghij 二、方法: 说明:①、所有方法均为public。 ②、书写格式: [修饰符] <返回类型><方法名([参数列表])> 0.public static int parseInt(String s) public static byte parseByte(String s) public static boolean parseBoolean(String s) public static short parseShort(String s) public static long parseLong(String s) public static double parseDouble(String s) 例如:可以将“数字”格式的字符串,转化为相应的基本数据类型 int i=Integer.pareInt(“123”)

最小二乘法综述及举例

最小二乘法综述及算例 一最小二乘法的历史简介 1801年,意大利天文学家朱赛普·皮亚齐发现了第一颗小行星谷神星。经过40天的跟踪观测后,由于谷神星运行至太阳背后,使得皮亚齐失去了谷神星的位置。随后全世界的科学家利用皮亚齐的观测数据开始寻找谷神星,但是根据大多数人计算的结果来寻找谷神星都没有结果。时年24岁的高斯也计算了谷神星的轨道。奥地利天文学家海因里希·奥尔伯斯根据高斯计算出来的轨道重新发现了谷神星。 高斯使用的最小二乘法的方法发表于1809年他的著作《天体运动论》中。 经过两百余年后,最小二乘法已广泛应用与科学实验和工程技术中,随着现代电子计算机的普及与发展,这个方法更加显示出其强大的生命力。 二最小二乘法原理 最小二乘法的基本原理是:成对等精度测得的一组数据),...,2,1(,n i y x i i =,是找出一条最佳的拟合曲线,似的这条曲线上的个点的值与测量值的差的平方和在所有拟合曲线中最小。 设物理量y 与1个变量l x x x ,...,2,1间的依赖关系式为:)(,...,1,0;,...,2,1n l a a a x x x f y =。 其中n a a a ,...,1,0是n +l 个待定参数,记()2 1 ∑=- = m i i i y v s 其中 是测量值, 是由己求 得的n a a a ,...,1,0以及实验点),...,2,1)(,...,(;,2,1m i v x x x i il i i =得出的函数值 )(,...,1,0;,...,2,1n il i i a a a x x x f y =。 在设计实验时, 为了减小误差, 常进行多点测量, 使方程式个数大于待定参数的个数, 此时构成的方程组称为矛盾方程组。通过最小二乘法转化后的方程组称为正规方程组(此时方程式的个数与待定参数的个数相等) 。我们可以通过正规方程组求出a 最小二乘法又称曲线拟合, 所谓“ 拟合” 即不要求所作的曲线完全通过所有的数据点, 只要求所得的曲线能反映数据的基本趋势。 三曲线拟合 曲线拟合的几何解释: 求一条曲线, 使数据点均在离此曲线的上方或下方不远处。 (1)一元线性拟合 设变量y 与x 成线性关系x a a y 10+=,先已知m 个实验点),...,2,1(,m i v x i i =,求两个未知参数1,0a a 。 令()2 1 10∑ =--=m i i i x a a y s ,则1,0a a 应满足1,0,0==??i a s i 。 即 i v i v

《操作系统原理》5资源管理(死锁)习题

第五章死锁练习题 (一)单项选择题 1.系统出现死锁的根本原因是( )。 A.作业调度不当B.系统中进程太多C.资源的独占性D.资源管理和进程推进顺序都不得当 2.死锁的防止是根据( )采取措施实现的。 A.配置足够的系统资源B.使进程的推进顺序合理 C.破坏产生死锁的四个必要条件之一D.防止系统进入不安全状态 3.采用按序分配资源的策略可以防止死锁.这是利用了使( )条件不成立。 A.互斥使用资源B循环等待资源C.不可抢夺资源D.占有并等待资源 4.可抢夺的资源分配策略可预防死锁,但它只适用于( )。 A.打印机B.磁带机C.绘图仪D.主存空间和处理器 5.进程调度算法中的( )属于抢夺式的分配处理器的策略。 A.时间片轮转算法B.非抢占式优先数算法C.先来先服务算法D.分级调度算法 6.用银行家算法避免死锁时,检测到( )时才分配资源。 A.进程首次申请资源时对资源的最大需求量超过系统现存的资源量 B.进程己占用的资源数与本次申请资源数之和超过对资源的最大需求量 C.进程已占用的资源数与本次申请的资源数之和不超过对资源的最大需求量,且现存资源能满足尚需的最大资源量 D进程已占用的资源数与本次申请的资源数之和不超过对资源的最大需求量,且现存资源能满足本次申请量,但不能满足尚需的最大资源量 7.实际的操作系统要兼顾资源的使用效率和安全可靠,对资源的分配策略,往往采用( )策略。 A死锁的防止B.死锁的避免C.死锁的检测D.死锁的防止、避免和检测的混合 (二)填空题 1.若系统中存在一种进程,它们中的每一个进程都占有了某种资源而又都在等待其中另一个进程所占用的资源。这种等待永远不能结束,则说明出现了______。 2.如果操作系统对______或没有顾及进程______可能出现的情况,则就可能形成死锁。 3.系统出现死锁的四个必要条件是:互斥使用资源,______,不可抢夺资源和______。 4.如果进程申请一个某类资源时,可以把该类资源中的任意一个空闲资源分配给进程,则说该类资源中的所有资源是______。 5.如果资源分配图中无环路,则系统中______发生。 6.为了防止死锁的发生,只要采用分配策略使四个必要条件中的______。 7.使占有并等待资源的条件不成立而防止死锁常用两种方法:______和______. 8静态分配资源也称______,要求每—个进程在______就申请它需要的全部资源。 9.释放已占资源的分配策略是仅当进程______时才允许它去申请资源。 10.抢夺式分配资源约定,如果一个进程已经占有了某些资源又要申请新资源,而新资源不能满足必须等待时、系统可以______该进程已占有的资源。 11.目前抢夺式的分配策略只适用于______和______。 12.对资源采用______的策略可以使循环等待资源的条件不成立。 13.如果操作系统能保证所有的进程在有限的时间内得到需要的全部资源,则称系统处于______。14.只要能保持系统处于安全状态就可______的发生。 15.______是一种古典的安全状态测试方法。 16.要实现______,只要当进程提出资源申请时,系统动态测试资源分配情况,仅当能确保系统安全时才把资源分配给进程。

C++ string 详解

C++ string 详解 之所以抛弃char*的字符串而选用C++标准程序库中的string类,是因为他和前者比较起来,不必担心内存是否足够、字符串长度等等,而且作为一个类出现,他集成的操作函数足以完成我们大多数情况下(甚至是100%)的需要。我们可以用= 进行赋值操作,== 进行比较,+ 做串联(是不是很简单?)。我们尽可以把它看成是C++的基本数据类型。 好了,进入正题……… 首先,为了在我们的程序中使用string类型,我们必须包含头文件

操作系统死锁练习及答案

死锁练习题 (一)单项选择题 l系统出现死锁的根本原因是( )。 A.作业调度不当 B.系统中进程太多 C.资源的独占性 D.资源管理和进程推进顺序都不得当 2.死锁的防止是根据( )采取措施实现的。 A.配置足够的系统资源 B.使进程的推进顺序合理 C.破坏产生死锁的四个必要条件之一 D.防止系统进入不安全状态 3.采用按序分配资源的策略可以防止死锁.这是利用了使( )条件不成立。 A.互斥使用资源 B循环等待资源 c.不可抢夺资源 D.占有并等待资源 4.可抢夺的资源分配策略可预防死锁,但它只适用于( )。A.打印机 B.磁带机 c.绘图仪 D.主存空间和处理器 5.进程调度算法中的( )属于抢夺式的分配处理器的策略。A.时间片轮转算法 B.非抢占式优先数算法 c.先来先服务算法 D.分级调度算法 6.用银行家算法避免死锁时,检测到( )时才分配资源。 A.进程首次申请资源时对资源的最大需求量超过系统现存的资源量 B.进程己占用的资源数与本次申请资源数之和超过对资源的最大需求量 c.进程已占用的资源数与本次申请的资源数之和不超过对资源的最大需求量,且现存资源能满足尚需的最大资源量 D进程已占用的资源数与本次申请的资源数之和不超过对资源的最大需求量,且现存资源能满足本次申请量,但不能满足尚需的最大资源量 7.实际的操作系统要兼顾资源的使用效率和安全可靠,对资源的分配策略,往往采用 ( )策略。 A死锁的防止 B.死锁的避免 c.死锁的检测 D.死锁的防止、避免和检测的混合(一)单项选择题 1.D 2.C 3.B 4.D 5.A 6 C 7 D (二)填空题 l若系统中存在一种进程,它们中的每一个进程都占有了某种资源而又都在等待其中另一个进程所占用的资源。这种等待永远不能结束,则说明出现了______。 2.如果操作系统对 ______或没有顾及进程______可能出现的情况,则就可能形成死锁。3.系统出现死锁的四个必要条件是:互斥使用资源,______,不可抢夺资源和______。 4.如果进程申请一个某类资源时,可以把该类资源中的任意一个空闲资源分配给进程,则说该类资源中的所有资源是______。 5.如果资源分配图中无环路,则系统中______发生。 6.为了防止死锁的发生,只要采用分配策略使四个必要条件中的______。 7.使占有并等待资源的条件不成立而防止死锁常用两种方法:______和______. 8静态分配资源也称______,要求每—个进程在______就申请它需要的全部资源。 9.释放已占资源的分配策略是仅当进程______时才允许它去申请资源。 10抢夺式分配资源约定,如果一个进程已经占有了某些资源又要申请新资源,而新资源不能满足必须等待时、系统可以______该进程已占有的资源。 11.目前抢夺式的分配策略只适用于______和______。 12.对资源采用______的策略可以使循环等待资源的条件不成立。 13.如果操作系统能保证所有的进程在有限的时间内得到需要的全部资源,则称系统处于______。 14.只要能保持系统处于安全状态就可______的发生。 15.______是一种古典的安全状态测试方法。 16.要实现______,只要当进程提出资源申请时,系统动态测试资源分配情况,仅当能确保系统安全时才把资源分配给进程。 17.可以证明,M个同类资源被n个进程共享时,只要不等式______成立,则系统一定不会发生死锁,其中x为每个进程申请该类资源的最大量。 18.______对资源的分配不加限制,只要有剩余的资源,就可把资源分配给申请者。 19.死锁检测方法要解决两个问题,一是______是否出现了死锁,二是当有死锁发生时怎样去______。 20.对每个资源类中只有一个资源的死锁检测程序根据______和______两张表中记录的资源情况,把进程等待资源的关系在矩阵中表示出

C++ string

C++ string介绍 char*的字符串和C++标准程序库中的string类相比,后者不必担心内存是否足够、字符串长度等等,而且作为一个类出现,他集成的操作函数足以完成我们大多数情况下(甚至是100%)的需要。我们可以用 = 进行赋值操作,== 进行比较,+ 做串联(是不是很简单?)。我们尽可以把它看成是C++的基本数据类型。 好了,进入正题………- 首先,为了在我们的程序中使用string类型,我们必须包含头文件。如下: #include //注意这里不是string.h string.h是C字符串头文件 1.声明一个C++字符串 声明一个字符串变量很简单: string Str; 这样我们就声明了一个字符串变量,但既然是一个类,就有构造函数和析构函数。上面的声明没有传入参数,所以就直接使用了string的默认的构造函数,这个函数所作的就是把Str初始化为一个空字符串。String类的构造函数和析构函数如下: a) string s; //生成一个空字符串s b) string s(str) //拷贝构造函数生成str的复制品 c) string s(str,stridx) //将字符串str内“始于位置stridx”的部分当作字符串的初值 d) string s(str,stridx,strlen) //将字符串str内“始于stridx且长度顶多strlen”的部分作为字符串的初值 e) string s(cstr) //将C字符串作为s的初值 f) string s(chars,chars_len) //将C字符串前chars_len个字符作为字符串s的初值。 g) string s(num,c) //生成一个字符串,包含num个c字符 h) string s(beg,end) //以区间beg;end(不包含end)内的字符作为字符串s的初值 i) s.~string() //销毁所有字符,释放内存 都很简单,我就不解释了。 2.字符串操作函数 这里是C++字符串的重点,我先把各种操作函数罗列出来,不喜欢把所有函数都看完的人可以在这里找自己喜欢的函数,再到后面看他的详细解释。 a) =,assign() //赋以新值

ABB机器人-RAPID程序指令与功能简述

5.6 RAPID程序指令与功能简述5. 6.1 程序执行的控制 1. 程序的调用 指令说明 ProcCall 调用例行程序 CallByVar 通过带变量的例行程序名称调用例行程序 RETURN 返回原例行程序 2. 例行程序内的逻辑控制 指令说明 Compact IF 如果条件满足,就执行下一条指令 IF 当满足不同的条件时,执行对应的程序 FOR 根据指定的次数,重复执行对应的程序 WHILE 如果条件满足,重复执行对应的程序 TEST 对一个变量进行判断,从而执行不同的程序 GOTO 跳转到例行程序内标签的位置 Lable 跳转标签 3. 停止程序执行 指令说明 Stop 停止程序执行 EXIT 停止程序执行并禁止在停止处再开始 Break 临时停止程序的执行,用于手动调试SystemStopAction 停止程序执行与机器人运动 ExitCycle 中止当前程序的运行并将程序指针PP复位到主程序的第一条指令。如果选择了程序连续运行模式,程序将从主程序的第一句重新执行。 5.6.2 变量指令 1. 赋值指令 指令说明:= 对程序数据进行赋值 2. 等待指令 指令说明 WaitTime 等待一个指定的时间,程序再往下执行 WaitUntil 等待一个条件满足后,程序继续往下执行

WaitDI 等待一个输入信号状态为设定值 WaitDO 等待一个输出信号状态为设定值 3. 程序注释 指令说明 Comment 对程序进行注释 4. 程序模块加载 指令说明 Load 从机器人硬盘加载一个程序模块到运行内存 UnLoad 从运行内存中卸载一个程序模块 Start Load 在程序执行的过程中,加载一个程序模块到运行内存中 Wait Load 当Start Load使用后,使用此指令将程序模块连接到任务中使用CancelLoad 取消加载程序模块 CheckProgRef 检查程序引用 Save 保存程序模块 EraseModule 从运行内存删除程序模块 5. 变量功能 指令说明 TryInt 判断数据是否是有效的整数 功能说明 OpMode 读取当前机器人的操作模式 RunMode 读取当前机器人程序的运行模式 NonMotionMode 读取程序任务当前是否无运动的执行模式 Dim 获取一个数组的维数 Present 读取带参数例行程序的可选参数值 IsPers 判断一个参数是不是可变量 IsVar 判断一个参数是不是变量 6. 转换功能 指令说明 StrToByte 将字符串转换为指定格式的字节数据 ByteToStr 将字节数据转换为字符串 5.6.3 运动设定 1. 速度设定 功能说明 MaxRobSpeed 获取当前型号机器人可实现的最大TCP速度

最小二乘法在误差分析中的应用

误差理论综述与最小二乘法讨论 摘要:本文对误差理论和有关数据处理的方法进行综述。并且针对最小二乘法(LS)的创立、发展、思想方法等相关方面进行了研究和总结。同时,将近年发展起来的全面最小二乘法(TLS)同传统最小二乘法进行了对比。 1.误差的有关概念 对科学而言,各种物理量都需要经过测量才能得出结果。许多物理量的发现,物理常数的确定,都是通过精密测量得到的。任何测试结果,都含有误差,因此,必须研究,估计和判断测量结果是否可靠,给出正确评定。对测量结果的分析、研究、判断,必须采用误差理论,它是我们客观分析的有力工具 测量基本概念 一个物理量的测量值应由数值和单位两部分组成。按实验数据处理的方式,测量可分为直接测量、间接测量和组合测量。 直接测量:可以用测量仪表直接读出测量值的测量。 间接测量:有些物理量无法直接测得,需要依据待测物理量与若干直接测量量的函数关系求出。 组合测量:如有若干个待求量,把这些待求量用不同方法组合起来进行测量,并把测量结果与待求量之间的函数关系列成方程组,用最小二乘法求出这个待求量的数值,即为组合测量。 误差基本概念 误差是评定测量精度的尺度,误差越小表示精度越高。若某物理量的测量值为y,真值为Y,则测量误差dy=y-Y。虽然真值是客观存在的,但实际应用时它一般无从得知。按照误差的性质,可分为随机误差,系统误差和粗大误差三类。 随机误差:是同一测量条件下,重复测量中以不可预知方式变化的测量误差分量。 系统误差:是同一测量条件下,重复测量中保持恒定或以可预知方式变化的测量误差分量。 粗大误差:指超出在规定条件下预期的误差。 等精度测量的随机误差 当对同一量值进行多次等精度的重复测量,得到一系列的测量值,每个测量

死锁问题的相关研究

死锁问题的相关研究 摘要死锁是计算机操作系统学习中的一个重点,进程在使用系统资源时易产生死锁问题,若何排除、预防和避免死锁,是我们所要研究的重要问题。 关键词银行家算法;存储转发;重装死锁 所谓死锁是指两个或两个以上的进程在执行过程中,因争夺资源而造成的一种互相等待的现象,若无外力作用,它们都将无法推进下去.此时称系统处于死锁状态或系统产生了死锁,这些永远在互相等待的进程称为死锁进程。 1产生死锁的原因及其必要条件 1)产生死锁的原因。因为系统资源不足;进程运行推进的顺序不合适;资源分配不当等。如果系统资源充足,进程的资源请求都能够得到满足,死锁出现的可能性就很低,否则就会因争夺有限的资源而陷入死锁。其次,进程运行推进顺序与速度不同,也可能产生死锁。 2)产生死锁的四个必要条件。互斥条件:一个资源每次只能被一个进程使用。请求与保持条件(占有等待):一个进程因请求资源而阻塞时,对已获得的资源保持不放。不剥夺条件(不可抢占):进程已获得的资源,在未使用完之前,不能强行剥夺。循环等待条件:若干进程之间形成一种头尾相接的循环等待资源关系。 这四个条件是死锁的必要条件,只要系统发生死锁,这些条件必然成立,而只要上述条件之一不满足,就不会发生死锁。 2死锁的解除与预防 理解了死锁的原因,尤其是产生死锁的四个必要条件,就可以最大可能地避免、预防和解除死锁。在系统设计、进程调度等方面注意如何不让这四个必要条件成立,如何确定资源的合理分配算法,避免进程永久占据系统资源。 1)有序资源分配法。这种算法资源按某种规则系统中的所有资源统一编号(例如打印机为1、磁带机为2、磁盘为3、等等),申请时必须以上升的次序。 采用有序资源分配法:R1的编号为1,R2的编号为2;PA:申请次序应是:R1,R2;PB:申请次序应是:R1,R2;这样就破坏了环路条件,避免了死锁的发生。 2)银行算法。避免死锁算法中最有代表性的算法是DijkstraE.W于1968年提出的银行家算法。该算法需要检查申请者对资源的最大需求量,如果系统现存的各类资源可以满足申请者的请求,就满足申请者的请求。这样申请者就可很快

Oracle常见死锁发生的原因以及解决方法

Oracle常见死锁发生的原因以及解决方法 Oracle常见死锁发生的原因以及解决办法 一,删除和更新之间引起的死锁 造成死锁的原因就是多个线程或进程对同一个资源的争抢或相互依赖。这里列举一个对同一个资源的争抢造成死锁的实例。 Oracle 10g, PL/SQL version 9.2 CREATE TABLE testLock( ID NUMBER, test VARCHAR(100) ) COMMIT INSERT INTO testLock VALUES(1,'test1'); INSERT INTO testLock VALUES(2,'test2'); COMMIT; SELECT * FROM testLock 1. ID TEST 2.---------- ---------------------------------- 3. 1 test1 4. 2 test2 死锁现象的重现: 1)在sql 窗口执行:SELECT * FROM testLock FOR UPDATE; -- 加行级锁并对内容进行修改, 不要提交 2)另开一个command窗口,执行:delete from testLock WHERE ID=1; 此时发生死锁(注意此时要另开一个窗口,不然会提示:POST THE CHANGE RECORD TO THE DATABASE. 点yes 后强制commit):

3)死锁查看: 1.SQL> select https://www.360docs.net/doc/7110167247.html,ername,l.object_id, l.session_id,s.serial#, s.lockwait,s.status,s.machine, s.program from v$session s,v$locked_object l where s.sid = l.session_id; USER NAME SESSION_ID SERIAL# LOCKWAIT STATUS MACHINE PROGRAM 2.---------- ---------- ---------- -------- -------- ---------------------- ------------ 3.SYS 146 104 INACTIVE WORKGROUP\J-THINK PLSQLDev.exe 4.SYS 144 145 20834474 ACTIVE WORKGROUP\J-THINK PLSQLDev. exe 字段说明: Username:死锁语句所用的数据库用户; SID: session identifier,session 标示符,session 是通信双方从开始通信到通信结束期间的一个上下文。 SERIAL#: sid 会重用,但是同一个sid被重用时,serial#会增加,不会重复。 Lockwait:可以通过这个字段查询出当前正在等待的锁的相关信息。 Status:用来判断session状态。Active:正执行SQL语句。Inactive:等待操作。Killed:被标注为删除。 Machine:死锁语句所在的机器。 Program:产生死锁的语句主要来自哪个应用程序。 4)查看引起死锁的语句: