CONTEXT-AWARE CITATION RECOMMENDATION

Citation Recommendation

Qi He

Penn State University

qhe@https://www.360docs.net/doc/0e8374868.html, Jian Pei

Simon Fraser University

jpei@sfu.ca Daniel Kifer

Penn State University

dan+www10@https://www.360docs.net/doc/0e8374868.html,

Prasenjit Mitra Penn State University

pmitra@https://www.360docs.net/doc/0e8374868.html,

C.Lee Giles

Penn State University

giles@https://www.360docs.net/doc/0e8374868.html,

ABSTRACT

When you write papers,how many times do you want to

make some citations at a place but you are not sure which papers to cite?Do you wish to have a recommendation system which can recommend a small number of good can-didates for every place that you want to make some cita-tions?In this paper,we present our initiative of building a context-aware citation recommendation system.High qual-ity citation recommendation is challenging:not only should the citations recommended be relevant to the paper under composition,but also should match the local contexts of the places citations are made.Moreover,it is far from trivial to model how the topic of the whole paper and the contexts of the citation places should a?ect the selection and ranking of citations.To tackle the problem,we develop a context-aware approach.The core idea is to design a novel non-parametric probabilistic model which can measure the context-based relevance between a citation context and a document.Our approach can recommend citations for a context e?ectively.Moreover,it can recommend a set of citations for a paper with high quality.We implement a prototype system in CiteSeerX.An extensive empirical evaluation in the Cite-SeerX digital library against many baselines demonstrates the e?ectiveness and the scalability of our approach.

Categories and Subject Descriptors

H.3.3[Information Storage and Retrieval ]:Information Search and Retrieval

General Terms

Algorithms,Design,Experimentation

Keywords

Recommender Systems,Context,Bibliometrics,Gleason’s Theorem

1.INTRODUCTION

When you write papers,how many times do you want to make some citations at a place but you are not sure which papers to cite?For example,the left part of Figure 1shows a segment of a query manuscript containing some citation placeholders (placeholders for short)marked as “[?]”,where

Copyright is held by the International World Wide Web Conference Com-mittee (IW3C2).Distribution of these papers is limited to classroom use,and personal use by others.

WWW 2010,April 26–30,2010,Raleigh,North Carolina,USA.ACM 978-1-60558-799-8/10/04.

Scalability. models for a sample distribution topic.

Figure 1:A manuscript with citation placeholders and

recommended citations.The text is from Section 2.2.

citations should be added.In order to ?ll in those citation placeholders,one needs to search the relevant literature and ?nd a small number of proper citations.Searching for proper citations is often a labor-intensive task in research paper composition,particularly for junior researchers who are not familiar with the very extensive literature.

Do you wish to have a recommendation system which can recommend a small number of good candidates for every place that you want to make some citations?High quality citation recommendation is a challenging problem for many reasons.

For each citation placeholder,we can collect the words sur-rounding as the context of the placeholder.One may think we can use some keywords in the context of a placeholder to search a literature search engine like Google Scholar or CiteSeerX to obtain a list of documents as the candidates for citations.However,such a method,based on keyword matching,is often far from satisfactory.For example,us-ing query “frequent itemset mining”one may want to search for the ?rst paper proposing the concept of frequent itemset mining [1].However,Google Scholar returns a paper about frequent closed itemset mining published in 2000as the ?rst result,and a paper on privacy preserving frequent itemset mining as the second choice.[1]does not appear in the ?rst page of the results.CiteSeerX also lists a paper on privacy preserving frequent itemset mining as the ?rst result.Cite-SeerX fails to return [1]on the ?rst page,either.

One may wonder,as we can model citations as links from citing documents to cited ones,can we use graph-based link prediction techniques to recommend citations?Graph-based link prediction techniques require a user to provide sample citations for each placeholder,and thus shifts much of the burden to the user.Moreover,graph-based link prediction methods may encounter di?culties to make proper citations across multiple communities because a community may not be aware of the related work in some other community.

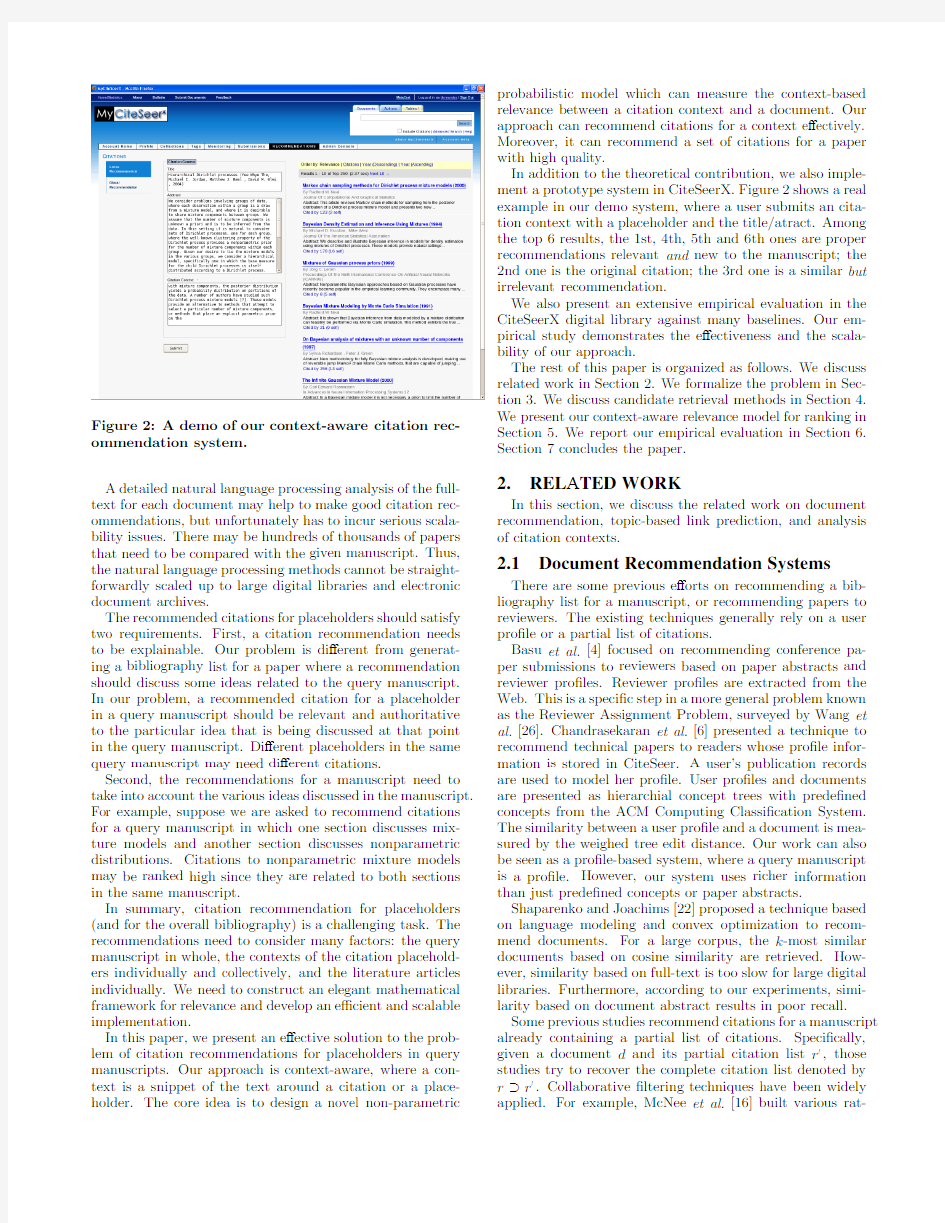

Figure2:A demo of our context-aware citation rec-ommendation system.

A detailed natural language processing analysis of the full-text for each document may help to make good citation rec-ommendations,but unfortunately has to incur serious scala-bility issues.There may be hundreds of thousands of papers that need to be compared with the given manuscript.Thus, the natural language processing methods cannot be straight-forwardly scaled up to large digital libraries and electronic document archives.

The recommended citations for placeholders should satisfy two requirements.First,a citation recommendation needs to be explainable.Our problem is di?erent from generat-ing a bibliography list for a paper where a recommendation should discuss some ideas related to the query manuscript. In our problem,a recommended citation for a placeholder in a query manuscript should be relevant and authoritative to the particular idea that is being discussed at that point in the query manuscript.Di?erent placeholders in the same query manuscript may need di?erent citations.

Second,the recommendations for a manuscript need to take into account the various ideas discussed in the manuscript. For example,suppose we are asked to recommend citations for a query manuscript in which one section discusses mix-ture models and another section discusses nonparametric distributions.Citations to nonparametric mixture models may be ranked high since they are related to both sections in the same manuscript.

In summary,citation recommendation for placeholders (and for the overall bibliography)is a challenging task.The recommendations need to consider many factors:the query manuscript in whole,the contexts of the citation placehold-ers individually and collectively,and the literature articles individually.We need to construct an elegant mathematical framework for relevance and develop an e?cient and scalable implementation.

In this paper,we present an e?ective solution to the prob-lem of citation recommendations for placeholders in query manuscripts.Our approach is context-aware,where a con-text is a snippet of the text around a citation or a place-holder.The core idea is to design a novel non-parametric probabilistic model which can measure the context-based relevance between a citation context and a document.Our approach can recommend citations for a context e?ectively. Moreover,it can recommend a set of citations for a paper with high quality.

In addition to the theoretical contribution,we also imple-ment a prototype system in CiteSeerX.Figure2shows a real example in our demo system,where a user submits an cita-tion context with a placeholder and the title/atract.Among the top6results,the1st,4th,5th and6th ones are proper recommendations relevant and new to the manuscript;the 2nd one is the original citation;the3rd one is a similar but irrelevant recommendation.

We also present an extensive empirical evaluation in the CiteSeerX digital library against many baselines.Our em-pirical study demonstrates the e?ectiveness and the scala-bility of our approach.

The rest of this paper is organized as follows.We discuss related work in Section2.We formalize the problem in Sec-tion3.We discuss candidate retrieval methods in Section4. We present our context-aware relevance model for ranking in Section5.We report our empirical evaluation in Section6. Section7concludes the paper.

2.RELATED WORK

In this section,we discuss the related work on document recommendation,topic-based link prediction,and analysis of citation contexts.

2.1Document Recommendation Systems

There are some previous e?orts on recommending a bib-liography list for a manuscript,or recommending papers to reviewers.The existing techniques generally rely on a user pro?le or a partial list of citations.

Basu et al.[4]focused on recommending conference pa-per submissions to reviewers based on paper abstracts and reviewer pro?les.Reviewer pro?les are extracted from the Web.This is a speci?c step in a more general problem known as the Reviewer Assignment Problem,surveyed by Wang et al.[26].Chandrasekaran et al.[6]presented a technique to recommend technical papers to readers whose pro?le infor-mation is stored in CiteSeer.A user’s publication records are used to model her pro?https://www.360docs.net/doc/0e8374868.html,er pro?les and documents are presented as hierarchial concept trees with prede?ned concepts from the ACM Computing Classi?cation System. The similarity between a user pro?le and a document is mea-sured by the weighed tree edit distance.Our work can also be seen as a pro?le-based system,where a query manuscript is a pro?le.However,our system uses richer information than just prede?ned concepts or paper abstracts. Shaparenko and Joachims[22]proposed a technique based on language modeling and convex optimization to recom-mend documents.For a large corpus,the k-most similar documents based on cosine similarity are retrieved.How-ever,similarity based on full-text is too slow for large digital libraries.Furthermore,according to our experiments,simi-larity based on document abstract results in poor recall. Some previous studies recommend citations for a manuscript already containing a partial list of citations.Speci?cally, given a document d and its partial citation list r ,those studies try to recover the complete citation list denoted by r?r .Collaborative?ltering techniques have been widely applied.For example,McNee et al.[16]built various rat-

ing matrices including a author-citation matrix,a paper-citation matrix,and a co-citation matrix.Papers which are co-cited often with citations in r are potential candidates. Zhou et al.[27]propagated the positive labels(i.e.,the ex-isting citations in r )in multiple graphs such as the paper-paper citation graph,the author-paper bipartite graph,and the paper-venue bipartite graph,and learned the labels of the rest documents for a given testing document in a semi-supervised manner.Torres et al.[25]used a combination of context-based and collaborative?ltering algorithms to build a recommendation system,and reported that the hybrid al-gorithms performed better than individual ones.Strohman et al.[23]experimented with a citation recommendation sys-tem where the relevance between two documents is mea-sured by a linear combination of text features and citation graph features.They concluded that similarity between bib-liographies and Katz distance[15]are the most important features.Tang and Zhang[24]explored recommending cita-tions for placeholders in a very limited extent.In particular, a user must provide a bibliography with papers relevant to each citation context,as this information is used to compute features in the hidden layer of a Restricted Boltzmann Ma-chine before predictions can be made.We feel this require-ment negates the need for requesting recommendations.

In our study,we do not require a partial list of citations, since creating such a list for each placeholder shifts most of the burden on the user.Thus,we have to recommend citations both globally(i.e.,for the bibliography)and also locally(i.e.,for each placeholder).

2.2Topic-based Citation Link Prediction Topic models are unsupervised techniques that analyze the text of a large document corpus and provide a low-dimensional representation of documents in terms of au-tomatically discovered and comprehensible“topics”.Topic models have been extended to handle citations as well as text,and thus can be used to predict citations for bibliogra-phies.The aforementioned work of Tang and Zhang[24]?ts in this framework.Nallapati et al.[18]introduced a model called Pairwise-Link-LDA which models the presence or absence of a link between every pair of documents and thus does not scale to large digital libraries.Nallapati et al.[18]also introduced a simpler model that is similar to the work of Cohn and Hofmann[7]and Erosheva et al.[9]. Here citations are modeled as a sample from a probability distribution associated with a topic.Thus,a paper can be associated with topics when it is viewed as a citation.It can also be associated with topics from the analysis of its text.However,there is no mechanism to enforce consistency between the topics assigned in those two ways.

In general,topic models require a long training process because they are typically trained using iterative techniques such as Gibbs Sampling or variational inference.In addition to this,they must be retrained as new documents are added.

2.3Citation Context Analysis

The prior work on analyzing citation contexts mainly be-longs to two groups.The?rst group tries to understand the motivation functions of an existing citation.For ex-ample,Aya et al.[2]built a machine learning algorithm to automatically learn the motivation functions(e.g.,compare, contrast,use of the citation,etc.)of citations by using ci-tation context based features.The second group tries to enhance topical similarity between citations.For example, Huang et al.[11]observed that citation contexts can e?ec-tively help to avoid“topic drifting”in clustering citations into topics.Ritchie[21]extensively examined the impact of various citation context extraction methods on the perfor-mance of information retrieval.

The previous studies clearly indicate that citation con-texts are a good summary of the contributions of a cited paper and clearly re?ect the information needs(i.e.,moti-vations)of citations.Our work moves one step ahead to recommend citations according to contexts.

3.PROBLEM DEFINITION AND ARCHITEC-

TURE

In this section,we formulate the problem of context-based citation recommendation,and discuss the preprocessing step. 3.1Problem De?nition

Let d be a document,and D be a document corpus. Definition3.1.In a document d,a context c is a bag

of words.The global context is the title and abstract of d.The local context is the text surrounding a citation or placeholder.If document d1cites document d2,the local context of this citation is called an out-link context with respect to d1and an in-link context with respect to d2.

A user can submit either a manuscript(i.e.,a global con-text and a set of out-link local contexts)or a few sentences (i.e.,an out-link local context)as the query to our system. There are two types of citation recommendation tasks,which happen in di?erent application scenarios.

Definition3.2(Global Recommendation).Given a query manuscript d without a bibliography,a global recom-mendation is a ranked list of citations in a corpus D that are recommended as candidates for the bibliography of d.

Note that di?erent citation contexts in d may express dif-ferent information needs.The bibliography candidates pro-vided by a global recommendation should collectively satisfy the citation information needs of all out-link local contexts

in the query manuscript d.

Definition3.3(Local Recommendation).Given an out-link local context c?with respect to d,a local recom-mendation is a ranked list of citations in a corpus D that are recommended as candidates for the placeholder associ-ated with c?.

For local recommendations,the query manuscript d is an optional input and it is not required to already contain a representative bibliography.

To the best of our knowledge,global recommendations are only tackled by document-citation graph methods(e.g., [23])and topic models(e.g.,[24,18]).However,the context-aware approaches have not been considered for global or local recommendations(except in a limited case where a bibliography with papers relevant to each citation context is required as the input).

3.2Preprocessing

Our proposed context-based citation recommendation sys-tem can take two types of inputs,a query manuscript d1or

citing documents

cited

documents Figure 3:Context-oblivious methods for global rec-ommendation.

just a single out-link local context c ?.We preprocess the

query manuscript d 1by extracting its global context (i.e.the title and abstract)and all of its out-link local contexts.Extracting the local contexts from d 1is not a trivial task.Ritchie [21]conducted extensive experiments to study the impact of di?erent lengths of local citation contexts on in-formation retrieval performance,and concluded that ?xed window contexts are simple and reasonably e?ective.Thus,before removing all stop words,for each placeholder we ex-tract the citation context by taking 50words before and 50words after the placeholder.This preprocessing is e?cient.For a PDF document of 10pages and 20citations,prepro-cessing takes on average less than 0.1seconds.

The critical steps of the system are:(1)quickly retrieving a large candidate set which has good coverage over the pos-sible citations,and (2)for each placeholder associated with an out-link local context and for the bibliography,ranking the citations by relevance and returning the top K .The next two sections focus on these critical steps.

4.THE CANDIDATE SET

Citation recommendation systems,including our system and especially those that compute intricate graph-based fea-tures [23,27],?rst quickly retrieve a large candidate set of papers.Then,features are computed for papers in this set and ranking is performed.This is done solely for scalability.Techniques for retrieving this candidate set are illustrated in Figures 3and 4.Here,the query manuscript is repre-sented by a partially shaded circle.Retrieved documents are represented as circles with numbers corresponding to the numbered items in the lists of retrieval methods discussed below.In this section,we discuss two kinds of methods for retrieving a candidate set.Those methods will be evaluated in Section 6.2.

4.1Context-oblivious Methods

The context-oblivious methods do not consider local con-texts in a query manuscript that has no bibliography.For a query manuscript d 1,we can retrieve

1.The top N documents with abstract and title most similar to d 1.We call this method GN (e.g.,G100,G1000).

2.The documents that share authors with d 1.We call this method Author .

3.The papers cited by documents already in a candidate set,generated by some other method (e.g.,GN or Au-thor).We call this method CitHop .

local citation

contexts

cited

documents

citing

documents

(a) single-context-based Figure 4:Context-aware methods.The single-context-based method is for local recommendation with the absence of query manuscript d 1.The hy-brid method is for global recommendation and local recommendation with the presence of d 1,where the ?nal candidate set is the union of the candidate sets derived from each local out-link context in d 1.4.The documents written by the authors whose papers are already in a candidate set generated by some other method.We call this method AuthHop .Note that retrieval based on the similarity between the entire text content of documents would be too slow.Thus,these methods appear to provide a reasonable alternative.However,the body of an academic paper covers many ideas that would not ?t in its abstract and so many relevant doc-uments will not be retrieved.Retrieval by author similarity will add many irrelevant papers,especially if the authors have a broad range of research interests and if CitHop or AuthHop is used to further expand the candidate set.Context-aware methods can avoid such problems.

4.2Context-aware Methods

The context-aware methods help improve coverage for rec-ommendations by considering local contexts in the query manuscript.In a query manuscript d 1,for each context c ?,we can retrieve:

5.The top N papers whose in-link contexts are most sim-ilar to c ?.We call this method LN (e.g.,L100).

6.The papers containing the top-N out-link contexts most similar to c ?(these papers cite papers retrieved by LN ).When used in conjunction with LN ,we call this method LCN (e.g.,LC100).We found that method LCN is needed because frequently a document from a digital library may have an out-link con-text that describes how it di?ers from related work (e.g.,“prior work required full bibliographies but we do not”).Thus,while an out-link context usually describes a cited paper,sometimes it may also describe the citing paper,and this description may be what best matches an out-link con-text c ?from a query manuscript.

The above 6methods can be combined in some obvious ways.For example,(L100+CitHop)+G1000is the can-didate set formed by the following process:for each context c ?in a query manuscript d 1,add the top 100documents with in-link local context most similar to c ?(i.e.L100)and all of their citations (i.e.CitHop )and then add the top 1000documents with abstract and title most similar to d 1(i.e.G1000).

5.MODELING CONTEXT-BASED CITATION

RELEV ANCE

In this section,we propose a non-parametric probabilis-

tic model to measure context-based(and overall)relevance

between a manuscript and a candidate citation,for rank-

ing retrieved candidates.To improve scalability,we use an

approximate inference technique which yields a closed form

solution.Our model is general and simple so that it can be

used to e?ciently and e?ectively measure the similarity be-

tween any two documents with respect to certain contexts

or concepts in information retrieval.

5.1Context-Based Relevance Model

Recall that a query manuscript d1can have a global con-

text c1(e.g.,a title and abstract,which describes the prob-

lem to be addressed)and the out-link local contexts c2,...,c k

1 (e.g.,text surrounding citations)which compactly express

ideas that may be present in related work.A document d2

in an existing corpus D has a global context b1(e.g.ti-

tle and abstract),and local in-link contexts b1,...,b k

2(e.g.,

text that is used by papers citing d2)which compactly ex-press ideas covered in d2.

In this section,we describe a principled and consistent way of measuring sim(d1,d2),de?ned as the overall relevance of d2to d1and sim(d1,d2;c?),de?ned as the relevance of d2 to d1with respect to a speci?c context c?(in particular,c?could be any of the out-link contexts c i).Our techniques are based on Gleason’s Theorem specialized to?nite dimen-sional real vector spaces.

Theorem5.1(Gleason[10]).For an n-dimensional real vector space V(with n≥3),let p be a function that assigns a number in[0,1]to each subspace of V such that p(v1⊕v2)=p(v1)+p(v2)whenever v1and v2are orthog-onal subspaces1and p(V)=1.Then p(v)=T race(T P v) where P v is the projection matrix for subspace v(e.g.P v w is the projection of vector w onto the subspace v),and T is a density matrix–a symmetric positive semide?nite matrix whose trace is1.

Note that van Rijsbergen[20]proposed using Gleason’s The-orem as a model for information retrieval,and this was also extensively studied by Melucci[17].However,our frame-work is substantially di?erent from their proposals since it relies on comparisons between density matrices.

Let W be the set of all words in our corpus and let|W|be the number of words.The vector space V is|W|-dimensional with one dimension for each word.

In this framework,atomic concepts will be represented as one-dimensional vector spaces and we will treat each context (global,in-link,out-link)as an atomic concept.Each atomic concept c will be associated a unit column vector which we shall also denote by c(one such representation can be de-rived from normalizing tf-idf scores into a unit vector).The projection matrix for c is then cc T.Our goal is to measure the probability that c is relevant to a document d and so, by Gleason’s Theorem,each document d is associated with a density matrix T d.The probability that c is relevant to d is then p d(c)=T race(T d cc T)=c T T d c.Note that similar atomic concepts(as measured by the dot product)will have similar relevance scores.

1v

1⊕v2is the linear span of v1and v2.

Now,the probability distribution characterized by Glea-

son’s theorem is not a generative distribution–it cannot be

used to sample concepts.Instead,it is a distribution over

yes/no answers(e.g.is concept c relevant or not?).Thus

to estimate T d for a document d we will need some(un-

known)generative distribution p gen over unit vectors(con-

cepts).Our evidence about the properties of T d come from

the following process.p gen independently generates k con-

cepts c1,...,c k and these concepts are then independently

judged to be relevant to d.This happens with probability

k

i=1

p gen(c i)p d(c i).We seek to?nd a density matrix T d

that maximizes the likelihood.The log likelihood is:

k

i=1

log p gen(c i)+

k

i=1

log

c T i T

d c i

.

Now,if there is only one concept(i.e.k=1),then the

maximum likelihood estimator is easy to compute:it is T d=

c1c t1.In the general case,however,numerical methods are

needed[3].The computational cost of estimating T d can be

seen from the following proposition:

Proposition5.2.The density matrix T d can be repre-

sented as

r

i=1

t i t T i where the t i are a set of at most r or-

thogonal column vectors with

(t i·t i)=1,and r is the

dimension of the space spanned by the c i(the number of lin-

early independent contexts).There are O(r2)parameters to

determine and numerical(iterative)techniques will scale as

a polynomial in r2.

The detailed proof is in Appendix A.

Furthermore,the addition of new documents to the corpus

will cause the addition of new in-link contexts,requiring a

recomputation of all the T d.Thus the likelihood approach

will not scale for our system.

5.2Scalable Closed Form Solutions

Since hundreds of thousands of density matrices need to

be estimated(one for each document)and recomputed as

new documents are added to the corpus,we opt to replace

the exact but slow maximum likelihood computation with an

approximate but fast closed form solution which we derive

in this section.

We begin with the following observation.For each con-

cept c i,the maximum likelihood estimate of T d given that

concept c i is relevant is c i c T i.It stands to reason that our

overall estimate of T d should be similar to each of the c i c T i.

We will measure similarity by the Frobenius norm(square-

root of the sum of the squared matrix entries)and thus set

up the following optimization problem:

minimize L(T d)=

k

i=1

||T d?c i c T i||2F,

subject to the constraint that T d is a density matrix.Taking

derivatives,we get

?L

?T d

=2

k

i=1

(T d?c i c T i)=0,

leading to the solution T d=1

k

k

i=1

c i c T i.Now that we have

a closed form estimate for T d,we can discuss how to measure

the relevance between documents with respect to a concept.

Global Recommendation

Let T d

1and T d

2

be the respective density matrices of the

manuscript d1and a document d2from the corpus D.We de?ne sim(d1,d2),the overall relevance of d2to d1to be the probability that a random context drawn from the uniform distribution over contexts is relevant to both d1and d2.Af-ter much mathematical manipulation,we get the following:

Proposition5.3.Let T d

1=1

k1

k1

i=1

c i c T i and

T d

2=1

k2

k2

i=1

b i b T i.Then the relevance of d2to d1is:

sim(d1,d2)=

1

12

k1

i=1

k2

j=1

(c i·b j)2.(1)

The detailed proof is given in Appendix B.Given query manuscript q1,we use Equation1to rank documents for global recommendation.

Local Recommendation

If we are given a single context c?instead of a manuscript,we can still compute sim(c?,d2),the relevance of a document

d2∈D to the context c?.Letting T d

2=1

k2

k2

i=1

b i b T i,then

by Gleason’s Theorem,we have:

sim(c?,d2)=T race(T d

2c?c T?)=

1

k2

k2

j=1

(b j·c?)2.(2)

We de?ne sim(d1,d2;c?),the relevance of d2to d1with respect to context c?as the probability that c?is relevant to both documents.Applying Gleason’s Theorem twice:

sim(d1,d2;c?)=T race(T d

1c?c T?)T race(T d

2

c?c T?)

=

1

k1k2

k1

i=1

(c i·c?)2

k2

j=1

(b j·c?)2.(3)

Given query manuscript d1,we use Equation3to rank doc-uments for recommendations at the placeholder associated with context c?in d1.If a citation context c?is given with-out d1,then we use Equation2.

6.EXPERIMENTS

We built a real system in the CiteSeerX staging server to evaluate context-aware citation recommendations.We used all research papers published and crawled before year 2008as the document corpus D.After removing duplicate papers and the papers missing abstract/title and citation contexts,we obtained456,787unique documents in the cor-pus.For each paper,we extracted its title and abstract as the global citation context or text content.Within a paper,we simply took50words before and after each cita-tion placeholder as its local citation context.We removed some popular stop words.In order to preserve the time-sensitive past/present/future tenses of verbs and the singu-lar/plural styles of named entities,no stemming was done. All words were transferred to lower-cases.Finally,we ob-tained1,810,917unique local citation contexts and716,927 unique word terms.We used all1,612papers published and crawled in early2008as the testing data set.

We implemented all algorithms in C++and integrated them into the Java running environment of CiteSeerX.All experiments were conducted on a Linux cluster with128nodes2,each of which has8CPU processors of2.67GHz and 32G main memory.

6.1Performance Measures

The performance of recommendation can be measured by a wide range of metrics and means,including user studies and click-through monitoring.For experimental purpose,we focus on four performance measures as follows in this paper. Recall(R):We removed original citations from the test-ing documents.The recall is de?ned as the percentage of original citations that appear in the top K recommended ci-tations.Furthermore,we categorize recall into global recall and local recall for global and local recommendation respec-tively.The global recall is?rst computed as the percentage of original bibliography of each testing document d that ap-pears in the top K recommended citations of d,and then averaged over all1,612testing documents.The local recall is?rst computed as,for each citation placeholder,the per-centage of the original citations cited by c?that appear in the top K recommended citations of c?,and then averaged over all7,049testing out-link citation contexts.

Co-cited probability(P):We may recommend some rel-evant or even better recommendations other than those orig-inal ones among the top K results,which cannot be captured by the traditional metric like precision.The previous work usually conducted user studies for this kind of relevance eval-uation[16,6].In this paper,we instead use the wisdom of the popularity as the ground truth to de?ne a systematic metric.For each pair of documents d i,d j where d i is an original citation and the d j is a recommended one,we cal-culate the probability that these two documents have been co-cited by the popularity in the past as

P=

number of papers citing both d i and d j

i j

.

The co-cited probability is then averaged over all K·l unique document pairs for the top K results,where l is the number of original citations.Again,we categorize this probability into a global version and a local version:the former is av-eraged over all testing documents and the latter is averaged over all testing citation contexts.

NDCG:The e?ectiveness of a recommendation system is also sensitive to the positions of relevant citations,which cannot be evaluated by recall and co-cited probability.In-tuitively,it is desirable that highly relevant citations appear earlier in the top K list.We use normalized discounted cumulative gain(NDCG)to measure the ranked recommen-dation list.The NDCG value of a ranking list at position i is calculated as

NDCG@i=Z i

i

j=1

2r(j)?1

log(1+j)

,

where r(j)is the rating of the j-th document in the rank-ing list,and the normalization constant Z i is chosen so that the perfect list gets a NDCG score of1.Given a testing document d1and any other document d2from our corpus D,we use the average co-cited probability of d2with all original citations of d1to weigh the citation relevance score of d2to d1.Then,we sort all d2w.r.t.this score(suppose P max is the highest score)and de?ne5-scale relevance num-ber for them as the ground truth:4,3,2,1,0for documents 2Due to the PSU policy,we could only use8of them.

Table1:Compare di?erent candidate sets.Num-bers are averaged over1,612test documents. Methods Coverage Candidate Set Size G10000.441000

L1000.55341

LC1000.63674

L10000.692,844

LC10000.785,692

Author0.0517

L100+CitHop0.611,327

L1000+CitHop0.729,049

LC100+CitHop0.733,561

G1000+CitHop0.733,790

LC1000+CitHop0.8322,629

Author+CitHop0.1563

L100+G10000.751,312

LC100+G10000.791,618

(L100+CitHop)+G10000.792,279

(LC100+CitHop)+G10000.854,460

(LC1000+G1000)+CitHop0.9224,793

LC100+G1000+(Author+CitHop)0.791,674

(LC100+G1000)+AuthHop0.8839,496

in(3P max/4,P max],(P max/2,3P max/4],(P max/4,P max/2], (0,P max/4]and0respectively.Finally,the NDCG over all testing documents(the global version)or all testing cita-tion contexts(the local version)is averaged to yield a single qualitative metric for each recommendation problem. Time:We use the running time as an important metric to measure the e?ciency of the recommendation approaches.

6.2Retrieving Candidate Sets

Table1evaluates the quality of di?erent candidate set re-trieval techniques(see Section4for notation and detailed descriptions).Here we measure coverage(the average recall of the candidate set with respect to the true bibliography of a testing document)and candidate set size.A good can-didate set should have high recall and a small size since large candidate sets slow down the?nal ranking for all rec-ommendation systems.For context-aware methods,we feel LC100+G1000achieves the best tradeo?between candi-date set size and coverage.For context-oblivious methods, G1000+CitHop works reasonably well(but not as well as LC100+G1000).However,the retrieval time of context-oblivious methods is around0.28seconds on an8-node clus-ter.On the other hand,the retrieval time of context-aware methods ranges from2to10seconds on the same clus-ter(depending on the number of out-link contexts3of a query manuscript).Our goal for the?nal system is to use LC100+G1000and to speed up retrieval time using a com-bination of indexing tricks and more machines(since this re-trieval is highly parallelizable).Note,however,that ranking techniques are orthogonal to candidate set retrieval meth-ods.Thus,when we compare our ranking methods for rec-ommendations with baselines and related work,we will use LC100+G1000as the common candidate set,since our eventual goal is to use this retrieval method in our system.

6.3Global Recommendation

In this section,we compare our context-aware relevance model(CRM for short,Section5)with other baselines in global recommendation performance,since the related work only focused on recommending the bibliography.

3Due to OCR and crawling issues,there were an average of 5out-link contexts per testing document.Recommendation Quality

We compare CRM with7other context-oblivious baselines which include:

HITs[13]:the candidates are ranked w.r.t.their author-ity scores in the candidate set subgraph.We choose to com-pare with HITs because it is interesting to see the di?erence between the popularity(link-based methods)and the rele-vance(context or document based methods).

Katz[15]:the candidates are ranked w.r.t the Katz dis-tance,

i

βi N i,where N i is the number of unique paths of length i between the query manuscript/context and the candidate and the path should go through the top N sim-ilar documents/contexts of the query manuscript/context, andβi is a decay parameter between0and1.We choose to compare Katz because this feature has been shown to be the most e?ective among all text/citation features in[23] for document-based global recommendation.Note that[23] used the ground truth to calculate the Katz distance,which is impractical.

l-count and g-count:the candidates are ranked accord-ing to the number of citations in the candidate set subgraph (l-count)or the whole corpus(g-count).

textsim:the candidates are ranked according to similar-ity with the query manuscript using only title and abstract. This allows us to see the bene?t of a context-aware approach. di?usion:the candidates are ranked according to their topical similarities which are generated by the multinomial di?usion kernel[14]to the query manuscript,K(θ1,θ2)= (4πt)?|W|2exp

?1arccos2(

√

θ1·

√

θ2)

,whereθ1andθ2are topic distributions of the query manuscript d1and the can-didate d2respectively,t is the decay factor.Since we only care about the ranking,we can ignore the?rst item and t. We choose to compare with it because topic-based citation recommendation[24]is one of related work and the multi-nomial di?usion kernel is the state-of-the-art tool in topic-based text similarity[8].We run LDA[5]on each candidate set online(by setting the number of topics as60)to get the topic distributions for documents.

mix-features:the candidates are ranked according to the weighted linear combination of the above6features.We choose to compare it because in[23],a mixture approach considering both text-based features and citation-based fea-tures is the most e?ective.

Figures5(a),(b)and(c)illustrate their performances on recall,co-cited probability and NDCG,respectively. Among all methods,g-count is the worst one simply be-cause it is measured over the whole corpus,not the candidate set.Context-oblivious content-based methods like textsim and di?usion come to heel,indicating that abtract/title only are too sparse to portray the speci?c citation functions well. Moreover,they cannot?nd the proper related work that uses di?erent conceptual words.The di?usion is better than textsim,indicating that topic-based similarity can capture the citation relations more than raw text similarity.The social phenomenon that the rich gets richer is also common in the citation graph,since the citation features including l-count,HITs,and Katz work better than the abstract/title-based text features.Interestingly,[23]claimed that citation features might do a poor job at coverage.But on our data, they have higher recall values than text features.A combi-nation of these features(mix-features)can further improve the performance,especially on the recall and NDCG,which

(a)recall(b)co-cited probability(c)NDCG

Figure5:Compare performances for global recommendation.

means that if a candidate is recommended by multiple fea-tures,it should be ranked higher.Limited by space,we did not report the results using advanced machine learning tech-niques to learn weights for the features,which can improve the performance further.An interesting?nding is that the performance of HITs(especially on NDCG)increases signif-icantly after more candidates are returned,indicating that some candidates with moderate authoritative scores are our targets(people may stop citing the most well-known papers after they become the standard techniques in their domains and shift the attentions to other recent good papers).Katz works the best among single features,partially because it im-plicitly combines the text similarity with the citation count. It works like the collaborative?ltering,where candidates of-ten cited by similar documents are recommended.Finally, our CRM method leads the pack on all three metrics,im-plying that after considering all historical in-link citation contexts of candidates(then the problem of using di?erent conceptual words for the same concept would be alleviated), CRM is e?ective in recommending bibliography. Ranking Time

Time is not a major issue for most ranking algorithms except for topic-based methods,where LDA usually took tens of seconds(50~100)for each new candidate set.Thus,topic-based methods including di?usion and mix-features are not suitable for online recommendation systems.All other rank-ing algorithms need less than0.1seconds.Limited by space, the detailed comparisons are omitted here.

6.4Local Recommendation

Local recommendation is a novel task proposed by our context-aware citation recommendation system.Here,we do not compare with the above baselines because they are not tailored for local recommendation.Instead,we evaluate the impact of the absence/precense of the global context and other local contexts,and analyze the problem of context-aware methods.

If a user only inputs a bag of words as the single context to request recommendations,we can then only use Equa-tion2to rank candidates.We name this method as CRM-singlecontext.If a user inputs a manuscript with place-holders inside,we can then rank candidates for each place-holder using Equation3.We name this method as CRM-crosscontext.Figure6illustrates the performance of these two kinds of local recommendations on recall,co-cited prob-ability and NDCG.

CRM-crosscontext is rather e?ective.In Figure5(due to crawling and OCR issues,our testing documents have an av-erage of5out-link citation contexts that point to documents in our corpus,so“top5per context”corresponds to“top 25per document”),the performance of CRM-crosscontex is very close to CRM in global recommendation.We know CRM-crosscontext and CRM have the same input(the same amount of information to use).However,CRM-crosscontext tackles a much harder problem than CRM,and has to as-sign citations to each placeholder.Thus,CRM-crosscontext is more capable than CRM.CRM-crosscontext outperforms all baselines of global recommendation and thus is also su-perior than their local versions.Limited by space,we omit the details here.

CRM-crosscontext is able to e?ectively rank candidates for placeholders.For example,more than42%original ci-tations can be found in the top5recommendations,and frequently co-cited papers(w.r.t.original citations)also ap-pear early as indicated by NDCG.On the other hand,CRM-singlecontext uses much less information(without global context and other coupling contexts)but still achieves rea-sonable performance.For example,if a user only inputs100 words as the query,around34%original citations can be found in the top5list.

One may wonder what would happen to some documents in the corpus which do not have enough in-link citation con-texts in history.Given an original citation from a query manuscript,we examine the correlation of its missing/hit probability to its number of historical in-link citation con-texts in CRM,shown in Figure7.

The correlation results clearly indicate that the missing/hit probability of an original citation declines/raises proportion-ally to its number of historical in-link contexts.In fact, for those new corpus documents without any in-link con-texts,our context-aware methods can still conduct context-oblivious document-based recommendation,except that we still enhance the speci?c citation motivations of a query manuscript using its out-link local contexts.

(a)recall(b)co-cited probability(c)NDCG

Figure6:Evaluate local recommendations.

(a)scatter distribution(b)probability distribution Figure7:Correlation between count/probability of missed/hit citations and their number of historical

in-link citation contexts.

7.CONCLUSIONS

In this paper,we tackled the novel problem of context-aware citation recommendation,and built a context-aware citation recommendation prototype in CiteSeerX.Our sys-tem is capable of recommending the bibliography to a manuscript and providing a ranked set of citations to a speci?c cita-tion placeholder.We developed a mathematically sound context-aware relevance model.A non-parametric proba-bilistic model as well as its scalable closed form solutions

are then introduced.We also conducted extensive experi-ments to examine the performance of our approach.

In the future,we plan to make our citation recommen-dation system publicly available.Moreover,we plan to de-velop scalable techniques for integrating various sources of features,and explore semi-supervised learning on the partial

list of citations in manuscripts.

8.REFERENCES

[1]R.Agrawal,T.Imielinski and A.Swami.Mining

Association Rules Between Sets of Items in Large

Databases.SIGMOD,1993.

[2]S.Aya,https://www.360docs.net/doc/0e8374868.html,goze and T.Joachims.Citation Classi?cation

and its Applications.ICKM’05.

[3]K.Banaszek,G.D’Ariano,M.Paris and M.Sacchi.

Maximum-likelihood estimation of the density matrix.

Physical Review A,1999.

[4] C.Basu,H.Hirsh,W.Cohen and C.Nevill-Manning.

Technical Paper Recommendation:A Study in Combining

Multiple Information Sources.J.of Arti?cial Intelligence

Research,2001.

[5] D.Blei,A.Ng and https://www.360docs.net/doc/0e8374868.html,tent dirichlet allocation.

J.Machine Learning Research2003.

[6]K.Chandrasekaran,S.Gauch,https://www.360docs.net/doc/0e8374868.html,kkaraju and H.Luong.

Concept-Based Document Recommendations for CiteSeer

Authors.Adaptive Hypermedia and Adaptive Web-Based

Systems,Springer,2008.

[7] D.Cohn and T.Hofmann.The missing link-a

probabilistic model of document content and hypertext

connectivity.NIPS’01.

[8] F.Diaz.Regularizing Ad Hoc Retrieval Scores.CIKM’05.

[9] E.Erosheva,S.Fienberg and https://www.360docs.net/doc/0e8374868.html,?erty.Mixed

membership models of scienti?c publications.PNAS2004.

[10] A.Gleason.Measures on the Closed Subspaces of a Hilbert

Space.J.of Mathematics and Mechanics,1957.

[11]S.Huang,G.Xue,B.Zhang,Z.Chen,Y.Yu and W.Ma.

TSSP:A Reinforcement Algorithm to Find Related Papers.

WI’04.

[12] A.Je?rey and H.Dai.Handbook of Mathematical

Formulas and Integrals.Academic Press,2008.

[13]J.Kleinberg.Authoritative sources in a hyperlinked

environment.J.of the ACM,1999.

[14]https://www.360docs.net/doc/0e8374868.html,?erty and G.Lebanon.Di?usion Kernels on Statistical

Manifolds.J.of Machine Learning Research,2005.

[15] D.Liben-Nowell and J.Kleinberg.The link prediction

problem for social networks.CIKM’03.

[16]S.McNee,I.Albert,D.Cosley,P.Gopalkrishnan,https://www.360docs.net/doc/0e8374868.html,m,

A.Rashid,J.Konstan and J.Riedl.On the Recommending

of Citations for Research Papers.CSCW’02.

[17]M.Melucci.A basis for information retrieval in context.

TOIS,2008.

[18]R.Nallapati,A.Ahmed,E.Xing and W.Cohen.Joint

latent topic models for text and citations.SIGKDD’08. [19]Z.Nie,Y.Zhang,J.Wen and W.Ma.Object-Level

Ranking:Bringing Order to Web Objects.WWW’05. [20] C.Rijsbergen.The Geometry of Information Retrieval.

Cambridge University Press,2004.

[21] A.Ritchie.Citation context analysis for information

retrieval.PhD thesis,University of Cambridge,2008. [22] B.Shaparenko and T.Joachims.Identifying the Original

Contribution of a Document via Language Modeling.

ECML,2009.

[23]T.Strohman,W.Croft and D.Jensen.Recommending

Citations for Academic Papers.SIGIR’07and Technical

Report,

https://www.360docs.net/doc/0e8374868.html,/getpdf.php?id=610. [24]J.Tang and J.Zhang.A Discriminative Approach to

Topic-Based Citation Recommendations PAKDD’09. [25]R.Torres,S.McNee,M.Abel,J.Konstan and J.Riedl.

Enhancing Digitial Libraries with Techlens.JCDL’04. [26] F.Wang,B.Chen and Z.Miao.A Survey on Reviewer

Assignment Problem.IEA/AIE’08.

[27] D.Zhou,S.Zhu,K.Yu,X.Song,B.Tseng,H.Zha and

L.Giles.Learning Multiple Graphs for Document

Recommendations.WWW’08.

APPENDIX

A.PROOF OF PROPOSITION5.2

By the spectral theorem,we can choose all the t i to be orthogo-nal.Each t i can also be expressed asαi1s i+···+αia s a+βi1u1+···+βib u b where the s i and u j are orthogonal unit vectors such that the s i span the same space as the c i.The log likelihood is then

k

i=1log p gen(c i)+

k

i=1

log p d(c i)

=

k

i=1

log p gen(c i)+

k

i=1

log

r

j=1

c T

i

t j t T

j

c i=

k

i=1

log p gen(c i)+

k i=1log

r

j=1

([αi1s i+···+αia s a+βi1u1+···+βib u b]·c i)2

=

k

i=1

log p gen(c i)+

k

i=1

log

r

j=1

([αi1s i+···+αia s a]·c i)2.

Since the u i are orthogonal to the c i,we can increase the likeli-hood by replacing each t i=αi1s i+···+αia s a+βi1u1+···+βib u b

with t

i =αi1s i+···+αia s a to get the matrix T

d

=

i

t

i

t T

i

without changing the likelihood.However,

T race(T

d )=

i

t

i

·t

i

=

i

(α2

i1

+···+α2

ia

)since theαi andβj are all orthogonal

<

i

(α2

i1

+···+α2

ia

+β2

i1

+···+β2

ib

)=T race(T d)=1.

Thus we need to multiply each t

i by a constantγ>1to increase

the trace of T

d to1.Multiplication by such aγwill then increase

each of the quantities in the log terms of the log likelihood.Thus

γT

d would hav

e a higher log likelihood than T d.Thus in the max-

imum likelihood solution T d=

i

t i t T

i

,each t i is in the subspace

spanned by the contexts c i and representing all of the t i requires O(r2)parameters.So,a numerical solution will have polynomial complexity in r2.

B.PROOF OF PROPOSITION5.3

Recall that we treat concepts as one-dimensional vector spaces and thus can represent them as unit vectors.Let p be the uni-form distribution over unit vectors.It is well-known that sam-pling from p is equivalent to sampling|W|independent standard Gaussian random variables(one for each dimension)and dividing them by the square root of their sum of squares.The similarity between d1and d2is then:

sim(d1;d2)=

T race(T d

1

ww T)T race(T d

2

ww T)p(w)dw

=1

k1k2 k1

i=1

k2

j=1

(c i·w)2(b j·w)2p(w)dw

=1

k1k2E

k1

i=1

k2

j=1

|W|

α=1

c2

i,α

w2α+2

|W|

β=γ+1

|W|

γ=1

c i,βwβc i,γwγ

|W|

δ=1b2

j,δ

w2

δ

+2

|W|

ω= +1

|W|

=1

b j,ωwωb j, w

.

Now,if all coordinates of w other than w i(for some i)are?xed, it is easy to see that the probability of w i=x and w i=?x are the same,and so the coordinates of w are jointly uncorrelated. This means that all terms with an odd power of some coordinate will become0in the expected value.Thus sim(d1;d2)simpli?es to:

sim(d1;d2)=1

k1k2

k1

i=1

k2

j=1

E

|W|

α=1

c2

i,α

b2

i,α

w4α+

β=γ

c2

i,β

b2

j,γ

w2

β

w2γ+4

δ>

c i,δc i, b j,δb j, w2

δ

w2

.

To compute the necessary moments,we will change basis from Cartesian coordinates to hyperspherical coordinates as follows:

w1=r cosθ1,w2=r sinθ1cosθ2,w3=r sinθ1sinθ2cosθ3,

...,w|W|?1=r sinθ1sinθ2...sinθ|W|?2cosθ|W|?1,

w|W|=r sinθ1sinθ2...sinθ|W|?2sinθ|W|?1;

r=

|W|

i=1

w2

i

≥0;

θ1∈[0,π],θ2∈[0,π],...,θ|W|?2∈[0,π],θ|W|?1∈[0,2π);

and the absolute value of the determinant of the Jacobian is

r|W|?1

|W|?2

i=1

sin|W|?1?i(θi).

Let us also de?ne

Φ≡

2π

π

...

π

∞

r|W|?1

|W|?2

i=3

sin|W|?1?i(θi)drdθ3...dθ|W|?1

(2π)|W|/2

.

The moment calculations are thus as follows:

E[w41]=1

(2π)|W|/2

∞

?∞

...

∞

?∞

x41

(

x2

i

)2

e?(

x2i)/2dx1...dx

|W|

=1

(2π)|W|/2

2π

π

...

π

∞

r4cos4(θ1)

r4

r|W|?1×

|W|?2

i=1

sin|W|?1?i(θi)drdθ1...dθ|W|?1

=Φ

π

π

(1?sin2(θ1))2sin|W|?2(θ1)sin|W|?3(θ2)dθ1dθ2

=Φ

π

π

sin|W|?2(θ1)?2sin|W|(θ1)+sin|W|+2(θ1)

sin|W|?3(θ2)dθ1dθ2 =2Φπ

(|W|?3)!!

(|W|?2)!!

?2(|W|?1)!!

(|W|)!!

+(|W|+1)!!

(|W|+2)!!

(|W|?4)!!

(|W|?3)!!

=2Φπ

(|W|?4)!!

(|W|?3)!!

(|W|?3)!!

(|W|?2)!!

1?2|W|?1

|W|

+(|W|+1)(|W|?1)

(|W|+2)|W|

=2Φπ

(|W|?4)!!

(|W|?3)!!

(|W|?3)!!

(|W|?2)!!

3

(|W|+2)|W|

,

by Wallis’s formula[12]where n!!is the double factorial de?ned

as1if n=0or1and n!!=n×(n?2)!!otherwise.Also,

E[w21w22]=1

(2π)|W|/2

∞

?∞

...

∞

?∞

x21x22

(

x2

i

)2

e?(

x2i)/2dx1...dx

|W|

=1

(2π)|W|/2

2π

π

...

π

∞

r4cos2(θ1)sin2(θ1)cos2(θ2)

r4

r|W|?1×

|W|?2

i=1

sin|W|?1?i(θi)drdθ1...dθ|W|?1

=Φ

π

π

cos2(θ1)sin2(θ1)cos2(θ2)sin|W|?2(θ1)sin|W|?3(θ2)dθ1dθ2

=Φ

π

π

sin|W|(θ1)?sin|W|+2(θ1)

sin|W|?3(θ2)?sin|W|?1(θ2)

dθ1dθ2 =2Φπ

(|W|?1)!!

|W|!!

?(|W|+1)!!

(|W|+2)!!

(|W|?4)!!

(|W|?3)!!

?(|W|?2)!!

(|W|?1)!!

=2Φπ

(|W|?4)!!

(|W|?3)!!

(|W|?3)!!

(|W|?2)!!

|W|?1

|W|

?(|W|+1)(|W|?1)

(|W|+2)|W|

1?|W|?2

|W|?1

=2Φπ

(|W|?4)!!

(|W|?3)!!

(|W|?3)!!

(|W|?2)!!

1

|W|(|W|+2)

.

Thus we have E[w4

i

]=3E[w2

i

w2

j

].Thus sim(d1;d2)is propor-

tional to E[w2

i

w2

j

]which is a universal constant.We can drop

this constant to simplify calculations.Thus our similarity is:

sim(d1;d2)

=1

k1k2

k1

i=1

k2

j=1

3

|W|

α=1

c2

i,α

b2

i,α

+

β=γ

c2

i,β

b2

j,γ

+4

δ>

c i,δc i, b j,δb j,

=1

k1k2

k1

i=1

k2

j=1

2

|W|

α=1

c2

i,α

b2

i,α

+

|W|

β=1

|W|

γ=1

c2

i,β

b2

j,γ

+4

δ>

c i,δc i, b j,δb j,

=1

k1k2

k1

i=1

k2

j=1

2(c i·b j)2+

|W|

β=1

|W|

γ=1

c2

i,β

b2

j,γ

=1

k1k2

k1

i=1

k2

j=1

2(c i·b j)2

+1,since the c i and b j are unit vectors.

Finally,after removing the additive and multiplicative constants

(they don’t a?ect ranking),we can use the following equivalent

formula:

sim(d1;d2)=

1

k1k2

k1

i=1

k2

j=1

(c i·b j)2.

考研英语(一)高频重点单词详解—2011年新题型(上)

考研英语(一)高频重点单词详解—2011 年新题型(上) 这是一篇社会教育类文章,本文是关于《思想市场:美国大学的改革与抵制》一书的书评。文章结构是问题解决型,按照“提出问题——分析问题——提出建议”的步骤展开论述。第一、二段引入人文学科在大学中陷入的奇怪困境,第三、四段分析论述原因在于大学人文学科知识和人才的专业化,第五、六段分析影响是人文学科人才处于困境。末段提出建议:改变人文学科人才的培养方式,培养起更广阔的学术视野。以下是本文中出现的10个高频重点单词,就让我们一起来学习吧! 1. 22discipline ['d?s?pl?n] n. 学科;纪律;训练;惩罚vt. 训练,训导;惩戒 【词根记忆】:dis-(前缀:否定前缀)+cip(词根:拿)+line(线)→站成一条线不许拿→纪律;学科→训练,训导;惩戒 【短语搭配】:financial discipline 财政纪律; 财务约束; 财务束缚; 财务纪律 【真题例句】:So disciplines acquire a monopoly not just over the production of knowledge, but also over the production of the producers of knowledge. 所以,各个学科需要的不仅仅是对知识生产的垄断,还有对知识生产者的产出的垄断。(2011年新题型) 2. 2professionalism [pr?'fe?(?)n(?)l?z(?)m] n. 专业主义;专家的地位;特性或方法 【词根记忆】:pro(向前)+fess(说)+sion(名词)+al(形容词)+ism(……主义)→专业主义;专家的地位 【真题例句】No disciplines have seized on professionalism with as much enthusiasm as the humanities. 没有哪个学科像人文学科那样狂热地看重职业化。(2011年新题型) 3.1professionalize [?d??st?f?'ke??n] vi. 专业化;职业化vt. 使…专业化;使…专业化 【词根记忆】:pro(向前)+fess(说)+sion(名词)+al(形容词)+ize(使……化)→使…专业化;使…专业化 【短语搭配】:to professionalize the teacher 使教师专业化 【真题例句】Besides professionalizing the professions by this separation, top American universities have professionalized the professor. 美国知名大学通过这种学科的分离不仅使学科更专业化,而且使教授也更专业化了。(2011年新题型) 4. 5enthusiasm [?n'θuz??z?m] n. 热心,热忱,热情 【词根记忆】:en-(前缀:使动)+thus(音变自Zeus,古希腊神)iasm(名词后缀)→神让我们产生的积极崇拜的情绪→狂热 【真题例句】No disciplines have seized on professionalism with as much enthusiasm as the humanities. 没有哪个学科像人文学科那样狂热地看重职业化。(2011年新题型) 5. 17humanities [hj?'m?n?t?z?k't?v?t?]

研究生英语期末考试试卷

ad if 命 封 线 密

A. some modern women prefer a life of individual freedom. B. the family is no longer the basic unit of society in present-day Europe. C. some professional people have too much work to do to feel lonely. D. Most Europeans conceive living a single life as unacceptable. 5.What is the author’s purpose in writing the passage? A. To review the impact of women becoming high earners. B. To contemplate the philosophy underlying individualism. C. To examine the trend of young people living alone. D. To stress the rebuilding of personal relationships. Passage Two American dramas and sitcoms would have been candidates for prime time several years ago. But those programs -though some remain popular -increasingly occupy fringe times slots on foreign networks. Instead, a growing number of shows produced by local broadcasters are on the air at the best times. The shift counters longstanding assumptions that TV shows produced in the United States would continue to overshadow locally produced shows from Singapore to Sicily. The changes are coming at a time when the influence of the United States on international affairs has annoyed friends and foes alike, and some people are expressing relief that at least on television American culture is no longer quite the force it once was. “There has always been a concern that the image of the world would be shaped too much by American culture,” said Dr. Jo Groebek, director general of the European Institu te for the Media, a non-profit group. Given the choice, he adds, foreign viewers often prefer homegrown shows that better reflect local tastes, cultures and historical events. Unlike in the United States, commercial broadcasting in most regions of the world -including Asia, Europe, and a lesser extent Latin America, which has a long history of commercial TV -is a relatively recent development. A majority of broadcasters in many countries were either state-owned or state-subsidized for much of the last century. Governments began to relax their control in the 1980’s by privatizing national broadcasters and granting licenses to dozens of new commercial networks. The rise of cable and satellite pay-television increased the spectrum of channels. Relatively inexperienced and often financed on a shoestring, these new commercial stations needed hours of programming fast. The cheapest and easiest way to fill airtime was to buy shows from American studios, and the bidding wars for popular shows were fierce. The big American studios took advantage of that demand by raising prices and forcing foreign broadcasters to buy less popular programs if they wanted access to the best-selling shows and movies. “The studio priced themselves out of prime time,” said Harry Evans Sloan, chairman of SBS Broadcasting, a Pan-European broadcaster. Mr. Sloan estimates that over the last decade, the price of American programs has increased fivefold even as the international ratings for these shows have declined. American broadcasters are still the biggest buyers of American-made television shows, accounting for 90% of the $25 billion in 2001 sales. But international sales which totaled $2.5 billion last year often make the difference between a profit and a loss on show. As the pace of foreign sales slows -the market is now growing at 5% a year, down from the double-digit growth of the 1990’s -studio executives are rethinking production costs. 6. Which of the following best characterizes the image embodied in American shows? A. Self-contradictory B. Prejudice-free C. Culture-loaded D. Audience-targeted 7. The intervention of governments in the 1980’s resulted in __________ . A. the patenting of domination shows and movies B. the emergence of new commercial networks C. the promotion of cable and satellite pay-television D. the intense competition coming from the outside 8. The phrase “on a shoestring” (Para. 6) most probably means __________. A. in need of capital B. after a fashion C. on second thoughts D. in the interests of themselves 9. The main reason why American dramas and sitcoms are driven out of prime time is that ____. A. they lose competitiveness B. they are not market-oriented C. they are too much priced D. they fall short of audience expectations 10. American studio producers will give thought to production costs __________. A. if they have no access to popular shows B. because their endeavors come to no avail C. since bidding wars are no longer fierce D. as international sales pace slows down Passage Three How shops can exploit people's herd mentality to increase sales 1. A TRIP to the supermarket may not seem like an exercise in psychological warfare—but it is. Shopkeepers know that filling a store with the aroma of freshly baked bread makes people feel hungry and persuades them to buy more food than they had intended. Stocking the most expensive products at eye level makes them sell faster than cheaper but less visible competitors. Now researchers are investigating how “swarm intelligence” (th at is,how ants,bees or any social animal,including humans,behave in a crowd) can be used to influence what people buy. 2. At a recent conference on the simulation of adaptive behaviour in Rome,Zeeshan-ul-hassan Usmani,a computer scientist from the Florida Institute of Technology,described a new way to increase impulse buying using this phenomenon. Supermarkets already encourage shoppers to buy things they did not realise they wanted: for instance,by placing everyday items such as milk and eggs at the back of the store,forcing shoppers to walk past other tempting goods to reach them. Mr Usmani and Ronaldo Menezes,also of the Florida Institute of Technology, set out to enhance this tendency to buy more by playing on the herd instinct. The idea is that, if a certain product is seen to be popular, shoppers are likely to choose it too. The challenge is to keep customers informed about what others are buying. 3. Enter smart-cart technology. In Mr Usmani's supermarket every product has a radio frequency identification tag, a sort of barcode that uses radio waves to transmit information,and every trolley has a scanner that reads this information and relays it to a central computer. As a customer walks past a shelf of goods, a screen on the shelf tells him how many people currently in the shop have chosen that particular product. If the number is high, he is more likely to select it too.

国家科技成果登记系统用户操作说明

国家科技成果登记系统用户操作说明 第一章系统概述 科技成果登记是成果转化、推广、统计、奖励等科技成果管理的基础。本系统对于各级成果管理机构和成果完成单位而言,是一个完全独立的科技成果管理工作系统,全国科技成果完成单位和各级科技成果管理机构使用本系统以定期或不定期的方式生成上报数据文件,再通过文件的传输,实现科技成果数据的层层上报,最后通过数据导入,形成各级成果管理部门的成果数据库。 各级成果管理机构在进行成果登记时一定要保证数据的完整性准确性和及时性。 第二章系统运行环境 2.1 硬件环境 IBM PC或兼容机,至少256MB内存,1024*768分辩率的监视器,至少剩余200M的硬盘空间。 2.2 软件环境 Windows 操作系统(如windows 98, windowsXP ,windows2000, windows2003,windows2008等),32位、64位均可。 第三章系统的安装与运行 3.1 系统软件的获得途径 3.1.1 免费向各级科技成果管理部门或科技部"NAST"项目组索要光盘; 3.1.2 从网站https://www.360docs.net/doc/0e8374868.html,或https://www.360docs.net/doc/0e8374868.html,下载应用软件。 3.2 国家科技成果登记系统软件的安装 运行系统的安装程序"国家科技成果登记系统[v9.0].exe",系统即自动安装,缺省安装目录为C:\kjcg9.0,用户可自行选择安装路径。 注意:如果操作系统是windows7、windws2008或更高版本 ①系统安装时,一定要以管理员身份运行安装文件; ②自行选择安装路径时,不要选择c:\program files下。 系统安装后自动在系统"程序"菜单下形成"国家科技成果登记系统[V9.0]"子菜单。 3.3 系统运行 点击“开始”菜单,在"程序"菜单下点击"国家科技成果登记系统V9.0"即进入本系统,屏幕上出现“国家科技成果登记系统V9.0”主窗口。 注意:登记系统7.0版及7.0以上版本将不再分管理版和简易版,过去简易版的用户在主界面选择成果完成单位,过去管理版的用户则要选择成果管理机构。 3.4 系统功能 1)用户注册:根据具体的使用对象,对成果管理机构和下属机构、成果完成单位进行注册,确定用户相应的管理功能; 2)数据处理:对本软件使用单位管理的成果进行日常的录入、修改、删除、打印等操作; 3)数据导出:将本单位管理的成果数据和单位信息,生成上报文件,并将上报文件通过传输上报给上级管理机构;

考研英语重点语法小归纳.doc

参考译文:如果这些问题得不到解决,研究行为的技术手段就会继续受到排斥,解决问题的唯一方式不能也随之继续受到排斥。 分析:很明显,and with it possibly the only way to solve our problem,是一个省略句,with做状语一般表示伴随,这一个分句只有一个状语加一个名词结构,构不成一个完整的句子。实际上,与前句相同的成分才会被省略,前一句的谓语部分是:will continue to be rejected. 所以,后一分句补充完整就是:with the rejection of the technology of behavior, possibly the only way to solve our problem will continue to be rejected. 三、从句 从句不能单独成句,但它也有主语部分和谓语部分,就像一个句子一样。所不同在于,从句须由一个关联词(connective)引导。根据引导从句为主不同大概可分为:主语从句、表语从句、宾语从句、同位语从句、定语从句和状语从句6类。前四类由于主语从句、表语从句、宾语从句及同位语从句在句子的功用相当于名词,所以通称名词性从句;定语从句功能相当于形容词,称为形容词性从句;而状语从句功能相当于副词,称为副词性从句。状语从句还可以分为条件状语从句、原因状语从句、地点状语从句和时间状语从句。在翻译的时候,它会成为一个考点,所在在做题的时候,一定要辨清它到底是什么从句,正确地翻译出来。

例如:Time was when biologists somewhat overworked the evidence that these creatures preserve the health of game by killing the physically weak,or that they prey only on“worthless”species.(2010,翻译) 分析:本题中含有两个并列的同位语从句,that these creatures preserve the health of game by killing the physically weak, or that they prey only on“worthless”species.两个that的内容是对前面的evidence进行补充说明或解释。 总之,这是在历年考研英语的基础之上总结的三个重点语法,它们重点中的重点,每年都会以一定的形式出现在考题中,希望广大考生引起足够重视,各个击破! 中国大学网考研频道。 参考译文:如果这些问题得不到解决,研究行为的技术手段就会继续受到排斥,解决问题的唯一方式不能也随之继续受到排斥。 分析:很明显,and with it possibly the only way to solve our problem,是一个省略句,with做状语一般表示伴随,这一个分句只有一个状语加一个名词结构,构不成一个完整的句子。实际

研究生-基础综合英语-单词整理

Unit1 1.semiliterate:a.semi-educated;having only an elementary level of reading and writing ability半文盲的,有初等文化的semi-:half or partly 2.dropout:n.a personwho leavesschool before finishing a course(尤指中学的)退学学生 3.do-gooder:n.sb.who does things that they think will help other people,although the other people might not find their actions helpful一个总是试着帮助别人的人(通常是贬义);不实际的社会改革家(指幼稚的理想主义者,支持善心或博爱的事件的改革者) 4.impediment:n.obstacles,barriers妨碍,阻止,阻碍,阻挡 5.trump card:anything decisiveheld in reservefor useat a critical time王牌 6.charmer:n.a personwho hasgood qualities that make you like him/her讨人喜欢的人,有魅力的人,有迷惑力的人(尤指迷人的女人) 7.get by:to be able to deal with a situation with difficulty,usually by having just enoughof sth.you need,suchas money过得去 8.settledown:to becomequiet and calm(使)安静下来;平息 9.flunk:v.to fail an exam or courseof study不及格 https://www.360docs.net/doc/0e8374868.html,posure:n.calmnessandcontrol平静;镇静;沉着 11.parade:n.a seriesof peopleor things that appearone after the other 12.at stake:to be won or lost;risked受到威胁,面临危险 13.sail:v.to move quickly and effortlessly投入 14.testimony:n.spokenor written statementthat sth.is true证词,证明 15.conspiracy:n.act of joint planning of a crime阴谋,共谋 16.doom:v.to makesb.or sth.certain to do or experiencesth.unpleasant注定 17.follow through:to continue a stroke,motion,plan,or reasoningthrough to the end 将动作、计划等进行到底 18.I flunked my secondyearexamsandwas lucky not to be thrown out of college. 19.The managementdid not seemto consideroffice safetyto be a priority. 20.Thousandsof lives will be at stake if emergency aid does not arrive in the city soon. 21.I think therewas a conspiracy to keep me out of the committee. Unit2 1.propose:v.[to sb.]to aska personto marry one提亲;求婚 2.knockoff:n.a copy or reproduction of a designetc.esp.one madeillegally假货;赝品 3.Windex:v.to clean with a kind of detergentby the brand of Windex用Windex牌清洁剂清洗 4.takethe plunge:to take a decisive first step,commit oneself irrevocably to a course of action冒险尝试 5.bridesmaid:n.a girl or unmarried woman attending the bride at a wedding女傧相;伴娘 6.maid of honor:a principal bridesmaid女傧相

江苏大学XXXX级硕士研究生英语期末考试样卷

江苏大学XXXX级硕士研究生英语期 末考试样卷 考试科目:文献阅读与翻译 考试时 间:XXXXXX Directions:Answer the following questions on the Answer Sheet. 1. How many kinds of literature do you know? And what are they? (5%) 2. How many types of professional papers do you know? And what are they? (5%) 3. What are the main linguistic features of Professional Papers? (10%) 4. What are the purposes of abstracts? How many kinds can the abstracts be roughly classified into? And what are the different kinds? (10%) 5.What is a proposal? How many kinds of proposals do you think are there? What are the main elements of a proposal? (10%) 6.Give your comments on the linguistic features of the following passage. (15%) Basic Point-Set Topology One way to describe the subject of Topology is to say that it is

研究生英语基础1复习重点句子

1.1 What we do in the next two to three years will determine our future. This is the defining moment. 2 some of the dire projections may not occur, but in light of the warnings from our best scientists, it will be beyond irresponsible to take that bet. 3By badly disrupting that envelope, we adversely affect every dimension of human well-being that is tied to the environment. 4 In 2004, Swiss Re warned in a report that the costs of natural disasters, aggravated by climate change, threatened to double to150 billon a year in 10 years. 5The threat of this major disruption coming from a number of factors, including the increased costs of damage from extreme weather events such as floods, droughts, hurricanes, heat waves, and major storms. 6 Stern also points to the consequences of climate change on the environment and on human health as economic growth and productivity suffer under the weight of degrading environmental conditions. 7Moreover, we would incur a very large opportunity cost, having lost out on the chance to become the economic leader in developing alternative and more efficient uses of energy. 4.1In recent years the mushrooming power, functionality and ubiquity of computers and the Internet have outstripped early forecasts about technology’s ra te of advancement and usefulness in everyday life. 2Alert pundits now foresee a world saturated with powerful computer chips, which will increasingly insinuate themselves into our gadgets, dwellings, apparel and even our bodies. 3In light of what I have just described as a history of largely unfulfilled goals in robotics, why do I believe that rapid progress and stunning accomplishments are in the offing? 4Properly educated, the resulting robots will become quite formidable. In fact, I am sure they will outperform us in any conceivable area of endeavor, intellectual or physical. 5 Human will play a pivotal role in formulating the intricate complex of laws that will govern corporate behavior. 5.1Sundown had seen his pockets empty before, but sunrise had always seen them lined. 2Along came a youngster of five, headed for the dispensary, stepping high with the consequence of a big errand, possibly one to which his advancing age had earned him promotion. 3 Content, light-hearted, ironical, keenly philosophic, he watched the moon drifting in and out amidst a maze of flying clouds. 4People’s money must be spent to advance their priorities, not to line pockets of contractors or to maintain projects that don’t work. 5 They had entered a maze of fences, through which they twisted and turned for a long time before finally finding their way out again. 7.1 Through necessity, I was entering a club more viewers are joining by choice: the post television society. 2Unless you got proactive with VCR, you did not copy, carry or remix what you saw. This was why mass media were culturally unifying: those moments that mattered, we all saw in exactly the same way. 3Why sit through 90 minutes waiting for the good bits when an army of online editors will separate the wit from the chaff? 8.1But amid this gloom, there’s buzz about consumers’ shifting demand toward ‘green homes’---and how builders with this expertise remain busy despite the bust. 2.It’s taken almost as a fait accompli,that green building is where the market is headed. 3.If there is a downside to this trend, it may be the growing numbers of green homeowners who’ll brag about low utility bills the way golfers boast of low golf scores.

武汉大学研究生英语期末试题 答案及评分 2009级

Keys to Paper A (1---65 题每题一分,客观题共65分) 1-10 B D A C B C C D A B 11-20 A B D A C A D C B D 21-30 B D C A B D C A C B 31-45 D A D A B D C A C B C D C A B 46-55 A D C A B A C C D D 56-65 A C D B D A B C C D Part IV 汉译英(评分给正分,每小题都需打分,精确到0.5分) 1. China is a large country with four-fifths of the population engaged in agriculture, but only one tenth of the land is farmland, the rest being mountains, forests and places for urban and other uses. (2分) 2. An investigation indicates that non-smoking women living in a smoking family environment for 40 years or still longer will have double risk of developing lung cancer. (2分) 3. In our times, anyone who wants to play an important role in a society as he wishes must receive necessary education. With the development of science, more courses are offered in primary schools and middle schools. Compared with the education in the past, modern education places more stress on practicality. (3 分) 英译汉(评分给正分,每小题都需打分,精确到0.5分) 4. 程式化思维是人们交流的绊脚石,因为它有碍于人们对事物的客观观察。客观观察指人 们敏感地搜寻线索,引导自己的想象更接近他人的现实。(2分) 5. 当经济学家最初探讨经济发展的原因时,他们发现:人们一直认为无法解释的剩余因素是人力资本。人力资本,即人口的技能,是造成各国生产力差距以及地位不平等的一个重要因素。(3分) 6. 下文从解决妇女贫困问题的角度出发,探讨两性平等、减轻贫困和环境的可持续性诸目的之间的协同作用,涉及能源短缺、水资源缺乏、健康、气候变化、自然灾害,以及授予妇女在农业、林业、生态多元化管理领域中的权力使之创造可持续的生存方式等问题。(3分) Part V Summary (20分) 评分标准:主要看考生是否了解概要写作的方法以及能否用恰当的语言来表达。概要一定要客观简洁地表达原文的主要内容,不需要评论,不能照抄原文。具体给分标准为:(1)内容和形式都达标,仅有一二处小错:18-19分。(2)内容缺少一到三点,形式错误不过三处:16-17分。(3)内容欠缺较多,形式错误有五六处:14-15分。(4)内容欠缺较多,形式错误有十来处:12-13分。 Science and Humanity The twentieth century has made greater change to the world, which was brought by the progress in science, than any previous century. Unfortunately, not all these changes did good to the human society. Some of them have done serious damage to mankind and have been even predicted to destroy the whole world someday if out of control. In fact, mankind is not biologically programmed for violent behaviors like war. People are faced with a dilemma in which we would like to see science develop freely, but cannot afford the result of that. It is a