data preprocessing for supervised learning

Abstract—Many factors affect the success of Machine Learning (ML) on a given task. The representation and quality of the instance data is first and foremost. If there is much irrelevant and redundant information present or noisy and unreliable data, then knowledge discovery during the training phase is more difficult. It is well known that data preparation and filtering steps take considerable amount of processing time in ML problems. Data pre-processing includes data cleaning, normalization, transformation, feature extraction and selection, etc. The product of data pre-processing is the final training set. It would be nice if a single sequence of data pre-processing algorithms had the best performance for each data set but this is not happened. Thus, we present the most well know algorithms for each step of data pre-processing so that one achieves the best performance for their data set.

Keywords—data mining, feature selection, data cleaning

I.I NTRODUCTION

HE data preprocessing can often have a significant impact on generalization performance of a supervised ML algorithm. The elimination of noise instances is one of the most difficult problems in inductive ML [48]. Usually the removed instances have excessively deviating instances that have too many null feature values. These excessively deviating features are also referred to as outliers. In addition, a common approach to cope with the infeasibility of learning from very large data sets is to select a single sample from the large data set. Missing data handling is another issue often dealt with in the data preparation steps.

The symbolic, logical learning algorithms are able to process symbolic, categorical data only. However, real-world problems involve both symbolic and numerical features. Therefore, there is an important issue to discretize numerical (continuous) features. Grouping of values of symbolic features is a useful process, too [18]. It is a known problem that features with too many values are overestimated in the process of selecting the most informative features, both for inducing decision trees and for deriving decision rules. Moreover, in real-world data, the representation of data often uses too many features, but only a few of them may be related to the target concept. There may be redundancy, where Manuscript received Feb 19, 2006. The Project is Co-Funded by the European Social Fund & National Resources - EPEAEK II.

S. B. Kotsiantis is with Educational Software Development Laboratory, University of Patras, Greece (phone: +302610997833; fax: +302610997313; e-mail: sotos@ math.upatras.gr).

D. Kanellopoulos is with Educational Software Development Laboratory, University of Patras, Greece (e-mail: dkanellop@ teipat.gr).

P. E. Pintelas is with Educational Software Development Laboratory, University of Patras, Greece (e-mail: pintelas@ math.upatras.gr). certain features are correlated so that is not necessary to include all of them in modeling; and interdependence, where two or more features between them convey important information that is obscure if any of them is included on its own [15]. Feature subset selection is the process of identifying and removing as much irrelevant and redundant information as possible. This reduces the dimensionality of the data and may allow learning algorithms to operate faster and more effectively. In some cases, accuracy on future classification can be improved; in others, the result is a more compact, easily interpreted representation of the target concept. Furthermore, the problem of feature interaction can be addressed by constructing new features from the basic feature set. Transformed features generated by feature construction may provide a better discriminative ability than the best subset of given features

This paper addresses issues of data pre-processing that can have a significant impact on generalization performance of a ML algorithm. We present the most well know algorithms for each step of data pre-processing so that one achieves the best performance for their data set.

The next section covers instance selection and outliers detection. The topic of processing unknown feature values is described in section 3. The problem of choosing the interval borders and the correct arity (the number of categorical values) for the discretization is covered in section 4. The section 5 explains the data normalization techniques (such as scaling down transformation of the features) that are important for many neural network and k-Nearest Neighbourhood algorithms, while the section 6 describes the Feature Selection (FS) methods. Finally, the feature construction algorithms are covered in section 7 and the closing section concludes this work.

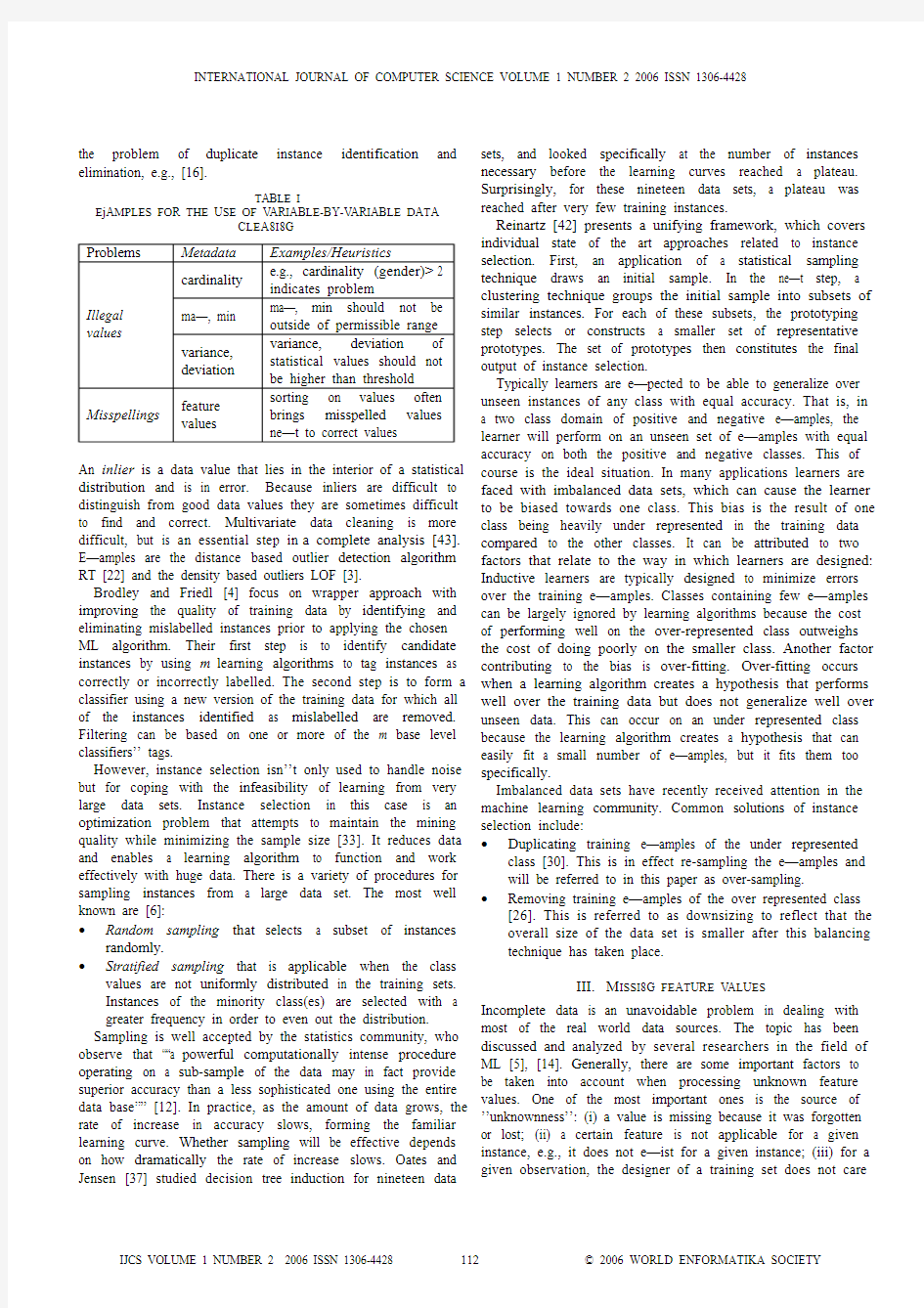

II.I NSTANCE S ELECTION AND O UTLIERS D ETECTION Generally, instance selection approaches are distinguished between filter and wrapper [13], [21]. Filter evaluation only considers data reduction but does not take into account activities. On contrary, wrapper approaches explicitly emphasize the ML aspect and evaluate results by using the specific ML algorithm to trigger instance selection. Variable-by-variable data cleaning is straightforward filter approach (those values that are suspicious due to their relationship to a specific probability distribution, say a normal distribution with a mean of 5, a standard deviation of 3, and a suspicious value of 10). Table 1 shows examples of how this metadata can help on detecting a number of possible data quality problems. Moreover, a number of authors focused on

Data Preprocessing for Supervised Leaning

S. B. Kotsiantis, D. Kanellopoulos and P. E. Pintelas

T

the problem of duplicate instance identification and elimination, e.g., [16].

TABLE I

EXAMPLES FOR THE USE OF VARIABLE-BY-VARIABLE DATA

CLEANING

Problems Metadata Examples/Heuristics

cardinality e.g., cardinality (gender)>2 indicates problem

max, min max, min should not be outside of permissible range

Illegal

values

variance,

deviation variance, deviation of statistical values should not be higher than threshold

Misspellings feature

values

sorting on values often

brings misspelled values

next to correct values

An inlier is a data value that lies in the interior of a statistical distribution and is in error. Because inliers are difficult to distinguish from good data values they are sometimes difficult to find and correct. Multivariate data cleaning is more difficult, but is an essential step in a complete analysis [43]. Examples are the distance based outlier detection algorithm RT [22] and the density based outliers LOF [3].

Brodley and Friedl [4] focus on wrapper approach with improving the quality of training data by identifying and eliminating mislabelled instances prior to applying the chosen ML algorithm. Their first step is to identify candidate instances by using m learning algorithms to tag instances as correctly or incorrectly labelled. The second step is to form a classifier using a new version of the training data for which all of the instances identified as mislabelled are removed. Filtering can be based on one or more of the m base level classifiers?’ tags.

However, instance selection isn?’t only used to handle noise but for coping with the infeasibility of learning from very large data sets. Instance selection in this case is an optimization problem that attempts to maintain the mining quality while minimizing the sample size [33]. It reduces data and enables a learning algorithm to function and work effectively with huge data. There is a variety of procedures for sampling instances from a large data set. The most well known are [6]:

x Random sampling that selects a subset of instances randomly.

x Stratified sampling that is applicable when the class values are not uniformly distributed in the training sets.

Instances of the minority class(es) are selected with a greater frequency in order to even out the distribution. Sampling is well accepted by the statistics community, who observe that ?“a powerful computationally intense procedure operating on a sub-sample of the data may in fact provide superior accuracy than a less sophisticated one using the entire data base?” [12]. In practice, as the amount of data grows, the rate of increase in accuracy slows, forming the familiar learning curve. Whether sampling will be effective depends on how dramatically the rate of increase slows. Oates and Jensen [37] studied decision tree induction for nineteen data sets, and looked specifically at the number of instances necessary before the learning curves reached a plateau. Surprisingly, for these nineteen data sets, a plateau was reached after very few training instances.

Reinartz [42] presents a unifying framework, which covers individual state of the art approaches related to instance selection. First, an application of a statistical sampling technique draws an initial sample. In the next step, a clustering technique groups the initial sample into subsets of similar instances. For each of these subsets, the prototyping step selects or constructs a smaller set of representative prototypes. The set of prototypes then constitutes the final output of instance selection.

Typically learners are expected to be able to generalize over unseen instances of any class with equal accuracy. That is, in a two class domain of positive and negative examples, the learner will perform on an unseen set of examples with equal accuracy on both the positive and negative classes. This of course is the ideal situation. In many applications learners are faced with imbalanced data sets, which can cause the learner to be biased towards one class. This bias is the result of one class being heavily under represented in the training data compared to the other classes. It can be attributed to two factors that relate to the way in which learners are designed: Inductive learners are typically designed to minimize errors over the training examples. Classes containing few examples can be largely ignored by learning algorithms because the cost of performing well on the over-represented class outweighs the cost of doing poorly on the smaller class. Another factor contributing to the bias is over-fitting. Over-fitting occurs when a learning algorithm creates a hypothesis that performs well over the training data but does not generalize well over unseen data. This can occur on an under represented class because the learning algorithm creates a hypothesis that can easily fit a small number of examples, but it fits them too specifically.

Imbalanced data sets have recently received attention in the machine learning community. Common solutions of instance selection include:

x Duplicating training examples of the under represented class [30]. This is in effect re-sampling the examples and will be referred to in this paper as over-sampling.

x Removing training examples of the over represented class

[26]. This is referred to as downsizing to reflect that the

overall size of the data set is smaller after this balancing technique has taken place.

III.M ISSING FEATURE VALUES

Incomplete data is an unavoidable problem in dealing with most of the real world data sources. The topic has been discussed and analyzed by several researchers in the field of ML [5], [14]. Generally, there are some important factors to be taken into account when processing unknown feature values. One of the most important ones is the source of ?’unknownness?’: (i) a value is missing because it was forgotten or lost; (ii) a certain feature is not applicable for a given instance, e.g., it does not exist for a given instance; (iii) for a given observation, the designer of a training set does not care

about the value of a certain feature (so-called don?’t-care value).

Analogically with the case, the expert has to choose from a number of methods for handling missing data [27]:

x Method of Ignoring Instances with Unknown Feature Values: This method is the simplest: just ignore the instances, which have at least one unknown feature value. x Most Common Feature Value: The value of the feature that occurs most often is selected to be the value for all the unknown values of the feature.

x Concept Most Common Feature Value:This time the value of the feature, which occurs the most common within the same class is selected to be the value for all the unknown values of the feature.

x Mean substitution:Substitute a feature?’s mean value computed from available cases to fill in missing data values on the remaining cases. A smarter solution than using the ?“general?” feature mean is to use the feature mean for all samples belonging to the same class to fill in the missing value

x Regression or classification methods:Develop a regression or classification model based on complete case data for a given feature, treating it as the outcome and using all other relevant features as predictors.

x Hot deck imputation: Identify the most similar case to the case with a missing value and substitute the most similar case?’s Y value for the missing case?’s Y value.

x Method of Treating Missing Feature Values as Special Values: treating ?“unknown?” itself as a new value for the features that contain missing values.

IV.D ISCRETIZATION

Discretization should significantly reduce the number of possible values of the continuous feature since large number of possible feature values contributes to slow and ineffective process of inductive ML. The problem of choosing the interval borders and the correct arity for the discretization of a numerical value range remains an open problem in numerical feature handling. The typical discretization process is presented in the Fig. 1.

Generally, discretization algorithms can be divided into unsupervised algorithms that discretize attributes without taking into account the class labels and supervised algorithms that discretize attributes by taking into account the class-attribute [34]. The simplest discretization method is an unsupervised direct method named equal size discretization. It calculates the maximum and the minimum for the feature that is being discretized and partitions the range observed into k equal sized intervals. Equal frequency is another unsupervised method. It counts the number of values we have from the feature that we are trying to discretize and partitions it into intervals containing the same number of instances.

// Sorting continuous attribute

// Selects a candidate cutpoint

// or adjacent intervals

// Invokes appropriate measure

// Checks the outcome

// Discretize by splitting or

// merging adjacent intervals

// Controls the overall discretization

//based on some measure

Sorting

Evaluation

Splitting/

Merging

Stopping

Fig. 1 Discretization process

Most discretization methods are divided into top-down and bottom-up methods. Top down methods start from the initial interval and recursively split it into smaller intervals. Bottom-up methods start from the set of single value intervals and iteratively merge neighboring intervals. Some of these methods require user parameters to modify the behavior of the discretization criterion or to set up a threshold for the stopping rule. Boulle [2] presented a recent discretization method named Khiops. This is a bottom-up method based on the global optimization of chi-square.

Moreover, error-based methods, for example Maas [35], evaluate candidate cut points against an error function and explore a search space of boundary points to minimize the sum of false positive and false negative errors on the training set. Entropy is another supervised incremental top down method described in [11]. Entropy discretization recursively selects the cut-points minimizing entropy until a stopping criterion based on the Minimum Description Length criterion ends the recursion.

Static methods, such as binning and entropy-based partitioning, determine the number of partitions for each feature independent of the other features. On the other hand, dynamic methods [38] conduct a search through the space of possible k partitions for all features simultaneously, thereby capturing interdependencies in feature discretization. Kohavi and Sahami [23] have compared static discretization with dynamic methods using cross-validation to estimate the accuracy of different values of k. However, they report no significant improvement in employing dynamic discretization over static methods.

V.D ATA NORMALIZATION Normalization is a "scaling down" transformation of the features. Within a feature there is often a large difference between the maximum and minimum values, e.g. 0.01 and 1000. When normalization is performed the value magnitudes and scaled to appreciably low values. This is important for many neural network and k-Nearest Neighbourhood algorithms. The two most common methods for this scope are:

x

min-max normalization:

:A

A A A

A A

min new min new max new min max min v v _)__('

x

z-score normalization: A

A

dev stand mean v v _'

where v is the old feature value and v?’ the new one.

VI. F EATURE S ELECTION

Feature subset selection is the process of identifying and removing as much irrelevant and redundant features as possible (see Fig. 2). This reduces the dimensionality of the data and enables learning algorithms to operate faster and more effectively. Generally, features are characterized as:

x Relevant: These are features have an influence on the

output and their role can not be assumed by the rest

x Irrelevant: Irrelevant features are defined as those features

not having any in uence on the output, and whose values are generated at random for each example.

x Redundant: A redundancy exists whenever a feature can

take the role of another (perhaps the simplest way to model redundancy).

FS algorithms in general have two components [20]: a selection algorithm that generates proposed subsets of features and attempts to find an optimal subset; and an evaluation algorithm that determines how ?‘good?’ a proposed feature subset is, returning some measure of goodness to the selection algorithm. However, without a suitable stopping criterion the FS process may run exhaustively or forever through the space of subsets. Stopping criteria can be: (i) whether addition (or deletion) of any feature does not produce a better subset; and (ii) whether an optimal subset according to some evaluation function is obtained.

Ideally, feature selection methods search through the subsets of features, and try to find the best one among the competing 2N candidate subsets according to some evaluation function. However, this procedure is exhaustive as it tries to find only the best one. It may be too costly and practically prohibitive, even for a medium-sized feature set size (N). Other methods based on heuristic or random search methods attempt to reduce computational complexity by compromising performance.

Langley [28] grouped different FS methods into two broad groups (i.e., filter and wrapper) based on their dependence on the inductive algorithm that will finally use the selected subset. Filter methods are independent of the inductive

algorithm, whereas wrapper methods use the inductive algorithm as the evaluation function. The filter evaluation functions can be divided into four categories: distance, information, dependence and consistency .

x Distance : For a two-class problem, a feature X is

preferred to another feature Y if X induces a greater difference between the two-class conditional probabilities than Y [24].

x Information: Feature X is preferred to feature Y if the

information gain from feature X is greater than that from feature Y [7].

x Dependence: The coefficient is a classical dependence

measure and can be used to find the correlation between a feature and a class. If the correlation of feature X with class C is higher than the correlation of feature Y with C , then feature X is preferred to Y [20].

x Consistency: two samples are in conflict if they have the

same values for a subset of features but disagree in the class they represent [31].

Relief [24] uses a statistical method to select the relevant features. Relief randomly picks a sample of instances and for each instance in it finds Near Hit and Near Miss instances based on the Euclidean distance measure. Near Hit is the instance having minimum Euclidean distance among all instances of the same class as that of the chosen instance; Near Miss is the instance having minimum Euclidean distance among all instances of different class. It updates the weights of the features that are initialized to zero in the beginning based on an intuitive idea that a feature is more relevant if it distinguishes between an instance and its Near Miss and less relevant if it distinguishes between an instance and its Near Hit . After exhausting all instances in the sample, it chooses all features having weight greater than or equal to a threshold. Several researchers have explored the possibility of using a particular learning algorithm as a pre-processor to discover useful feature subsets for a primary learning algorithm. Cardie [7] describes the application of decision tree algorithms to the task of selecting feature subsets for use by instance based learners. C4.5 is run over the training set and the features that appear in the pruned decision tree are selected. In a similar approach, Singh and Provan [45] use a greedy oblivious decision tree algorithm to select features from which to construct a Bayesian network. BDSFS (Boosted Decision Stump FS) uses boosted decision stumps as the pre-processor to discover the feature subset [9]. The number of features to be selected is a parameter, say k , to the FS algorithm. The boosting algorithm is run for k rounds, and at each round all features that have previously been selected are ignored. If a feature is selected in any round, it becomes part of the set that will be returned.

FS with neural nets can be thought of as a special case of architecture pruning, where input features are pruned, rather than hidden neurons or weights. The neural-network feature selector (NNFS) is based on elimination of input layer weights [44]. The weights-based feature saliency measures bank on the idea that weights connected to important features attain large absolute values while weights connected to unimportant features would probably attain values somewhere near zero.

Some of FS procedures are based on making comparisons between the saliency of a candidate feature and the saliency of a noise feature [1].

LVF [31] is consistency driven method can handle noisy domains if the approximate noise level is known a-priori. LVF generates a random subset S from the feature subset space during each round of execution. If S contains fewer features than the current best subset, the inconsistency rate of the dimensionally reduced data described by S is compared with the inconsistency rate of the best subset. If S is at least as consistent as the best subset, replaces the best subset. LVS [32] is a variance of LVF that can decrease the number of checkings especially in the case of large datasets.

In Hall [17], a correlation measure is applied to evaluate the goodness of feature subsets based on the hypothesis that a good feature subset is one that contains features highly correlated with the class, yet uncorrelated with each other. Yu and Liu [50] introduced a novel concept, predominant correlation, and proposed a fast filter method which can identify relevant features as well as redundancy among relevant features without pairwise correlation analysis. Generally, an optimal subset is always relative to a certain evaluation function (i.e., an optimal subset chosen using one evaluation function may not be the same as that which uses another evaluation function). Wrapper methods wrap the FS around the induction algorithm to be used, using cross-validation to predict the benefits of adding or removing a feature from the feature subset used. In forward stepwise selection, a feature subset is iteratively built up. Each of the unused variables is added to the model in turn, and the variable that most improves the model is selected. In backward stepwise selection, the algorithm starts by building a model that includes all available input variables (i.e. all bits are set). In each iteration, the algorithm locates the variable that, if removed, most improves the performance (or causes least deterioration). A problem with forward selection is that it may fail to include variables that are interdependent, as it adds variables one at a time. However, it may locate small effective subsets quite rapidly, as the early evaluations, involving relatively few variables, are fast. In contrast, in backward selection interdependencies are well handled, but early evaluations are relatively expensive. Due to the naive Bayes classifier?’s assumption that, within each class, probability distributions for features are independent of each other, Langley and Sage [29] note that its performance on domains with redundant features can be improved by removing such features. A forward search strategy is employed to select features for use with na?ve Bayes, as opposed to the backward strategies that are used most often with decision tree algorithms and instance based learners.

Sequential forward floating selection (SFFS) and sequential backward floating selection (SBFS) are characterized by the changing number of features included or eliminated at different stages of the procedure [46]. The adaptive floating search [47] is able to find a better solution, of course at the expense of significantly increased computational time.

The genetic algorithm is another well-known approach for FS [49]. In each iteration, a feature is chosen and raced between being in the subset or excluded from it. All combinations of unknown features are used with equal probability. Due to the probabilistic nature of the search, a

feature that should be in the subset will win the race, even if it

is dependent on another feature. An important aspect of the genetic algorithm is that it is explicitly designed to exploit

epistasis (that is, interdependencies between bits in the string), and thus should be well-suited for this problem domain.

However, genetic algorithms typically require a large number

of evaluations to reach a minimum.

Piramuthu [39] compared a number of FS techniques without finding a real winner. To combine the advantages of filter and wrapper models, algorithms in a hybrid model have recently been proposed to deal with high dimensional data [25]. In these algorithms, first, a goodness measure of feature subsets based on data characteristics is used to choose best subsets for a given cardinality, and then, cross validation is exploited to decide a final best subset across different cardinalities.

VII.F EATURE CONSTRUCTION

The problem of feature interaction can be also addressed by

constructing new features from the basic feature set. This technique is called feature construction/transformation. The

new generated features may lead to the creation of more concise and accurate classifiers. In addition, the discovery of

meaningful features contributes to better comprehensibility of

the produced classifier, and better understanding of the learned concept.

Assuming the original set A of features consists of a1, a2, ...,

a n, some variants of feature transformation is defined below. Feature transformation process can augment the space of

features by inferring or creating additional features. After

feature construction, we may have additional m features a n+1, a n+2, ..., a n+m. For example, a new feature a k (n < k d n + m) could be constructed by performing a logical operation of a i and a j from the original set of features.

The GALA algorithm [19] performs feature construction throughout the course of building a decision tree classifier. New features are constructed at each created tree node by performing a branch and bound search in feature space. The search is performed by iteratively combining the feature having the highest InfoGain value with an original basic feature that meets a certain filter criterion. GALA constructs new binary features by using logical operators such as conjunction, negation, and disjunction. On the other hand, Zheng [51] creates at-least M-of-N features. For a given instance, the value of an at-least M-of-N representation is true if at least M of its conditions is true of the instance while it is false, otherwise.

Feature transformation process can also extract a set of new

features from the original features through some functional mapping. After feature extraction, we have b1, b2,..., b m (m < n), b i= f i(a1, a2,...,a n), and f i is a mapping function. For instance for real valued features a1and a2, for every object x we can define b1(x) = c1*a1(x) + c2*a2(x) where c1 and c2 are constants. While FICUS [36] is similar in some aspects to some of the existing feature construction algorithms (such as GALA), its main strength and contribution are its generality

and flexibility. FICUS was designed to perform feature generation given any feature representation specification (mainly the set of constructor functions) using its general-purpose grammar.

The choice between FS and feature construction depends on the application domain and the specific training data, which are available. FS leads to savings in measurements cost since some of the features are discarded and the selected features retain their original physical interpretation. In addition, the retained features may be important for understanding the physical process that generates the patterns. On the other hand, transformed features generated by feature construction may provide a better discriminative ability than the best subset of given features, but these new features may not have a clear physical meaning.

VIII.C ONCLUSION

Machine learning algorithms automatically extract knowledge from machine-readable information. Unfortunately, their success is usually dependant on the quality of the data that they operate on. If the data is inadequate, or contains extraneous and irrelevant information, machine learning algorithms may produce less accurate and less understandable results, or may fail to discover anything of use at all. Thus, data pre-processing is an important step in the machine learning process. The pre-processing step is necessary to resolve several types of problems include noisy data, redundancy data, missing data values, etc. All the inductive learning algorithms rely heavily on the product of this stage, which is the final training set.

By selecting relevant instances, experts can usually remove irrelevant ones as well as noise and/or redundant data. The high quality data will lead to high quality results and reduced costs for data mining. In addition, when a data set is too huge, it may not be possible to run a ML algorithm. In this case, instance selection reduces data and enables a ML algorithm to function and work effectively with huge data.

In most cases, missing data should be pre-processed so as to allow the whole data set to be processed by a supervised ML algorithm. Moreover, most of the existing ML algorithms are able to extract knowledge from data set that store discrete features. If the features are continuous, the algorithms can be integrated with a discretization algorithm that transforms them into discrete attributes. A number of studies [23], [20] comparing the effects of using various discretization techniques (on common ML domains and algorithms) have found the entropy based methods to be superior overall. Feature subset selection is the process of identifying and removing as much of the irrelevant and redundant information as possible. Feature wrappers often achieve better results than filters due to the fact that they are tuned to the specific interaction between an induction algorithm and its training data. However, they are much slower than feature filters. Moreover, the problem of feature interaction can be addressed by constructing new features from the basic feature set (feature construction). Generally, transformed features generated by feature construction may provide a better discriminative ability than the best subset of given features, but these new features may not have a clear physical meaning. It would be nice if a single sequence of data pre-processing algorithms had the best performance for each data set but this is not happened. Thus, we presented the most well known algorithms for each step of data pre-processing so that one achieves the best performance for their data set.

R EFERENCES

[1]Bauer, K.W., Alsing, S.G., Greene, K.A., 2000. Feature screening using

signal-to-noise ratios. Neurocomputing 31, 29?–44.

[2]M. Boulle. Khiops: A Statistical Discretization Method of Continuous

Attributes. Machine Learning 55:1 (2004) 53-69

[3]Breunig M. M., Kriegel H.-P., Ng R. T., Sander J.: ?‘LOF: Identifying

Density-Based Local Outliers?’, Proc. ACM SIGMOD Int. Conf. On Management of Data (SIGMOD 2000), Dallas, TX, 2000, pp. 93-104. [4]Brodley, C.E. and Friedl, M.A. (1999) "Identifying Mislabeled Training

Data", AIR, Volume 11, pages 131-167.

[5]Bruha and F. Franek: Comparison of various routines for unknown

attribute value processing: covering paradigm. International Journal of Pattern Recognition and Artificial Intelligence, 10, 8 (1996), 939-955 [6]J.R. Cano, F. Herrera, M. Lozano. Strategies for Scaling Up

Evolutionary Instance Reduction Algorithms for Data Mining. In: L.C.

Jain, A. Ghosh (Eds.) Evolutionary Computation in Data Mining, Springer, 2005, 21-39

[7] C. Cardie. Using decision trees to improve cased-based learning. In

Proceedings of the First International Conference on Knowledge Discovery and Data Mining. AAAI Press, 1995.

[8]M. Dash, H. Liu, Feature Selection for Classification, Intelligent Data

Analysis 1 (1997) 131?–156.

[9]S. Das. Filters, wrappers and a boosting-based hybrid for feature

selection. Proc. of the 8th International Conference on Machine Learning, 2001.

[10]T. Elomaa, J. Rousu. Efficient multisplitting revisited: Optima-

preserving elimination of partition candidates. Data Mining and Knowledge Discovery 8:2 (2004) 97-126

[11]Fayyad U., and Irani K. (1993). Multi-interval discretization of

continuous-valued attributes for classification learning. In Proc. of the Thirteenth Int. Joint Conference on Artificial Intelligence, 1022-1027. [12]Friedman, J.H. 1997. Data mining and statistics: What?’s the connection?

Proceedings of the 29th Symposium on the Interface Between Computer Science and Statistics.

[13]Marek Grochowski, Norbert Jankowski: Comparison of Instance

Selection Algorithms II. Results and Comments. ICAISC 2004a: 580-585.

[14]Jerzy W. Grzymala-Busse and Ming Hu, A Comparison of Several

Approaches to Missing Attribute Values in Data Mining, LNAI 2005, pp. 378í385, 2001.

[15]Isabelle Guyon, André Elisseeff; An Introduction to Variable and

Feature Selection, JMLR Special Issue on Variable and Feature Selection, 3(Mar):1157--1182, 2003.

[16]Hernandez, M.A.; Stolfo, S.J.: Real-World Data is Dirty: Data Cleansing

and the Merge/Purge Problem. Data Mining and Knowledge Discovery 2(1):9-37, 1998.

[17]Hall, M. (2000). Correlation-based feature selection for discrete and

numeric class machine learning. Proceedings of the Seventeenth International Conference on Machine Learning (pp. 359?–366).

[18]K. M. Ho, and P. D. Scott. Reducing Decision Tree Fragmentation

Through Attribute Value Grouping: A Comparative Study, in Intelligent Data Analysis Journal, 4(1), pp.1-20, 2000.

[19]Hu, Y.-J., & Kibler, D. (1996). Generation of attributes for learning

algorithms. Proc. 13th International Conference on Machine Learning. [20]J. Hua, Z. Xiong, J. Lowey, E. Suh, E.R. Dougherty. Optimal number of

features as a function of sample size for various classification rules.

Bioinformatics 21 (2005) 1509-1515

[21]Norbert Jankowski, Marek Grochowski: Comparison of Instances

Selection Algorithms I. Algorithms Survey. ICAISC 2004b: 598-603. [22]Knorr E. M., Ng R. T.: ?‘A Unified Notion of Outliers: Properties and

Computation?’, Proc. 4th Int. Conf. on Knowledge Discovery and Data Mining (KDD?’97), Newport Beach, CA, 1997, pp. 219-222.

[23]R. Kohavi and M. Sahami. Error-based and entropy-based discretisation

of continuous features. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining. AAAI Press, 1996.

[24]Kononenko, I., Simec, E., and Robnik-Sikonja, M.(1997).Overcoming

the myopia of inductive learning algorithms with RELIEFF. Applied Intelligence, 7: 39?–55.

[25]S. B. Kotsiantis, P. E. Pintelas (2004), Hybrid Feature Selection instead

of Ensembles of Classifiers in Medical Decision Support, Proceedings of Information Processing and Management of Uncertainty in Knowledge-Based Systems, July 4-9, Perugia - Italy, pp. 269-276.

[26]Kubat, M. and Matwin, S., 'Addressing the Curse of Imbalanced Data

Sets: One Sided Sampling', in the Proceedings of the Fourteenth International Conference on Machine Learning, pp. 179-186, 1997. [27]Lakshminarayan K., S. Harp & T. Samad, Imputation of Missing Data in

Industrial Databases, Applied Intelligence 11, 259?–275 (1999).

[28]Langley, P., Selection of relevant features in machine learning. In:

Proceedings of the AAAI Fall Symposium on Relevance, 1?–5, 1994. [29]P. Langley and S. Sage. Induction of selective Bayesian classifiers. In

Proc. of 10th Conference on Uncertainty in Artificial Intelligence, Seattle, 1994.

[30]Ling, C. and Li, C., 'Data Mining for Direct Marketing: Problems and

Solutions', Proceedings of KDD-98.

[31]Liu, H. and Setiono, R., A probabilistic approach to feature selection?—a

filter solution. Proc. of International Conference on ML, 319?–327, 1996.

[32]H. Liu and R. Setiono. Some Issues on scalable feature selection. Expert

Systems and Applications, 15 (1998) 333-339. Pergamon.

[33]Liu, H. and H. Metoda (Eds), Instance Selection and Constructive Data

Mining, Kluwer, Boston, MA, 2001

[34]H. Liu, F. Hussain, C. Lim, M. Dash. Discretization: An Enabling

Technique. Data Mining and Knowledge Discovery 6:4 (2002) 393-423.

[35]Maas W. (1994). Efficient agnostic PAC-learning with simple

hypotheses. Proc. of the 7th ACM Conf. on Computational Learning Theory, 67-75.

[36]Markovitch S. & Rosenstein D. (2002), Feature Generation Using

General Constructor Functions, Machine Learning, 49, 59?–98, 2002. [37]Oates, T. and Jensen, D. 1997. The effects of training set size on

decision tree complexity. In ML: Proc. of the 14th Intern. Conf., pp.

254?–262.

[38]Pfahringer B. (1995). Compression-based discretization of continuous

attributes. Proc. of the 12th International Conference on Machine Learning.

[39]S. Piramuthu. Evaluating feature selection methods for learning in data

mining applications. European Journal of Operational Research 156:2 (2004) 483-494

[40]Pyle, D., 1999. Data Preparation for Data Mining. Morgan Kaufmann

Publishers, Los Altos, CA.

[41]Quinlan J.R. (1993), C4.5: Programs for Machine Learning, Morgan

Kaufmann, Los Altos, California.

[42]Reinartz T., A Unifying View on Instance Selection, Data Mining and

Knowledge Discovery, 6, 191?–210, 2002, Kluwer Academic Publishers.

[43]Rocke, D. M. and Woodruff, D. L. (1996) ?“Identification of Outliers in

Multivariate Data,?” Journal of the American Statistical Association, 91, 1047?–1061.

[44]Setiono, R., Liu, H., 1997. Neural-network feature selector. IEEE Trans.

Neural Networks 8 (3), 654?–662.

[45]M. Singh and G. M. Provan. Efficient learning of selective Bayesian

network classifiers. In Machine Learning: Proceedings of the Thirteenth International Conference on Machine Learning. Morgan Kaufmann, 1996.

[46]Somol, P., Pudil, P., Novovicova, J., Paclik, P., 1999. Adaptive floating

search methods in feature selection. Pattern Recognition Lett. 20 (11/13), 1157?–1163.

[47]P. Somol, P. Pudil. Feature Selection Toolbox. Pattern Recognition 35

(2002) 2749-2759.

[48] C. M. Teng. Correcting noisy data. In Proc. 16th International Conf. on

Machine Learning, pages 239?–248. San Francisco, 1999.

[49]Yang J, Honavar V. Feature subset selection using a genetic algorithm.

IEEE Int Systems and their Applications 1998; 13(2): 44?–49.

[50]Yu and Liu (2003), Proceedings of the Twentieth International

Conference on Machine Learning (ICML-2003), Washington DC. [51]Zheng (2000), Constructing X-of-N Attributes for Decision Tree

Learning, Machine Learning, 40, 35?–75, 2000, Kluwer Academic Publishers.

S. B. Kotsiantis received a diploma in mathematics, a Master and a Ph.D. degree in computer science from the University of Patras, Greece. His research interests are in the field of data mining and machine learning. He has more than 40 publications to his credit in international journals and conferences.

D. Kanellopoulos received a diploma in electrical engineering and a Ph.D. degree in electrical and computer engineering from the University of Patras, Greece. He has more than 40 publications to his credit in international journals and conferences.

P. E. Pintelas is a Professor in the Department of Mathematics, University of Patras, Greece. His research interests are in the field of educational software and machine learning. He has more than 100 publications in international journals and conferences.

英国文科类专业申请的情况

免费澳洲、英国、新西兰留学咨询与办理 官网:https://www.360docs.net/doc/4a9219770.html, 英国文科类专业申请的情况 随着2019年英国申请季的开始,选专业又成为我们面临的重大事情。今天我们主要帮助学生梳理一下英国文科类专业申请的情况。 英国文科类的专业主要包括:教育学、政治学、社会学和人类学、传媒等。教育学 顾名思义就是研究当老师的学问。英国大学教育学专业分支丰富,不仅有倾向于教学的分支,例如倾向于教学方法的分支, 主要培养教学这个方向。还有倾向于管理的分支。例如教育领导管理的分支。主要培养学校的行政管理人员。所以如果想去大学当辅导员的学生,可以考虑这个专业分支哦。 政治学

免费澳洲、英国、新西兰留学咨询与办理 官网:https://www.360docs.net/doc/4a9219770.html, 顾名思义研究国家和国际政治的专业。英国大学政治学主要开设专业分支有政治理论、国际关系和公共政策等。这一类专业申请比较多的是国际关系,因为国际关系相对于政治学,学习内容更加具体。例如国际关系会关注国际安全、人权与公平正义、比较政治经济学等。申请该专业的优势在于不需要专业背景,一般接受转专业申请的学生。分数要求也不高,例如去年有一个学生来自福建师范大学,本科是传播学,82分。申请到曼彻斯特大学和伯明翰大学。对于条件比较普通 的学生,可以考虑这个专业。 近期有一个学生来自于上海师范大学天华学院,本科国际商务贸易专业,分数是77分,学生比较想去好学校,在推荐学校和专业时,首先从文科出发,相对于教育学和传媒,学生申请国际关系更能申请到比较到的学校。 传媒 传媒属于我们申请的热门专业之一。传媒主要包括新闻,电影,媒体和创意产业等专业。新闻专业要求比较高的写作水平,所以新闻专业不太好申请,除非写作功底比较好的同学可以尝试。电影专业分支比较适合本科专业就是电影专业,因为课程涉及到一些动画设计等课程。媒体类和创意产业属于大家选择比较多的分支,因为专业背景比较宽泛,雅思要求比较适中,一般都是总分要求6.5(6.0)。典型学校有利兹大学、诺丁汉大学、华威大学、格拉斯哥大学、谢菲尔德大学。如果条件比较适中的学生可以选择纽卡斯尔大学、莱斯特大学、东英吉利亚大学等。去年有一个三本的学生,均分为 83,本科就读汉语言文学专业,拿到了上述3个大学的offer 。 人类学和社会学

武汉工程大学文科基金项目

所属学科及学科代码: 项目编号: 武汉工程大学文科基金项目 申请书 项目名称: 项目负责人: 联系电话: 依托学院部门: 申请日期: 武汉工程大学科技处制 2007年9月

简表填写要求 一、简表内容将输入计算机,必须认真填写,采用国家公布的标准简化汉 字。简表中学科(专业)代码按GB/T13745-92“学科分类与代码”表填写。 二、部分栏目填写要求: 项目名称——应确切反映研究内容,最多不超过25个汉字(包括标点符号)。 学科名称——申请项目所属的第二级或三级学科。 申请金额——以万元为单位,用阿拉伯数字表示,注意小数点。 起止年月——起始时间从申请的次年元月算起。 项目组其他主要成员——指在项目组内对学术思想、技术路线的制定理论分析及对项目的完成起主要作用的人员。

一、项目信息简表

二、选题:本课题国内外研究现状述评;选题的意义。 三、内容:本课题研究的基本思路和方法;主要观点。 四、预期价值:本课题理论创新程度或实际应用价值。 五、研究基础:课题负责人已有相关成果;主要参考文献。 六、完成项目的条件和保证:包括申请者和项目组主要成员业务简历、项目申请人和主要成员承担过的科研课题以及发表的论文;科研成果的社会评价;完成本课题的研究能力和时间保证;资料设备;科研手段。 (请分5部分逐项填写)。

七、经费预算

六、项目负责人承诺 我确认本申请书及附件内容真实、准确。如果获得资助,我将严格按照学校有关项目管理办法的规定,认真履行项目负责人职责,积极组织开展研究工作,合理安排研究经费,按时报送有关材料并接受检查。若申请书失实或在项目执行过程中违反有关科研项目管理办法规定,本人将承担全部责任。 负责人签字: 年月日 七、所在学院意见 负责人签字:学院盖章: 年月日 八、科技处审核 已经按照项目申报要求对项目申请人的资格及项目申请书内容进行了审核。项目如获资助,科技处将根据项目申请书内容,落实项目研究所需经费及其它条件;以保证项目按时顺利完成。 科技处盖章 年月日

202X美国留学文科专业申请建议.doc

202X美国留学文科专业申请建议 现在,去美国留学,文科专业比较容易申请一些,但是,美国的文科专业众多,该如何申请呢,下面来说说美国留学文科专业申请建议。 1、文科专业非常庞杂,常见专业有:语言类,新闻和传播,政治学,社会学,人类学,历史学,经济学,法学,教育学,心理学,建筑学,城市规划和景观设计,艺术类等。 2、若是英语专业,可以申请教育学,比如教育心理,TESOL,早期教育等;文学类,比如比较文学等;传媒类,公关,广告等;或者政治学、社会学、历史学。具体申请什么专业根据申请人的背景经历进行确定。中文和日语专业的可以申请东亚研究,有东亚研究设置的专业都是TOP50的学校,所以申请难度也是很大的。 3、美国的顶尖大学综合排名前30的学校里设置传播学院的并不多。因为传播学是后兴起的专业才有七、八十年的历史,很多老牌的学校他们排斥这样的新兴学科,所以他们不设置这样的学院,例如哈佛,耶鲁,牛津,剑桥等根本没有传播学校。有些学校虽然有传播学院或者有相关的专业但是规模一般也很小,有些学生甚至只招生本校的本科生。例如:斯坦福大学,传播学一直没有权威的专业排名,比较出名的学校有:哥伦比亚大学,纽约大学,但是这两个牛校都只设置新闻学院,并没有传播学研究。而且这两个学校的新闻类专业是属于那种超级牛人才能申请到的,一般在国内每年招生只有1-2个,甚至没有。总的来说新闻类专业申请难度很大。并且对申请人的英语及GRE成绩要求非常高,并且申请人最好有在央视,新华社,这样的背景才会比较有利。 4、政治学,社会学,人类学和历史,一般硕士的申请很难拿到奖学金,博士奖学金设置会很多,但是同时对学生的研究兴趣有要求,并希望看到学生对未来的职业发展规划。

高中理科申请书

篇一:高一文理分科申请表 高一文理分科申请表 明: 1、传 媒含播音与主持艺术、广播电视编导、服饰艺术与表演、影视表演、空中乘务等专业;美术含 绘画、书法艺术和书法教育、设计等专业;音乐含声乐、舞蹈、器乐、理论作曲、指挥等专业。 2、本 申请表经学生、家长、班主任签名后,不能再更改,学校以此为依据重新分班。 上梅 中学教务处 2013年12月28日 篇二:文理分科申请书 请书 敬的老师: 我 是,现在高()班,本人喜欢科技制作、科普读物、科技发明和生命科学等相关 知识与能力锻炼,对理科的兴趣大于对文科的兴趣。经过慎重考虑,并与家长商量,我决定申 请就读(文科、理科、美术、体育、音乐、舞蹈、传媒)班,敬请批准。 谢谢! (学 生本人签名)年月日 意上述申请。 (家 长签名)年月日 篇三:转班申请书 转班申请书尊敬的杨老师: 您好! 我想转到理科高二(16)班.因为经过假期的思考,我发现自己对文科没有很大的兴趣,而且

我也了解到自己在文科方面很难得到提高。对此,我恳请杨老师能让我转到理科高二(16) 班,我真切希望自己能转理科高二(16)班,我总结了我转班的原因,有以下几点: 一、 我觉得自己对文科的兴趣没有了之前的那种热情,而且我觉得自己在理科方面还有待提高,我 对它也很感兴趣。人们都说兴趣是学习最好的老师,有了兴趣就是成功的一半。而现在我对文 科已经没有了那种热情,又怎么会对学习文科尽心尽力、认真努力的去做呢?所以我真心的想 转到理科。 二、 我的文科成绩不是很好,而且从小我就贪玩把英语落下了很多。然而英语在文科当中可以占很 大的优势,我的英语很差,读文科没有优势,所以我希望自己能转到理科。 三、 学习文科需要很好的记忆力,但我这个人很赖,不喜欢背诵,而文科又需要背诵和 忆。 四、 根据社会的需求和我个人的发展空间,我想理科更适合我。 亡羊 补牢,为时不晚,希望杨老师能给我一次机会。 此致 敬礼 申请 人: 011年7月31日 篇四:申请书 尊敬的政府领导: 我叫 xxx,19xx年xx月xx日生,系xxx居民,配偶19xx年生,也是xxx人,我们都无固定 职业,现有家庭成员x人,家庭月收入xxx元。我们也曾经多次想买房子,但就我们这点收 入想买完全属于我们自己的房子那简直是奢望。所以本人和家人至今也一直居无定所,但为了 生活,又不得不在城区内四处奔波打工,并租房居住。由于本人家庭生活的实际困难和无住房 的实际情况,现想申请政府廉租住房一套,望领导给予批准为盼! 谢谢! 请人:

法国文科类专业留学指南 如何申请适合自己的文科类专业.doc

法国文科类专业留学指南如何申请适合自己的文科类专业法国的商科和理工科在世界范围内都是非常出色的,文科虽稍微逊色,但也是很出色的,今天就和的我一起看看法国文科类专业留学指南如何申请适合自己的文科类专业? 首先看看法国文科类专业留学有哪些优势? 法国文学在欧洲乃至世界文坛上都很有影响力,今年再次获得诺贝尔文学奖就是明证,但是如果你打算去法国读文学专业,那么实在是不大正确的选择。 任何一种文学需要本国本民族的人去学习才会比较顺手,而且一旦毕业之后也更容易获得专业认可。研究中国现当代文学的很少有西方学者,去法国读文学专业也同样如此。 而去法国学习法国历史和社科类专业也同样如此。当然这类专业显得很高大上,但本身就是些“十年一坐冷板凳”的专业,更何况是外国人去研究法国的历史社会情况,我想大多数学生是不会去选择这类专业的。事实上也是很少有学生去法国留学报考这类专业。 接着再来看看申请法国文科生专业要做好哪些准备? 1.合理选择专业,明确职业规划 法国公立学校原则上不允许学生自由转换专业,学生最好尽量申请跟自己国内专业相近或相关的专业,以避免专业跨度太大影响面签。而且法国学校非常重视学生的职业规划,建议学生申请时一定要明确自己未来计划学习的专业以及毕业后将要从事的领域,不能一味盲目追求热门专业,适合自己的才是最好的。 2.提前做好准备,语言学习充分 法国留学整个申请流程比较长,通常建议最好提前至少一年开始着手申请并开始学习法语,而且艺术设计类学校一般为全法语授课(只有少部分学校有英语授课项目),而且公立学校在法国读完语言后升专业对法语

要求相对较高,需要通过学校的考试。学好法语是保证顺利通过面签和顺利通过考试升学的必要条件。 最后再来看看法国有哪些好的文科类专业? 1.商学专业 法国大多数的商学院是独立于公立大学之外的。以前法国不少集团公司鉴于自己很难找到自己想要的实习生,因此就索性根据自己的需求成立了不少商业学院。 这样一来,对于学生来讲,理论和实践都能够结合起来。随着历史的发展,这类商学院毕业的学生能力普遍比较强,适合职场工作,因此很多法国公司甚至国际上的大型跨国公司都喜欢招聘这类商学院的毕业生。 其中,欧洲工商管理学院(INSEAD)、巴黎高等商学院(HEC)、法国著名高等学府(ESSEC)都是在国际上颇有声誉的法国商学院。像INSEAD是世界最大和最有影响力的独立商学院之一,也是欧洲最受尊重、历年排名首位的商学院。可以看到在文科专业之中,去法国留学就读这一方向的专业还是很有前途的。当然也需要注意,这类商学院的学费也比较高昂,INSEAD一年就要3-4万欧元,因此申请者需要较好的经济实力。 2.法学专业 学过法律的同学应该知道,现在我们国家的法系属于所谓的“大陆法系”。这里的“大陆”指的就是欧洲大陆。而欧洲大陆的法系的来源则是法国。 因此到法国学习法学专业,可以对于法律有更加清晰的了解和认知,更能接触到世界法学的原始样本和先进理念,其法学体系知识是非常完备的。 但是也要注意中国学生到法国去留学法学专业的话,难度会比较大。有个学生申请到法国里昂大学的法学院,可以说资质条件是非常好的,但是在那里学习的时候就曾反映,自己还是对于学习颇有些头疼。

文科主要科研项目申报信息汇总-推荐下载

主要文科科研项目申报信息 所属部委或单位:全国哲学社会科学规划办公室 所属部委或单位:国家自然科学基金委 、管路敷设技术弯扁度固定盒位置保护层防腐跨接地线弯曲半径标高等,要求技术交底。管线敷设技术包含线槽、管架等多项方式,、电气课件中调试核与校对图纸,编写复杂设备与装置高中资料试卷调试方案,编写重要设备高中资料试卷试验方案以及系统启动方案;对、电气设备调试高中资料试卷技术常高中资料试卷工况进行自动处理,尤其要避免错误高中资料试卷保护装置动作,并且拒绝动作,来避免不必要高中资

所属部委或单位:国家科技部 所属部委或单位:国家教育部 、管路敷设技术通过管线不仅可以解决吊顶层配置不规范高中资料试卷问题,而且可保障各类管路习题到位。在管路敷设过程中,要加强看护关于管路高中资料试卷连接管口处理高中资料试卷弯扁度固定盒位置保护层防腐跨接地线弯曲半径标高等,要求技术交底。管线敷设技术包含线槽、管架等多项方式,为解决高中语文电气课件中管壁薄、接口不严等问题,合理利用管线敷设技术。线缆敷设原则:在分线盒处,当不同电压回路交叉时,应采用金属隔板进行隔开处理;同一线槽内,强电回路须同时切断习题电源,线缆敷设完毕,要进行检查和检测处理。、电气课件中调试装过程中以及安装结束后进行 高中资料试卷调整试验;通电检查所有设备高中资料试卷相互作用与相互关系,根据生产工艺高中资料试卷要求,对电气设备进行空载与带负荷下高中资料试卷调控试验;对设备进行调整使其在正常工况下与过度工作下都可以正常工作;对于继电保护进行整核对定值,审核与校对图纸,编写复杂设备与装置高中资料试卷调试方案,编写重要设备高中资料试卷试验方案以及系统启动方案;对整套启动过程中高中资料试卷电气设备进行调试工作并且进行过关运行高中资料试卷技术指导。对于调试过程中高中资料试卷技术问题,作为调试人员,需要在事前掌握图纸资料、设备制造厂家出具高中资料试卷试验报告与相关技术资料,并且了解现场设备高中资料试卷布置情况与有关高中资料试卷电气系统接线等情况,然后根据规范与规程规定,制定设备调试高中、电气设备调试高中资料试卷技术电力保护装置调试技术,电力保护高中资料试卷配置技术是指机组在进行继电保护高中资料试卷总体配置时,需要在最大限度内来确保机组高中资料试卷安全,并且尽可能地缩小故障高中资料试卷破坏范围,或者对某些异常高中资料试卷工况进行自动处理,尤其要避免错误高中资料试卷保护装置动作,并且拒绝动作,来避免不必要高中资料试卷突然停机。因此,电力高中资料试卷保护装置调试技术,要求电力保护装置做到准确灵活。对于差动保护装置高中资料试卷调试技术是指发电机一变压器组在发生内部故障时,需要进行外部电源高中资料试卷切除从而采用高中资料试卷主要保护装置。

文科生申请日本留学的条件.doc

文科生申请日本留学的条件 现在,的女生比较多,一般女孩子选择文科会多一些,那文科生寻求自身更好地发展选择去日本留学申请条件是什么满足什么条件才可以申请?有什么专业可以选择?今天就来说说文科生申请日本留学的条件! 文科生去日本留学 文科生留学日本费用 日本留学性价比很高,它有着高水平教学质量,和较为亲民学费。 日本国公立大学学费 本科:53.5万日元/年约3万人民币 研究生:17万日元/半年约1万人民币 修士:53.5万日元/年约3万人民币 日本私立大学学费较国公立大学要高一些,文科相关专业学费大致如下: 本科:约70-90万日元约4-6万人民币 修士:约100-140万日元约6-8.4万人民币 另外日本设有各类奖学金,可以为同学们减轻一部分经济压力。 日本文科专业录取分析 出身校 日本文科对于学生背景学校没有明确限制。但是985、211背景出身学生申请前五名大学成功率则大很多,尤其是京都大学非常喜欢招收国内985、211院校学生。 语言要求 申请日本文科学校语言成绩非常重要,除获得日语成绩学生占绝大部分优势外,如果能掌握非常流利英语能力也很具有竞争优势。

专业背景 本专业申请和跨专业申请基本持平,但还是本专业申请学生占据优势。文科跨专业一般多是指日语专业同学选择其他专业继续攻读。 最为常见是攻读教育学和社会学,因为比起商科专业对专业出身要求低一些;也有一部分英语好日语专业学生转去研究国际关系或国际协力;也有一部分经贸日语专业学生成功申请到商科专业。 当然还有一部分日语专业学生选择继续本专业进学,多会选择研究日本语教育、日本文化或文学。 文科生留学日本读研基本条件 研究生(修士预科) 学历: 16年教育经历,大学本科生,拥有学士学位或专升本、自学考试学位取得者(可申请修士研究生);只有个别学校接受专科生,基本需要进行申请资格审查。 语言能力: 申请日本研究生一般来说文科专业日语水平需N1,N2也可,当然日语水平越高对申请会约有利。 修士 学历: 受过16年正规学校教育、具有本科毕业证和学士学位证者或受过15年教育专科生,但是需要资格审查,可以选择学校较少。 语言能力: 日语,根据大学、学科具体要求不同。有要求提供日语能力证明,有需要通过面试来判断是否达到可以满足研究需求语言水准。

美国文科研究生留学申请要求

美国文科研究生留学申请要求 美国文科研究生留学申请要求 1、背景要求: 专业要求美国文科专业申请对于申请者的专业背景要求各不相同,其中有对专业背景要求不高的传媒、教育等专业,也包括法律、国际关系、政治学等要求相关专业背景的学生才能申请的专业。 2、GPA要求:3.5+ 平均成绩要求美国文科申请虽然在中国的申请者之中不属于热门的方向,但美国文科专业会有更多的美国本土学生申请,所以一个很好的平均绩点都成为申请者强有力的竞争条件。对申请者平均分的要求一般都在3.0以上,对于法律、传媒这种相对热门的专业,或者国际关系、政治学等这种美国学生居多的专业,希望申请者的绩点能达到3.5以上。 3、语言要求:(托福\雅思\RE\) 语言要求由于文科的学习一向对语言的要求较高,另外牵扯到语言文化的不同,因此美国文科申请对于国际学生的语言要求普遍非常高,其中哥伦比亚大学、南加州大学的传媒专业甚至对申请者列出托福114分高标准。与此同时,多数美国文科强校都集中在50院校之中,因此变相提高了语言的要求。对于申请美国文科的申请者而言,建议着重于托福与GRE的Verbal部分,希望能达到至少100分托福加155分以上的GREVerbal的成绩。 扩展阅读:美国研究生留学的教育体制 美国的研究生向来都很高水平,对于研究生的要求也非常的严。也是因为这样,很多学生觉得要到美国的研究生院里去读肯定很难,其实它的门槛真的没有大家想象的那么高。如果的美国研究生已经不是从前那种精英教育,已经是非常普及的了。这是有数据可以看的,美国很多的大学中,研究生和本科生能保持1∶

1的比例,甚至有一些学校的研究生比本科生还多。 研究生主要是为了培养水平更高的人才。所以很多国家对于研究生的招收都会考察学生专业的知识掌握得如何。但是在美国这里的研究生招收,却不会对学生的专业基础有太多的考察。他们在招收硕士的时候都是用通用考试,主要是为了看学生有怎样的培养潜力。比如像是GRE这个考试是为了考查学生对于英语、数学等等方面的应用,就算是商学院必须要考的GMAT都不会有专业基础的内容。 要想进入研究生院确实不难,不过这不代表大家在申请到了之后就能不用想别的了。美国对于研究生们的要求还是很严的,学业上有非常重的负担。老师并不因为学生对于课程都是零起点就放慢教学进度,在这里,一个星期的课程内容就等同于本科一两个月的内容。此外老师还会让学生在课外做大量的阅读以及经常让学生写论文。 美国这边对于学位的规定是,只允许学生在得到学士学位后再去读研究生的学位。在学位体制上,美国采用的是五级制,而硕士的学位就有两种:一种要写论文,一种不需要写,不过学分就要求得比较高了。 而且在硕士学位上还有两种分类,就是文理科以及专业的硕士。文理科一般学分都需要要到24-30这个阶段,不过大多都是本专业的课程。专业硕士就有很明确的=定义了,会在学生的学位头衔前标明这个学生学的专业,而且这一类的课程会设置得非常紧凑,当然了,这些课程的声誉也较高。 美国在奖学金上也有很多种类,光是一个高校奖学金都能有服务性、非服务性以及贷款等等几种类别。不过美国大部分的高校都是会设置前两种奖学金给学生。虽然说此类资助不一定能包含学生一年中的费用,不过基本都能包含了2/3以上的费用。

参加高考申请书范文(精选多篇)

参加高考申请书(精选多篇) 参加申请书 申请人:______无线电元件二厂,地址:______市______路______号。法定代表人:李______,厂长。 委托代理人:刘______,男,____岁,______无线电元件二厂工程师,住本厂宿舍楼___号。 申请目的:参加诉讼。 申请理由:在______百货公司诉______无线电厂购销合同质量纠纷一案中,______百货公司声称因______无线电厂所产______牌收机录质量问题,造成了商品积压,影响了资金周转,要求赔偿损失。______无线电厂则声言我厂所产元件质量“不过关”造成的。 我厂是50年代组建的老厂家,所产______电子元件一直符合国家规定标准。______无线电厂所购该批电子原件,经______省质量检验中心检验,完全合格(见该批电子原件的质量鉴定书)。 我们认为,______无线电厂所产该批______牌收录机的质量问题,不是我厂______电子原件的问题,而是电子线路或其他技术方面的问题。此案既然涉及到我厂,其审理结果可能与我们有利害关系。为了不使我厂的合法权益遭受损失,根据《民事诉讼法》第五十六条第二款的规定,特申请参加本案的诉讼,望予批准。 此致

______人民法院 写给即将参加高考的你 写给即将参加高考的你。特别是,艺考生们! 看看时间,已经是2014年的5月7日的凌晨。我突然意识到:再过一个月,又将是一年一度的高考了!我心猛抽了一下,因为在若干年前的这个时候,我在家乡的某一所复读学校破旧的寝室里,正辗转难眠。 那个时候的我和你们一样,心怀梦想,却为自己能否顺利通过文化课而担忧。我几乎无法用语言来表达我当时内心的惶恐和压力,因为那时候我虽拿到了中戏的专业考试合格证,但那也只是仅有的一张,而那时候我已经21岁了!我知道,上天不会再给我一次机会的。 我的经历与现在电脑面前的你们不一样,你们大部分是应届生,顶多也只是复读一年或是两年。而我是从部队退役之后重新走入了复读学校,况且是在周围人群中除了我母亲以外所有人都怀疑、笑话、嘲笑我的举措之下的环境中做出的举动。记得退役之后的第一次月考,总分750分的试卷我只考了150多分,而那已经是当年的11月份,也就是说我除了要参加艺术考试的2个多月,仅有不到4个月的文化学习时间给我。被逼无奈我只有硬着头皮冲上前去。虽然说专业考试我顺利过了关,但中戏总分420分以上的录取线,外加语文90、外语70的单科小分对我来说依然是难以攻克的难题。

参加高考申请书

参加高考申请书 第一篇:参加申请书 参加申请书 申请人:______无线电元件二厂,地址:______市______路______号。法定代表人:李______,厂长。 委托代理人:刘______,男,____岁,______无线电元件二厂工程师,住本厂宿舍楼___号。 申请目的:参加诉讼。 申请理由:在______百货公司诉______无线电厂购销合同质量纠纷一案中,______百货公司声称因______无线电厂所产______牌收机录质量问题,造成了商品积压,影响了资金周转,要求赔偿损失。______无线电厂则声言我厂所产元件质量“不过关”造成的。 我厂是50年代组建的老厂家,所产______电子元件一直符合国家规定标准。______无线电厂所购该批电子原件,经______省质量检验中心检验,完全合格。 我们认为,______无线电厂所产该批______牌收录机的质量问题,不是我厂______电子原件的问题,而是电子线路或其他技术方面的问题。此案既然涉及到我厂,其审理结果可能与我们有利害关系。为了不使我厂的合法权益遭受损失,根据《民事诉讼法》第五十六条第二款的规定,特申请

参加本案的诉讼,望予批准。 此致 ______人民法院 第二篇:写给即将参加高考的你 写给即将参加高考的你 写给即将参加高考的你。特别是,艺考生们! 看看时间,已经是20XX年的5月7日的凌晨。我突然意识到:再过一个月,又将是一年一度的高考了!我心猛抽了一下,因为在若干年前的这个时候,我在家乡的某一所复读学校破旧的寝室里,正辗转难眠。 那个时候的我和你们一样,心怀梦想,却为自己能否顺利通过文化课而担忧。我几乎无法用语言来表达我当时内心的惶恐和压力,因为那时候我虽拿到了中戏的专业考试合格证,但那也只是仅有的一张,而那时候我已经21岁了!我知道,上天不会再给我一次机会的。 我的经历与现在电脑面前的你们不一样,你们大部分是应届生,顶多也只是复读一年或是两年。而我是从部队退役之后重新走入了复读学校,况且是在周围人群中除了我母亲以外所有人都怀疑、笑话、嘲笑我的举措之下的环境中做出的举动。记得退役之后的第一次月考,总分750分的试卷我只考了150多分,而那已经是当年的11月份,也就是说我除了要参加艺术考试的2个多月,仅有不到4个月的文化学

我的申请经(申请文科公派博士超长版)

我的申请经(申请文科公派博士超长版) 申请那段日子过得很混乱,所以一直逃避,不想回头去面对,总觉得理不清,更懒得理,但是最近一些朋友还有师弟妹们经常问我一些类似的问题,我想还是尽量回忆得清晰些,以备大家参考。 出国,从很多角度看,不同的标准,可以分成很多不同的种类,我需要先把自己的情况写出来,这样大家在参照的时候,可以避免盲目。 我的关键词是“文科、国内硕士、申英国博士、CSC公派出国、无中介”。 打听——申请前或者说决定要出国,在准备申请前,最重要的一件事,不论是通过周围的亲朋好友、师兄学姐老师还是上网搜索资料,主要就是看看出去是什么样的生活,出去后回来了是什么样的生活,是否是自己能接受的、想要的。算算读这么多年付出的成本、收益。成年人了嘛出个国不论是花家里的钱还是国家的钱,都是一个家庭的大事,多想想总是好的。我在这期间就徘徊了N次一直举棋不定,⊙﹏⊙b汗。当然,每个人有每个人的特殊性,我也只是泛泛的简单说一下,想清楚了,商量好了,就可以进行下一步了。 搜索资料——想去的国家、地区、学校的资料 这一部分在上一个阶段中已经完成了一部分,但是要在这个阶段继续细化。需要的信息类型因人而异,方法除了上述的之外,最主要的就是自己去学校官方网站看、找、得到第一手资料,很多人觉得不想看麻烦,满屏幕的单词字母,如果碰上个性点儿的学校页面查起信息就更麻烦。我也有过这样的经历N次,但是每次我都说服自己:这算啥啊?出去的话,都是比这麻烦N倍的破事儿,现在不学着适应,以后哭爹喊娘都没用。读硕士的话相对简单些,因为就是一两年的事儿,周围绝大部分出去读硕的朋友都是想借这个机会出去看看。如果你不想留在国外,不想继续读博,不是必须要名校和奖学金,那么现在的经济形势和出国如此低的门槛儿,小康家庭都能承受一个孩子出去读个硕。但是如果是读一两年就想留在国外的话,

理科转文科申请书.doc

理科转文科申请书 申请书的使用范围广泛,申请书也是一种专用书信,它同一般书信一样,也是表情达意的工具。下面我们来看看理科转文科申请书,欢迎阅读借鉴。 理科转文科申请书1 尊敬的学校领导: 我是高二(6)班学生xxx,由于文理科分班时考虑欠周到,盲目跟形势,忽略了自己的学科差异,导致现在学习非常吃力,理科多数课听不懂,经与家长商量,结合自身实际,特申请转入文科学习,望领导批准为谢。如果此申请能获得准许,我定将努力学习,绝不辜负老师厚望,以优异成绩来回报学校领导和老师的耐心教育。 此致 敬礼 申请人:xxx 20xx年x年x日 理科转文科申请书2 尊敬的校领导、老师: 我是原高一(×)班学生××。转眼间我已在××中学习生活1年了。在高一一年学习中,老师们工作兢兢业业,学校也开展了丰富多彩的活动,我取得了良好的学习成绩并且个人素质

也得到了提升。 升入高二后,我们面临着选择学科的问题。当初选则学科时,我考虑并不充分。单纯的想到我在初中80中和高中八十中都在理科实验班学习,并且现班中大部分同学都选择理科,我又有一些从众心理,所以错误得选择了理科。在暑假期间有许多前辈向我传授经验,我也查阅了大量资料。仔细考虑后我认为我选择文科有以下几个原因: 1.我一直对政治、经济、心理抱有浓厚的兴趣,经常阅读《环球时报》,《读者》,《环球》等报纸杂志,并且经常收看各类新闻节目。相比理科的物理、化学,我对文科的政治、历史、地理更为感兴趣。我对文科的兴趣高于理科。 2.××中的文科教学质量过硬。我对××中的文科教学有信心。 综合以上原因及一些其他因素,我认为我更适合在文科班继续我的学习。固向老师提出申请,恳请老师准许我由文科班转向理科班,使我有机会走一条更适合我的道路,以后得到更大的发展。 申请学生: 20xx年x年x日 理科转文科申请书3 尊敬的学校领导: xxx同学系本校高二(6)班的学生,根据目前其学习中

文科生可以申请德国大学的哪些专业

文科生可以申请德国大学的哪些专业 文科生可以申请德国大学的哪些专业? 海德堡大学 成立于1386年的海德堡大学是德国最古老的大学,也是德意志 神圣罗马帝国继布拉格和维也纳之后开设的第三所大学。十六世纪 的下半叶,海德堡大学就成为欧洲科学文化的中心。截至2013年, 海德堡大学共有29,000名在校生,超过5000人的教学和科研队伍,其中大约420人为教授。 海德堡大学不少科系享誉世界,是中欧地区第三所大学,历史仅次于捷克的布拉格大学和奥地利的维也纳大学。其中法学专业被誉 为海德堡大学的优势专业。既然题主心仪海德堡,那么如果对法学 有兴趣,不妨去海德堡大学修法学专业。 开放的文科专业: 法学系:有法学、外国和国际私法与经济法、法学史、社会经济法、刑事法、财政税和德国与欧洲管理法等专业。 哲学历史系:有哲学、历史、东欧历史、政治学、艺术史学等专业。 东方学与古代学系:有东方语言、埃及学、汉学、日本学、古典语文学、早期史学、古代史和考古学等专业。 新哲学系:有日耳曼学、英国语言文学、罗马学、中世纪和新时代拉丁哲学、斯拉夫语、语言学、口译和笔译、作为外语的德语等 等专业。 经济学系:有国际经济和社会统计比较学、社会学史和经济史等专业。 慕尼黑大学

德国排名第一的大学 路德维希-马克西米利安-慕尼黑大学(Ludwig-Maximilians-Universit?tMünchen),简称LMU或慕尼黑大学。由路德维希公爵于1472年所创建,是德国和欧洲最杰出、文化气息最浓重的大学之一,也是德国精英大学和欧洲研究型大学联盟的成员。 慕尼黑大学是国际领先的研究型大学,以34名诺贝尔奖得主在 全球院校诺奖排名中排13名,毕业生中不乏马克斯·普朗克、沃纳·海森堡,康拉德·阿登纳等科学家及政治家。慕尼黑大学在 2014/15年THE全球院校排名中排第29位,QS排名自然科学领域排 第21位、生命科学与医学第35位,艺术与人文科学第90位。在2014年世界大学学术排名(ARWU)中与海德堡大学并列德国最杰出大学,其中在自然学科排名39位、物理排名19位。

选择文科的理由

选择文科的理由 以下是本人整理的读文科的理由,正在徘徊的学生可以看看! 1.随着我国改革开放步伐的加快,我国经济快速发展.加之我国已经进入WTO, 目前的形势是:缺乏经济类人才.因此经济学在今后一段时间内将是很热门的!谁 说读文科就不好了!? 2.高考中政治、历史的专业:法律、审计、新闻、中文、哲学、国际金融等 高考中地理的专业:地质、地球物理、考古等 文科十大热门专业: (1)会计电算化。授予经济学学士。 主干课程;经济学、会计学、市场营销、计算机技术、金融学等。 招生院校:财经类、综合类、师范类院校。 (2)金融学。授予经济学学士。 主干课程:政治经济学、西方经济学、财政学、证券投资学、保险学、银行业务、投资银行理论等。 招生院校:财经类、综合类院校。 (3)对外汉语。授予文学学士。 主干课程:汉语言文学、外国语言文学、写作、翻译、古代(现代)汉语、西方文化礼仪等。 招生院校:外语类、师范类院校。 (4)外语语言文学(外语)。授予文学学士。 主要语种:英语、德语、法语、日语、意大利语、西班牙语及小语种。 招生院校:外语类、师范类、综合类院校。 (5)广播电视新闻学。授予文学学士。 主干课程:广电概论、广电技术基础、新闻采访写作、编辑制作、摄影摄象等。招生院校:各传媒院校、师范类、综合类院校。 (6)艺术设计。授予艺术学学士, 主干课程:素描、色彩图案、专业技法、专业设计、艺术设计理论等, 招生院校:艺术类、师范类、综合类院校。 (7)学前教育。授予教育学学士 主干课程:教育学、心理学、声乐、美术、幼儿玩具制作等。 招生院校:师范类。 (8)市场营销。授予管理学学士。 主干课程:管理学、统计学、会计学、财务管理、市场营销、经济法、国际市场营销、市场调查等。 招生院校:财经类、综合类院校。 (9)汉语言文学。授予文学学士 主干课程:语言学概论、汉语史、古代文学、现代文学等。 招生院校;师范类、综合类院校。 (10)国际经济与贸易。授予经济学学士。

参加高考申请书(完整版)

参加高考申请书 参加高考申请书 第一篇: 参加申请书 参加申请书 申请人: ______无线电元件二厂,地址: ______市______路______号。法定代表人: 李______,厂长。 委托代理人: 刘______,男,____岁,______无线电元件二厂工程师,住本厂宿舍楼___号。 申请目的: 参加诉讼。 申请理由: 在______百货公司诉______无线电厂购销合同质量纠纷一案中,______百货公司声称因______无线电厂所产______牌收机录质量问题,造成了商品积压,影响了资金周转,要求赔偿损失。______无线电厂则声言我厂所产元件质量“不过关”造成的。 我厂是50年代组建的老厂家,所产______电子元件一直符合国家规定标准。______无线电厂所购该批电子原件,经______省质量检验中心检验,完全合格(见该批电子原件的质量鉴定书)。

我们认为,______无线电厂所产该批______牌收录机的质量问题,不是我厂______电子原件的问题,而是电子线路或其他技术方面的问题。此案既然涉及到我厂,其审理结果可能与我们有利害关系。为了不使我厂的合法权益遭受损失,根据《民事诉讼法》第五十六条第二款的规定,特申请参加本案的诉讼,望予批准。 此致 ______人民法院 第二篇: 写给即将参加高考的你 写给即将参加高考的你 写给即将参加高考的你。特别是,艺考生们! 看看时间,已经是201X年的5月7日的凌晨。我突然意识到:再过一个月,又将是一年一度的高考了!我心猛抽了一下,因为在若干年前的这个时候,我在家乡的某一所复读学校破旧的寝室里,正辗转难眠。 那个时候的我和你们一样,心怀梦想,却为自己能否顺利通过文化课而担忧。我几乎无法用语言来表达我当时内心的惶恐和压力,因为那时候我虽拿到了中戏的专业考试合格证,但那也只是仅有的一张,而那时候我已经21岁了!我知道,上天不会再给我一次机会的。 我的经历与现在电脑面前的你们不一样,你们大部分是应届生,顶多也只是复读一年或是两年。而我是从部队退役之后重新走入了复读学校,况且是在周围人群中除了我母亲以外所有人都怀疑、笑话、嘲笑我的举措之下的环境中做出的举动。记得退役之后的第一次月考,总分750分的试卷我只考了150多分,而那已经是当年的11月