intraprediction,HEVC里面的帧内编码详解

Intra Coding of the HEVC Standard

Jani Lainema,Frank Bossen,Member,IEEE,Woo-Jin Han,Member,IEEE,Junghye Min,and Kemal Ugur

Abstract—This paper provides an overview of the intra coding techniques in the High Ef?ciency Video Coding(HEVC)standard being developed by the Joint Collaborative Team on Video Coding (JCT-VC).The intra coding framework of HEVC follows that of traditional hybrid codecs and is built on spatial sample prediction followed by transform coding and postprocessing steps.Novel features contributing to the increased compression ef?ciency include a quadtree-based variable block size coding structure, block-size agnostic angular and planar prediction,adaptive pre-and post?ltering,and prediction direction-based transform coef?cient scanning.This paper discusses the design principles applied during the development of the new intra coding methods and analyzes the compression performance of the individual tools. Computational complexity of the introduced intra prediction algorithms is analyzed both by deriving operational cycle counts and benchmarking an optimized https://www.360docs.net/doc/871184343.html,ing objective metrics,the bitrate reduction provided by the HEVC intra coding over the H.264/advanced video coding reference is reported to be 22%on average and up to36%.Signi?cant subjective picture quality improvements are also reported when comparing the resulting pictures at?xed bitrate.

Index Terms—High Ef?ciency Video Coding(HEVC),image coding,intra prediction,Joint Collaborative Team on Video Coding(JCT-VC),video coding.

I.Introduction

T HE TWO prominent international organizations specify-ing video coding standards,namely ITU-T Video Coding Experts Group(VCEG)and ISO/IEC Moving Picture Experts Group(MPEG),formed the Joint Collaborative Team on Video Coding(JCT-VC)in April2010.Since then,JCT-VC has been working toward de?ning a next-generation video coding standard called High Ef?ciency Video Coding(HEVC).There are two major goals for this standard.First,it is targeted to achieve signi?cant improvements in coding ef?ciency com-pared to H.264/Advanced Video Coding(A VC)[1],especially when operating on high-resolution video content.Second,the standard should have low enough complexity to enable high-resolution high-quality video applications in various use cases, Manuscript received April13,2012;revised July18,2012;accepted August 21,2012.Date of publication October2,2012;date of current version January 8,2013.This work was supported by Gachon University under the Research Fund of2012GCU-2011-R288.This paper was recommended by Associate Editor O.C.Au.(Corresponding author:W.-J.Han.)

https://www.360docs.net/doc/871184343.html,inema and K.Ugur are with the Nokia Research Center,Tampere 33720,Finland(e-mail:https://www.360docs.net/doc/871184343.html,inema@https://www.360docs.net/doc/871184343.html,;kemal.ugur@https://www.360docs.net/doc/871184343.html,).

F.Bossen is with DOCOMO Innovations,Inc.,Palo Alto,CA94304USA (e-mail:bossen@https://www.360docs.net/doc/871184343.html,).

W.-J.Han is with Gachon University,Seongnam461-701,Korea(e-mail: hurumi@https://www.360docs.net/doc/871184343.html,).

J.Min is with Samsung Electronics,Suwon442-742,Korea(e-mail: jh643.min@https://www.360docs.net/doc/871184343.html,).

Color versions of one or more of the?gures in this paper are available online at https://www.360docs.net/doc/871184343.html,.

Digital Object Identi?er10.1109/TCSVT.2012.2221525including operation in mobile environments with tablets and mobile phones.

This paper analyzes different aspects of the HEVC intra coding process and discusses the motivation leading to the selected design.The analysis includes assessing coding ef?ciency of the individual tools contributing to the overall performance as well as studying complexity of the introduced new algorithms in detail.This paper also describes the methods HEVC utilizes to indicate the selected intra coding modes and further explains how the tools are integrated with the HEVC coding block architecture.

Intra coding in HEVC can be considered as an extension of H.264/A VC,as both approaches are based on spatial sample prediction followed by transform coding.The basic elements in the HEVC intra coding design include:

1)quadtree-based coding structure following the HEVC

block coding architecture;

2)angular prediction with33prediction directions;

3)planar prediction to generate smooth sample surfaces;

4)adaptive smoothing of the reference samples;

5)?ltering of the prediction block boundary samples;

6)prediction mode-dependent residual transform and coef-

?cient scanning;

7)intra mode coding based on contextual information.

In addition,the HEVC intra coding process shares several processing steps with HEVC inter coding.This processing, including,e.g.,transformation,quantization,entropy coding, reduction of blocking effects,and applying sample adaptive offsets(SAO),is outside of the scope of this paper.

This paper is organized as follows.Section II explains the motivation leading to the selected design for HEVC intra coding.It also describes the overall HEVC coding architecture and the new intra prediction tools introduced in the HEVC standard.Section III presents the intra mode coding and the residual coding approaches of the standard.Section IV provides complexity analysis of the introduced intra prediction processes with detailed information about the contribution of different intra prediction modes.Section V summarizes the compression ef?ciency gains provided by the proposed design and Section VI concludes this paper.

II.HEVC Intra Coding Architecture

In H.264/A VC,intra coding is based on spatial extrapolation of samples from previously decoded image blocks,followed by discrete cosine transform(DCT)-based transform coding [2].HEVC utilizes the same principle,but further extends it to be able to ef?ciently represent wider range of textural and

1051-8215/$31.00c 2012IEEE

structural information in images.The following aspects were considered during the course of HEVC project leading to the selected intra coding design.

1)Range of supported coding block sizes:H.264/A VC

supports intra coded blocks up to the size of16×16 pixels.This represents a very small area in a high-de?nition picture and is not large enough to ef?ciently represent certain textures.

2)Prediction of directional structures:H.264/A VC sup-

ports up to eight directional intra prediction modes for

a given block.This number is not adequate to predict

accurately directional structures present in typical video and image content,especially when larger block sizes are used.

3)Prediction of homogeneous regions:The plane mode of

H.264/A VC was designed to code homogeneous image

regions.However,this mode does not guarantee con-tinuity at block boundaries,which can create visible artifacts.Thus,a mode that guarantees continuous pre-diction sample surfaces would be desired.

4)Consistency across block sizes:H.264/A VC uses differ-

ent methods to predict a block depending on the size of the block and the color component the block represents.

A more consistent design is preferred,especially as

HEVC supports a large variety of block sizes.

5)Transforms for intra coding:H.264/A VC utilizes a?xed

DCT transform for a given block size.This design does not take into consideration different statistics of the pre-diction error along the horizontal and vertical directions depending on the directionality of the prediction.

6)Intra mode coding:Due to the substantially increased

number of intra modes,more ef?cient coding techniques are required for mode coding.

The coding structure utilized for intra coding in HEVC follows closely the overall architecture of the codec.Images are split into segments called coding units(CU),predic-tion units(PU),and transform units(TU).CU represent quadtree split regions that are used to separate the intra and inter coded blocks.Inside a CU,multiple nonoverlapping PU can be de?ned,each of which speci?es a region with individual prediction parameters.CU are further split into a quadtree of transform units,each TU having a possibility of applying residual coding with a transform of the size of the TU.

HEVC contains several elements improving the ef?ciency of intra prediction over earlier solutions.The introduced methods can model accurately different directional structures as well as smooth regions with gradually changing sample values. There is also emphasis on avoiding introduction of arti?cial edges with potential blocking effects.This is achieved by adaptive smoothing of the reference samples and smoothing the generated prediction boundary samples for DC and directly horizontal and vertical modes.

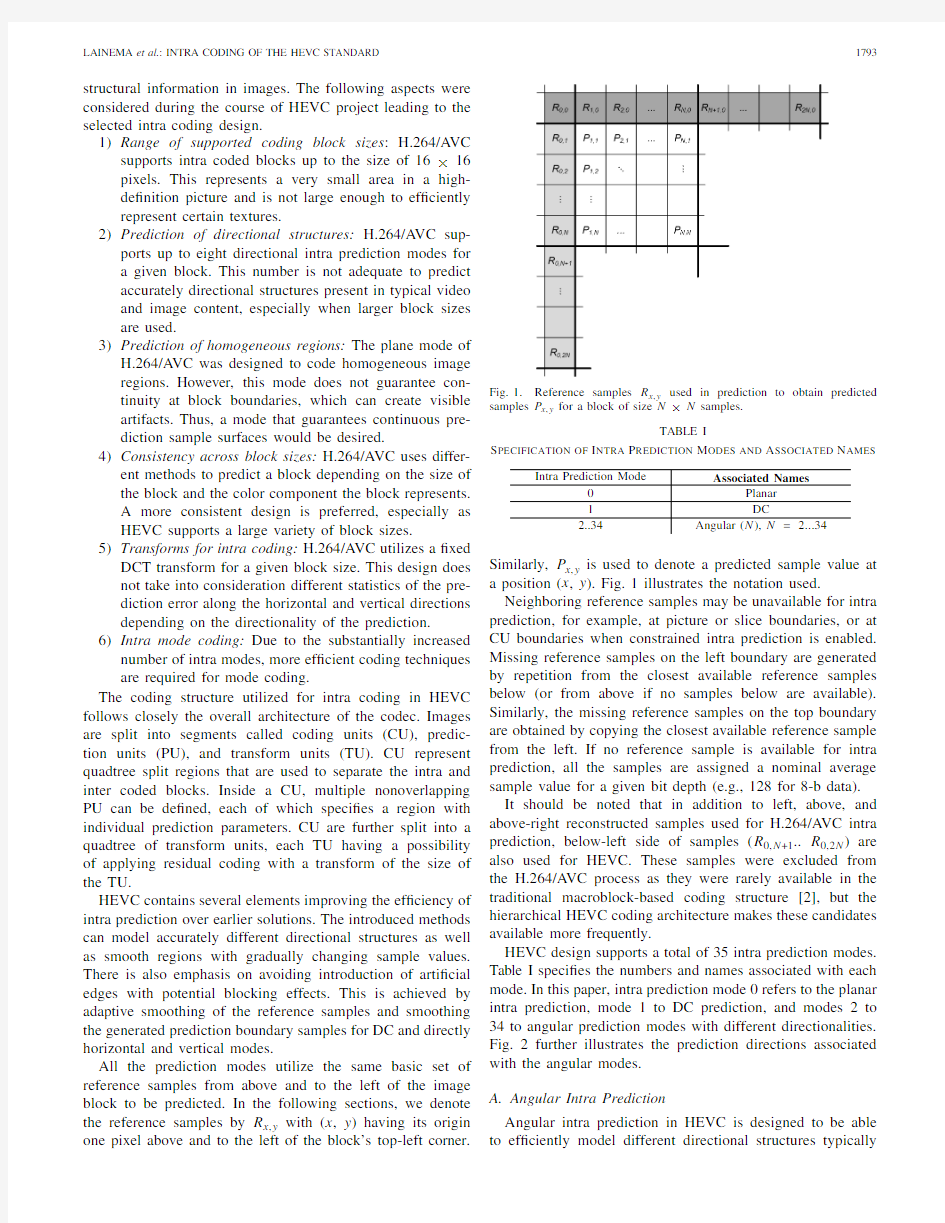

All the prediction modes utilize the same basic set of reference samples from above and to the left of the image block to be predicted.In the following sections,we denote the reference samples by R x,y with(x,y)having its origin one pixel above and to the left of the block’s top-left

corner.Fig.1.Reference samples R x,y used in prediction to obtain predicted samples P x,y for a block of size N×N samples.

TABLE I

Specification of Intra Prediction Modes and Associated Names Intra Prediction Mode Associated Names

0Planar

1DC

2..34Angular(N),N=2 (34)

Similarly,P x,y is used to denote a predicted sample value at a position(x,y).Fig.1illustrates the notation used. Neighboring reference samples may be unavailable for intra prediction,for example,at picture or slice boundaries,or at CU boundaries when constrained intra prediction is enabled. Missing reference samples on the left boundary are generated by repetition from the closest available reference samples below(or from above if no samples below are available). Similarly,the missing reference samples on the top boundary are obtained by copying the closest available reference sample from the left.If no reference sample is available for intra prediction,all the samples are assigned a nominal average sample value for a given bit depth(e.g.,128for8-b data). It should be noted that in addition to left,above,and above-right reconstructed samples used for H.264/A VC intra prediction,below-left side of samples(R0,N+1..R0,2N)are also used for HEVC.These samples were excluded from the H.264/A VC process as they were rarely available in the traditional macroblock-based coding structure[2],but the hierarchical HEVC coding architecture makes these candidates available more frequently.

HEVC design supports a total of35intra prediction modes. Table I speci?es the numbers and names associated with each mode.In this paper,intra prediction mode0refers to the planar intra prediction,mode1to DC prediction,and modes2to 34to angular prediction modes with different directionalities. Fig.2further illustrates the prediction directions associated with the angular modes.

A.Angular Intra Prediction

Angular intra prediction in HEVC is designed to be able to ef?ciently model different directional structures typically

Fig.2.HEVC angular intra prediction modes numbered from2to34and the associated displacement parameters.H and V are used to indicate the horizontal and vertical directionalities,respectively,while the numeric part of the identi?er refers to the pixels’displacement as1/32pixel fractions. present in video and image contents.The number and angu-larity of prediction directions are selected to provide a good tradeoff between encoding complexity and coding ef?ciency for typical video material.The sample prediction process itself is designed to have low computational requirements and to be consistent across block sizes and prediction directions.The latter aims at minimizing the silicon area in hardware and the amount of code in software implementations.It is also intended to make it easier to optimize the implementation for high performance and throughput in various environments. This is especially important as the number of block sizes and prediction directions supported by HEVC intra coding far exceeds those of previous video codecs,such as H.264/A VC. In HEVC,there are four effective intra prediction block sizes ranging from4×4to32×32samples,each of which supports 33distinct prediction directions.A decoder must thus support 132combinations of block size and prediction direction. The following sections discuss in further detail different aspects contributing to the coding performance and implemen-tation complexity of the HEVC angular intra prediction.

1)Angle De?nitions:In natural imagery,horizontal and vertical patterns typically occur more frequently than patterns with other directionalities.The set of33prediction angles is de?ned to optimize the prediction accuracy based on this observation[7].Eight angles are de?ned for each octant with associated displacement parameters,as shown in Fig.2. Small displacement parameters for modes close to horizon-tal and vertical directions provide more accurate prediction for nearly horizontal and vertical patterns.The displacement parameter differences become larger when getting closer to diagonal directions to reduce the density of prediction modes for less frequently occurring

patterns.Fig.3.Example of projecting left reference samples to extend the top reference row.The bold arrow represents the prediction direction and the thin arrows the reference sample projections in the case of intra mode23 (vertical prediction with a displacement of?9/32pixels per row).

2)Reference Pixel Handling:The intra sample prediction process in HEVC is performed by extrapolating sample values from the reconstructed reference samples utilizing a given directionality.In order to simplify the process,all sample locations within one prediction block are projected to a single reference row or column depending on the directionality of the selected prediction mode(utilizing the left reference column for angular modes2to17and the above reference row for angular modes18to34).

In some cases,the projected pixel locations would have negative indexes.In these cases,the reference row or column is extended by projecting the left reference column to extend the top reference row toward left,or projecting the top reference row to extend the left reference column upward in the case of vertical and horizontal predictions,respectively.This approach was found to have a negligible effect on compression performance,and has lower complexity than an alternative approach of utilizing both top and left references selectively during the prediction sample generation process[5].Fig.3 depicts the process for extending the top reference row with samples from the left reference columns for an8×8block of pixels.

3)Sample Prediction for Arbitrary Number of Directions: Each predicted sample P x,y is obtained by projecting its location to a reference row of pixels applying the selected prediction direction and interpolating a value for the sample at1/32pixel accuracy.Interpolation is performed linearly utilizing the two closest reference samples

P x,y=

32?w y

·R i,0+w y·R i+1,0+16

>>5(1)

where w y is the weighting between the two reference samples corresponding to the projected subpixel location in between R i,0and R i+1,0,and>>denotes a bit shift operation to the right.Reference sample index i and weighting parameter w y are calculated based on the projection displacement d associated with the selected prediction direction(describing the tangent of the prediction direction in units of1/32samples

and having a value from ?32to +32as shown in Fig.2)as

c y =(y ·

d )>>5w y =(y ·d )&31i =x +c y

(2)

where &denotes a bitwise AND operation.It should be noted that parameters c y and w y depend only on the coordinate y and the selected prediction displacement d .Thus,both parameters remain constant when calculating predictions for one line of samples and only (1)needs to be evaluated per sample basis.When the projection points to integer samples (i.e.,when w y equals zero),the process is even simpler and consists of only copying integer reference samples from the reference row.Equations (1)and (2)de?ne how the predicted sample values are obtained in the case of vertical prediction (modes 18to 34)when the reference row above the block is used to derive the prediction.Prediction from the left reference column (modes 2to 17)is derived identically by swapping the x and y coordinates in (1)and (2).B.Planar Prediction

While providing good prediction in the presence of edges is important,not all image content ?ts an edge model.The DC prediction provides an alternative but is only a coarse approximation as the prediction is of order 0.H.264/A VC features an order-1plane prediction mode that derives a bilinear model for a block using the reference samples and generates the prediction using this model.One disadvantage of this method is that it may introduce discontinuities along the block boundaries.The planar prediction mode de?ned in HEVC aims to replicate the bene?ts of the plane mode while preserving continuities along block boundaries.It is essentially de?ned as an average of two linear predictions (see [8,Fig.2]for a graphical representation)

P V x,y =(N ?y )·R x,0+y ·R 0,N +1P H x,y =(N ?x )·R 0,y +x ·R N +1,0P x,y = P V

x,y +P H

x,y +N >> log 2(N )+1 .

(3)

C.Reference Sample Smoothing

H.264/A VC applies a three-tap smoothing ?lter to the ref-erence samples when predicting 8×8luma blocks.HEVC uses the same smoothing ?lter ([121]/4)for blocks of size 8×8and larger.The ?ltering operation is applied for each reference sample using neighboring reference samples.The ?rst reference sample R 0,2N and R 2N,0are not ?ltered.For 32×32blocks,all angular modes except horizontal and vertical use a ?ltered reference.In 16×16blocks,the modes not using a ?ltered reference are extended to the four modes (9,11,25,27)closest to horizontal and vertical.Smoothing is also applied where the planar mode is used,for block sizes 8×8and larger.However,HEVC is more discerning in the use of this smoothing ?lter for smaller blocks.For 8×8blocks,only the diagonal modes (2,18,34)use a ?ltered reference.Applying the reference sample smoothing selectively based on the block size and directionality of the prediction is reported to reduce contouring artifacts caused by edges in the reference sample arrays [23].

D.Boundary Smoothing

As noted above in the case of the H.264/A VC plane predic-tion mode,DC and angular prediction modes may introduce discontinuities along block boundaries.To remedy this prob-lem,the ?rst prediction row and column are ?ltered in the case of DC prediction with a two-tap ?nite impulse response ?lter (corner sample with a three-tap ?lter).Similarly,the ?rst prediction column for directly vertical prediction and the ?rst prediction row for directly horizontal prediction are ?ltered utilizing a gradient-based smoothing [9].For a more complete description of the ?ltering process,please refer to [25].

As the prediction for chroma components tends to be very smooth,the bene?ts of the boundary smoothing would be limited.Thus,in order to avoid extra processing with marginal quality improvements,the prediction boundary smoothing is only applied to luma component.The average coding ef?-ciency improvement provided by the boundary smoothing is measured to be 0.4%in HM 6.0environment [14].E.I

?

PCM Mode and Transform Skipping Mode

HEVC supports two special coding modes for intra coding denoted as I ?PCM and transform skipping mode.In I ?PCM mode,prediction,transform,quantization,and entropy coding are bypassed while the prediction samples are coded by a prede?ned number of bits.The main purpose of the I ?PCM mode is to handle the situation when the signal cannot be ef?ciently coded by other modes.

On the contrary,only transform is bypassed in transform skipping mode.It was adopted to improve the coding ef?-ciency for speci?c video contents such as computer-generated graphics.HEVC restricts the use of this mode when the block size is equal to 4×4to avoid the signi?cant design change due to this mode while this choice was proven to have most of coding ef?ciency bene?ts.F .Restrictions for Partitioning Types

HEVC intra coding supports two types of PU division,PART ?2N ×2N and PART ?N ×N ,splitting a CU into one or four equal-size PUs,respectively.However,the four regions speci?ed by the partitioning type PART ?N ×N can be also represented by four smaller CU with the partitioning type PART ?2N ×2N .Due to this,HEVC allows an intra CU to be split into four PU only at the minimum CU size.It has been demonstrated that this restriction is associated with minimal impact in coding ef?ciency,but it reduces the encoding complexity signi?cantly [3].

Another way to achieve the same purpose would be reducing the CU size to 4×4with the partitioning mode PART ?2N ×2N .However,this approach would result in a chroma intra prediction block size of 2×2pixels,which can be critical to the throughput of the entire intra prediction process.For this reason,the minimum allowed CU size is restricted to 8×8pixels while allowing the partitioning type PART ?N ×N only in the smallest CU.G.TU-Based Prediction

When a CU is split into multiple TU,the intra prediction is applied for each TU sequentially instead of applying the

intra prediction at the PU level.One obvious advantage for this approach is the possibility of always utilizing the nearest neighboring reference samples from the already reconstructed TU.It has been shown that this property improves the intra picture coding ef?ciency by about1.2%[4]compared to the case of using PU border reference samples for the PU.It should be noted that all the intra prediction information is indicated per PU and the TUs inside the same PU share the same intra prediction mode.

III.Mode and Residual Coding

A.Mode Coding for Luma

HEVC supports total33angular prediction modes as well as planar and DC prediction for luma intra prediction for all the PU sizes.Due to the large number of intra prediction modes, H.264/A VC-like mode coding approach based on a single most probable mode was not found effective in HEVC[8].Instead, HEVC de?nes three most probable modes for each PU based on the modes of the neighboring PUs.The selected number of most probable modes makes it also possible to indicate one of the32remaining modes by a?xed length code,as the distribution of the mode probabilities outside of the set of most probable modes is found to be relatively uniform.

The selection of the set of three most probable modes is based on modes of two neighboring PUs,one above and one to the left of the current PU.By default,modes of both candidate PUs are included in the set.Possible duplicates and the third mode in the set are assigned with modes planar,DC,or angular (26)in this order.In the case that both top and left PU has the same directional mode,that mode and two closest directional modes are used to construct the set of most probable modes instead.

In the case current intra prediction mode is equal to one of the elements in the set of most probable modes,only the index in the set is transmitted to the decoder.Otherwise,a5-b?xed length code is used to determine the selected mode outside of the set of most probable modes.

B.Derived Mode for Chroma Intra Prediction

Quite often structures in the chroma signal follow those of the luma.Taking advantage of this behavior,HEVC introduces a mechanism to indicate the cases when chroma PU utilizes the same prediction mode as the corresponding luma PU.Table II speci?es the mode arrangement used in signaling the chroma mode.

In the case that derived mode is indicated for a PU,the prediction is performed by using the corresponding luma PU mode.In order to remove the possible redundancy in the signaling arising when derived refers to one of the modes always present,angular(34)mode is used to substitute the duplicate mode as shown in Table II.

C.Syntax Design for the Intra Mode Coding

HEVC uses three syntax elements to represent the luma intra prediction mode.The syntax element prev?intra?luma?pred??ag speci?es whether the luma intra

TABLE II

Specification of Chroma Intra Prediction

Modes and Associated Names

Chroma intra Alternative mode,if

prediction Primary mode primary mode is equal to

mode the derived mode

0Planar Angular(34)

1Angular(26)Angular(34)

2Angular(10)Angular(34)

3DC Angular(34)

4Derived N/A prediction mode is matched with one of three most probable modes.When the?ag is equal to1,the syntax element mpm?idx is parsed,indicating which element of the most probable mode array speci?es the luma intra prediction mode.When the?ag is equal to0,the syntax element rem?intra?luma?pred?mode is parsed,specifying the luma intra prediction mode as rem?intra?luma?pred?mode+N, where N is the number of elements in the most probable mode array having a mode index greater than or equal to the syntax element rem?intra?luma?pred?mode.

In chroma mode coding,a1-b syntax element(0)is assigned to the most often occurring derived mode,while3-b syntax elements(100,101,110,111)are assigned to the remaining four modes.

D.Intra Mode Residual Coding

HEVC utilizes intra mode dependent transforms and co-ef?cient scanning for coding the residual information.The following sections describe the selected approaches in more detail.

1)Block Size-Dependent Transform Selection:Integer trans-forms derived from DCT and discrete sine transform(DST)are applied to the intra residual blocks.A DST-based transform is selected for4×4luma blocks and DCT-based transforms for the rest.For more details on the speci?cation of the transforms, the reader is referred to[10]and[11].

Different approaches to select the transforms were studied during the course of the HEVC development.For example, an early HEVC draft utilized a method that selected the horizontal and vertical transforms separately for4×4luma blocks based on the intra prediction mode.This approach provided an average coding ef?ciency improvement of0.8% [10].However,it was found that the simple block size de-pended approach now adopted in the standard provides very similar coding ef?ciency[26].Also larger size alternative transforms were studied,but it was reported that the additional coding ef?ciency improvements were marginal compared to the complexity impact[27].Thus,only DCT-based transforms are utilized for block sizes larger than4×4.

2)Intra Prediction Mode-Dependent Coef?cient Scanning: HEVC applies adaptive scanning of transform coef?cients to the transform block sizes of4×4and8×8in order to take advantage of the statistical distribution of the active coef?cients in2-D transform blocks.The scan is selected based on directionality of the intra prediction mode as shown

TABLE III

Mapping Table Between Intra Prediction Modes and Coefficient Scanning Order

Intra Coef?cient Coef?cient

prediction mode

scanning for scanning for

4×4and8×816×16and32×32

Angular(6–14)Vertical Diagonal

Angular(22–30)Horizontal Diagonal

All other modes Diagonal Diagonal

in Table III.Vertical and horizontal scans refer to the cor-

responding raster scan orders,while the diagonal scan refers

to a diagonal scan from down-left to up-right direction.The

average coding gain from the intra mode dependent scans is

reportedly around1.2%[12].

https://www.360docs.net/doc/871184343.html,plexity of Intra Prediction Tools

https://www.360docs.net/doc/871184343.html,plexity Analysis of Decoding Process

1)Analysis of Operational Cycle Counts:De?ning a proper

metric for theoretical analysis of complexity can be dif?cult

as various aspects come into play.For example,if relying on

counting operations,de?ning what constitutes one operation

largely depends on the underlying architecture.The bit width

of each data element being processed also plays an important

role in determining a number of gates in hardware and

the amount of parallelism achievable in software.How an

algorithm is implemented can also have a signi?cant impact.

A lower number of operations is not always better.Executing

four operations in parallel may be faster and considered less

complex than executing three in sequence.In this section,we

thus limit ourselves to a more super?cial comparison of HEVC

prediction modes with the H.264/A VC ones.

The DC,directly horizontal,and directly vertical predic-

tion modes are the ones most similar to those de?ned in

H.264/A VC.HEVC simply de?nes an additional post?ltering

operation where one row and/or column of samples is?ltered.

The overhead of such?ltering becomes less with larger block

sizes,as the fraction of?ltered samples becomes smaller.

For predicting a block of size N×N,angular prediction

requires the computation of p=(u·a+v·b+16)>>5for

each sample,which involves two multiplications(8-b unsigned

operands,16-b result),two16-b additions,and one16-b shift

per predicted sample,for a total of?ve operations.In the

H.264/A VC case,directional prediction may take the form

p=(a+2b+c+2)>>2,which may be considered to be ?ve operations as well.However,on some architectures,this

can be implemented using two8-b halving add operations:

d=(a+c)>>1and p=(d+b+1)>> 1.The H.264/A VC directional prediction process is thus less complex as it requires no multiplication and no intermediate value wider than9b.

In the case of the plane and planar modes,considering

the generating equations is probably not adequate,as the

prediction values can be ef?ciently computed incrementally.

For the H.264/A VC plane mode,it is expected that one16-b

addition,one16-b shift,and one clip to8-b range are required

TABLE IV

Decoding Time Comparison Between HM6.0Decoder and Its Optimized Decoder Used for Further Analysis

Setting

Bitrate HM6.0Optimized

(Mbit/s)(s)(s) BasketballDrive,QP=2271.1104.4721.97

BasketballDrive,QP=378.461.60 6.98

BQTerrace,QP=22179.2163.9441.42

BQTerrace,QP=3721.691.0012.03

Cactus,QP=22105.7120.4628.84

Cactus,QP=3714.269.598.78

Kimono,QP=2222.337.117.02

Kimono,QP=37 3.826.94 2.88

ParkScene,QP=2252.760.5314.02

ParkScene,QP=377.333.90 4.35

Geometric mean25.466.3410.91

Sequences are each10-s long and are coded in intra-only mode.Optimized decoder is about four to nine times faster than HM.

per sample.For the HEVC planar mode,three16-b additions and one16-b shift are expected per sample.

The partial analysis provided here is indicative.It suggests that the HEVC intra prediction modes are more complex than the A VC modes,but not necessarily by a large amount.In the following,focus is shifted to complexity investigation based on actual implementations.

2)Decoding Time of Intra Prediction in Relation to Overall Decoding Time:In a?rst experiment,the percentage of de-coding time spent on intra prediction is measured.Reference encodings for the main pro?le of HM6.0[14]are used in the all-intra con?guration[15].The experiment is focused on high-de?nition sequences from the JCT-VC class B test set(?ve 1920×1080sequences).MD5checksum computation and ?le output of the reconstructed pictures are disabled such that decoding times more accurately re?ect time spent on actually decoding pictures.Measurements are obtained on a laptop featuring an Intel Core i5processor clocked at 2.53GHz. Software was compiled using gcc4.6.3.In addition to the HM software,an optimized decoder,based on[16],is also considered.Table IV summarizes decoding times and gives an indication of the level of optimization.For the comparative results between HEVC HM decoder and H.264/A VC JM decoder,please refer to[24],which reported that they are comparable.The fraction of decoding time used for intra prediction is further summarized in Table V.

While the results obtained with HM and optimized software show some differences,they do not diverge signi?cantly:on average roughly12%–15%of decoding time is consumed by intra prediction.The results may however be somewhat surprising in the sense that generating the reference arrays used for prediction takes more time than generating the prediction from those reference arrays.Part of this observation may be caused by the fact that in both of the tested software implemen-tations,full reference arrays are constructed,including2N+1 samples for a row above and2N+1samples for a left column. Most prediction modes do not require all these samples,and constructing only the required ones may reduce the amount of time by up to50%.

TABLE V

Decoding Time Fraction for Intra Prediction

Setting Reference Prediction Total (%)(%)(%)

BasketballDrive,QP=2211.0/5.7 5.0/3.815.0/9.5 BasketballDrive,QP=379.9/8.0 5.7/9.415.6/17.4 BQTerrace,QP=2210.2/4.6 4.2/2.514.4/7.1 BQTerrace,QP=3711.6/8.3 6.3/7.117.9/15.4 Cactus,QP=2210.6/5.4 4.5/3.215.1/8.6 Cactus,QP=3711.1/8.8 5.5/8.016.6/16.8 Kimono,QP=228.3/4.5 4.3/4.912.6/9.4 Kimono,QP=378.0/6.6 5.0/8.813.0/15.4 ParkScene,QP=2210.1/5.4 4.3/2.914.4/8.3 ParkScene,QP=3710.8/8.2 5.0/7.115.8/15.3 Average10.2/6.6 5.0/5.815.2/12.4 Reference represents construction of the reference samples array and Prediction represents the actual prediction process.For the HM6software, functions in the TComPattern class are considered part of reference, functions in the TComPrediction class part of prediction.In each pair of numbers,the left one represents HM6and the right one the optimized decoder

TABLE VI

Cycles Per Sample for Intra Prediction Modes(in a Specific Software Implementation Based on[16])

Luma Chroma

Mode4816324816 DC 1.500.750.440.220.280.140.08 Planar 1.130.660.650.640.560.450.50 Horizontal0.940.420.190.120.380.230.20 Vertical0.940.520.320.260.280.120.10 Angular(2–9) 1.31 1.230.820.68 1.130.730.67 Angular(11–17) 1.50 1.230.890.69 1.410.830.67 Angular(18–25) 1.50 1.140.760.56 1.310.710.63 Angular(27–34) 1.31 1.140.780.55 1.130.730.61 3)Cycles for Each Prediction Mode:Using optimized software based on[16],the decoding time associated with each prediction mode is further studied.Table VI shows numbers of computed cycles,normalized by the number of predicted samples.Numbers for chroma are reported separately for two reasons:post?ltering is not applied to chroma components (DC,horizontal,and vertical modes)and predictions for both chroma components can be generated concurrently.Note that the number of cycles may vary for different angular prediction modes.Angular modes are thus split into four categories (positive/negative angle,main direction is hor/ver).

Note that the cost of DC postsmoothing is signi?cant. For blocks of size4×4,the DC mode even is the most time-consuming prediction mode.It should be further noted that the postsmoothing is not optimized by SIMD opera-tions(at least not manually).While the number of cycles per sample decreases as the size of the blocks increase, this does not always seem to be the case.The differences between the various angular ranges are due to two factors:for modes2–9and11–17,the result is transposed,leading to a higher cycle count;and for modes11–17and18–25,samples from the second reference array are projected onto the?rst one.

TABLE VII

Results of the Tests on Different Intra Coding Tools

Test1Test2Test3Test4Test5 Class BDR BDR BDR BDR BDR

Class A 6.1% 5.7% 2.6%9.6% 1.1%

Class B 6.5%8.5% 1.5% 5.7% 1.0%

Class C 6.9% 3.7%0.3% 1.6% 1.1%

Class D 4.8% 2.5%0.3% 1.4%0.8%

Class E9.5%12.3% 1.9% 6.9% 1.6%

Class F 5.6% 4.8%0.3% 1.5% 1.2%

Maximum14.8%19.2% 4.7%16.3% 1.6% All 6.5% 6.1% 1.1% 4.4% 1.1%

B.Fast Encoding Algorithm

While an increase in the number of intra prediction modes

can provide substantial performance gains,it also makes the

rate-distortion(RD)optimization process more complex.In

this section,we introduce the new encoding algorithm utilized

by the of?cial HM6.0reference software and describe how it

is optimized for large sets of prediction candidates[17].

The fast encoding algorithm of HM software includes two

phases.In the?rst phase,the N most promising candidate

modes are selected by the rough mode decision process.In this

process,all candidates(35modes)are evaluated with respect

to the following cost function:

C=D Had+λ·R mode(4) where the D Had represents the absolute sum of Hadamard

transformed residual signal for a PU and R mode represents the

number of bits for the prediction mode.

In the second stage,the full RD costs with reconstructed

residual signal used for actual encoding process are compared

among the N best candidates.The prediction mode with the

minimum RD cost is selected as the?nal prediction mode.The

number N is varied depending on the PU size.The N is set to {8,8,3,3,3}for4×4,8×8,16×16,32×32,and64×64 PU,respectively,to allow more thorough search for the small

block sizes most critical to the joint optimization of prediction

and residual data.It should be noted that the size of TU is

assumed to be equal to the maximum possible value rather

than allowing the TU splitting in this stage for minimizing the

complexity.RD optimized TU structure is determined after the

second stage by using the best prediction mode.

Results marked as Test6in Table VIII indicate that the

design of the described fast algorithm is well optimized for

the large number of prediction modes.

V.Experimental Results

A.Test Conditions

The JCT-VC common test conditions[20]were used to

evaluate the performance of different aspects of the intra

picture coding of HEVC.These conditions de?ne a set of24

video sequences in six classes(A to F)covering a wide range

of resolutions and use cases.In addition to natural camera

captured material,the Class F sequences also include computer

screen content and computer graphics content,as well as

TABLE VIII

Results of the Tests on Fast Intra Encoding and SAO

Test6Test7

Class BDR t(enc) BDR

Class A?0.4%294%0.8%

Class B?0.3%296%0.7%

Class C?0.3%297%0.9%

Class D?0.4%296%0.6%

Class E?0.4%286%0.9%

Class F?0.4%303% 2.9%

Maximum?0.2%333% 4.3%

All?0.4%296% 1.1%

content mixing natural video and graphics.In order to assess the objective quality differences,Bj?ntegaard delta bitrates are computed using piece-wise cubic interpolation[18],[19]. The10-b Nebuta and SteamLocomotive sequences were not included in the experimental results since the HEVC Main pro?le only speci?es operation for8-b4:2:0video.

HM6.0reference software[14]with the Main pro?le set-tings were used to evaluate the HEVC intra coding perfor-mance.The four rate points required to calculate the Bj?n-tegaard delta bitrates were generated by using quantization parameters22,27,32,and37.

Performance of the H.264/A VC intra coding was investi-gated using JM18.2reference software[22]with its high-pro?le settings(RD optimizations,8×8DCT and CABAC enabled).In order to operate approximately at the same bitrates with HEVC,quantization parameters of24,29,34,and39 were used for H.264/A VC.

B.Experimental Results

2)Performance Analysis of the HEVC Intra Coding Tools: In order to analyze how different aspects contribute to the total performance of HEVC intra coding,the following experiments were carried out.

1)Test1:Restricting HM6.0intra modes to the eight

H.264/A VC directional modes,DC,and planar mode,

utilizing a1-MPM approach for mode coding.

2)Test2:Restricting the HM6.0maximum CU size to

16×16and the maximum TU size to8×8.

3)Test3:Restricting the maximum TU size to16×16.

4)Test4:Restricting the maximum TU size to8×8.

5)Test5:Substituting the HM6.03-MPM intra mode

coding with a1-MPM approach.

6)Test6:Utilizing full RD optimizations for selecting the

intra mode as described in Section IV-B.

7)Test7:Switching off SAO.

The relevant syntax changes due to the differences of the number of intra modes and MPMs were applied in Tests1 and5for the fair comparison.Tables VII and VIII summa-rize the performance for each test.Positive numbers indicate the increase in bitrate associated with restricting the codec according to the test descriptions.In addition to the average and per class results,the maximum difference for a single test sequence is also reported.According to Test1,the average impact provided by the HEVC angular prediction is6.5%

TABLE IX

Performance of HM6.0Compared With JM18.2

Class Sequence Bit-saving(%) Class A(2560×1600)Traf?c?21.9

PeopleOnStreet?22.2

Kimono?27.8

ParkScene?16.5 Class B(1920×1080)Cactus?23.6

BasketballDrive?29.0

BQTerrace?20.8

BasketballDrill?31.7

BQMall?20.3 Class C(832×480)PartyScene?12.6

RaceHorses?18.3

BasketballPass?22.6

BQSquare?13.3 Class D(416×240)BlowingBubbles?13.8

RaceHorses?19.3

FourPeople?23.3 Class E(1280×720)Johnny?35.5

KristenAndSara?28.8

BasketballDrillText?27.9 Class F(1024×768)ChinaSpeed?18.8

SlideEditing?15.7

SlideShow?27.6

Class A?22.1

Class B?23.6

Class C?20.7

Class D?17.2 Averages Class E?29.2

Class F?22.5

Maximum?35.5

All?22.3 while more differences up to14.8%are noticed for sequences with strong directional patterns such as BasketballDrive and BasketballDrill.In addition,the coding ef?ciency bene?ts are rather uniform across difference classes while Class E,which consists of the video conferencing sequences having large objects and steady background,provides more bene?ts. Allowing larger CU,PU,and TU with more?exibility in both predicting and transforming the picture content provides an average bitrate impact of6.1%according to Test2.To clarify further,Tests3and4study the effects provided by utilization of transform sizes larger than8×8while keeping the maximum CU size to64×64.Test3indicates that switch-ing of the32×32transform affects the coding ef?ciency by 1.1%on average and Test4indicates that switching off both 32×32and16×16transforms has an average impact of 4.4%.It can be observed that the coding ef?ciency bene?ts from the large size transform become more signi?cant when the spatial resolution of the video sequence is increased.Test 5shows that using one most probable mode instead of three would result in1.1%average coding ef?ciency difference. Test6illustrates the performance of the HM6.0encoding algorithm for intra coding.A full RD optimized codec would be able to achieve a?0.4%coding gain on average,but with almost three times the encoding time of HM6.0.Thus,the current HM6.0encoder design seems to provide a very inter-esting compromise between coding ef?ciency and complexity.

Fig.4.Example of visual quality improvements in 50f/s BasketballDrive 1920×1080sequence.(a)HM 6.0(8.4Mb/s).(b)A VC 10modes (8.5Mb/s).(c)JM 18.2(9.1

Mb/s).

Fig.5.Example of visual quality improvements in the ?rst frame of 60f/s KristenAndSara 1280×720sequence.Above:an extract of HM 6.0coded frame at 4.8Mb/s.Below:JM 18.2at 4.9Mb/s.

Finally,Test 7provides the results showing that the switching off SAO results in the 1.1%coding ef?ciency loss on average.2)Performance Compared to H.264/AVC Intra Coding :Table IX compares the intra picture coding performance of HEVC with that if H.264/A VC using the test conditions described above.The experiment indicates that in order to achieve similar objective quality,HEVC needs 22.3%lower bitrate on average.Although the average bit-saving of HEVC intra coding seems less than that of HEVC inter coding,which was typically reported over 30%,the bene?ts are still substantial.

The advantages over H.264/A VC intra coding tend to be larger when the video resolution becomes higher and the strong directionalities exist.Differences close to 30%were observed for seven of the sequences:Kimono,BasketballDrive,BasketballDrill,Johnny,KristenAndSara,BasketballDrillText ,and SlideShow .The highest difference of 35.5%is obtained in the case of Johnny .These sequences are characterized by strong directional textures.In addition,Kimono,Johnny ,and KristenAndSara feature also large homogenous regions.The ?exible coding structure of HEVC supporting blocks up to 64×64with accurate prediction means appears to be espe-cially effective for this kind of content.The large transform block size also plays a role in performance improvement by

providing strong energy compaction and smaller quantization errors.Especially for regions that cannot be properly predicted by angular prediction (e.g.,diverse textures),a large transform is successfully applied for residual coding.Sample-adaptive offset ?ltering also provides additional gains in intra pictures by compensating distortions between reconstructed and origi-nal signals.

3)Subjective Quality Evaluation :Comparison of visual quality provided by HM 6.0implementation of HEVC,HM 6.0restricted to 10intra prediction modes as described for Test 1above,and JM 18.2implementation of H.264/A VC is presented in Fig.4.It shows that directional patterns are much more clear and distinctive in the image produced by HM 6.0while blurred lines are observed in other cases.In the image from the JM,several lines are completely missing.Very similar effects are noticeable also in Fig.5,comparing decoded picture quality of HM 6.0with that of JM 18.2in the case of the KristenAndSara test sequence.It appears that the angular prediction with accurate prediction directions contribute positively to the reconstruction of edge structures.As can be observed from these results,the angular prediction and other intra tools adopted in HM 6.0have potential to provide signi?cant improvements in visual quality in addition to the objective performance gains.

VI.Conclusion

The intra coding methods described in this paper can provide signi?cant improvements in both objective and subjective quality of compressed video and still pictures.While demonstrating compression ef?ciency superior to previous solutions,computational requirements and different aspects affecting implementation in various environments were thoroughly considered.The proposed methods passed stringent evaluation by the JCT-VC community and now form the intra coding toolset of the HEVC standard.Potential future work in the area includes,e.g.,extending and tuning the tools for multiview/scalable coding,higher dynamic range operation,and 4:4:4sampling formats.

Acknowledgment

The authors would like to thank the experts of ITU-T VCEG,ISO/IEC MPEG,and the ITU-T/ISO/IEC Joint Col-laborative Team on Video Coding for their contributions and collaborative spirit.

References

[1]Advanced Video Coding for Generic Audiovisual Services ,ISO/IEC

14496-10,ITU-T Rec.H.264,Mar.2010.

[2]T.Wiegand,G.J.Sullivan,G.Bj?ntegaard,and A.Luthra,“Overview

of the H.264/A VC video coding standard,”IEEE Trans.Circuits Syst.Video Technol.,vol.13,no.7,pp.560–576,Jul.2003.

[3]J.Kim and B.Jeon,Encoding Complexity Reduction by Removal of

N ×N Partition Type,JCTVC-D087,Daegu,Korea,Jan.2011.

[4]T.Lee,J.Chen,and W.-J.Han,TE 12.1:Transform Unit Quadtree/2-Level Test,JCTVC-C200,Guangzhou,China,Oct.2010.

[5]T.K.Tan,M.Budagavi,and https://www.360docs.net/doc/871184343.html,inema,Summary Report for TE5on

Simpli?cation of Uni?ed Intra Prediction,JCTVC-C046,Guangzhou,China,Oct.2010.

[6]K.Ugur,K.R.Andersson,and A.Fuldseth,Video Coding Technology

Proposal by Tandberg,Nokia,and Ericsson,JCTVC-A119,Dresden, Germany,Apr.2010.

[7]J.Min,S.Lee,I.Kim,W.-J.Han,https://www.360docs.net/doc/871184343.html,inema,and K.Ugur,Uni?cation

of the Directional Intra Prediction Methods in TMuC,JCTVC-B100, Geneva,Switzerland,Jul.2010.

[8]S.Kanumuri,T.K.Tan,and F.Bossen,Enhancements to Intra Coding,

JCTVC-D235,Daegu,Korea,Jan.2011.

[9] A.Minezawa,K.Sugimoto,and S.Sekiguchi,An Improved Intra

Vertical and Horizontal Prediction,JCTVC-F172,Torino,Italy,Jul.

2011.

[10] A.Saxena and F. C.Fernandes,CE7:Mode-Dependent DCT/DST

Without4*4Full Matrix Multiplication for Intra Prediction,JCTVC-E125,Geneva,Switzerland,Mar.2011.

[11]R.Joshi,P.Chen,M.Karczewicz,A.Tanizawa,J.Yamaguchi,C.Yeo,

Y.H.Tan,H.Yang,and H.Yu,CE7:Mode Dependent Intra Residual Coding,JCTVC-E098,Geneva,Switzerland,Mar.2011.

[12]Y.Zheng,M.Coban,X.Wang,J.Sole,R.Joshi,and M.Karczewicz,

CE11:Mode Dependent Coef?cient Scanning,JCTVC-D393,Daegu, Korea,Jan.2011.

[13]V.Sze,K.Panusopone,J.Chen,T.Nguyen,and M.Coban,CE11:

Summary Report on Coef?cient Scanning and Coding,JCTVC-D240, Daegu,Korea,Jan.2011.

[14]HM 6.0Reference Software[Online].Available:http://hevc.kw.bbc.

https://www.360docs.net/doc/871184343.html,/trac/browser/tags/HM-6.0

[15]HM6.0Anchor Bitstreams[Online].Available:ftp://https://www.360docs.net/doc/871184343.html,/

hevc/hm-6.0-anchors/

[16] F.Bossen,On Software Complexity,HM 6.0Reference Software,

JCTVC-G757,Geneva,Switzerland,Nov.2011.

[17]Y.Piao,J.Min,and J.Chen,Encoder Improvement of Uni?ed Intra

Prediction,JCTVC-C207,Guangzhou,China,Oct.2010.

[18]G.Bj?ntegaard,Calculation of Average PSNR Differences Between RD-

Curves,VCEG-M33,Austin,TX,Apr.2001.

[19]G.Bj?ntegaard,Improvement of BD-PSNR Model,VCEG-AI11,Berlin,

Germany,Jul.2008.

[20] F.Bossen,Common Test Conditions,JCTVC-H1100,San Jose,CA,Mar.

2012.

[21]K.McCann,W.-J.Han,I.Kim,J.Min,E.Alshina,A.Alshin,T.Lee,J.

Chen,V.Seregin,S.Lee,Y.Hong,M.Cheon,and N.Shlyakhov,Video Coding Technology Proposal by Samsung,JCTVC-A124,Dresden,Ger-many,Apr.2010.

[22]JM18.2Reference Software[Online].Available:http://iphome.hhi.de/

suehring/tml/download

[23]M.Wien,“Variable block-size transform for H.264/A VC,”IEEE Trans.

Circuits Syst.Video Technol.,vol.13,no.7,pp.604–619,Jul.2003.

[24]G.J.Sullivan and J.Xu,Comparison of Compression Performance of

HEVC Working Draft5with AVC High Pro?le,JCTVC-H0360,San Jose,CA,Feb.2012.

[25] B.Bross,W.-J.Han,G.J.Sullivan,J.-R.Ohm,and T.Wiegand,

High Ef?ciency Video Coding(HEVC)Text Speci?cation Draft7, ITU-T/ISO/IEC Joint Collaborative Team on Video Coding(JCT-VC) document JCTVC-I1003,May2012.

[26]K.Ugur and A.Saxena,CE1:Summary Report of Core Experiment

on Intra Transform Mode Dependency Simpli?cations,JCTVC-J0021, Stockholm,Sweden,Jul.2012.

[27]R.Cohen,C.Yeo,R.Joshi,and F.Fernandes,CE7:Summary Report of

Core Experiment on Additional Transforms,document JCTVC-H0037,

San Jose,CA,Feb.

2012.

Jani Lainema received the M.Sc.degree in com-puter science from the Tampere University of Tech-nology,Tampere,Finland,in1996.

Since1996,he has been with the Visual Commu-nications Laboratory,Nokia Research Center,Tam-pere,where he contributes to the designs of ITU-T’s and MPEG’s video coding standards as well as to the evolution of different multimedia service standards in3GPP,DVB,and DLNA.He is currently a Prin-cipal Scientist with Visual Media,Nokia Research Center.His current research interests include video,

image,and graphics coding and communications,and practical applications of game theory.Frank Bossen(M’04)received the M.Sc.degree in computer science and the Ph.D.degree in communication systems from the Ecole Polytechnique Fédérale de Lausanne,Lausanne,Switzerland,in1996and1999,respectively.

He has been active in video coding standardization since1995and has held a number of positions with IBM,Yorktown Heights,NY;Sony,Tokyo, Japan;GE,Ecublens,Switzerland;and NTT DOCOMO,San Jose,CA.He is currently a Research Fellow with DOCOMO Innovations,Inc.,Palo Alto,

CA.

Woo-Jin Han(M’02)received the M.S.and Ph.D.

degrees in computer science from the Korea Ad-

vanced Institute of Science and Technology,Dae-

jeon,Korea,in1997and2002,respectively.

He is currently a Professor with the Department

of Software Design and Management,Gachon Uni-

versity,Seongnam,Korea.From2003to2011,he

was a Principal Engineer with the Multimedia Plat-

form Laboratory,Digital Media and Communication

Research and Development Center,Samsung Elec-

tronics,Suwon,Korea.Since2003,he has been contributing successfully to the ISO/IEC Moving Pictures Experts Group, Joint Video Team,and Joint Collaborative Team standardization effort. His current research interests include high ef?ciency video compression techniques,scalable video coding,multiview synthesis,and visual contents understanding.

Dr.Han was an editor of the HEVC video coding standard in

2010.

Junghye Min received the B.S.degree in mathemat-

ics from Ewha Women’s University,Seoul,Korea,in

1995,and the M.E.and Ph.D.degrees in computer

science and engineering from Pennsylvania State

University,University Park,PA,in2003and2005,

respectively.

From1995to1999,she was a S/W Engineer

with Telecommunication Systems Business,Sam-

sung Electronics,Suwon,Korea.She is currently

a Principal Engineer with the Multimedia Platform

Laboratory,Digital Media and Communications Re-search and Development Center,Samsung Electronics,Suwon.Her current research interests include video content analysis,motion tracking,pattern recognition,and video

coding.

Kemal Ugur received the M.Sc.degree in electrical

and computer engineering from the University of

British Columbia,Vancouver,BC,Canada,in2004,

and the Doctorate degree from the Tampere Univer-

sity of Technology,Tampere,Finland,in2010.

Currently,he is a Research Leader with the Nokia

Research Center,Tampere,working on the devel-

opment of next-generation audiovisual technologies

and standards.Since joining Nokia,he has actively

participated in several standardization forums,such

as the Joint Video Team’s(JVT)work on the stan-dardization of the multiview video coding extension of H.264/MPEG-4A VC, and the Video Coding Experts Group’s(VCEG)explorations toward a next-generation video coding standard,the3GPP activities for mobile broadcast and multicast standardization,and recently,the Joint Collaborative Team on Video Coding(JCT-VC)for development of high ef?ciency video coding (HEVC)standard.He has authored more than30publications in academic conferences and journals and has over40patents pending.

Dr.Ugur is a member of the research team that received the Nokia Quality Award in2006.

编码器使用与设置要点

从增量值编码器到绝对值编码器 旋转增量值编码器以转动时输出脉冲,通过计数设备来计算其位置,当编码器不动或停电时,依靠计数设备的内部记忆来记住位置。这样,当停电后,编码器不能有任何的移动,当来电工作时,编码器输出脉冲过程中,也不能有干扰而丢失脉冲,不然,计数设备计算并记忆的零点就会偏移,而且这种偏移的量是无从知道的,只有错误的生产结果出现后才能知道。 解决的方法是增加参考点,编码器每经过参考点,将参考位置修正进计数设备的记忆位置。在参考点以前,是不能保证位置的准确性的。为此,在工控中就有每次操作先找参考点,开机找零等方法。 这样的方法对有些工控项目比较麻烦,甚至不允许开机找零(开机后就要知道准确位置),于是就有了绝对编码器的出现。 绝对编码器光码盘上有许多道光通道刻线,每道刻线依次以2线、4线、8线、16线。。。。。。编排,这样,在编码器的每一个位置,通过读取每道刻线的通、暗,获得一组从2的零次方到2的n-1次方的唯一的2进制编码(格雷码),这就称为n位绝对编码器。这样的编码器是由光电码盘的机械位置决定的,它不受停电、干扰的影响。 绝对编码器由机械位置决定的每个位置是唯一的,它无需记忆,无需找参考点,而且不用一直计数,什么时候需要知道位置,什么时候就去读取它的位置。这样,编码器的抗干扰特性、数据的可靠性大大提高了。 从单圈绝对值编码器到多圈绝对值编码器 旋转单圈绝对值编码器,以转动中测量光电码盘各道刻线,以获取唯一的编码,当转动超过360度时,编码又回到原点,这样就不符合绝对编码唯一的原则,这样的编码只能用于旋转范围360度以内的测量,称为单圈绝对值编码器。 如果要测量旋转超过360度范围,就要用到多圈绝对值编码器。 编码器生产厂家运用钟表齿轮机械的原理,当中心码盘旋转时,通过齿轮传动另一组码盘(或多组齿轮,多组码盘),在单圈编码的基础上再增加圈数的编码,以扩大编码器的测量范围,这样的绝对编码器就称为多圈式绝对编码器,它同样是由机械位置确定编码,每个位置编码唯一不重复,而无需记忆。

图像的帧内预测编码

图像的帧内预测编码 设计内容:对一幅彩色或者灰度图像二维帧内预测编码,采用4阶线性预测器,根据最小均方误差原则(MMSE)设计预测器系数,并对预测误差进行量化处理,根据量化后的误差得到解码图像。 设计目的:掌握图像的预测编解码的原理。 课题要求:对任意大小的输入图像进行二维帧内预测编码,设计最佳预测器系数,比较不同图像预测器系数的共同点和不同点。对预测误差进行量化处理后,根据误差图像接到解码图像,对比图像质量,计算信噪比。以下是从网上找的 clc; clear all; close all; I2=imread('D:\MATLAB7\tuxiang\messi.bmp');%读入图片 I=double(I2);%设定I是double类型 fid=fopen('mydata.dat','w'); [m,n]=size(I); J=ones(m,n); J(1:m,1)=I(1:m,1); J(1,1:n)=I(1,1:n); J(1:m,n)=I(1:m,n);%把I(1,1)赋值给J(1,1) J(m,1:n)=I(m,1:n); for k=2:m-1 for l=2:n-1 J(k,l)=I(k,l)-(I(k,l-1)/2+I(k-1,l)/4+I(k-1,l-1)/8+I(k-1,l+1)/8);%前值预测,J为要拿来编码传输的误差 end end J=round(J) cont=fwrite(fid,J,'int8'); %解码 cc=fclose(fid); fid=fopen('mydata.dat','r'); I1=fread(fid,cont,'int8'); tt=1; B=ones(m,n) for l=1:n for k=1:m B(k,l)=I1(tt); tt=tt+1; end end B=double(B);

基于帧率逐级自适应的视频编码

基于帧率逐级自适应的视频编码 【摘要】传统帧率控制算法当场景持续的大运动量变化时,会有帧率波动过大、忽快忽慢的不连贯性而造成的闪烁感。为了解决这个问题,我们设计了基于帧率逐级自适应的算法,通过采用逐级自适应地调整目标帧率,以降低跳帧控制中的一次性跳帧数目,来达到帧率平稳以及图像质量提高的目的。 【关键词】码率;帧率;自适应;场景变换检测 1.引言 在多媒体通信系统中,以下因素直接影响视频图像质量。 1.1视频编码器的过高的输出码率会导致网络拥塞、丢包,而造成图像质量急剧下降;而过低的输出码率,也会导致图像质量下降和对网络资源的浪费。 1.2帧率的波动大小,也会直接影响到对图像效果的主观感受。如图1所示,横坐标为时间(单位:秒),纵坐标为帧率(单位:fps),最大帧率和最小帧率变化剧烈,如此连贯性不一致的现象会造成图像中运动的人或物体跳动和闪烁的主观感受。 因此,为了最终获得质量较高的视频图像,不仅要使编码器的输出码率尽可能的有效利用网络带宽资源,还要考虑输出图像帧率的波动大小。 图1 一般帧率变化曲线 目前现有的控制帧率波动的算法是,通过对场景变换的检测,实现图像在场景变换较小的时候进行跳帧,以便给场景变换较大时,分配较多比特进行编码,保证图像质量和连贯性。如下图所示: 图 2 本发明相关方案原理图 这种算法的缺点是。 1.2.1当场景持续的大运动量变化时,虽然存在某一瞬时的场景变化较小,但是由于时间过短,而没有可用来跳过的帧数,最终导致该算法会失效。 1.2.2实际网络的状况会存在可用网络带宽资源不恒定的情况,而该方案没有考虑网络自适应性能。 为了克服帧率波动过大、忽快忽慢的不连贯性而造成的闪烁感,我们设计了基于帧率逐级自适应的算法,这种算法同时兼顾了网络带宽自适应。

旋转编码器工作原理

增量式旋转编码器工作原理 增量式旋转编码器通过内部两个光敏接受管转化其角度码盘的时序和相位关系,得到其角度码盘角度位移量增加(正方向)或减少(负方向)。在接合数字电路特别是单片机后,增量式旋转编码器在角度测量和角速度测量较绝对式旋转编码器更具有廉价和简易的优势。 下面对增量式旋转编码器的内部工作原理(附图) A,B两点对应两个光敏接受管,A,B两点间距为 S2 ,角度码盘的光栅间距分别为S0和S1。 当角度码盘以某个速度匀速转动时,那么可知输出波形图中的S0:S1:S2比值与实际图的S0:S1:S2比值相同,同理角度码盘以其他的速度匀速转动时,输出波形图中的S0:S1:S2比值与实际图的S0:S1:S2比值仍相同。如果角度码盘做变速运动,把它看成为多个运动周期(在下面定义)的组合,那么每个运动周期中输出波形图中的S0:S1:S2比值与实际图的S0:S1:S2比值仍相同。 通过输出波形图可知每个运动周期的时序为 A B 1 1 0 1 0 0 1 0 A B 1 1 1 0 0 0 0 1 我们把当前的A,B输出值保存起来,与下一个A,B输出值做比较,就可以轻易的得出角度码盘的运动方向, 如果光栅格S0等于S1时,也就是S0和S1弧度夹角相同,且S2等于S0的1/2,那么可得到此次角度码盘运动位移角度为S0弧度夹角的1/2,除以所消毫的时间,就得到此次角度码盘运动位移角速度。

S0等于S1时,且S2等于S0的1/2时,1/4个运动周期就可以得到运动方向位和位移角度,如果S0不等于S1,S2不等于S0的1/2,那么要1个运动周期才可以得到运动方向位和位移角度了。 旋转编码器只有增量型和绝对值型两种吗?这两种旋转编码器如何区分?工作原理有何不同? 只有增量型和绝对型 增量型只是测角位移(间接为角速度)增量,以前一时刻为基点.而绝对型测从开始工作后角位移量. 增量型测小角度准,大角度有累积误差 绝对型测小角度相对不准,但大角度无累积误差 旋转编码器是用来测量转速的装置。它分为单路输出和双路输出两种。技术参数主要有每转脉冲数(几十个到几千个都有),和供电电压等。单路输出是指旋转编码器的输出是一组脉冲,而双路输出的旋转编码器输出两组相位差90度的脉冲,通过这两组脉冲不仅可以测量转速,还可以判断旋转的方向。 编码器的原理: 编码器的原理与应用 编码器是一种将角位移转换成一连串电数字脉冲的旋转式传感器,这些脉冲能用来控制角位移,如果编码器与齿条或螺旋杆结合在一起,也可于控制直线位移。 编码器中角位移的转换采用了光电扫描原理。读数系统是基于径向分度盘的旋转,该分度盘是由交替的透光窗口和不透光窗口构成的。此系统全部用一个红外光源垂直照射,这样光就把盘子和图像投射到接收器表面上,该接收器覆盖着一层光栅,称为准直仪,它具有和光盘相同的窗口。接收器的工作是感受光盘转动所产生的光变化,然后将光变化转换成相应的电变化。 增量型编码器 增量型编码器一般给出两种方波,它们的相位差90度,通常称为通道A和通道B。只有一个通道的读数给出与转速有关的信息,与此同时,通过所取得的第二通道信号与第一通道信号进行顺序对比的基础上,得到旋转方向的信号。还有一个可利用的信号称为Z通道或零通道,该通道给出编码器轴的绝对零位。此信号是一个方波,其相位与A通道在同一中心线上,宽度与A通道相同。 增量型编码器精度取决于机械和电气的因素,这些因素有:光栅分度误差、光盘偏心、轴承偏心、电子读数装置引入的误差以及光学部分的不精确性,误差存在于任何编码器中。 编码器如以信号原理来分,有增量型编码器,绝对型编码器。增量型编码器(旋转型) 工作原理: 由一个中心有轴的光电码盘,其上有环形通、暗的刻线,有光电发射和接收器件读取,获得四组正弦波信号组合成A、B、C、D,每个正弦波相差90度相位差(相对于一个周波为360度),将C、D信号反向 ,叠加在A、B两相上,可增强稳定信号;另每转输出一个Z相脉冲以代表零位参考位。 由于A、B两相相差90度,可通过比较A相在前还是B相在前,以判别编码器的正转与反转,通过零位脉冲,可获得编码器的零位参考位。 编码器码盘的材料有玻璃、金属、塑料,玻璃码盘是在玻璃上沉积很薄的刻线,其热稳定性好,精度高,金属码盘直接以通和不通刻线,不易碎,但由于金属有一定的厚度,精度就有限制,其热稳定性就要比玻璃的差一个数量级,塑料码盘是经济型的,其成本低,但精度、热稳定性、寿命均要差一些。

帧内预测模式

帧内宏块预测编码模式的改进:主要是提前退出,从而减少运算次数,达到提高运动估计速度的目标。或者根据周围块的预测编码模式提前预判本块可能的编码模式,减少计算量。还可以采取减少编码模式的策略,将很少使用的编码模式直接省略。利用纹理信息预先排除不可能的编码模式。 1、提前预判编码模式:根据周围块的预测模式提前预判本块编码模式。 (1)16X16块时 当LEFT和TOP都存在时,其编码模式最多有4种,I_PRED_16x16_V | I_PRED_16x16_H | I_PRED_16x16_DC | I_PRED_16x16_P; 当只有左块LEFT存在时,其编码模式有两种:I_PRED_16x16_DC_LEFT | I_PRED_16x16_H; 当只有上块TOP存在时,其编码模式有两种:I_PRED_16x16_DC_TOP | I_PRED_16x16_V;当左块和上块都不存在时,其编码模式只能为:I_PRED_16x16_DC_128。 (2)4X4块时 当LEFT和TOP都存在时,可能编码模式分两种情形: (A)当左上块也存在时,有9种:I_PRED_4x4_DC | I_PRED_4x4_H | I_PRED_4x4_V | I_PRED_4x4_DDL | I_PRED_4x4_DDR | I_PRED_4x4_VR | I_PRED_4x4_HD | I_PRED_4x4_VL | I_PRED_4x4_HU; (B)当左上块不存在时,有6种:I_PRED_4x4_DC | I_PRED_4x4_H | I_PRED_4x4_V | I_PRED_4x4_DDL | I_PRED_4x4_VL | I_PRED_4x4_HU; 当只有左块存在时,可能编码模式有3种:I_PRED_4x4_DC_LEFT | I_PRED_4x4_H | I_PRED_4x4_HU; 当只有上块存在时,可能编码模式有4种:I_PRED_4x4_DC_TOP | I_PRED_4x4_V | I_PRED_4x4_DDL | I_PRED_4x4_VL; 当左块与上块都不存在时,其编码模式只能为:I_PRED_4x4_DC_128。 (3)提前预判准则:在预测编码中,由于每帧图像的第一列宏块和第一行宏块的编码模式对其他块的编码模式起着非常重要的作用,所以对第一行和第一列宏块的编码模式不进行提前预判别。除此之外,当左块和上块都存在且它们的编码模式相同时:PRED_MODE = LEFT_MODE = TOP_MODE。(包括4X4分块及16X16宏块)考虑其最可能的预测模式为垂直模式,所以当垂直模式与水平模式代价相等时,首选垂直模式为其最优模式。 2、提前跳出:主要针对是否进行4X4分块;如果进行4X4分块,能否提前结束4X4块的编码模式代价运算。 (1)当左块和上块都存在,且其最小代价编码模式都为16X16时,不进行4X4分块运算。(2)对于16X16宏块,当该16X16块的某次编码模式代价很小时(某个阈值),直接结束代价运算,并把该次编码模式选定为该16X16块代价最小16X16编码模式 (3)如果进行4X4分块运算,当该4X4块的某个编码模式代价很小时(某个阈值),直接结束代价运算,并把该次编码模式选定为该4X4块代价最小4X4编码模式。 (4)如果4X4块编码代价和已经大于16X16模式时,提前结束4X4分块代价运算,并且选用16X16模式为最小代价编码模式。 3、利用纹理信息:根据纹理信息预先排除或者选择可能的编码模式 (1)16X16块时 (A)当纹理信息极其复杂(可以计算宏块像素点差值的平方和,即当其大于某个阈值时,也即无明显边界),直接将该16X16块编码模式设置为I_PRED_16x16_DC_128。 (B)如果纹理很复杂时,直接进行4X4块子编码模式代价计算(可以计算宏块像素点差值的平方和,即当其大于某个阈值且不满足A中的条件时)

视频图像帧内编码

视频图像帧内编码 --国立华侨大学 一实验目的 1.了解多媒体通信中图像压缩技术 2.熟悉视频帧内压缩编码过程 3.掌握二维DCT变换算法 二实验原理 视频帧内编码有多种模式,最基本的是基于8×8块的DCT顺序编码,将一帧图像分为8×8的块,然后按照从左至右、自上而下的顺序,对块进行DCT、量化和熵编码。其编、解码框图如下: 基于DCT的编码器 图1 基于DCT的顺序编码框图 DCT解码器 图2 基于DCT的顺序解压缩框图 视频帧内压缩编码算法的主要步骤: 1)正向离散余弦变换(DCT)。 2)量化(quantization)。 3)Z字形扫描(zigzag scan)。 4)使用差分脉冲编码调制(differential pulse code modulation,

DPCM)对直流系数(DC)进行编码。 5)使用行程长度编码(run-length encoding,RLE)对交流系数(AC) 进行编码。 6)熵编码(entropy coding)。 三实验过程 实验利用MATLAB仿真软件来实现 程序:I=imread('D:\p_large_iUNl_627c0001a3192d12.bmp') figure(1),imshow(I); title('原图像') I=rgb2gray(I); %将真彩色RGB图像转换成灰度图像 figure(11),imshow(I); title('灰度图像') I=im2double(I);% double(I)是将I变成double类型的。im2double(I)是将图象变成double类型的再归一化,比如对于8比特图象,就是将原来像素值除以255。 fun_1=@dct2; A_1=blkproc(I,[8 8],fun_1); figure(2),imshow(A_1); title('离散余弦变换后的图像') T=[0.3536 0.3536 0.3536 0.3536 0.3536 0.3536 0.3536 0.3536 0.4904 0.4157 0.2778 0.0975 -0.0975 -0.2778 -0.4157 -0.4904 0.4619 0.1913 -0.1913 -0.4619 -0.4619 -0.1913 0.1913 0.4619 0.4157 -0.0975 -0.4904 -0.2778 0.2778 0.4904 0.0975 -0.4157 0.3536 -0.3536 -0.3536 0.3536 0.3536 -0.3536 -0.3536 0.3536 0.2778 -0.4904 0.0975 0.4157 -0.4157 -0.0975 0.4904 -0.2778 0.1913 -0.4619 0.4619 -0.1913 -0.1913 0.4619 -0.4619 0.1913 0.0975 -0.2778 0.4157 -0.4904 0.4904 -0.4157 0.2778 -0.0975] A_2=blkproc(A_1,[8 8],'x./P1',T); figure(3),imshow(A_2); title('量化后的图像') A_3=blkproc(A_2,[8 8],'x.*P1',T); figure(4),imshow(A_3); title('逆量化后的图像') fun_2=@idct2;

帧间预测原理及过程函数

帧间预测是采用基于块的运动补偿从一个或多个先前编码的图像帧中产生一个预测模型的。 H.264与早起标准的主要不同之处在于支持不同的块尺寸(从16×16到4×4)以及支持精细子像素精度的运动矢量(亮度成分是1/4像素精度) 每个宏块(16×16)的亮度分量可以按四种方式划分,即按一个16×16块,或两个16×8块,或两个8×16块,或者4个8×8块的划分进行运动补偿。如果选择8×8模式,宏块中的4个8×8子宏块可以用另一种方式进一步划分,或者作为一个8×8块,或作为两个8×4块,或作为两个4×8块,或者作为四个4×4块。 每个分块或者子宏块都产生一个单独的运动矢量。每个运动矢量均需要编码和传输,同时分块模式信息需要进行编码并放在压缩比特流中。 每个色度块按照与亮度分量同样的分块方式进行划分。 编码每个分块的运动矢量需要大量比特位。由于相邻块的运动矢量高度相关,所以每个块的运动矢量都是从邻近的先前编码块中进行预测得到的。当前运动矢量与预测运动矢量MVp 的差值MVD被编码和传输。 MVp的预测规则如下: 假设E是当前宏块、子宏块或子宏块分块,A是E左边的分块或子分块,B是E上边的分块或子分块,C是E右上的分块或子分块。如果E左边的分块数大于1,则最上边的分块被选为A。如果E上边的分块数大于1,则最左边的分块被选为B。 1.除了16×8和8×16两种分块尺寸的其余传输块,MVp是分块A、B、C的运动矢量的中值(不是平均值) 2.对于16×8分块,上边16×8分块的MVp是从B预测得到的,下边16×8分块的MVp 是从A预测得到的。 3.对于8×16分块,左边8×16分块的MVp是从A预测得到的,右边8×16分块的MVp 是从C预测得到的。 4.对于skip宏块,产生一个16×16块的MVp,和第1种情况一样。MVp的形成规则相应修改。 如果得不到一个或多个先前传输块的话(如,它在当前条带之外),则MVp的形成原则相应修改。 ——————————————————————————————————————–Yeah! 又可以看实例了: 这里对foreman_part_qcif.yuv的第二帧中地址为40的宏块(白色框框住,图贴在文章开头)进行分析,关键代码还是在encode_one_macroblock_high中,由于该帧是P帧,所以会进行帧间预测。其中最重要的函数为BlockMotionSearch,该函数为所有大小的分块完成运动搜

哈夫曼编码步骤

哈夫曼编码步骤: 一、对给定的n个权值{W1,W2,W3,...,Wi,...,Wn}构成n棵二叉树的初始集合F= {T1,T2,T3,...,Ti,...,Tn},其中每棵二叉树Ti中只有一个权值为Wi的根结点,它的左右子树均为空。(为方便在计算机上实现算法,一般还要求以Ti的权值Wi的升序排列。) 二、在F中选取两棵根结点权值最小的树作为新构造的二叉树的左右子树,新二叉树的根结点的权值为其左右子树的根结点的权值之和。 三、从F中删除这两棵树,并把这棵新的二叉树同样以升序排列加入到集合F中。 四、重复二和三两步,直到集合F中只有一棵二叉树为止。 /*------------------------------------------------------------------------- * Name: 哈夫曼编码源代码。 * Date: 2011.04.16 * Author: Jeffrey Hill+Jezze(解码部分) * 在Win-TC 下测试通过 * 实现过程:着先通过HuffmanTree() 函数构造哈夫曼树,然后在主函数main()中 * 自底向上开始(也就是从数组序号为零的结点开始)向上层层判断,若在 * 父结点左侧,则置码为0,若在右侧,则置码为1。最后输出生成的编码。*------------------------------------------------------------------------*/ #include

X264帧内预测编码模式

X264帧内预测编码模式 帧内宏块预测编码模式:分别计算16X16和16个4X4块的代价,取两者中最小代价为该宏块的编码模式。 1、进行16X16模式的预测 (1)根据周围宏块的情况判断其可能的预测模式。(主要是上块TOP和左块LEFT) (2)计算各种可能模式的编码代价 (3)取最小代价 2、进行4X4块模式的预测 (1)根据周围宏块情况判断其可能的预测模式。(可以参考其他相邻宏块) (2)计算每个4X4块的每种预测模式的编码代价,并取代价最小 (3)将16个4X4块的最小代价相加,得到总代价和。 3、将16X16模式的代价与4X4模式的代价和进行比较,取两者最小为最后的宏块预测编码模式。 X264中的代码分析: static void x264_mb_analyse_intra( x264_t *h, x264_mb_analysis_t *a, int i_satd_inter )//函数功能:帧内预测编码模式选择 { const unsigned int flags = h->sh.i_type == SLICE_TYPE_I ? h->param.analyse.intra : h->param.analyse.inter; //判断是进行I片内的宏块帧内预测编码还是P或B片(帧间片)内的帧内模式预测编码uint8_t *p_src = h->mb.pic.p_fenc[0]; uint8_t *p_dst = h->mb.pic.p_fdec[0]; int i, idx; int i_max; int predict_mode[9]; int b_merged_satd = h->pixf.intra_satd_x3_16x16 && h->pixf.mbcmp[0] == h->pixf.satd[0]; /*---------------- Try all mode and calculate their score ---------------*/ /* 16x16 prediction selection */ predict_16x16_mode_available( h->mb.i_neighbour, predict_mode, &i_max );//获取16X16的可用预测编码模式 if( b_merged_satd && i_max == 4 )//如果b_merged_satd不为0且可用预测编码模式有4种,I帧时直接跳过 { h->pixf.intra_satd_x3_16x16( p_src, p_dst, a->i_satd_i16x16_dir ); h->predict_16x16[I_PRED_16x16_P]( p_dst ); a->i_satd_i16x16_dir[I_PRED_16x16_P] = h->pixf.mbcmp[PIXEL_16x16]( p_dst, FDEC_STRIDE, p_src, FENC_STRIDE );

视频编码帧介绍

视频编码之I帧率、P帧、B帧 1. 视频传输原理 视频是利用人眼视觉暂留的原理,通过播放一系列的图片,使人眼产生运动的感觉。单纯传输视频画面,视频量非常大,对现有的网络和存储来说是不可接受的。 为了能够使视频便于传输和存储,人们发现视频有大量重复的信息,如果将重复信息在发送端去掉,在接收端恢复出来,这样就大大减少了视频数据的文件,因此有 了H.264视频压缩标准。 在H.264压缩标准中I帧、P帧、B帧用于表示传输的视频画面。 1、I帧 I帧又称帧内编码帧,是一种自带全部信息的独立帧,无需参考其他图像便可独立进行解码,可以简单理解为一张静态画面。视频序列中的第一个帧始终都是I帧,因为它是关键帧。 2、P帧 P帧又称帧间预测编码帧,需要参考前面的I帧才能进行编码。表示的是当前帧画面与前一帧(前一帧可能是I帧也可能是P帧)的差别。解码时需要用之 前缓存的画面叠加上本帧定义的差别,生成最终画面。与I 帧相比,P帧通常占用更少的数据位,但不足是,由于P帧对前面的P和I参考帧有着复杂的依耐性,因 此对传输错误非常敏感。 3、B帧 B帧又称双向预测编码帧,也就是B帧记录的是本帧与前后帧的差别。也就是说要解码B帧,不仅要取得之前的缓存画面,还要解码之后的画面,通过前后画面的与本帧数据的叠加取得最终的画面。B帧压缩率高,但是对解码性能要求较高。 总结: I帧只需考虑本帧;P帧记录的是与前一帧的差别;B帧记录的是前一帧及后一帧的差别,能节约更多的空间,视频文件小了,但相对来说解码的时候就比较麻烦。因为在解码时,不仅要用之前缓存的画面,而且要知道下一个I或者P的画面,对于不支持B帧解码的播放器容易卡顿。 视频监控系统中预览的视频画面是实时的,对画面的流畅性要求较高。采用I帧、P帧进行视频传输可以提高网络的适应能力,且能降低解码成本所以现阶段的视频解码都只采用I帧和P帧进行传输。海康摄像机编码,I帧间隔是50,含49个P帧。

帧间预测运动估计算法研究

帧间预测运动估计算法研究 帧间预测编码法是视频编码过程中消除冗余的重要方法。运动估计和运动补偿技术是视频帧间预测编码中的核心技术。详细研究了块匹配运动估计的基本原理,重点介绍了几种经典的块匹配运动估计算法,通过实验定性地评价了各算法的性能特点,分析了各算法的优缺点,总结出了运动估计算法优化的方向,对目前运动估计技术的研究和设计具有重要意义。 标签:帧间预测编码;时间冗余;块匹配;运动估计;运动矢量 Abstract:Motion estimation and motion compensation are the core technologies in video inter-frame prediction coding. The basic principle of block matching motion estimation is studied in detail,and several classical block matching motion estimation algorithms are introduced in detail. The performance characteristics of each algorithm are evaluated qualitatively through experiments,and the advantages and disadvantages of each algorithm are analyzed. Keywords:interframe prediction coding;time redundancy;block matching;motion estimation;motion vector 引言 幀间预测是视频编码的关键内容,而运动估计是其核心。据统计在H.264/A VC编码中运动估计约占全部计算量的60%到80%,所以运动估计算法的性能至关重要。块匹配算法广泛应用标准视频编码。 在基于块匹配的运动估计算法中,对每一帧图像都被分成大小相同的宏块,然后以宏块为基本处理单元。最后对预测差值、运动矢量和相应的参考索引进行编码。 1 帧间预测原理 1.1 运动估计 在序列图像中,邻近帧存在着一定的相关性。因此,可将活动图像分成若干块或宏块,在参考帧中定义的搜索区域,按照一定的匹配准则,搜索出每个块或宏块在参考帧图像中的匹配块,并得出两者之间的空间位置的相对偏移量,即运动矢量。当前块从参考帧中求取最佳匹配块得到运动矢量的过程被称为运动估计[2]。运动估计的原理如图1。 假设当前帧为P,参考帧为Pr,当前编码块为B,B*与B在图像中坐标位置相同。在Pr中,按照搜索准则,寻找与B块相减残差最小的匹配块Br。这个过程就是运动估计,Br左上角坐标(xr,yr)与B*左上角坐标(x,y)之差,

案例五旋转编码器的安装与应用

案例五旋转编码器的安装与应用 1.项目训练目的 掌握旋转编码器的安装与使用方法。 2.项目训练设备 旋转编码器及相应耦合器一套。 3.项目训练内容 先熟悉旋转编码器的使用说明书。 (1)旋转编码的安装步骤及注意事项 ①安装步骤: 第一步:把耦合器穿到轴上。不要用螺钉固定耦合器和轴。 第二步:固定旋转编码器。编码器的轴与耦合器连接时,插入量不能超过下列值。 E69-C04B型耦合器,插入量 5.2mm;E69-C06B型耦合器,插人量 5.5mm;E69-Cl0B型耦合器,插入量7.lmm。 第三步:固定耦合器。紧固力矩不能超过下列值。E69-C04B型耦合器,紧固力矩2.0kfg?cm;E69-C06B型耦合器,紧固力矩 2.5kgf?cm;E69B-Cl0B型耦合器,紧固力矩4.5kfg?cm。 第四步:连接电源输出线。配线时必须关断电源。 第五步:检查电源投入使用。 ②注意事项: 采用标准耦合器时,应在允许值内安装。如图5-1所示。 图5-1 标准耦合器安装 连接带及齿轮结合时,先用别的轴承支住,再将旋转编码器和耦合器结合起来。如图 5-2所示。 图5-2 旋转编码器安装 齿轮连接时,注意勿使轴受到过大荷重。 用螺钉紧固旋转编码器时,应用5kfg?cm左右的紧固力矩。 固定本体进行配线时,不要用大于3kg的力量拉线。 可逆旋转使用时,应注意本体的安装方向和加减法方向。 把设置的装置原点和编码器的Z相对准时,必须边确定Z相输出边安装耦合器。 使用时勿使本体上粘水滴和油污。如浸入内部会产生故障。 (2)配线及连接

①配线应在电源0FF状态下进行。电源接通时,若输出线接触电源线,则有时会损坏输出回路。 ②若配线错误,则有时会损坏内部回路,所以配线时应充分注意电源的极性等。 ③若和高压线、动力线并行配线,则有时会受到感应造成误动作或损坏。 ④延长电线时,应在10m以下。还由于电线的分布容量,波形的上升、下降时间会延长,所以有问题时,应采用施密特回路等对波形进行整形。 还有为了避免感应噪声等,也要尽量用最短距离配线。集成电路输人时,要特别注意。 ⑤电线延长时,因导体电阻及线间电容的影响。波形的上升、下降时间变长,容易产 生信号间的干扰(串音),因此应使用电阻小、线间电容低的电线(双绞线、屏蔽线)。

一种快速AVS2帧内预测算法

一种快速AVS2帧内预测算法 摘要:针对目前AVS2帧内预测编码模式的选择和计算过程相对复杂的问题,提出了一种基于零系数块和底层角度判决的AVS2帧内预测算法。该算法先判断当前子块是否为零系数块,避免对零系数块进行变换等帧内编码的复杂操作。对于非零系数块,通过底层角度判决,从理论上排除了至少40%不可能的预测模式。实验表明,该算法对压缩效率的影响很小,将PSNR下降控制在0.2dB内,平均比特数增加少于2%,编码时间至少减少26%,有效地降低帧内编码的复杂度。 关键词:AVS2;帧内预测;零系数块;编码单元;底层角度判决 1 概述 AVS是我国第一个拥有自主知识产权的音视频编码标准[1]。在高清、超高清等应用需求的推动下,更高压缩效率的视频编码技术迅速发展。在此基础的背景上,2012年,工作组开始准备新一代音视频编码标准的制定工作,截至2014年6月,制定工作基本完成,即(Audio Video coding Standard Ⅱ,AVS2)。经过测试发现,AVS2的编码效率比第一代标准提高一倍以上,与最新国际标准HEVC(High Efficiency Video

Coding)相当[2]。 AVS2采用的关键技术主要有预测编码、变换编码和熵编码等。统计并比较AVS2各部分的编码时间可以发现,帧内预测部分消耗的时间(约35%)在各主要部分中占首位,新技术在提高压缩效率的同时,也显著增加了编码复杂度。 目前针对如何降低视频编码帧内预测的计算复杂度的研究有,雷海军,危雄,杨张等提出一种基于边缘方向强度检测的快速帧内预测模式决策算法[3],但该算法主要针对HEVC;陈云善,苏宛新,王春霞等提出一种基于(Sum of Absolute Transformed Difference,SATD)准则和空间相关性的快速帧内预测算法[4]来优化帧内预测模式的选择过程;PALOMINO D,CAVICHIOLI E,SUSIN A提出一种基于在编码树块的新检测顺序的快速帧内模式决策算法[5]。文章在结合零系数块的基础上,针对如何降低帧内预测模式,提出一种基于零系数块和底层角度判决的AVS2帧内预测算法。 2 AVS2帧内预测主要结构 AVS2采用四叉树编码结构,从图1中我们可以看到,将一幅图像划分为若干个最大编码单元(Largest Coding Unit,LCU),其最大尺寸为64×64。然后按照四叉树递归的方式可以将LCU划分为各种尺寸的编码单元CU,CU的尺寸可以表示成L×L的样式,L的取值有8,16,32或64。 不同的编码单元可以通过不同的方式划分成预测单元

【CN110022477A】一种基于CUTree的帧间预测模式快速选择方法【专利】

(19)中华人民共和国国家知识产权局 (12)发明专利申请 (10)申请公布号 (43)申请公布日 (21)申请号 201910248674.6 (22)申请日 2019.03.29 (71)申请人 中南大学 地址 410083 湖南省长沙市岳麓区麓山南 路932号 (72)发明人 张昊 向广 (74)专利代理机构 广州嘉权专利商标事务所有 限公司 44205 代理人 伍传松 (51)Int.Cl. H04N 19/122(2014.01) H04N 19/159(2014.01) H04N 19/30(2014.01) H04N 19/96(2014.01) (54)发明名称 一种基于CUTree的帧间预测模式快速选择 方法 (57)摘要 本发明提供了一种基于CUTree的帧间预测 模式快速选择方法,属于视频编码解码技术领 域。该方法针对CUTree对SATD的加权处理后的 值,将其进行归一化后作为该CU在帧间预测做模 式选择时的条件,减少了不必要的编码时间,提 高了编码效率,所提供的方法简单易行,有利于 在其他编码标准中进行推广。权利要求书2页 说明书13页 附图1页CN 110022477 A 2019.07.16 C N 110022477 A

权 利 要 求 书1/2页CN 110022477 A 1.一种基于CUTree的帧间预测模式快速选择方法,其特征在于,步骤包括: (1)获取当前CU深度层d的标志位flag_satdcost值; (2)判断所述CU是否都处于图像的内部:若是,进行步骤(3);若不是,直接进入下一深度层d+1的划分;即由当前CU往下四叉树划分为4个子CU; (3)判断是否进行选择Merge模式或Skip模式:若是,计算帧间预测中的Merge模式和Skip模式的RDcost,选出两者中其RDcost较小的模式作为暂时最佳模式;若否,跳过当前深度对Merge模式和Skip模式的选择,直接进行步骤(4); (4)判断是否进行下一CU深度的划分:若是,将CU进行下一深度d+1的四叉树划分;若否,直接进行步骤(5); (5)判断是否进行其八种帧间模式的判断:若是,则进行八种帧间模式的模式选择;若否,直接进行下一深度的划分; (6)得到当前CU的最佳预测模式。 2.根据权利要求1所述的方法,其特征在于,步骤(1)中,若CU深度层为0层, 当为P帧时,SATD值<15000时,flag_satdcost赋值为0;15000

各种视频帧的区别

I,P,B帧和PTS,DTS的关系 基本概念: I frame :帧内编码帧又称intra picture,I 帧通常是每个GOP(MPEG 所使用的一种视频压缩技术)的第一个帧,经过适度地压缩,做为随机访问的参考点,可以当成图象。I帧可以看成是一个图像经过压缩后的产物。 P frame: 前向预测编码帧又称predictive-frame,通过充分将低于图像序列中 前面已编码帧的时间冗余信息来压缩传输数据量的编码图像,也叫预测帧; B frame: 双向预测内插编码帧又称bi-directional interpolated prediction frame,既考虑与源图像序列前面已编码帧,也顾及源图像序列后面已编码帧之间的时间冗余信息来压缩传输数据量的编码图像,也叫双向预测帧; PTS:Presentation Time Stamp。PTS主要用于度量解码后的视频帧什么时候 被显示出来 DTS:Decode Time Stamp。DTS主要是标识读入内存中的bit流在什么时 候开始送入解码器中进行解码。 在没有B帧存在的情况下DTS的顺序和PTS的顺序应该是一样的。 IPB帧的不同: I frame:自身可以通过视频解压算法解压成一张单独的完整的图片。 P frame:需要参考其前面的一个I frame 或者B frame来生成一张完整的图片。 B frame:则要参考其前一个I或者P帧及其后面的一个P帧来生成一张完整的图片。 两个I frame之间形成一个GOP,在x264中同时可以通过参数来设定bf的大小,即:I 和p或者两个P之间B的数量。 通过上述基本可以说明如果有B frame 存在的情况下一个GOP的最后一个frame一定是P. DTS和PTS的不同: DTS主要用于视频的解码,在解码阶段使用.PTS主要用于视频的同步和输出.在display的时候使用.在没有B frame的情况下.DTS和PTS的输出顺序是一样的.

伺服电机旋转编码器旋变安装

伺服电机旋转编码器安装 一.伺服电机转子反馈的检测相位与转子磁极相位的对齐方式 1.永磁交流伺服电机的编码器相位为何要与转子磁极相位对齐 其唯一目的就是要达成矢量控制的目标,使d轴励磁分量和q轴出力分量解耦,令永磁交流伺服电机定子绕组产生的电磁场始终正交于转子永磁场,从而获得最佳的出力效果,即“类直流特性”,这种控制方法也被称为磁场定向控制(FOC),达成FOC控制目标的外在表现就是永磁交流伺服电机的“相电流”波形始终与“相反电势”波形保持一致,如下图所示: 图1 因此反推可知,只要想办法令永磁交流伺服电机的“相电流”波形始终与“相反电势”波形保持一致,就可以达成FOC控制目标,使永磁交流伺服电机的初级电磁场与磁极永磁场正交,即波形间互差90度电角度,如下图所示: 图2 如何想办法使永磁交流伺服电机的“相电流”波形始终与“相反电势”波形保持一致呢?由图1可知,只要能够随时检测到正弦型反电势波形的电角度相位,然后就可以相对容易地根据电角度相位生成与反电势波形一致的正弦型相电流波形了。 在此需要明示的是,永磁交流伺服电机的所谓电角度就是a相(U相)相反电势波形的正弦(Sin)相位,因此相位对齐就可以转化为编码器相位与反电势波形相位的对齐关系;另一方面,电角度也是转子坐标系的d轴(直轴)与定子坐标系的a轴(U轴)或α轴之间的夹角,这一点有助于图形化分析。 在实际操作中,欧美厂商习惯于采用给电机的绕组通以小于额定电流的直流电流使电机转子定向的方法来对齐编码器和转子磁极的相位。当电机的绕组通入小于额定电流的直流电流时,在无外力条件下,初级电磁场与磁极永磁场相互作用,会相互吸引并定位至互差0度相位的平衡位置上,如下图所示: