Eye Activity Detection and Recognition Using Morphological Scale-Space Decomposition

Eye Activity Detection and Recognition

using Morphological Scale-space Decomposition

I.Ravyse,H.Sahli,M.J.T.Reinders,J.Cornelis

Vrije Universiteit Brussel,Dept.of Electronics and Information processing-ETRO

Pleinlaan2,B-1050Brussels,Belgium

icravyse,hsahli,jpcornel@etro.vub.ac.be

Delft University of Technology,Dept.of Electrical Engineering

P.O.Box5031,2600GA Delft,The Netherlands

M.J.T.Reinders@its.tudelft.nl

Published by15th International Conference on Pattern Recognition,ICPR2000,

Vol.1,pp.1080-1083,September3-8,2000,Barcelona,Spain

Abstract

Automatic recovery of eye gestures from image se-quences is one of the important topics for face recognition and model-based coding of videophone https://www.360docs.net/doc/9012750615.html,u-ally complicated models of the eye and its motion are used. In this contribution an eye gesture parameter estimation is described.A previously published automatic eye de-tection/tracking algorithm,based on template matching,is used for the eye pose detection.The eye gesture analysis is realised with a mathematical morphology scale-space ap-proach,forming spatio-temporal curves out of scale mea-surement statistics.The resulting curves provide a direct measure of the eye gesture,which can then be used as an eye animation parameter.Experimental results demonstrate the ef?ciency and robustness.

1.Introduction

Vision-based facial gesture analysis extracts,from an image sequence,spatio-temporal information about the fa-cial features pose and expressions.The modeling and tracking of faces and expressions is a topic of increas-ing interest.An overview can be found in[6].Numer-ous techniques have been proposed for facial feature detec-tion and facial expression(gesture)analysis such as tem-plate matching[5],principal component analysis[1],inter-polated views[7],deformable templates[15],active con-tours[9],optical?ow[4],physical-model coupled with op-tical?ow[8],etc

Here,we propose a tracking algorithm based on template matching to locate the positions of the eye regions in subse-quent frames,coupled to a scale space approach,namely the sieves,to extract eye gesture parameters from the gray-level data directly.The proposed method resembles the work done by Matthews et al.[11]for lip reading,but differs in the implementation and the analysis of the spatio-temporal curves for eye gesture parameter estimation.In this paper, the eye gesture is de?ned as the closing of the eyelids.The direction of gaze is not considered yet.The estimated low-level animation parameter is represented by an Action Unit (AU)[12].An AU stands for a small visible change in the facial expression.More precisely,AU43parameterizes the amount of eye closure.

The paper is organized as follows.Section2summa-rizes the approach for the tracking of eye regions.Section3 describes the sieve decomposition.In section4spatio-temporal curves of granules are introduced,from which ges-ture parameters are estimated.Section5discusses the ex-perimental results obtained with test sequences Suzie and Miss America.Final conclusions are drawn in section6.

2.Tracking eye regions

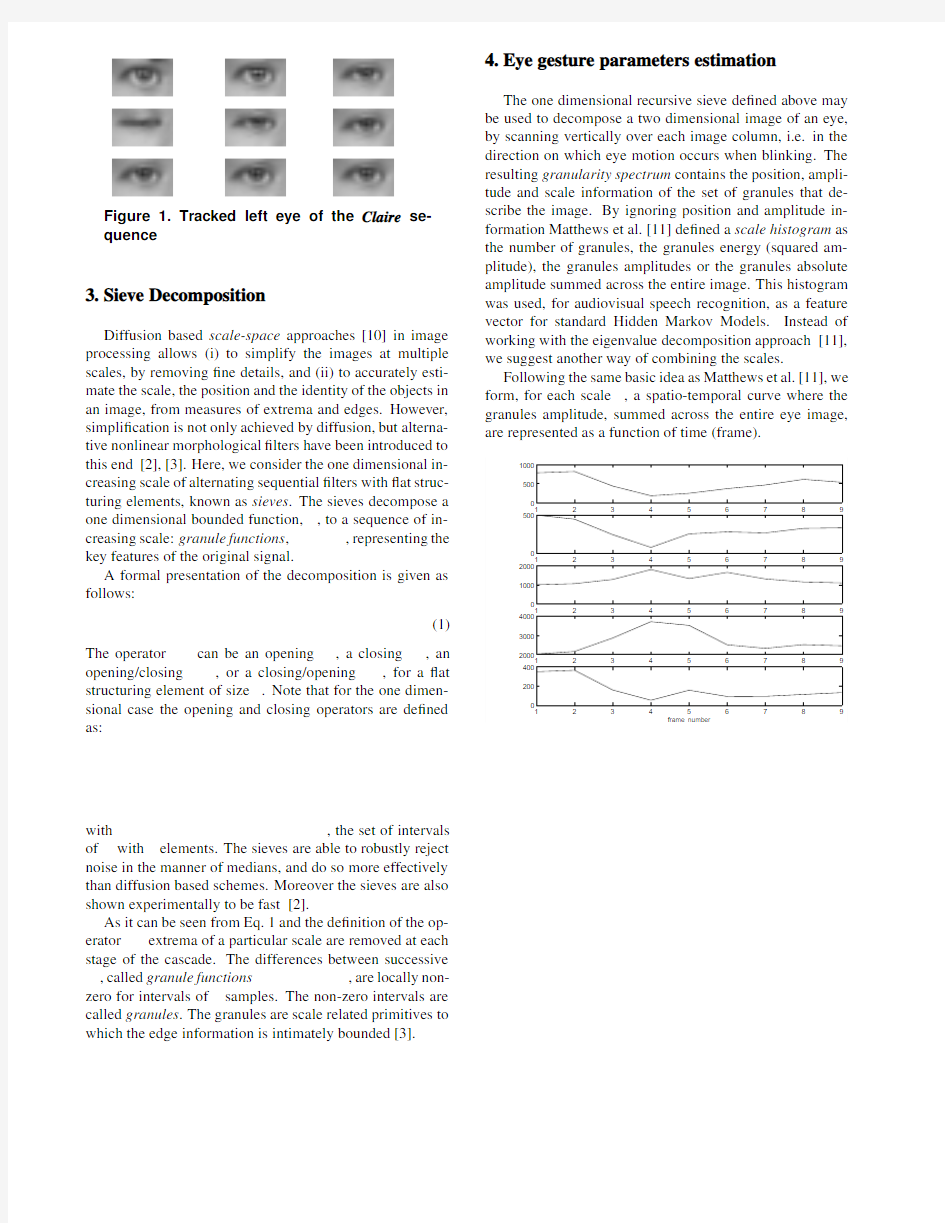

A previously published eye tracking scheme[14][13], is used for the tracking of the location of the left and right eye regions in image sequences.The proposed scheme is based on template matching,but is kept invariant for orien-tation,scale and shape by exploiting temporal information. To cope with appearance changes of the eye feature,a code-book of iconic views is automatically constructed during the eye region tracking.For each frame the position and orien-tation of the left and right eye region are provided as well as the normalized eye regions for the template matching. Figure1shows the detected left eye region for the?rst9 frames of the Claire sequence.These images will be used to illustrate our algorithm for eye state(gesture)estimation.

Figure1.Tracked left eye of the Claire se-

quence

3.Sieve Decomposition

Diffusion based scale-space approaches[10]in image processing allows(i)to simplify the images at multiple scales,by removing?ne details,and(ii)to accurately esti-mate the scale,the position and the identity of the objects in an image,from measures of extrema and edges.However, simpli?cation is not only achieved by diffusion,but alterna-tive nonlinear morphological?lters have been introduced to this end[2],[3].Here,we consider the one dimensional in-creasing scale of alternating sequential?lters with?at struc-turing elements,known as sieves.The sieves decompose a one dimensional bounded function,,to a sequence of in-creasing scale:granule functions,,representing the key features of the original signal.

A formal presentation of the decomposition is given as follows:

(1) The operator can be an opening,a closing,an opening/closing,or a closing/opening,for a?at structuring element of size.Note that for the one dimen-sional case the opening and closing operators are de?ned as:

with,the set of intervals of with elements.The sieves are able to robustly reject noise in the manner of medians,and do so more effectively than diffusion based schemes.Moreover the sieves are also shown experimentally to be fast[2].

As it can be seen from Eq.1and the de?nition of the op-erator extrema of a particular scale are removed at each stage of the cascade.The differences between successive ,called granule functions,are locally non-zero for intervals of samples.The non-zero intervals are called granules.The granules are scale related primitives to which the edge information is intimately bounded[3].4.Eye gesture parameters estimation

The one dimensional recursive sieve de?ned above may be used to decompose a two dimensional image of an eye, by scanning vertically over each image column,i.e.in the direction on which eye motion occurs when blinking.The resulting granularity spectrum contains the position,ampli-tude and scale information of the set of granules that de-scribe the image.By ignoring position and amplitude in-formation Matthews et al.[11]de?ned a scale histogram as the number of granules,the granules energy(squared am-plitude),the granules amplitudes or the granules absolute amplitude summed across the entire image.This histogram was used,for audiovisual speech recognition,as a feature vector for standard Hidden Markov Models.Instead of working with the eigenvalue decomposition approach[11], we suggest another way of combining the scales.

Following the same basic idea as Matthews et al.[11],we form,for each scale,a spatio-temporal curve where the granules amplitude,summed across the entire eye image, are represented as a function of time(frame).

their z-scores.The z-score of an observation in a sample ,...,is given by,where

and are the mean and the standard deviation of,respectively.Since the mean is sensitive to outliers, replacing it by the median provides more robust z-scores.

Let the granules amplitude summed across the im-age frame,for a given scale.The robust z-score for an observation is given by:

(2) where

is the mean absolute deviation.Figure3shows the curve of the robust z-scores for the observations of scale (Figure2).Abnormal observations representing a clear eye gesture change(open or closed),are marked on the curve.

Figure3.obtained using the observations

in Figure2for scale

The robust z-score for the image frame is then de?ned to be:(3)

where is the number of images,and is the robust z-score corresponding to observation

at scale.

Figure4.Total z-score

The normalized values,estimated using the observa-tions of Figure2are shown in Figure4.As it can be seen, the estimated values follow the eye gesture shown in Fig-ure1:the lowest value corresponds to an open eye,and the highest value to a closed eye.The scores(Figure4)can be used as values for the AU43for eye animation(see Fig-ure10).

5.Experimental Results

For testing the performance of the proposed approach,it has been applied to the Suzie and Miss America sequences,for150frames each.The left and right eye positions were estimated using the tracking algorithm,and the eye gesture analysis using the opening sieve decomposition.Figure5 shows the results

of the estimated left eye gesture for Suzie.

Figure5.Estimated for the Suzie sequence

By labeling the frames where the eye is closed, groundtruth is created.This is illustrated for the left eyes of the Miss America sequence in Figure7.The choice of an optimal threshold on the graph of the estimated parameter depends on the miss detections and false alarms of the re-ceiver operating curve(ROC)(Figure6).The ROC shows the number of miss detection(de?ned as‘eye not estimated as closed but labeled as closed’)and of false alarm(de?ned as‘eye estimated as closed and not labeled as closed’),plot-ted as a function of the threshold.The optimal threshold on the graph is situated at the joint minimum of both curves. It is indicated on the graph in Figure7.

Figure6.Receiver operating curves for the Miss America sequence

Figure7.Closed eye groundtruth(double underlined parts),Estimated and optimal treshold for the Miss America sequence

For all frames,the decision between a closed and an open eye as well as the automatical estimation of the AU43value for face animation,have been carried out successfully by the proposed algorithm.

The tracking algorithm can also be applied on the mouth corners.Images of the mouth are then used to detect vertical opening,as in the eye images(Figure8).Although the graph can be considered as a?rst estimate of the mouth state (Figure9),at the optimal threshold more miss detections and false alarms are found then for the eyes.This is because

Figure 8.Estimated

for the mouth

of the Miss America sequence

(a)

(b)

(c)

Figure 9.(a)Open mouth (b)Closed mouth

and (c)miss detected closed mouth

a mouth has much more ways to open and re?ections make the lips look more white (as in Figure 9(c)).

6.Discussion -conclusions

The paper presents an approach for automatic estima-tion of eye gesture.By using morphological scale-space,

information about edges and regions of the eye is preserved.We have used a similar approach as Matthews et al.but at the same time,we proposed some necessary modi?ca-tions to incorporate additional information about the spatio-temporal nature of an eye gesture.For the estimation of an-imation parameters,we introduce an observation measure that depends both on scale and time and re?ects more ef?-ciently each eye gesture state.It is shown that in a typical video sequence,we can automatically detect the eyes re-gions and estimate eyes gestures states,for almost all the images.In our future work,we will consider the complete deformation of the facial feature.

References

[1]P.Antoszczyszyn,J.Hannah,and P.Grant.Automatic ?t-ting and tracking of facial features in head-and-shoulders se-quences.In Proceedings IEEE International Conference on Acoustics ,pages 2609–2612,May 1998.

[2]J.Bangham,P.Ling,and R.Harvey.Scale-space from non-linear ?lters.IEEE Transactions on Pattern Analysis and Machine Intelligence ,18(5):520–528,May 1996.

[3]J.Bangham,P.Chardaire,C.Pye,and P.Ling.Multiscale

nonlinear decomposition:The sieve decomposition theo-rem.IEEE Transactions on Pattern Analysis and Machine Intelligence ,18(5):529–539,May 1996.

[4] B.Bascle and A.Blake.Separability of pose and expression

in facial tracking and animation.In IEEE Proceedings 6th.International Conference on Computer Vision ,1998.

[5]R.

Brunelli and T.Poggio.

Face recognition:Features ver-

sus templates.IEEE Transactions on Pattern

Analysis and Machine Intelligence

,15(10):1024–1053,Oct.

1993.

Figure 10.Left eye animation results using as values for the AU43,related to the se-quence given in Figure 1

[6]R.Chellapa,C.Wilson,and S.Sirokey.Human and ma-chine recognition of faces:a survey.Proceedings IEEE ,83(5):705–740,1995.

[7]T.Darrell,I.Essa,and A.Pentland.Task-speci?c ges-ture analysis in real time using interpolated views.IEEE Transactions on Pattern Analysis and Machine Intelligence ,18(2):1236–1242,Dec.1996.

[8]I.Essa and A.Pentland.Facial expression recognition using

image motion.In Motion-based Recognition M.Shah and R.Jain ,pages 271–298.Kluwer Academic Publishers,1997.[9]S.Leps?y and S.Curinga.Conversion of articulatory param-eters into active shape model coef?cients for lip motion rep-resentation and synthesis.Signal Processing:Image Com-munication ,13:209–225,1998.

[10]T.Lindeberg.Scale space theory in computer vision .

Kluwer,1994.

[11]I.Matthews,J.Bangham,R.Harvey,and S.Cox.A compar-ison of active shape model and scale decomposition based features for visual speech recognition.In Proceedings Eu-ropean Conference on Computer Vision:Lecture Notes in Computer Science ,pages 514–528.Springer-Verlag,1998.[12] F.Parke and https://www.360docs.net/doc/9012750615.html,puter Facial Animation .A K

Peters,1996.

[13]I.Ravyse,M.Reinders,J.Cornelis,and H.Sahli.Eye ges-ture estimation.In IEEE Benelux Signal Processing Chap-ter,Signal Processing Symposium,SPS2000(March 23-24,2000)Hilvarenbeek,The Netherlands ,page 4,Mar.2000.[14]M.Reinders.Eye tracking by template matching using an

automatic codebook generation scheme.In Proceedings of third Annual Conference of the Advanced School for Com-puting and Imaging (ASCI),Heijen,The Netherlands ,pages 215–221,Jun.1997.

[15] A.Yuille and P.Hallinan.Deformable templates.In Active

Vision,A.Blake and A.Yuille Edt.,pages 21–38.MIT press,1993.