Learning to Classify Email into Speech Acts

Learning to Classify Email into Speech Acts

Abstract

It is often useful to classify email accord-

ing to the intent of the sender (e.g., "pro-

pose a meeting", "deliver information").

We present experimental results in learn-

ing to classify email in this fashion,

where each class corresponds to a verb-

noun pair taken from a predefined ontol-

ogy describing typical “email speech

acts”. We demonstrate that, although

this categorization problem is quite dif-

ferent from “topical” text classification,

certain categories of messages can none-

theless be detected with high precision

(above 80%) and reasonable recall (above

50%) using existing text-classification

learning methods. This result suggests

that useful task-tracking tools could be

constructed based on automatic classifi-

cation into this taxonomy.

1Introduction

In this paper we discuss using machine learn-ing methods to classify email according to the intent of the sender. In particular, we classify emails according to an ontology of verbs (e.g., propose, commit, deliver) and nouns (e.g., infor-mation, meeting, task), which jointly describe the “speech act” implicit in the email.

A method for accurate classification of email into such categories would have many potential benefits. For instance, it could be used to help an email user track the status of ongoing joint activi-ties. Delegation and coordination of joint tasks is a time-consuming and error-prone activity, and the cost of errors is high: for people involved in many joint activities, it is not uncommon that commitments are forgotten, deadlines are missed, and opportunities are wasted because of a failure to properly track, delegate, and prioritize sub-tasks. The classification methods we consider could be used to partially automate this sort of activity tracking. A hypothetical example of an email assistant that works along these lines is

Figure 1 - Dialog with a hypothetical email assistant that can detect speech acts. Dashed boxes indicate outgoing messages.

2Related Work

Our research builds on earlier work defining il-locutionary points of speech acts (Searle, 1975), and relating such speech acts to email and work-flow tracking (Winograd, 1987, Flores & Lud-low, 1980, Weigant et al, 2003). Winograd suggested that research explicating the speech-act based “language-action perspective” on human communication could be used to build more tools for coordinating joint activities. The Coordinator (Winograd, 1987) was one such system, in which users augmented email messages with additional annotations indicating intent.

While such systems have been useful in lim-ited contexts, they have also been criticized as cumbersome: by forcing users to conform to a particular formal system, they constrain commu-nication and make it less natural and flexible (Schoop, 2001); in short, users often prefer un-structured email interactions (Camino et al. 1998). We note that these difficulties are avoided if messages can be automatically annotated by intent.

Murakoshi et al. (1999) propose an annotation scheme for email broadly similar to ours called a

“deliberation tree”, and propose an algorithm for constructing deliberation trees automatically, but theirs was not quantitatively evaluated, and ap-pears to be specific to Japanese-language emails. Prior research on machine learning for text classification has primarily considered classifica-tion of documents by topic (Lewis, 1992; Yang, 1999), but also has addressed sentiment detection (Peng et al., 2002; Weibe et al., 2001) and au-thorship attribution (e.g., Argamon et al, 2003). There has been some previous use of machine learning to classify email messages (Cohen 1996; Sahami et al., 1998; Rennie, 2000; Segal & Kephart, 2000); however, to our knowledge, none of these systems have investigated learning methods for assigning speech acts to email. In-stead, email is generally classified into folders (i.e., according to topic) or according to whether or not it is “spam”. Learning systems have been previously used to automatically detect acts in conversational speech (e.g. Finke et al., 1998).

3An Ontology of Email Acts

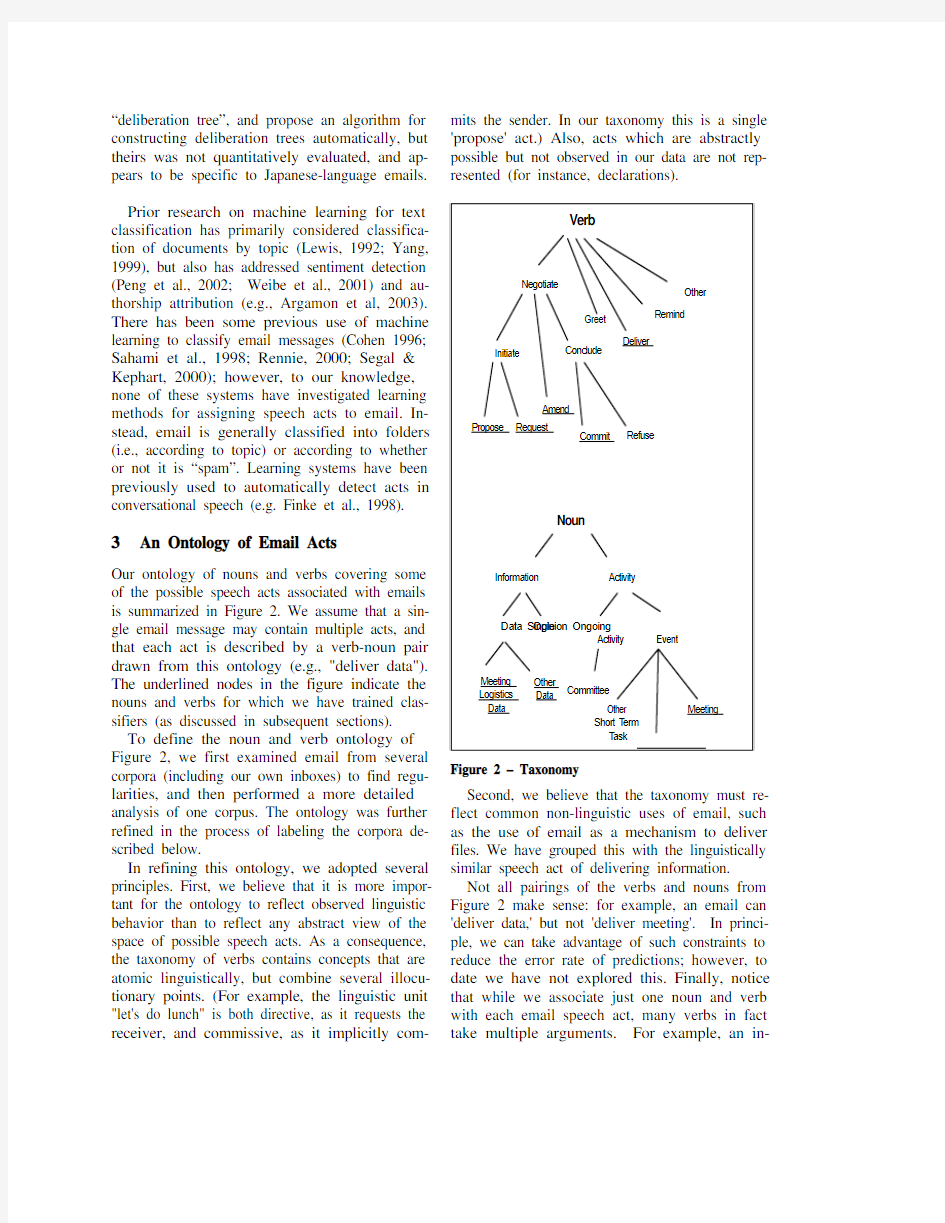

Our ontology of nouns and verbs covering some of the possible speech acts associated with emails is summarized in Figure 2. We assume that a sin-gle email message may contain multiple acts, and that each act is described by a verb-noun pair drawn from this ontology (e.g., "deliver data"). The underlined nodes in the figure indicate the nouns and verbs for which we have trained clas-sifiers (as discussed in subsequent sections).

To define the noun and verb ontology of Figure 2, we first examined email from several corpora (including our own inboxes) to find regu-larities, and then performed a more detailed analysis of one corpus. The ontology was further refined in the process of labeling the corpora de-scribed below.

In refining this ontology, we adopted several principles. First, we believe that it is more impor-tant for the ontology to reflect observed linguistic behavior than to reflect any abstract view of the space of possible speech acts. As a consequence, the taxonomy of verbs contains concepts that are atomic linguistically, but combine several illocu-tionary points. (For example, the linguistic unit "let's do lunch" is both directive, as it requests the receiver, and commissive, as it implicitly com-mits the sender. In our taxonomy this is a single 'propose' act.) Also, acts which are abstractly possible but not observed in our data are not rep-resented (for instance, declarations).

Figure 2 – Taxonomy

Second, we believe that the taxonomy must re-flect common non-linguistic uses of email, such as the use of email as a mechanism to deliver files. We have grouped this with the linguistically similar speech act of delivering information.

Not all pairings of the verbs and nouns from Figure 2 make sense: for example, an email can 'deliver data,' but not 'deliver meeting'. In princi-ple, we can take advantage of such constraints to reduce the error rate of predictions; however, to date we have not explored this. Finally, notice that while we associate just one noun and verb with each email speech act, many verbs in fact take multiple arguments. For example, an in-

stance of the verb 'deliver' can involve a deliv-erer, a deliveree, and a delivered-item. Fortu-nately, some role fillers are often obvious from the email context (e.g., the email sender fills the deliverer role, the receiver fills the deliveree role, and hence the noun we wish to associate with 'deliver' is the noun filling the 'delivered-item' role).

The verbs in Figure 1 are defined as follows.

A request asks (or orders) the recipient to per-form some activity. A question is also considered a request (for delivery of information).

A propose message proposes a joint activity, i.e., asks the recipient to perform some activity and commits the sender as well, provided the re-cipient agrees to the request. An email suggest-ing a joint meeting is a typical example.

An amend message amends an earlier proposal. Like a proposal, the message involves both a commitment and a request. However, while a proposal is associated with a new task, an amendment is a suggested modification of an already-proposed task.

A commit message commits the sender to some future course of action, or confirms the senders' intent to comply with some previously described course of action.

A d eliver message delivers something, e.g., some information, a PowerPoint presentation, the URL of a website, the answer to a question, a message sent "FYI”, or an opinion.

The refuse, greet, and remind verbs occurred infrequently in our data, and will not be dis-cussed further here.

The nouns in Figure 2 constitute possible ob-jects for the email speech act verbs. The nouns fall into two broad categories.

Information nouns are associated with email speech acts described by the verbs Deliver,Re-mind and Amend, in which the email explicitly contains information. We also associate informa-tion nouns with the verb Request, where the email contains instead a description of the needed information (e.g., "Please send your birthdate." versus "My birthdate is …". The request act is actually for a 'deliver information' activity). In-formation includes data believed to be fact as well as opinions, and also attached data files Activity nouns are generally associated with email speech acts described by the verbs Pro-pose, Request, Commit, and Refuse. Activities include meetings, as well as longer term activities such as committee memberships.

Notice every email speech act is itself an ac-tivity. The

4Categorization Results

4.1Corpora

The corpora used in the experiments consist of four different datasets: N01F3, N02F2, N03F2 and PW CALO. The first three datasets are sub-sets of a larger corpus, the CSpace email corpus, which contains approximately 15,000 email mes-sages collected from a Management Game at Carnegie Mellon University. In this game, 277 MBA students, organized in approximately 50 teams of four to six members, ran simulated companies in different market scenarios over a 14-week period (Kraut et al.). N02F2, N01F3 and N03F2 are collections of all email from three different teams, and contain 351, 341 and 443 different email messages respectively.

The PW CALO corpus was produced by a simulated game at SRI. During four days, a group of six players assumed different work roles (e.g. project leader, finance manager, researcher, ad-ministrative assistant, etc). There are 222 differ-ent email messages from this corpus.

All messages were preprocessed by manually removing quoted material, attachments, and non-subject header information.

4.2Inter-Annotator Agreement

Each message may be annotated with several labels, as it may contain more than one speech act. To evaluate inter-annotator agreement, we double-labeled N03F2 for the verbs Deliver, Commit, Request, Amend, and Propose, and the

noun, Meeting, and computed the kappa statistic (Carletta, 1996) for each of these, defined as R R

A ??=1κ where A is the empirical probability of agreement on a category, and R is the probability of agree-ment for two annotators that label documents at random (with the empirically observed frequency of each label). Hence kappa ranges from -1 to +1. The results in Table 1 show that agreement is good, but not perfect.

Email Act Kappa

Meeting 0.82

Deliver 0.75

Commit 0.72 Request 0.81 Amend 0.83 Propose 0.72

Table 1 - Inter-Annotator Agreement on N03F2. We also took doubly-annotated messages which had only a single verb label and con-structed the 5-class confusion matrix for the two annotators shown in Table 2. Note kappa values are somewhat higher for the shorter one-act mes-sages.

Req Prop Amd Cmt Dlv kappa Req 55 0 0 0 0 0.97

Prop 1 11 0 0 1 0.77

Amd 0 1 15 0 0 0.87

Cmt 1 3 1 24 4 0.78

Dlv 1 0 2 3 135 0.91

Table 2 - Inter-annotator agreement on documents

with only one category.

4.3 Learnability of Categories

Representation of documents. To assess the types of message features that are most important for prediction, we adopted Support Vector Ma-chines (Joachims, 2001) as our baseline learning method, and a TFIDF-weighted bag-of-words as a baseline representation for messages. We then conducted a series of experiments with the N03F2 corpus only to explore the effect of dif-ferent representations. We noted that the most discriminating words for most of these categories were common words,

not the low-to-intermediate frequency words that are most discriminative in topical classification.

This suggested that the TFIDF weighting was

inappropriate, but that a bigram representation might be more informative. Experiments showed that adding bigrams to an unweighted bag of words representation slightly improved perform-ance, especially on Deliver . These results are shown in Table 4 on the rows marked “no tfidf” and “bigrams”. (The TFIDF-weighted SVM is shown in the row marked “baseline”, and the ma-jority classifier in the row marked “default”.)

Examination of messages suggested other possi-ble improvements. Since much negotiation in-volves timing, we ran a hand-coded extractor for time and date expressions on the data, and ex-tracted as features the number of time expres-sions in a message, and the words that occurred near a time (for instance, one such feature is “the

word ‘before’ appears near a time”). These re-sults appear in the row marked “times”. Simi-larly, we ran a part of speech (POS) tagger and added features for words appearing near a pro-noun or proper noun (“personPhrases” in the ta-ble), and also added POS counts.

To derive a final representation for each cate-gory, we pooled all features that improved per-formance over “no tfidf” for that category. We

then compared performance of these document representations to the original TFIDF bag of words baseline on the (unexamined) N02F2 and N01F3 corpora. As Table 3 shows, substantial improvement with respect to F1 and kappa was

obtained by adding these additional features over the baseline representation.This is surprising given previous experiments with bigrams for topical text classification (Scott & Matwin, 1999) and sentiment detection (Pang et al., 2002). Learning methods. In another experiment,

we fixed the document representation to be un-weighted word frequency counts and varied the learning algorithm. In most these experiments, we pooled all the data from the four corpora, a total of 1357 messages, and since the nouns and verbs are not mutually exclusive, we formulated the classification task as a set of several binary decision problems, one for each verb. The learners used were the following. VP is an implementation of the voted perceptron algo-

rithm (Freund & Schapire, 1999). DT is a simple decision tree learning system, which learns trees of depth at most five, and chooses splits to

maximize the function ()

00112?+?++W W W W

suggested by Schapire and Singer (1999) as an appropriate objective for “weak learners”. AB is an implementation of the confidence-rated boost-ing method described by Singer and Schapire (1999), used to boost the DT algorithm 10 times. SVM is a support vector machine with a linear

kernel (as used above).

Act

VP AB SVM

DT

Req (450/907) Error F1 0.25 0.58 0.22 0.65 0.23 0.64 0.20 0.69 Prop

(140/1217) Error F1 0.11 0.19 0.12 0.26 0.12 0.44 0.10 0.13 Dlv

(873/484) Error F1 0.26 0.80 0.28 0.78 0.27 0.78 0.30

0.76 Cmt

(208/1149) Error F1 0.15 0.21 0.14 0.44 0.17 0.47 0.15 0.11 Directive (605/752) Error F1 0.25 0.72 0.23 0.73 0.23 0.73 0.19 0.78 Commis-sive

(993/364) Error F1 0.23 0.84 0.23 0.84 0.24 0.83 0.22 0.85 Meet

(345/1012) Error F1 0.187 0.573 0.17 0.62 0.14 0.72 0.180.60 dData

(223 / 1134)

Error F1

0.11 0.58

0.12 0.58

0.13 0.59

0.13 0.57

Table 3 – Learning on the complete corpus.

Table 3 reports the results on the most com-mon verbs, using 5-fold cross-validation to assess accuracy. One surprise was that DT (which we had intended merely as a base learner for AB ) works surprisingly well, particularly at detecting requests, while AB seldom improves much over DT. We conjecture that the bias towards large-margin classifiers that is followed by SVM, AB, and VP (and which has been so successful in topic-oriented text classification) may be less appropriate for this task, perhaps because posi-tive and negative classes are not clearly separated (as suggested by substantial inter-annotator dis-agreement).

Further results are shown in Figure 3, which gives precision-recall curves for many of these classes. Note that the lowest recall level in these graphs corresponds to the precision of random guessing. These graphs indicate that high-precision predictions can be made for the top-level of the verb hierarchy, as well as verbs Re-quest and Deliver, if one is willing to slightly reduce recall.

Figure 3 - Precision/Recall for Commissive act

Figure 4 - Precision/Recall for Directive act

using AdaBoost

Transferability. Another important question to investigate is the generality of these classifiers - that is, the range of corpora to which they can be accurately applied. Is it possible to train a single set of email-act classifiers that work for many users, or is it necessary to train individual classi-fiers for each user? To explore this issue we

trained a DT classifier for Directive emails on the NF01F3 corpus, and tested it on the NF02F2 cor-pus; trained the same classifier on NF02F2 and tested it on NF01F3; and also performed a 5-fold

Results on NF032 Commit Deliver Directive Error F1 kappa Error F1 Kappa Error F1 Kappa Default 12.2 - - 36.8 - - 48.8 - Baseline SVM 10.8 25.0 22.0 29.1 49.8 31.3 23.9 75.2 52.1 no tfidf 13.1 47.3 39.8 31.8 58.4 32.7 24.4 74.6 51.7 +bigrams 11.1 46.1 40.2 25.5 66.1 45.6 22.1 76.0 55.6 +times 12.9 43.6 36.3 29.6 60.1 36.7 25.6 73.2 48.9 +POSTags 12.4 48.6 41.5 29.3 61.8 38.0 23.7 75.4 52.5 +personPhrases 12.9 41.2 34.1 29.3 61.1 37.5 25.1 73.4 49.7

Results on NF01F3 and NF02F2 Default 14.9 31.7 40.4 Baseline SVM 14.2 10.1 9.2 22.0 56.3 42.7 24.3 66.1 47.7 All ‘useful’ features 14.7 42.0 33.9 22.1 64.0 48.0 20.4 73.3 56.9

Table 4 - Experiments with different document representations.

cross-validation experiment within each corpus. (NF02F2 and NF01F3 are for disjoint sets of us-ers, but are approximately the same size.) We then performed the same experiment with VP for Deliver verbs and SVM for Commit verbs (in each case picking the top-performing learner with respect to F1). The results are shown in Table 5.

Test Data DT/Directive 1f3 2f2 Train Data Error F1 Error F1 1f3 25.1 71.6 16.4 72.8 2f2 20.1 68.8 18.8 71.2 VP/Deliver 1f3 30.1 55.1 21.1 56.1 2f2 35.0 25.4 21.1 35.7 SVM/Commit 1f3 23.4 39.7 15.2 31.6 2f2

31.9 27.3 16.4 15.1 Table 5 - Transferability of classifiers

If learned classifiers were highly specific to a particular set of users, one would expect that the diagonal entries of these tables (the ones based on cross-validation within a corpus) would ex-hibit much better performance than the off-diagonal entries. In fact, no such pattern is shown. For Directive verbs, performance is simi-lar across all table entries, and for Deliver and Commit , it seems to be somewhat better to train on NF01F3 regardless of the test set.

4.4 Future Directions

None of the algorithms or representations dis-cussed above take into account the context of an

email message, which intuitively is important in detecting implicit speech acts. A plausible notion of context is simply the preceding message in an email thread.

Exploiting this context is non-trivial for sev-eral reasons. Detecting threads is difficult; al-though email headers contain a “reply-to” field, users often use the “reply” mechanism to start what is intuitively a new thread. Also, since email is asynchronous, two or more users may reply simultaneously to a message, leading to a thread structure which is a tree, rather than a se-quence. Finally, most sequential learning models assume a single category is assigned to each in-stance---e.g., (Ratnaparkhi, 1999)--whereas our

scheme allows multiple categories. Classification of emails according to our verb-noun ontology constitutes a special case of a gen-eral family of learning problems we might call factored classification problems , as the classes (email speech acts) are factored into two features (verbs and nouns) which jointly determine this class. From a practical viewpoint, a variety of real-world text classification problems can be naturally expressed as factored problems, and from a theoretical viewpoint, the additional struc-ture may allow construction of new, more effec-tive algorithms. For example, the factored classes provide a

more elaborate structure for generative probabil-istic models, such as those assumed by Na?ve

Bayes. For instance, in learning email acts, one might assume words were drawn from a mixture distribution with one mixture component pro-duces words conditioned on the verb class factor, and a second mixture component generates words conditioned on the noun (see Blei et al (2003) for a related mixture model. Alternatively, models of the dependencies between the different factors (nouns and verbs) might also be used to improve classification accuracy, for instance by building into a classifier the knowledge that some nouns and verbs are incompatible.

The fact that an email can contain multiple email speech acts almost certainly makes learn-ing more difficult: in fact, disagreement between human annotators is generally higher for longer messages. This problem could be addressed by more detailed annotation: rather than annotating each message with all the acts it contains, human annotators could label smaller message segments (say, sentences or paragraphs). An alternative to more detailed (and expensive) annotation would be to use learning algorithms that implicitly seg-ment a message. As an example, another mixture model formulation might be used, in which each mixture component corresponds to a single verb category.

5Concluding Remarks

We have presented an ontology of “email speech acts” that is designed to capture some im-portant properties of a central use of email: nego-tiating and coordinating joint activities. Unlike previous attempts to combine speech act theory with email (Winograd, 1987; Flores and Ludlow, 1980), we propose a system which passively ob-serves email and automatically classifies it by intention. This reduces the burden on the users of the system, and avoids sacrificing the flexibility and socially desirable aspects of informal, natural language communication.

This problem also raises a number of interest-ing research issues. This categorization problem is quite different from “topical” text classifica-tion, in terms of the types of features that are most informative. We show that part of speech tagging and entity extraction can be used to im-prove classification performance; we leave open the question of whether other types of linguistic analysis would be useful. Predicting implicit speech acts requires context, (which makes the prediction problem a sequential task) and the la-bels assigned to messages have non-trivial struc-ture; we also leave open the question of whether these properties can be effectively exploited.

Notwithstanding these limitations in the scope of our study, our experiments show that many categories of messages can be detected, with high precision and moderate recall, using existing text-classification learning methods. This suggests that useful task-tracking tools could be constructed based on automatic classifi-ers—a potentially important practical application. References

S. Argamon, M. ?ari? and S. S. Stein. (2003). Style mining of electronic messages for multiple authorship discrimination: first results. Proceedings of the 9th ACM SIGKDD, Washington, D.C.

V. Bellotti, N. Ducheneaut, M. Howard and I. Smith. (2003). Taking email to task: the design and evalua-

tion of a task management centered email tool. Pro-ceedings of the Conference on Human Factors in Computing Systems, Ft. Lauderdale, Florida.

D. Blei, T. Griffiths, M. Jordan, and J. Tenenbaum. (2003). Hierarchical topic models and the nested Chi-nese restaurant process. Advances in Neural Informa-tion Processing Systems, 16, MIT Press.

B. M. Camino, A. E. Millewski, D. R. Millen and T. M. Smith. (1998). Replying to email with structured responses. International Journal of Human-Computer Studies. Vol. 48, Issue 6, pp 763 – 776.

J. Carletta. (1996). Assessing Agreement on Classifi-cation Tasks: The Kappa Statistic. Computational Linguistics, Vol. 22, No. 2, pp 249-254.

W. W. Cohen. (1996). Learning Rules that Classify E-Mail. Proceedings of the 1996 AAAI Spring Sympo-sium on Machine Learning and Information Access, Palo Alto, California.

M. Finke, M. Lapata, A. Lavie, L. Levin, L. May-fieldTomokiyo, T. Polzin, K. Ries, A. Waibel and K. Zechner. (1998). CLARITY: Inferring Discourse Structure from Speech. In Applying Machine Learn-

ing to Discourse Processing, AAAI'98.

F. Flores, and J.J. Ludlow. (1980). Doing and Speak-

ing in the Office. In: G. Fick, H. Spraque Jr. (Eds.). Decision Support Systems: Issues and Challenges, Pergamon Press, New York, pp. 95-118.

Y. Freund and R. Schapire. (1999). Large Margin Classification using the Perceptron Algorithm. Ma-chine Learning 37(3), 277—296.

T. Joachims. (2001). A Statistical Learning Model of Text Classification with Support Vector Machines. Proc. of the Conference on Research and Develop-ment in Information Retrieval (SIGIR), ACM, 2001.

R. E. Kraut, S. R. Fussell, F. J. Lerch, and J. A. Espinosa. (under review). Coordination in teams: evi-dence from a simulated management game. To appear in the Journal of Organizational Behavior.

D. D. Lewis. (1992). Representation and Learning in Information Retrieval. PhD Thesis, No. 91-93, Com-puter Science Dept., Univ of Mass at Amherst

A. McCallum, D. Freitag and F. Pereira. (2000). Maximum Entropy Markov Models for Information Extraction and Segmentation. Proc. of the 17th Int’l Conf. on Machine Learning, Nashville, TN.

B. Pang, L. Lee and S. Vaithyanathan. (2002). Thumbs up? Sentiment Classification using Machine Learning Techniques. Proc. of the 2002 Conference on Empirical Methods in Natural Language Process-ing (EMNLP), pp 79-86.

A. E. Milewski and T. M. Smith. (1997). An Experi-mental System For Transactional Messaging. Proc. of the international ACM SIGGROUP conference on Supporting group work: the integration challenge, pp. 325-330.

H. Murakoshi, A. Shimazu and K. Ochimizu. (1999). Construction of Deliberation Structure in Email Communication,. Pacific Association for Computa-tional Linguistics, pp. 16-28, Waterloo, Canada.

A. Ratnaparkhi. (1999). Learning to Parse Natural Language with Maximum Entropy Models. Machine Learning, Vol. 34, pp. 151-175.

J. D. M. Rennie. (2000). Ifile: An Application of Ma-chine Learning to Mail Filtering. Proc. of the KDD-2000 Workshop on Text Mining, Boston, MA.

M. Sahami, S. Dumais, D. Heckerman and E. Horvitz. (1998). A Bayesian Approach to Filtering Junk E-Mail. AAAI'98 Workshop on Learning for Text Cate-gorization. Madison, WI.

M. Schoop. (2001). An introduction to the language-action perspective. SIGGROUP Bulletin, Vol. 22, No. 2, pp 3-8.

S. Scott and S. Matwin. (1999). Feature engineering for text classification. Proc. of 16th International Con-ference on Machine Learning, Bled, Slovenia. J. R. Searle. (1975). A taxonomy of illocutionary acts. In K. Gunderson (Ed.), Language, Mind and Knowl-edge, pp. 344-369. Minneapolis: University of Min-nesota Press.

R. B. Segal and J. O. Kephart. (2000). Swiftfile: An intelligent assistant for organizing e-mail. In AAAI 2000 Spring Symposium on Adaptive User Interfaces, Stanford, CA.

Y. Yang. (1999). An Evaluation of Statistical Ap-proaches to Text Categorization. Information Re-trieval, Vol. 1, Numbers 1-2, pp 69-90.

S. Wermter and M. L?chel. (1996). Learning dialog act processing. Proc. of the International Conference on Computational Linguistics, Kopenhagen, Denmark. R. E. Schapire and Y. Singer. (1998). Improved boost-ing algorithms using confidence-rated predictions. The 11th Annual Conference on Computational Learning Theory, Madison, WI.

H. Wiegend, G. Goldkuhl, and A. de Moor. (2003). Proc. of the Eighth Annual Working Conference on Language-Action Perspective on Communication Modelling (LAP 2003), Tilburg, The Netherlands.

J. Wiebe, R. Bruce, M. Bell, M. Martin and T. Wilson. (2001). A Corpus Study of Evaluative and Speculative Language. Proceedings of the 2nd ACL SIGdial Workshop on Discourse and Dialogue. Aalborg, Denmark.

T. Winograd. 1987. A Language/Action Perspective on the Design of Cooperative Work. Human-Computer Interactions, 3:1, pp. 3-30.

S. Whittaker, Q. Jones, B. Nardi, M. Creech, L. Terveen, E. Isaacs and J. Hainsworth. (in press). Us-ing Personal Social Networks to Organize Communi-cation in a Social desktop. To appear in Transactions on Human Computer Interaction.

最新The_Monster课文翻译

Deems Taylor: The Monster 怪才他身材矮小,头却很大,与他的身材很不相称——是个满脸病容的矮子。他神经兮兮,有皮肤病,贴身穿比丝绸粗糙一点的任何衣服都会使他痛苦不堪。而且他还是个夸大妄想狂。他是个极其自负的怪人。除非事情与自己有关,否则他从来不屑对世界或世人瞧上一眼。对他来说,他不仅是世界上最重要的人物,而且在他眼里,他是惟一活在世界上的人。他认为自己是世界上最伟大的戏剧家之一、最伟大的思想家之一、最伟大的作曲家之一。听听他的谈话,仿佛他就是集莎士比亚、贝多芬、柏拉图三人于一身。想要听到他的高论十分容易,他是世上最能使人筋疲力竭的健谈者之一。同他度过一个夜晚,就是听他一个人滔滔不绝地说上一晚。有时,他才华横溢;有时,他又令人极其厌烦。但无论是妙趣横生还是枯燥无味,他的谈话只有一个主题:他自己,他自己的所思所为。他狂妄地认为自己总是正确的。任何人在最无足轻重的问题上露出丝毫的异议,都会激得他的强烈谴责。他可能会一连好几个小时滔滔不绝,千方百计地证明自己如何如何正确。有了这种使人耗尽心力的雄辩本事,听者最后都被他弄得头昏脑涨,耳朵发聋,为了图个清静,只好同意他的说法。他从来不会觉得,对于跟他接触的人来说,他和他的所作所为并不是使人产生强烈兴趣而为之倾倒的事情。他几乎对世间的任何领域都有自己的理

论,包括素食主义、戏剧、政治以及音乐。为了证实这些理论,他写小册子、写信、写书……文字成千上万,连篇累牍。他不仅写了,还出版了这些东西——所需费用通常由别人支付——而他会坐下来大声读给朋友和家人听,一读就是好几个小时。他写歌剧,但往往是刚有个故事梗概,他就邀请——或者更确切说是召集——一群朋友到家里,高声念给大家听。不是为了获得批评,而是为了获得称赞。整部剧的歌词写好后,朋友们还得再去听他高声朗读全剧。然后他就拿去发表,有时几年后才为歌词谱曲。他也像作曲家一样弹钢琴,但要多糟有多糟。然而,他却要坐在钢琴前,面对包括他那个时代最杰出的钢琴家在内的聚会人群,一小时接一小时地给他们演奏,不用说,都是他自己的作品。他有一副作曲家的嗓子,但他会把著名的歌唱家请到自己家里,为他们演唱自己的作品,还要扮演剧中所有的角色。他的情绪犹如六岁儿童,极易波动。心情不好时,他要么用力跺脚,口出狂言,要么陷入极度的忧郁,阴沉地说要去东方当和尚,了此残生。十分钟后,假如有什么事情使他高兴了,他就会冲出门去,绕着花园跑个不停,或者在沙发上跳上跳下或拿大顶。他会因爱犬死了而极度悲痛,也会残忍无情到使罗马皇帝也不寒而栗。他几乎没有丝毫责任感。他似乎不仅没有养活自己的能力,也从没想到过有这个义务。他深信这个世界应该给他一条活路。为了支持这一信念,他

新版人教版高中语文课本的目录。

必修一阅读鉴赏第一单元1.*沁园春?长沙……………………………………毛泽东3 2.诗两首雨巷…………………………………………戴望舒6 再别康桥………………………………………徐志摩8 3.大堰河--我的保姆………………………………艾青10 第二单元4.烛之武退秦师………………………………….《左传》16 5.荆轲刺秦王………………………………….《战国策》18 6.*鸿门宴……………………………………..司马迁22 第三单元7.记念刘和珍君……………………………………鲁迅27 8.小狗包弟……………………………………….巴金32 9.*记梁任公先生的一次演讲…………………………梁实秋36 第四单元10.短新闻两篇别了,“不列颠尼亚”…………………………周婷杨兴39 奥斯维辛没有什么新闻…………………………罗森塔尔41 11.包身工………………………………………..夏衍44 12.*飞向太空的航程……………………….贾永曹智白瑞雪52 必修二阅读鉴赏第一单元1.荷塘月色…………………………………..朱自清2.故都的秋…………………………………..郁达夫3.*囚绿记…………………………………..陆蠡第二单元4.《诗经》两首氓采薇5.离骚………………………………………屈原6.*《孔雀东南飞》(并序) 7.*诗三首涉江采芙蓉《古诗十九首》短歌行……………………………………曹操归园田居(其一)…………………………..陶渊明第三单元8.兰亭集序……………………………………王羲之9.赤壁赋……………………………………..苏轼10.*游褒禅山记………………………………王安石第四单元11.就任北京大学校长之演说……………………..蔡元培12.我有一个梦想………………………………马丁?路德?金1 3.*在马克思墓前的讲话…………………………恩格斯第三册阅读鉴赏第一单元1.林黛玉进贾府………………………………….曹雪芹2.祝福………………………………………..鲁迅3. *老人与海…………………………………….海明威第二单元4.蜀道难……………………………………….李白5.杜甫诗三首秋兴八首(其一) 咏怀古迹(其三) 登高6.琵琶行(并序)………………………………..白居易7.*李商隐诗两首锦瑟马嵬(其二) 第三单元8.寡人之于国也…………………………………《孟子》9.劝学……………………………………….《荀子》10.*过秦论…………………………………….贾谊11.*师说………………………………………韩愈第四单元12.动物游戏之谜………………………………..周立明13.宇宙的边疆………………………………….卡尔?萨根14.*凤蝶外传……………………………………董纯才15.*一名物理学家的教育历程……………………….加来道雄第四册阅读鉴赏第一单元1.窦娥冤………………………………………..关汉卿2.雷雨………………………………………….曹禹3.*哈姆莱特……………………………………莎士比亚第二单元4.柳永词两首望海潮(东南形胜) 雨霖铃(寒蝉凄切) 5.苏轼词两首念奴娇?赤壁怀古定风波(莫听穿林打叶声) 6.辛弃疾词两首水龙吟?登建康赏心亭永遇乐?京口北固亭怀古7.*李清照词两首醉花阴(薄雾浓云愁永昼) 声声慢(寻寻觅觅) 第三单元8.拿来主义……………………………………….鲁迅9.父母与孩子之间的爱……………………………..弗罗姆10.*短文三篇热爱生

大学英语四六级写作万能模板

大学英语四六级写作体裁 1. Argumentation 2. Report 3. Caricature Writing 4. Proverb Commenting Argumentation 1. 文章的要求:On Students' Evalation of Teachers A:近年来,很多大学把学生对教师的评价纳入评估体系。B:就其利弊而言,人们之间存在争议。C: 你的看法。 On Students' Evaluation of Teachers With the reformation and development of higher education, many universities have gradually shifted their management from the traditional system of academic year to the system of credit, and from teacher-centered to student -centered teaching. As a result,teachers are now managed on the basis of students' evaluation, which has aroused heated disputes. Many people agree that students' opinion and appraisals are crucial to both the teaching process and the teacher-student relationship. For one thing, the teacher may reflect on his teaching, and then change accordingly.For another, mutual undersatnding is enhanced. However, many experts worry that some of the judgements are rather subjective, and even strongly biased. To make things worse, some students take it as a game--" anyway, my ideas do not count at all". Consequently, nobody will benefit from the valueless feedback. As far as I am concerned, students' views should be taken into consideration, but the views should not be taken as decisive factors when it comes to evaluating a teacher. The improvement of the mechanism requires the change of notion about teaching. We used to relate the evaluation to the employment of a teacher, but if we take advantage of the evaluation as a guide,it might be another story. Marriage upon Graduation 1. 越来越多的大学生一毕业就进入婚姻的殿堂。 2.产生这种现象的原因。 3. 我的看法。 A growing number of college students get married almost at the very moment of their graduation. As for this issue, we cannot help wondering what account for this trend. To begin with, there is no denying that the intensive employment competition is a major factor for this phenomenon. Getting married means the couple can support each other in the harsh environment and equally new couples can get the financial assistance from both families. Furthermore, compared to some instant marriages relying on web love or money worship, marriages upon graduation make a more romantic and reliable choice.

大学英语四六级作文.

大学英语四六级作文 2019-11-20 大学英语四级范文:说谎 题目要求: Directions: Write a composition entitled Telling Lies. You should write at least 120 words according to the outline given below in Chinese: 1. 人们对说谎通常的看法; 2. 是否说谎都是有害的?说明你的看法。 3. 总结全文。 参考范文: Sample: Telling Lies Telling lies is usually looked upon as an evil, because some people try to get benefit from dishonest means or try to conceal their faults. However,_I_thinkdespitejits_neg1ative_effects,jsometimes_itjisjessent ialto iemigsinour_dailylifei First, the liar may benefit from the lie by escaping from the pressure of unnecessary embarrassment. Meanwhile, the listener may also feel more comfortable by reasonable excuses. For ex ample, if a little girl’s father died in an accident, her mother would comfort her by saying “farther has gone to another beautiful land”. In such cases, a lie with original goodwill can make the cruel nice. Second^thejsk^lsjofJtellingJlies^jtojsome extent,canbe_regard_asja_capacity_ofcreationandjimagination. Therefore,jtakingjallJthese_factor^jntojconsideration,_wecandefinitel y come to the conclusion that whether telling a lie is harmful depends on its original intention and the ultimate result it brings. (159 words)

高中外研社英语选修六Module5课文Frankenstein's Monster

Frankenstein's Monster Part 1 The story of Frankenstein Frankenstein is a young scientist/ from Geneva, in Switzerland. While studying at university, he discovers the secret of how to give life/ to lifeless matter. Using bones from dead bodies, he creates a creature/ that resembles a human being/ and gives it life. The creature, which is unusually large/ and strong, is extremely ugly, and terrifies all those/ who see it. However, the monster, who has learnt to speak, is intelligent/ and has human emotions. Lonely and unhappy, he begins to hate his creator, Frankenstein. When Frankenstein refuses to create a wife/ for him, the monster murders Frankenstein's brother, his best friend Clerval, and finally, Frankenstein's wife Elizabeth. The scientist chases the creature/ to the Arctic/ in order to destroy him, but he dies there. At the end of the story, the monster disappears into the ice/ and snow/ to end his own life. Part 2 Extract from Frankenstein It was on a cold November night/ that I saw my creation/ for the first time. Feeling very anxious, I prepared the equipment/ that would give life/ to the thing/ that lay at my feet. It was already one/ in the morning/ and the rain/ fell against the window. My candle was almost burnt out when, by its tiny light,I saw the yellow eye of the creature open. It breathed hard, and moved its arms and legs. How can I describe my emotions/ when I saw this happen? How can I describe the monster who I had worked/ so hard/ to create? I had tried to make him beautiful. Beautiful! He was the ugliest thing/ I had ever seen! You could see the veins/ beneath his yellow skin. His hair was black/ and his teeth were white. But these things contrasted horribly with his yellow eyes, his wrinkled yellow skin and black lips. I had worked/ for nearly two years/ with one aim only, to give life to a lifeless body. For this/ I had not slept, I had destroyed my health. I had wanted it more than anything/ in the world. But now/ I had finished, the beauty of the dream vanished, and horror and disgust/ filled my heart. Now/ my only thoughts were, "I wish I had not created this creature, I wish I was on the other side of the world, I wish I could disappear!” When he turned to look at me, I felt unable to stay in the same room as him. I rushed out, and /for a long time/ I walked up and down my bedroom. At last/ I threw myself on the bed/ in my clothes, trying to find a few moments of sleep. But although I slept, I had terrible dreams. I dreamt I saw my fiancée/ walking in the streets of our town. She looked well/ and happy/ but as I kissed her lips,they became pale, as if she were dead. Her face changed and I thought/ I held the body of my dead mother/ in my arms. I woke, shaking with fear. At that same moment,I saw the creature/ that I had created. He was standing/by my bed/ and watching me. His

人教版高中语文必修必背课文精编WORD版

人教版高中语文必修必背课文精编W O R D版 IBM system office room 【A0816H-A0912AAAHH-GX8Q8-GNTHHJ8】

必修1 沁园春·长沙(全文)毛泽东 独立寒秋, 湘江北去, 橘子洲头。 看万山红遍, 层林尽染, 漫江碧透, 百舸争流。 鹰击长空, 鱼翔浅底, 万类霜天竞自由。 怅寥廓, 问苍茫大地, 谁主沉浮。 携来百侣曾游, 忆往昔峥嵘岁月稠。

恰同学少年, 风华正茂, 书生意气, 挥斥方遒。 指点江山, 激扬文字, 粪土当年万户侯。 曾记否, 到中流击水, 浪遏飞舟。 雨巷(全文)戴望舒撑着油纸伞,独自 彷徨在悠长、悠长 又寂寥的雨巷, 我希望逢着 一个丁香一样地

结着愁怨的姑娘。 她是有 丁香一样的颜色, 丁香一样的芬芳, 丁香一样的忧愁, 在雨中哀怨, 哀怨又彷徨; 她彷徨在这寂寥的雨巷,撑着油纸伞 像我一样, 像我一样地 默默彳亍着 冷漠、凄清,又惆怅。她默默地走近, 走近,又投出 太息一般的眼光

她飘过 像梦一般地, 像梦一般地凄婉迷茫。像梦中飘过 一枝丁香地, 我身旁飘过这个女郎;她默默地远了,远了,到了颓圮的篱墙, 走尽这雨巷。 在雨的哀曲里, 消了她的颜色, 散了她的芬芳, 消散了,甚至她的 太息般的眼光 丁香般的惆怅。 撑着油纸伞,独自

彷徨在悠长、悠长 又寂寥的雨巷, 我希望飘过 一个丁香一样地 结着愁怨的姑娘。 再别康桥(全文)徐志摩 轻轻的我走了,正如我轻轻的来; 我轻轻的招手,作别西天的云彩。 那河畔的金柳,是夕阳中的新娘; 波光里的艳影,在我的心头荡漾。 软泥上的青荇,油油的在水底招摇; 在康河的柔波里,我甘心做一条水草! 那榆荫下的一潭,不是清泉, 是天上虹揉碎在浮藻间,沉淀着彩虹似的梦。寻梦?撑一支长篙,向青草更青处漫溯, 满载一船星辉,在星辉斑斓里放歌。

email营销邮件模板

email营销邮件模板 篇一:电子邮件营销之优秀邮件模板制作秘诀 如何制作一个讨人喜欢的邮件模板,让顾客眼前一亮,是众多邮件营销人员头痛的事。因为水能载舟,亦能覆舟。好的EDM邮件模板可以轻松引导顾客注意你希望表达的重要信息,反之,将导致他们觉得不知所云,让整个电子邮件营销前功尽弃。 U-Mail邮件群发平台总结发现,制作一套优秀的电子邮件模板的秘诀,重点遵从以下规则: 一、格式编码注意事项: 1.邮件内容页面宽度在600到800px(像素)以内,长度1024px内 2.电子邮件模板使用utf-8编码 3.源代码在10kb以内 4.用Table表格进行布局 5.邮件要求居中时,在table里设定居中属性 6.文件不能由WORD直接转换而成 7.不能有外链的css样式定义文字和图片 8.不要有动态形式的图片 1 9.没使用<table</table以外的body、meta和html之类的标签 10.背景图片代码写法为:<table background=background.gif cellspacing=0 cellpadding=0 11.没有出现onMouseOut onMouseOver 12.没使用alt

13. font-family属性不为空 二、电子邮件模板文字规则: 1.邮件主题在18个字以内,没有――~??等符号 2.邮件群发主题和内容中不存在带有网站地址的信息 3.文字内容、版面简洁,主题突出 4.没有敏感及带促销类的文字 5.本次模板发送不超过20万封 三、电子邮件模板图片规则: 1.尽量使用图片,减少文字量 2.整页图片在6张以内,每张图片最大不超过15kb 3.图片地址不是本地路径 4.图片名称不含有ad字符 5.整个邮件模板不是一张图 四、电子邮件模板链接规则: 1.链接数量不超过10个 2 2.链接是绝对地址 3.链接地址的长度不超过255个字符 4.不使用map功能 5.制作了一份和邮件内容一样的web页面,在邮件顶部写: “如果您无法查看邮件内容,请点击这里”,链接指向与邮件营销内容一致的web网页。 篇二:英语外贸邮件模板大全, 各类正式外贸电子邮件开 头模板, 英文商务回复跟进email模板

(完整版)Unit7TheMonster课文翻译综合教程四

Unit 7 The Monster Deems Taylor 1He was an undersized little man, with a head too big for his body ― a sickly little man. His nerves were bad. He had skin trouble. It was agony for him to wear anything next to his skin coarser than silk. And he had delusions of grandeur. 2He was a monster of conceit. Never for one minute did he look at the world or at people, except in relation to himself. He believed himself to be one of the greatest dramatists in the world, one of the greatest thinkers, and one of the greatest composers. To hear him talk, he was Shakespeare, and Beethoven, and Plato, rolled into one. He was one of the most exhausting conversationalists that ever lived. Sometimes he was brilliant; sometimes he was maddeningly tiresome. But whether he was being brilliant or dull, he had one sole topic of conversation: himself. What he thought and what he did. 3He had a mania for being in the right. The slightest hint of disagreement, from anyone, on the most trivial point, was enough to set him off on a harangue that might last for hours, in which he proved himself right in so many ways, and with such exhausting volubility, that in the end his hearer, stunned and deafened, would agree with him, for the sake of peace. 4It never occurred to him that he and his doing were not of the most intense and fascinating interest to anyone with whom he came in contact. He had theories about almost any subject under the sun, including vegetarianism, the drama, politics, and music; and in support of these theories he wrote pamphlets, letters, books ... thousands upon thousands of words, hundreds and hundreds of pages. He not only wrote these things, and published them ― usually at somebody else’s expense ― but he would sit and read them aloud, for hours, to his friends, and his family. 5He had the emotional stability of a six-year-old child. When he felt out of sorts, he would rave and stamp, or sink into suicidal gloom and talk darkly of going to the East to end his days as a Buddhist monk. Ten minutes later, when something pleased him he would rush out of doors and run around the garden, or jump up and down off the sofa, or stand on his head. He could be grief-stricken over the death of a pet dog, and could be callous and heartless to a degree that would have made a Roman emperor shudder. 6He was almost innocent of any sense of responsibility. He was convinced that

人教版高中语文必修一背诵篇目

高中语文必修一背诵篇目 1、《沁园春长沙》毛泽东 独立寒秋,湘江北去,橘子洲头。 看万山红遍,层林尽染;漫江碧透,百舸争流。 鹰击长空,鱼翔浅底,万类霜天竞自由。 怅寥廓,问苍茫大地,谁主沉浮? 携来百侣曾游,忆往昔峥嵘岁月稠。 恰同学少年,风华正茂;书生意气,挥斥方遒。 指点江山,激扬文字,粪土当年万户侯。 曾记否,到中流击水,浪遏飞舟? 2、《诗两首》 (1)、《雨巷》戴望舒 撑着油纸伞,独自 /彷徨在悠长、悠长/又寂寥的雨巷, 我希望逢着 /一个丁香一样的 /结着愁怨的姑娘。 她是有 /丁香一样的颜色,/丁香一样的芬芳, /丁香一样的忧愁, 在雨中哀怨, /哀怨又彷徨; /她彷徨在这寂寥的雨巷, 撑着油纸伞 /像我一样, /像我一样地 /默默彳亍着 冷漠、凄清,又惆怅。 /她静默地走近/走近,又投出 太息一般的眼光,/她飘过 /像梦一般地, /像梦一般地凄婉迷茫。 像梦中飘过 /一枝丁香的, /我身旁飘过这女郎; 她静默地远了,远了,/到了颓圮的篱墙, /走尽这雨巷。 在雨的哀曲里, /消了她的颜色, /散了她的芬芳, /消散了,甚至她的 太息般的眼光, /丁香般的惆怅/撑着油纸伞,独自 /彷徨在悠长,悠长 又寂寥的雨巷, /我希望飘过 /一个丁香一样的 /结着愁怨的姑娘。 (2)、《再别康桥》徐志摩 轻轻的我走了, /正如我轻轻的来; /我轻轻的招手, /作别西天的云彩。 那河畔的金柳, /是夕阳中的新娘; /波光里的艳影, /在我的心头荡漾。 软泥上的青荇, /油油的在水底招摇; /在康河的柔波里, /我甘心做一条水草!那榆阴下的一潭, /不是清泉,是天上虹 /揉碎在浮藻间, /沉淀着彩虹似的梦。寻梦?撑一支长篙, /向青草更青处漫溯, /满载一船星辉, /在星辉斑斓里放歌。但我不能放歌, /悄悄是别离的笙箫; /夏虫也为我沉默, / 沉默是今晚的康桥!悄悄的我走了, /正如我悄悄的来;/我挥一挥衣袖, /不带走一片云彩。 4、《荆轲刺秦王》 太子及宾客知其事者,皆白衣冠以送之。至易水上,既祖,取道。高渐离击筑,荆轲和而歌,为变徵之声,士皆垂泪涕泣。又前而为歌曰:“风萧萧兮易水寒,壮士一去兮不复还!”复为慷慨羽声,士皆瞋目,发尽上指冠。于是荆轲遂就车而去,终已不顾。 5、《记念刘和珍君》鲁迅 (1)、真的猛士,敢于直面惨淡的人生,敢于正视淋漓的鲜血。这是怎样的哀痛者和幸福者?然而造化又常常为庸人设计,以时间的流驶,来洗涤旧迹,仅使留下淡红的血色和微漠的悲哀。在这淡红的血色和微漠的悲哀中,又给人暂得偷生,维持着这似人非人的世界。我不知道这样的世界何时是一个尽头!

人教版高中语文必修必背课文

必修1 沁园春·长沙(全文)毛泽东 独立寒秋, 湘江北去, 橘子洲头。 看万山红遍, 层林尽染, 漫江碧透, 百舸争流。 鹰击长空, 鱼翔浅底, 万类霜天竞自由。 怅寥廓, 问苍茫大地, 谁主沉浮。 携来百侣曾游, 忆往昔峥嵘岁月稠。 恰同学少年, 风华正茂, 书生意气, 挥斥方遒。 指点江山, 激扬文字, 粪土当年万户侯。 曾记否, 到中流击水, 浪遏飞舟。 雨巷(全文)戴望舒 撑着油纸伞,独自 彷徨在悠长、悠长 又寂寥的雨巷, 我希望逢着 一个丁香一样地 结着愁怨的姑娘。 她是有 丁香一样的颜色, 丁香一样的芬芳, 丁香一样的忧愁, 在雨中哀怨, 哀怨又彷徨;

她彷徨在这寂寥的雨巷, 撑着油纸伞 像我一样, 像我一样地 默默彳亍着 冷漠、凄清,又惆怅。 她默默地走近, 走近,又投出 太息一般的眼光 她飘过 像梦一般地, 像梦一般地凄婉迷茫。 像梦中飘过 一枝丁香地, 我身旁飘过这个女郎; 她默默地远了,远了, 到了颓圮的篱墙, 走尽这雨巷。 在雨的哀曲里, 消了她的颜色, 散了她的芬芳, 消散了,甚至她的 太息般的眼光 丁香般的惆怅。 撑着油纸伞,独自 彷徨在悠长、悠长 又寂寥的雨巷, 我希望飘过 一个丁香一样地 结着愁怨的姑娘。 再别康桥(全文)徐志摩 轻轻的我走了,正如我轻轻的来;我轻轻的招手,作别西天的云彩。 那河畔的金柳,是夕阳中的新娘;波光里的艳影,在我的心头荡漾。 软泥上的青荇,油油的在水底招摇;

在康河的柔波里,我甘心做一条水草! 那榆荫下的一潭,不是清泉, 是天上虹揉碎在浮藻间,沉淀着彩虹似的梦。 寻梦?撑一支长篙,向青草更青处漫溯, 满载一船星辉,在星辉斑斓里放歌。 但我不能放歌,悄悄是别离的笙箫; 夏虫也为我沉默,沉默是今晚的康桥。 悄悄的我走了,正如我悄悄的来; 我挥一挥衣袖,不带走一片云彩。 记念刘和珍君(二、四节)鲁迅 二 真的猛士,敢于直面惨淡的人生,敢于正视淋漓的鲜血。这是怎样的哀痛者和幸福者?然而造化又常常为庸人设计,以时间的流驶,来洗涤旧迹,仅使留下淡红的血色和微漠的悲哀。在这淡红的血色和微漠的悲哀中,又给人暂得偷生,维持着这似人非人的世界。我不知道这样的世界何时是一个尽头! 我们还在这样的世上活着;我也早觉得有写一点东西的必要了。离三月十八日也已有两星期,忘却的救主快要降临了罢,我正有写一点东西的必要了。 四 我在十八日早晨,才知道上午有群众向执政府请愿的事;下午便得到噩耗,说卫队居然开枪,死伤至数百人,而刘和珍君即在遇害者之列。但我对于这些传说,竟至于颇为怀疑。我向来是不惮以最坏的恶意,来推测中国人的,然而我还不料,也不信竟会下劣凶残到这地步。况且始终微笑着的和蔼的刘和珍君,更何至于无端在府门前喋血呢? 然而即日证明是事实了,作证的便是她自己的尸骸。还有一具,是杨德群君的。而且又证明着这不但是杀害,简直是虐杀,因为身体上还有棍棒的伤痕。 但段政府就有令,说她们是“暴徒”! 但接着就有流言,说她们是受人利用的。 惨象,已使我目不忍视了;流言,尤使我耳不忍闻。我还有什么话可说呢?我懂得衰亡民族之所以默无声息的缘由了。沉默啊,沉默啊!不在沉默中爆发,就在沉默中灭亡。

大学英语四六级考试万能作文模板

大学英语四六级考试万能作文模板 在CET4/6试题中,有两个拉分最厉害的部分,一是听力题,一是 作文题。,跟汉语作文的“起承转合”类似,英语作文其实也有固 定模式。 我到那里一看,果然有五个模板,认真拜读一遍之后,不由得 大吃二惊。第一惊的是:我们中国真是高手如林,多么复杂、困难 的问题,都能够迎刃而解,实在佩服之极。第二惊的是:“八股文”这朵中国传统文化的奇葩,在凋谢了一百多年之后,竟然又在中华 大地上重新含苞怒放,真是可喜可贺。 感慨之后,转入正题。这五个模板,在结构上大同小异,掌握 一种即可,所以我从中挑选了一个最简单、最实用的,稍加修改, 给各位介绍一下。这个模板的中文大意是:在某种场合,发生某种 现象,并提供一些相关数据,然后列出这种现象的三个原因,并将 三个原因总结为一个最主要原因,最后提出避免这种现象的两个办法。总的来说,利用这个模板写英语作文,是相当容易的,您只要 将适当的内容,填写到对应的方括号中,一篇通顺的英语作文即可 完成。下面就是这个模板。 Nowadays, there are more and more [某种现象] in [某种场合]. It is estimated that [相关数据]. Why have there been so many [某种现象]? Maybe the reasons can be listed as follows. The first one is [原因一]. Besides, [原因二]. The

third one is [原因三]. To sum up, the main cause of [某种现象] is due to [最主要原因]. It is high time that something were done upon it. For one thing, [解决办法一]. On the other hand, [解决办法二]. All these measures will certainly reduce the number of [某种现象]. 为便于读者理解,我特意用这个模板,写了一篇关于ghost writer(捉刀代笔的枪手)的示范性小作文,请您观摩一下。 Nowadays, there are more and more [ghost writers / 枪手] in [China's examinations / 中国的考场]. It is estimated that [5% examinees are ghost writers / 5%的应试者是枪手]. Why have there been so many [ghost writers / 枪手]? Maybe the reasons can be listed as follows. The first one is [hirers' ignorance / 雇主无知]. Besides, [hirers' indolence / 雇主懒惰]. The third one is [hirers' obtusity / 雇主迟钝]. To sum up, the main cause of [ghost writers / 枪手] is due to [hirers' low IQ / 雇主智商低]. It is high time that something were done upon it. For one thing, [flagellation / 鞭打]. On the other hand, [decapitation / 斩首]. All these measures will certainly reduce the number of [ghost writers / 枪手].

邮件营销计划书

1.营销的计划和目的 2017年是邮件营销突飞猛进的一年。社交媒体和移动互联网发展迅速,传统的邮件营销方式在与微博、微信、QR 码、人人网等社交网络方式的积极融合中,展现了新的营销活力,邮件营销的可拓展性得到延伸,我们企业把邮件营销列为重要营销工具之一。为推广我们名裳的服装在线营销,吸引广大客户的加入和购买我们制定了一系列计划: 1.先整理出要发送的主题和内容,配置图文最好。 2.甄选客户,最好挑一些有代表性的,可以提供建议的,新老客户都可以。 3.发送邮件,一定要确保邮件到收信人手中。 4.初步统计邮件的开启率及邮件内容链接的跟踪率‘ 5.做好统计记录,这次邮件发送出现的问题及时改进。 目的:1、提升公司及产品品牌知名度和认知度 2、建立庞大的客户信息邮件数据库,可以有计划有组织地开展营销活动 3、与客户建立良好的关系,提高客户的品牌忠诚度 4、让客户第一时间获取公司及相关行业内信息 5.能够探测市场, 找到新的市场机会和提供新产品与新服务 6、可实现一对一的销售模式 7、必须将宣传对象引到网站来,因为网站才能提供详尽的信息,才更有说服力。 2.如何获取邮件列表 1.发动注册会员收集邮件列表 邀请了人同时在网站注册,初次购买可以享受折扣、送积分等等,要顾及注册会员的利益。获得的邮件列表,再拿来群发邮件。 2.做广告吸引用户主动订阅 把邮件地址印在名片、文具、发/票、传真、印刷品上,即使在广播电视广告上也留下邮件地址,这样,便于顾客通过邮件和你联系, 3.在报刊上发布新闻 在报刊上发表人们必须通过邮件才可以接收的免费报告,或者可以通过邮件发送的产品,可以发表一些引起读者共鸣的话题,在读者回应的过程中收集其姓名和邮件地址。 4.现有客户的邮件列表 下次与客户通过邮寄或邮件联系时,可以为通过邮件回复者提供特别的服务,例如一份免费报告或者特别折扣优惠等,你必须尽可能地得到顾客的邮件地址。5.购买邮件列表 现在有很多的公司有邮件列表买,很多人也是选择的这个方法,如果选择的渠道比较好的话,收效也是相当快的,当然花费的费用也不低。这些数据一般都是有比较详细的数据,不单单是一个邮箱,有性别、年龄、职业、地区等,购买的时候最好先分析一下对自己产品感兴趣的人员的一些特征,然后筛选后购买,这样相关性会高很多,自己以后群发邮件的时候效果也会好很多。当然购买邮件列表最好能够选择一个好一些的机构,信誉要高些的,否则大部分邮件地址都无效,也就白白浪费钱了。 6.做好邮件列表管理