A vague-rough set approach for uncertain knowledge acquisition


Lin Feng a,b,*, Tianrui Li b, Da Ruan c,d, Shirong Gou a

a College of Computer Science, Sichuan Normal University, Chengdu 610101, PR China

b School of Information Science and Technology, Southwest Jiaotong University, Chengdu 610031, PR China

c Belgian Nuclear Research Centre (SCK•CEN), Boeretang 200, 2400 Mol, Belgium

d Department of Applied Mathematics & Computer Science, Ghent University, Krijgslaan 281 (S9), 9000 Gent, Belgium

Article info

Article history:
Received 5 February 2010
Received in revised form 15 March 2011
Accepted 20 March 2011
Available online 3 April 2011

Keywords:
Knowledge acquisition
Rough sets
Vague sets
Vague rough sets
Attribute reduction
Uncertain information system

Abstract

By combining vague sets and rough sets in fuzzy data processing, we propose a vague-rough set approach for extracting knowledge in uncertain environments. Using the vague-rough lower approximation distribution, we define attribute reduction in a vague decision information system (VDIS) and compute all attribute reductions with a discernibility matrix. Results on extracting decision rules from a VDIS show that the proposed approaches extend the corresponding methods of classical rough set theory and provide a new avenue for acquiring uncertain, vague knowledge.

© 2011 Elsevier B.V. All rights reserved.

0950-7051/$ - see front matter. doi:10.1016/j.knosys.2011.03.005

* Corresponding author at: College of Computer Science, Sichuan Normal University, Chengdu 610101, PR China.

E-mail addresses: scfengyc@… (L. Feng), trli30@… (T. Li), druan@sckcen.be, da.ruan@ugent.be (D. Ruan).

1. Introduction

Rough set theory, proposed by Pawlak [1], is a suitable mathematical approach for handling imprecision, incompleteness and uncertainty in data analysis. Its efficiency has been demonstrated in many applications, such as attribute reduction, pattern recognition, data mining, and fault detection [2–6]. The key elements of rough set theory, as cited from [7], are as follows:

(1) only the facts hidden in the data are analyzed;

(2) no additional information about the data, such as thresholds or expert knowledge, is required;

(3) given a data set with discretized attribute values, it is possible to find a subset of the original attributes (termed a reduct) that is the most informative.

Attribute values in real-world data sets are often both symbolic and real-valued. Classical rough set theory is based on the indiscernibility relation (an equivalence relation) and cannot handle real-valued attributes effectively. One possible way to solve this problem is to discretize the attribute values beforehand, but this may cause information loss [8,9]. Another approach is fuzzy-rough set theory, in which fuzzy and rough sets encapsulate the related but distinct concepts of fuzziness and indiscernibility, both of which arise from uncertainty in knowledge [10]. In a fuzzy-rough set, a fuzzy similarity relation characterizes the degree of similarity between two objects, instead of the equivalence relation used in classical rough sets [11]. To date, many results have been obtained in the field of fuzzy-rough sets. For example, Dubois and Prade [12] combined fuzzy and rough sets into the concepts of rough fuzzy sets and fuzzy rough sets. Tsang et al. [13] established solid mathematical foundations for attribute reduction in fuzzy rough sets. Pal and Mitra [14] proposed a rough-fuzzy hybridization scheme for case generation. Shen and Jensen [15] developed fuzzy rule induction based on fuzzy rough sets for feature selection. Wu and Zhang [16] presented constructive and axiomatic approaches to fuzzy approximation operators. Lingras and Jensen [17] reviewed fuzzy and rough hybridization in supervised learning, information retrieval, feature selection, and neural and evolutionary computing.

Roughly speaking, a fuzzy set F of a universe of discourse U = {x1, x2, ..., xn} can be represented by $F = \mu_F(x_1)/x_1 + \mu_F(x_2)/x_2 + \cdots + \mu_F(x_n)/x_n$, where $\mu_F: U \to [0,1]$ is the membership function of F and $\mu_F(x_i)$ indicates the grade of membership of $x_i$ in F. Thus, for any $x_i \in U$, the membership value $\mu_F(x_i)$ is a single value between 0 and 1. However, when uncertainties of the following kinds exist, a fuzzy set may be inadequate as a model:

(1) the exact grade of membership $\mu_F(x_i)$ of $x_i$ may be uncertain due to limitations or errors of measuring devices;

(2) the exact grade $\mu_F(x_i)$ of $x_i$ may be unknown due to the limitations of human cognitive ability;

(3) the grade may only be bounded by a subinterval, especially when knowledge is acquired from a group of experts who do not collectively agree with each other, as in a voting model.

Gau and Buehrer therefore introduced the concept of vague sets [18]. They used a truth-membership function $t_F$ and a false-membership function $f_F$ to characterize lower bounds on $\mu_F$ and on its negation, respectively. These bounds create a subinterval of [0,1], namely $[t_F(x_i), 1 - f_F(x_i)]$, which generalizes the value $\mu_F(x_i)$ of a fuzzy set. This interval-based membership is more expressive in capturing the vagueness of data and can model the above-mentioned uncertainties. Vague sets differ from ordinary sets, fuzzy sets, and interval-valued sets [18]. The major advantage of vague sets over fuzzy sets is that vague sets separate the positive and negative evidence for the membership of an element in the universe [18]. In the past decades, vague set theory and its mathematical properties have been investigated by many researchers [19–24]. However, the existing theories and approaches of knowledge acquisition based on fuzzy-rough sets cannot be directly applied to vague data sets [25]. It is thus necessary to develop an efficient and appropriate rough-set-based approach for knowledge acquisition from vague data sets.

In this paper, we extend the approaches of attribute reduction by combining rough sets and vague sets, building on [26]. First, we introduce the basic ideas of rough sets in the form of lower and upper approximations in a Vague Approximation Space (VAS). Then, we develop a discernibility-matrix approach to compute all attribute reductions in a Vague Decision Information System (VDIS). Finally, we propose an approach for extracting decision rules from a VDIS.

The rest of the paper is organized as follows. Section 2 reviews basic notions and operators of vague sets. Section 3 discusses vague rough approximation sets in a VAS. Section 4 develops approaches for knowledge reduction and knowledge acquisition in a VDIS. Section 5 illustrates the proposed approaches on the Saturday morning tennis-play problem, and Section 6 summarizes the study and outlines further research.

2. Preliminaries

2.1. Basic notions of vague sets

Basic notions of vague sets are mainly cited from [18]. Let U be a space of points (objects), with a generic element of U denoted by x. A vague set V in U is characterized by a truth-membership function $t_V$ and a false-membership function $f_V$: $t_V(x)$ is a lower bound on the grade of membership of x derived from the evidence for x, and $f_V(x)$ is a lower bound on the grade of non-membership of x derived from the evidence against x. Both functions associate a real number in [0,1] with each point of U, where $t_V(x) + f_V(x) \le 1$. That is,

$$t_V: U \to [0,1], \qquad f_V: U \to [0,1]. \tag{1}$$

This approach bounds the membership grade of x to the subinterval $[t_V(x), 1 - f_V(x)]$ of [0,1]. In other words, the exact membership grade $\mu_V(x)$ of x may be unknown, but it is bounded by $t_V(x) \le \mu_V(x) \le 1 - f_V(x)$, where $t_V(x) + f_V(x) \le 1$.

The precision of human knowledge about x is then immediately clear, with the uncertainty described by the difference $D(x) = 1 - f_V(x) - t_V(x)$. If D(x) is small, the knowledge about x is relatively precise; if it is large, little is known about x. If $1 - f_V(x) = t_V(x)$, i.e., $D(x) = 0$, the knowledge about x is exact and the theory reverts to that of fuzzy sets. If $1 - f_V(x)$ and $t_V(x)$ are both equal to 1 or both equal to 0, i.e., the vague value of x is [1,1] or [0,0] depending on whether x does or does not belong to V, the knowledge about x is exact and the theory reverts to that of ordinary sets (i.e., sets with two-valued characteristic functions) [16].

When U is continuous, a vague set is written as

$$V = \int_U [t_V(x), 1 - f_V(x)]/x, \quad x \in U. \tag{2}$$

When U is discrete, a vague set is written as

$$V = \sum_{i=1}^{n} [t_V(x_i), 1 - f_V(x_i)]/x_i, \quad x_i \in U. \tag{3}$$

Vague set theory can be interpreted with a voting model. Suppose that V is a vague set in U, x ∈ U, and the vague value of x is [0.6,0.8], that is, $t_V(x) = 0.6$ and $f_V(x) = 1 - 0.8 = 0.2$. Then the degree of $x \in V$ is 0.6 and the degree of $x \notin V$ is 0.2. The vague value [0.6,0.8] can be interpreted as "the vote for a resolution is 6 in favor, 2 against, and 2 abstentions (assuming 10 voters in total)".
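The voting interpretation can be made concrete with a small Python sketch. It assumes a hypothetical `VagueValue` type (not part of the original text) that stores $t$ and $f$, rejects pairs with $t + f > 1$, and derives the interval $[t, 1 - f]$ and the uncertainty $D(x) = 1 - f - t$; `from_votes` encodes the 6-for, 2-against, 2-abstaining example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VagueValue:
    """Hypothetical container for a vague value [t, 1 - f] of one element."""

    t: float  # truth-membership: lower bound on the membership grade
    f: float  # false-membership: lower bound on the non-membership grade

    def __post_init__(self) -> None:
        # Small tolerance so that values built from decimal floats are not rejected.
        if not (0.0 <= self.t <= 1.0 and 0.0 <= self.f <= 1.0
                and self.t + self.f <= 1.0 + 1e-12):
            raise ValueError("need t, f in [0, 1] and t + f <= 1")

    @classmethod
    def from_interval(cls, lower: float, upper: float) -> "VagueValue":
        """Build from the interval notation [t, 1 - f] used in the text."""
        return cls(t=lower, f=1.0 - upper)

    @classmethod
    def from_votes(cls, in_favor: int, against: int, total: int) -> "VagueValue":
        """Voting model: voters who neither support nor oppose are abstentions."""
        if in_favor < 0 or against < 0 or in_favor + against > total:
            raise ValueError("invalid vote counts")
        return cls(t=in_favor / total, f=against / total)

    @property
    def interval(self) -> tuple:
        """The subinterval [t, 1 - f] bounding the unknown membership grade."""
        return (self.t, 1.0 - self.f)

    @property
    def hesitancy(self) -> float:
        """D(x) = 1 - f - t: 0 means the fuzzy (exact) case, larger means less is known."""
        return 1.0 - self.f - self.t


vote = VagueValue.from_votes(in_favor=6, against=2, total=10)
print([round(v, 3) for v in vote.interval], round(vote.hesitancy, 3))  # prints [0.6, 0.8] 0.2
```

Storing $f$ rather than the upper end $1 - f$ keeps the "evidence against" explicit, which mirrors how the text separates positive and negative evidence.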

2.2. Algebraic operations

We list the basic operations on vague sets: containment, equality, union, intersection, and complement. Throughout, x denotes an arbitrary element of U.

Definition 1 (Containment). A vague set X is contained in another vague set Y, written $X \subseteq Y$, if and only if

$$t_X(x) \le t_Y(x), \qquad 1 - f_X(x) \le 1 - f_Y(x). \tag{4}$$

Definition 2 (Equality). Vague sets X and Y are equal, written X = Y, if and only if $X \subseteq Y$ and $X \supseteq Y$; that is,

$$t_X(x) = t_Y(x), \qquad 1 - f_X(x) = 1 - f_Y(x). \tag{5}$$

Definition 3 (Union). The union of two vague sets X and Y with respective truth-membership and false-membership functions $t_X$, $f_X$, $t_Y$ and $f_Y$ is a vague set Z, written $Z = X \cup Y$, whose truth-membership and false-membership functions are related to those of X and Y by

$$t_Z(x) = \max(t_X(x), t_Y(x)), \qquad 1 - f_Z(x) = \max(1 - f_X(x), 1 - f_Y(x)) = 1 - \min(f_X(x), f_Y(x)). \tag{6}$$

Definition 4 (Intersection). The intersection of two vague sets X and Y with respective truth-membership and false-membership functions $t_X$, $f_X$, $t_Y$ and $f_Y$ is a vague set Z, written $Z = X \cap Y$, whose truth-membership and false-membership functions are related to those of X and Y by

$$t_Z(x) = \min(t_X(x), t_Y(x)), \qquad 1 - f_Z(x) = \min(1 - f_X(x), 1 - f_Y(x)) = 1 - \max(f_X(x), f_Y(x)). \tag{7}$$

L. Feng et al. / Knowledge-Based Systems 24 (2011) 837–843

Definition 5 (Complement). The complement of a vague set X, denoted by $X^c$, is defined by

$$t_{X^c}(x) = f_X(x), \qquad 1 - f_{X^c}(x) = 1 - t_X(x). \tag{8}$$
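As an illustrative sketch only, Definitions 1–5 can be written as elementwise operations on a finite vague set stored as a Python dict that maps each element to the pair $(t, 1 - f)$. The function names are hypothetical, and the sample sets are the X and Y of Example 1 in Section 3.

```python
# A vague set over a finite universe: element -> (t, u), where u = 1 - f.
# The pair (t, u) is exactly the interval [t_X(x), 1 - f_X(x)] used in the text.


def contained(x: dict, y: dict) -> bool:
    """Definition 1: t_X <= t_Y and 1 - f_X <= 1 - f_Y for every element."""
    return all(x[e][0] <= y[e][0] and x[e][1] <= y[e][1] for e in x)


def equal(x: dict, y: dict) -> bool:
    """Definition 2: mutual containment."""
    return contained(x, y) and contained(y, x)


def union(x: dict, y: dict) -> dict:
    """Definition 3: componentwise max of t and of 1 - f."""
    return {e: (max(x[e][0], y[e][0]), max(x[e][1], y[e][1])) for e in x}


def intersection(x: dict, y: dict) -> dict:
    """Definition 4: componentwise min of t and of 1 - f."""
    return {e: (min(x[e][0], y[e][0]), min(x[e][1], y[e][1])) for e in x}


def complement(x: dict) -> dict:
    """Definition 5: t of X^c is f_X = 1 - u, and 1 - f of X^c is 1 - t_X."""
    return {e: (1.0 - u, 1.0 - t) for e, (t, u) in x.items()}


X = {"x1": (0.7, 0.8), "x2": (0.5, 0.9), "x3": (0.6, 0.8), "x4": (0.3, 0.5)}
Y = {"x1": (0.2, 0.3), "x2": (0.7, 0.9), "x3": (0.6, 0.8), "x4": (0.3, 0.8)}
print(union(X, Y)["x1"], intersection(X, Y)["x1"])  # prints (0.7, 0.8) (0.2, 0.3)
```

Working with the upper end $1 - f$ instead of $f$ makes union and intersection plain max/min on both components; only the complement has to swap and negate them.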

3. Generalized vague rough approximations

Denote by (U, R) a Pawlak approximation space, where U is a finite and non-empty set of objects called a universe, and R is an equivalence relation on U. A set X (X ⊆ U) can be represented by the rough set $(\underline{R}(X), \overline{R}(X))$ and, when $\hat R$ is a fuzzy relation on U, by the fuzzy rough set $(\underline{\hat R}(X), \overline{\hat R}(X))$. However, if R is a vague relation $\tilde R$ on U, an extension of a fuzzy relation, how can X be described in $(U, \tilde R)$? To solve this problem, we develop here a vague-rough set. To begin with, we introduce a method for measuring the degree of containment and intersection between two vague sets, based on the fuzzy rough sets in [27,28].

Let X and Y be two vague sets of the universe of discourse U. By Definition 1, X is contained in Y if and only if

$$X \subseteq Y \iff \text{for any } x \in U,\ t_X(x) \le t_Y(x) \text{ and } 1 - f_X(x) \le 1 - f_Y(x). \tag{9}$$

If condition (9) is satisfied, then X is contained in Y. However, we intend to evaluate the degree of containment of X in Y at each object of U. Therefore, for all x ∈ U we obtain a new vague set, called the vague containment of X in Y and denoted by $X \,\tilde{\subseteq}\, Y$. Using an implication operator "→", we have

$$\mu_{X \tilde{\subseteq} Y}(x) = \mu_X(x) \to \mu_Y(x) = (\mu_X(x))^c \cup \mu_Y(x). \tag{10}$$

By Definitions 3 and 5, (10) can be rewritten as

$$\mu_{X \tilde{\subseteq} Y}(x) = \left[\max(f_X(x), t_Y(x)),\ \max(1 - t_X(x), 1 - f_Y(x))\right]. \tag{11}$$

Applying (11), the containment degree of X in Y, denoted by I(X,Y), is a vague value defined as

$$I(X,Y) = \left[\inf_{x\in U} \max(f_X(x), t_Y(x)),\ \inf_{x\in U} \max(1 - t_X(x), 1 - f_Y(x))\right]. \tag{12}$$

At the same time, by Definition 4, the intersection degree between X and Y, denoted by T(X,Y), is a vague value defined as

$$T(X,Y) = \left[\sup_{x\in U} \min(t_X(x), t_Y(x)),\ \sup_{x\in U} \min(1 - f_X(x), 1 - f_Y(x))\right]. \tag{13}$$

Example 1. Assume that U = {x1, x2, x3, x4}. Let

$$X = [0.7,0.8]/x_1 + [0.5,0.9]/x_2 + [0.6,0.8]/x_3 + [0.3,0.5]/x_4,$$

$$Y = [0.2,0.3]/x_1 + [0.7,0.9]/x_2 + [0.6,0.8]/x_3 + [0.3,0.8]/x_4.$$

By (12) and (13), we have

$$I(X,Y) = \left[\inf_{x_i\in U}(0.2, 0.7, 0.6, 0.5),\ \inf_{x_i\in U}(0.3, 0.9, 0.8, 0.8)\right] = [0.2, 0.3],$$

$$T(X,Y) = \left[\sup_{x_i\in U}(0.2, 0.5, 0.6, 0.3),\ \sup_{x_i\in U}(0.3, 0.9, 0.8, 0.5)\right] = [0.6, 0.9].$$
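A minimal Python sketch of (12) and (13), reusing an assumed dict representation element → $(t, 1 - f)$, reproduces Example 1. The helper names `containment_degree` and `intersection_degree` are illustrative and do not come from the paper.

```python
# Vague sets as element -> (t, u) with u = 1 - f (the interval [t, 1 - f]).


def containment_degree(x: dict, y: dict) -> tuple:
    """Eq. (12): I(X,Y) = [inf max(f_X, t_Y), inf max(1 - t_X, 1 - f_Y)]."""
    lower = min(max(1.0 - x[e][1], y[e][0]) for e in x)  # f_X = 1 - u_X
    upper = min(max(1.0 - x[e][0], y[e][1]) for e in x)  # 1 - f_Y = u_Y
    return (lower, upper)


def intersection_degree(x: dict, y: dict) -> tuple:
    """Eq. (13): T(X,Y) = [sup min(t_X, t_Y), sup min(1 - f_X, 1 - f_Y)]."""
    lower = max(min(x[e][0], y[e][0]) for e in x)
    upper = max(min(x[e][1], y[e][1]) for e in x)
    return (lower, upper)


# Data of Example 1.
X = {"x1": (0.7, 0.8), "x2": (0.5, 0.9), "x3": (0.6, 0.8), "x4": (0.3, 0.5)}
Y = {"x1": (0.2, 0.3), "x2": (0.7, 0.9), "x3": (0.6, 0.8), "x4": (0.3, 0.8)}

i_xy = containment_degree(X, Y)
t_xy = intersection_degree(X, Y)
print([round(v, 6) for v in i_xy], [round(v, 6) for v in t_xy])  # prints [0.2, 0.3] [0.6, 0.9]
```

The rounding only hides binary floating-point noise such as 1 - 0.7 = 0.30000000000000004.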

Definition 6 (Vague relation). Let U be a finite and nonempty set of objects and U × U be the product set of U and U. Any vague subset $\tilde R$ of U × U is a vague relation on U, written $\tilde R(x,y) = [t_{\tilde R}(x,y), 1 - f_{\tilde R}(x,y)]$. Consider the following conditions for all x, y, z ∈ U:

(1) Reflexivity: $\tilde R(x,x) = [1,1]$;

(2) Symmetry: $\tilde R(x,y) = \tilde R(y,x)$;

(3) Transitivity: $t_{\tilde R}(x,z) \ge \sup_{y\in U} \min(t_{\tilde R}(x,y), t_{\tilde R}(y,z))$ and $1 - f_{\tilde R}(x,z) \ge \sup_{y\in U} \min(1 - f_{\tilde R}(x,y), 1 - f_{\tilde R}(y,z))$.

If $\tilde R$ satisfies conditions (1)–(3), it is a vague equivalence relation; if it satisfies conditions (1) and (2), it is a vague similarity relation. As mentioned in Section 2, if $\tilde R(x,y) = [0,0]$ or [1,1] for all x, y ∈ U, a vague equivalence relation $\tilde R$ degenerates into a classical equivalence relation; if $t_{\tilde R}(x,y) = 1 - f_{\tilde R}(x,y)$ for all x, y ∈ U, it degenerates into a fuzzy equivalence relation.
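The conditions of Definition 6 can be checked mechanically on a finite universe. The Python sketch below assumes a relation stored as a nested list `R[x][y] = (t, 1 - f)` and uses two hypothetical 3-object relations: one that satisfies sup-min transitivity, and one in which a single weakened pair breaks it.

```python
from itertools import product

# A vague relation on U = {0, ..., n-1}: R[x][y] = (t, u) with u = 1 - f.


def is_reflexive(R) -> bool:
    return all(R[x][x] == (1.0, 1.0) for x in range(len(R)))


def is_symmetric(R) -> bool:
    n = len(R)
    return all(R[x][y] == R[y][x] for x, y in product(range(n), repeat=2))


def is_transitive(R) -> bool:
    """Sup-min transitivity of Definition 6, checked on t and on 1 - f separately."""
    n = len(R)
    for x, z in product(range(n), repeat=2):
        via_t = max(min(R[x][y][0], R[y][z][0]) for y in range(n))
        via_u = max(min(R[x][y][1], R[y][z][1]) for y in range(n))
        if R[x][z][0] < via_t or R[x][z][1] < via_u:
            return False
    return True


def kind(R) -> str:
    if not (is_reflexive(R) and is_symmetric(R)):
        return "vague relation"
    return "vague equivalence" if is_transitive(R) else "vague similarity"


def is_fuzzy(R) -> bool:
    """Degenerate case t = 1 - f for every pair."""
    return all(t == u for row in R for t, u in row)


def is_crisp(R) -> bool:
    """Degenerate case: every value is [0, 0] or [1, 1]."""
    return all(v in ((0.0, 0.0), (1.0, 1.0)) for row in R for v in row)


ONE = (1.0, 1.0)
# Hypothetical relations for illustration only.
R_eq = [[ONE, (0.8, 0.9), (0.7, 0.8)],
        [(0.8, 0.9), ONE, (0.7, 0.8)],
        [(0.7, 0.8), (0.7, 0.8), ONE]]
R_sim = [row[:] for row in R_eq]
R_sim[0][2] = R_sim[2][0] = (0.2, 0.3)  # 0.2 < min(0.8, 0.7): breaks transitivity
print(kind(R_eq), "|", kind(R_sim))  # prints vague equivalence | vague similarity
```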

Because U is discrete, by Definition 6 the $\tilde R$-vague class $[z]_{\tilde R}$ containing object z can be written as the vague set $[z]_{\tilde R} = \sum_{y\in U} [t_{\tilde R}(z,y), 1 - f_{\tilde R}(z,y)]/y$. For each x ∈ U, the vague rough approximations are then obtained from the containment degree of $[x]_{\tilde R}$ in X and the intersection degree between $[x]_{\tilde R}$ and X. By (12) and (13), the vague rough approximations can be defined as follows.

Definition 7 (Vague rough approximations). Let $(U, \tilde R)$ be a Vague Approximation Space (VAS), where U is a finite and non-empty set of objects called a universe, and $\tilde R$ is a vague relation on U. For any x ∈ U and X ⊆ U, the vague lower approximation of X with respect to $(U, \tilde R)$, denoted by $\underline{\tilde R}(X)$, is a vague set of U whose membership function is defined by

$$\mu_{\underline{\tilde R}(X)}(x) = \left[\inf_{y\in U} \max(f_{\tilde R}(x,y), t_X(y)),\ \inf_{y\in U} \max(1 - t_{\tilde R}(x,y), 1 - f_X(y))\right]. \tag{14}$$

The vague upper approximation of X with respect to $(U, \tilde R)$, denoted by $\overline{\tilde R}(X)$, is a vague set of U whose membership function is defined by

$$\mu_{\overline{\tilde R}(X)}(x) = \left[\sup_{y\in U} \min(t_{\tilde R}(x,y), t_X(y)),\ \sup_{y\in U} \min(1 - f_{\tilde R}(x,y), 1 - f_X(y))\right]. \tag{15}$$

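The pair $(\underline{\tilde R}(X), \overline{\tilde R}(X))$ is called the generalized vague-rough set of X with respect to $(U, \tilde R)$. Since X is a crisp set, we have

$$[t_X(y), 1 - f_X(y)] = \begin{cases} [1,1], & y \in X,\\ [0,0], & y \notin X. \end{cases} \tag{16}$$

Substituting (16) into (14) and (15), every y ∈ X contributes 1 to each infimum in (14) and every y ∉ X contributes 0 to each supremum in (15). We thus easily derive

$$\begin{cases} \mu_{\underline{\tilde R}(X)}(x) = \left[\inf_{y\notin X} f_{\tilde R}(x,y),\ \inf_{y\notin X} \left(1 - t_{\tilde R}(x,y)\right)\right],\\ \mu_{\overline{\tilde R}(X)}(x) = \left[\sup_{y\in X} t_{\tilde R}(x,y),\ \sup_{y\in X} \left(1 - f_{\tilde R}(x,y)\right)\right]. \end{cases} \tag{17}$$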
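The following Python sketch, under an assumed nested-list representation `R[x][y] = (t, 1 - f)` of $\tilde R$, implements both the general formulas (14)–(15) and the crisp shortcut (17), and checks on a crisp partition that the $[1,1]$-valued objects are exactly the Pawlak approximations, as Proposition 1 states. Empty infima are taken as 1 and empty suprema as 0, a convention the text does not spell out; all function names are hypothetical.

```python
# R[x][y] = (t, u) with u = 1 - f; a crisp X is a set of object indices.


def lower_crisp(R, X):
    """Eq. (17), lower part: [inf_{y not in X} f_R(x,y), inf_{y not in X} (1 - t_R(x,y))]."""
    out = []
    for row in R:
        outside = [row[y] for y in range(len(R)) if y not in X]
        # An empty infimum is taken as 1 (this only happens when X = U).
        out.append((min((1.0 - u for _, u in outside), default=1.0),
                    min((1.0 - t for t, _ in outside), default=1.0)))
    return out


def upper_crisp(R, X):
    """Eq. (17), upper part: [sup_{y in X} t_R(x,y), sup_{y in X} (1 - f_R(x,y))]."""
    return [(max((row[y][0] for y in X), default=0.0),
             max((row[y][1] for y in X), default=0.0)) for row in R]


def lower_general(R, V):
    """Eq. (14) for a vague set V given as a list of (t, u) per object."""
    return [(min(max(1.0 - ru, vt) for (rt, ru), (vt, vu) in zip(row, V)),
             min(max(1.0 - rt, vu) for (rt, ru), (vt, vu) in zip(row, V))) for row in R]


def upper_general(R, V):
    """Eq. (15) for a vague set V given as a list of (t, u) per object."""
    return [(max(min(rt, vt) for (rt, ru), (vt, vu) in zip(row, V)),
             max(min(ru, vu) for (rt, ru), (vt, vu) in zip(row, V))) for row in R]


def as_vague(X, n):
    """Eq. (16): a crisp set as a vague set with values [1, 1] or [0, 0]."""
    return [(1.0, 1.0) if y in X else (0.0, 0.0) for y in range(n)]


# Crisp equivalence relation with classes {0, 1} and {2, 3} (setting of Proposition 1).
blocks = [0, 0, 1, 1]
R = [[(1.0, 1.0) if blocks[x] == blocks[y] else (0.0, 0.0) for y in range(4)] for x in range(4)]
X = {0, 1, 2}
low, up = lower_crisp(R, X), upper_crisp(R, X)
print({x for x, v in enumerate(low) if v == (1.0, 1.0)},
      {x for x, v in enumerate(up) if v == (1.0, 1.0)})  # prints {0, 1} {0, 1, 2, 3}
```

Keeping both versions makes it easy to confirm numerically that (17) is just (14)–(15) specialized to crisp X.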

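Proposition 1. Let $(U, \tilde R)$ be a VAS. If $\tilde R$ degenerates into an equivalence relation, the vague rough approximation sets $\underline{\tilde R}(X)$ and $\overline{\tilde R}(X)$ are equivalent to the Pawlak approximation sets $\underline{R}(X)$ and $\overline{R}(X)$, respectively.

Proof. If $\tilde R$ is an equivalence relation, then for any x, y ∈ U, $\tilde R(x,y) = [1,1]$ or $\tilde R(x,y) = [0,0]$, i.e.,

$$[t_{[x]_{\tilde R}}(y), 1 - f_{[x]_{\tilde R}}(y)] = \begin{cases} [1,1], & y \in [x]_{\tilde R},\\ [0,0], & y \notin [x]_{\tilde R}. \end{cases}$$

By (14), in the form (17), $\mu_{\underline{\tilde R}(X)}(x) = [1,1]$ if no y ∉ X belongs to $[x]_{\tilde R}$ (then $f_{\tilde R}(x,y) = 1$ and $t_{\tilde R}(x,y) = 0$ for every y ∉ X), and $\mu_{\underline{\tilde R}(X)}(x) = [0,0]$ otherwise. Hence $\underline{\tilde R}(X) = \{x \in U \mid [x]_{\tilde R} \subseteq X\}$, so $\underline{\tilde R}(X)$ is equivalent to $\underline{R}(X)$.

Similarly, $\overline{\tilde R}(X)$ is equivalent to $\overline{R}(X)$. □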

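Theorem 1. Let $(U, \tilde R)$ be a VAS and X, Y ⊆ U. If $\tilde R$ is a vague similarity relation, the vague-rough set has the following algebraic properties:

(1) $\underline{\tilde R}(X \cap Y) = \underline{\tilde R}(X) \cap \underline{\tilde R}(Y)$;

(2) $\overline{\tilde R}(X \cup Y) = \overline{\tilde R}(X) \cup \overline{\tilde R}(Y)$;

(3) $X \subseteq Y \Rightarrow \underline{\tilde R}(X) \subseteq \underline{\tilde R}(Y)$;

(4) $X \subseteq Y \Rightarrow \overline{\tilde R}(X) \subseteq \overline{\tilde R}(Y)$;

(5) $\underline{\tilde R}(X \cup Y) \supseteq \underline{\tilde R}(X) \cup \underline{\tilde R}(Y)$;

(6) $\overline{\tilde R}(X \cap Y) \subseteq \overline{\tilde R}(X) \cap \overline{\tilde R}(Y)$.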

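Theorem 2. Let $(U, \tilde R)$ be a VAS and X, Y ⊆ U. If $\tilde R$ is a vague equivalence relation, the vague-rough set has the following algebraic properties:

(1) $\underline{\tilde R}(X \cap Y) = \underline{\tilde R}(X) \cap \underline{\tilde R}(Y)$;

(2) $\overline{\tilde R}(X \cup Y) = \overline{\tilde R}(X) \cup \overline{\tilde R}(Y)$;

(3) $X \subseteq Y \Rightarrow \underline{\tilde R}(X) \subseteq \underline{\tilde R}(Y)$;

(4) $X \subseteq Y \Rightarrow \overline{\tilde R}(X) \subseteq \overline{\tilde R}(Y)$;

(5) $\underline{\tilde R}(X \cup Y) \supseteq \underline{\tilde R}(X) \cup \underline{\tilde R}(Y)$;

(6) $\overline{\tilde R}(X \cap Y) \subseteq \overline{\tilde R}(X) \cap \overline{\tilde R}(Y)$;

(7) $\underline{\tilde R}(X) \subseteq X \subseteq \overline{\tilde R}(X)$;

(8) $\underline{\tilde R}(\underline{\tilde R}(X)) = \underline{\tilde R}(X)$;

(9) $\overline{\tilde R}(\overline{\tilde R}(X)) = \overline{\tilde R}(X)$.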
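Properties (1)–(7) can be spot-checked numerically for crisp X and Y. The sketch below is a randomized sanity check, not a proof: it assumes the nested-list representation `R[x][y] = (t, 1 - f)`, draws random reflexive, symmetric relations (reflexivity is what property (7) relies on), and evaluates the approximations with (17). The idempotence properties (8) and (9) are not checked, and all helper names are hypothetical.

```python
import random

# R[x][y] = (t, u) with u = 1 - f; approximations of crisp sets via Eq. (17).


def lower(R, X):
    out = []
    for row in R:
        outside = [row[y] for y in range(len(R)) if y not in X]
        out.append((min((1.0 - u for _, u in outside), default=1.0),
                    min((1.0 - t for t, _ in outside), default=1.0)))
    return out


def upper(R, X):
    return [(max((row[y][0] for y in X), default=0.0),
             max((row[y][1] for y in X), default=0.0)) for row in R]


def meet(A, B):  # vague intersection (Definition 4), elementwise
    return [(min(a[0], b[0]), min(a[1], b[1])) for a, b in zip(A, B)]


def join(A, B):  # vague union (Definition 3), elementwise
    return [(max(a[0], b[0]), max(a[1], b[1])) for a, b in zip(A, B)]


def subset(A, B):  # vague containment (Definition 1)
    return all(a[0] <= b[0] and a[1] <= b[1] for a, b in zip(A, B))


def as_crisp(S, n):  # Eq. (16)
    return [(1.0, 1.0) if y in S else (0.0, 0.0) for y in range(n)]


def random_similarity(n, rng):
    """A random reflexive, symmetric vague relation with t <= u <= 1."""
    R = [[(1.0, 1.0)] * n for _ in range(n)]
    for x in range(n):
        for y in range(x + 1, n):
            t = rng.random()
            u = t + rng.random() * (1.0 - t)
            R[x][y] = R[y][x] = (t, u)
    return R


def check_properties(seed=0, trials=200, n=6):
    rng = random.Random(seed)
    for _ in range(trials):
        R = random_similarity(n, rng)
        X = {y for y in range(n) if rng.random() < 0.5}
        Y = {y for y in range(n) if rng.random() < 0.5}
        assert lower(R, X & Y) == meet(lower(R, X), lower(R, Y))          # (1)
        assert upper(R, X | Y) == join(upper(R, X), upper(R, Y))          # (2)
        assert subset(lower(R, X), lower(R, X | Y))                       # (3)
        assert subset(upper(R, X & Y), upper(R, X))                       # (4)
        assert subset(join(lower(R, X), lower(R, Y)), lower(R, X | Y))    # (5)
        assert subset(upper(R, X & Y), meet(upper(R, X), upper(R, Y)))    # (6)
        assert subset(lower(R, X), as_crisp(X, n))                        # (7), left
        assert subset(as_crisp(X, n), upper(R, X))                        # (7), right
    return True


print(check_properties())  # prints True
```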

From Theorems 1 and 2, we conclude that the operations of intersection, union and containment between vague rough sets differ from the corresponding operations on vague sets in Section 2. Because a vague-rough set is defined on a special knowledge space (the approximation space), it has many properties that can contribute to knowledge acquisition and that a pure vague set lacks. Next, we use the vague-rough set model to study approaches of attribute reduction and knowledge discovery in a VDIS.

4. Attribute reductions and knowledge acquisition in VDIS

4.1. Vague rough approximations in VDIS

Denote by S = (U, A) a Vague Information System (VIS), where U is a finite nonempty set of objects called a universe of discourse and A is a finite nonempty set of attributes. For any $a_i \in A$, $a_i: U \to V_{a_i}$, where $V_{a_i}$ is called the value domain of $a_i$ and can be represented as a set of linguistic terms $V_{a_i} = \{v_{i1}, v_{i2}, \ldots, v_{im}\}$. Each $v_{ij} \in V_{a_i}$ is a vague set of U. For any x ∈ U, $a_i(x)$ indicates the value of x with respect to $a_i$, which can be represented as a vague set of the universe of discourse $V_{a_i}$: $a_i(x) = \mu_{v_{i1}}(x)/v_{i1} + \mu_{v_{i2}}(x)/v_{i2} + \cdots + \mu_{v_{im}}(x)/v_{im}$, where $\mu_{v_{ij}}(x)$ is the (vague) grade of membership of x in $v_{ij}$.

If A = C ∪ {d} and C ∩ {d} = ∅, where C is a finite non-empty set of condition attributes and d is a decision attribute, the VIS is called a VDIS. Obviously, if for every x ∈ U and every $a_i \in C$ there is a term $v_{i1} \in V_{a_i}$ with $a_i(x)(v_{i1}) = [1,1]$ and $a_i(x)(v_{i2}) = [0,0]$ for all $v_{i2} \in V_{a_i} - \{v_{i1}\}$, then the VDIS degenerates into a Pawlak information system.

We now study the approximation sets in a VDIS, based on the discussion of fuzzy approximations in [31].

Definition 8 (Approximations in VDIS). Let S = (U, C ∪ {d}) be a VDIS. For any x, y ∈ U and a ∈ C, a vague relation $\tilde a$ on a is defined by

$$\tilde a(x,y) = \left[\sup_{v\in V_a} \min(t_v(x), t_v(y)),\ \sup_{v\in V_a} \min(1 - f_v(x), 1 - f_v(y))\right]. \tag{18}$$

Therefore, for any B ⊆ A, a vague relation $\tilde B$ on B can be induced:

$$\tilde B(x,y) = \left[\inf_{a\in B} \sup_{v\in V_a} \min(t_v(x), t_v(y)),\ \inf_{a\in B} \sup_{v\in V_a} \min(1 - f_v(x), 1 - f_v(y))\right]. \tag{19}$$

Example 2. Table 1 is a VDIS that extends a fuzzy decision table in [29], with some instances modified according to [30]. It has four condition attributes C = {Outlook (a1), Temperature (a2), Humidity (a3), Wind (a4)} and a decision attribute Play Tennis (d). Each condition attribute has linguistic terms, i.e., $V_{a_1}$ = {Sunny ($v_{11}$), Cloudy ($v_{12}$), Rain ($v_{13}$)}, $V_{a_2}$ = {Hot ($v_{21}$), Mild ($v_{22}$), Cool ($v_{23}$)}, $V_{a_3}$ = {Humid ($v_{31}$), Normal ($v_{32}$)}, and $V_{a_4}$ = {Windy ($v_{41}$), Not-windy ($v_{42}$)}. The decision attribute d has two values, i.e., $V_d$ = {No (0), Yes (1)}. The value $a_1(x_1)$ can be represented as a vague set of the universe of discourse $V_{a_1}$, i.e., $a_1(x_1) = [0.8,0.9]/v_{11} + [0.1,0.3]/v_{12} + [0,0]/v_{13}$. Likewise, $v_{11}$ is a vague set of U, which can be represented as $v_{11} = [0.8,0.9]/x_1 + [0.8,1]/x_2 + \cdots + [0,0]/x_{14}$.

From Table 1, for attribute a3 we have

$$\tilde a_3(x_1,x_2) = \left[\sup\{\min\{0.8,0.8\}, \min\{0.2,0.3\}\},\ \sup\{\min\{1,1\}, \min\{0.3,0.4\}\}\right] = [0.8, 1].$$

For attribute a4 we have

$$\tilde a_4(x_1,x_2) = \left[\sup\{\min\{0.4,0.7\}, \min\{0.6,0.4\}\},\ \sup\{\min\{0.5,0.9\}, \min\{0.7,0.5\}\}\right] = [0.4, 0.5].$$

Therefore, for the attribute set M = {a3, a4}, we obtain

$$\tilde M(x_1,x_2) = \left[\inf\{0.8, 0.4\},\ \inf\{1, 0.5\}\right] = [0.4, 0.5].$$
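Equations (18) and (19) can be reproduced on the two objects used in Example 2. In the Python sketch below, `TABLE1_EXCERPT` copies the Humidity and Wind columns of x1 and x2 from Table 1 as `(t, 1 - f)` pairs; the dictionary layout and the function names are assumptions made for illustration.

```python
# Vague values are (t, u) intervals with u = 1 - f.
# Excerpt of Table 1: attribute -> object -> {linguistic term: vague value}.
TABLE1_EXCERPT = {
    "a3": {"x1": {"Humid": (0.8, 1.0), "Normal": (0.2, 0.3)},
           "x2": {"Humid": (0.8, 1.0), "Normal": (0.3, 0.4)}},
    "a4": {"x1": {"Windy": (0.4, 0.5), "Not-windy": (0.6, 0.7)},
           "x2": {"Windy": (0.7, 0.9), "Not-windy": (0.4, 0.5)}},
}


def attribute_relation(table, a, x, y):
    """Eq. (18): sup over the terms v of a of min(t_v(x), t_v(y)) and of min(1 - f_v(x), 1 - f_v(y))."""
    vx, vy = table[a][x], table[a][y]
    t = max(min(vx[v][0], vy[v][0]) for v in vx)
    u = max(min(vx[v][1], vy[v][1]) for v in vx)
    return (t, u)


def subset_relation(table, B, x, y):
    """Eq. (19): componentwise infimum of Eq. (18) over the attributes in B."""
    rels = [attribute_relation(table, a, x, y) for a in B]
    return (min(t for t, _ in rels), min(u for _, u in rels))


print(attribute_relation(TABLE1_EXCERPT, "a3", "x1", "x2"),
      attribute_relation(TABLE1_EXCERPT, "a4", "x1", "x2"),
      subset_relation(TABLE1_EXCERPT, ["a3", "a4"], "x1", "x2"))
# prints (0.8, 1.0) (0.4, 0.5) (0.4, 0.5)
```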

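Let S = (U, C ∪ {d}) be a VDIS. For any B ⊆ A and X ⊆ U, $\tilde B$ is a vague relation on U. Using (17), the membership functions of the lower approximation $\underline{\tilde B}(X)$ and the upper approximation $\overline{\tilde B}(X)$ in a VDIS, denoted by $\mu_{\underline{\tilde B}(X)}(x)$ and $\mu_{\overline{\tilde B}(X)}(x)$ respectively, are defined as

$$\begin{cases} \mu_{\underline{\tilde B}(X)}(x) = \left[\inf_{y\notin X} f_{\tilde B}(x,y),\ \inf_{y\notin X} \left(1 - t_{\tilde B}(x,y)\right)\right],\\ \mu_{\overline{\tilde B}(X)}(x) = \left[\sup_{y\in X} t_{\tilde B}(x,y),\ \sup_{y\in X} \left(1 - f_{\tilde B}(x,y)\right)\right]. \end{cases} \tag{20}$$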

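Proposition 2. Let S = (U, C ∪ {d}) be a VDIS and x, y ∈ U. If a(x) ⊆ a(y) for every a ∈ C, then $\tilde C(x,z) \subseteq \tilde C(y,z)$ for all z ∈ U.

Proof. For all z ∈ U and a ∈ C, we have

$$\tilde a(x,z) = \left[\sup_{v\in V_a} \min(t_v(x), t_v(z)),\ \sup_{v\in V_a} \min(1 - f_v(x), 1 - f_v(z))\right]$$

and

$$\tilde a(y,z) = \left[\sup_{v\in V_a} \min(t_v(y), t_v(z)),\ \sup_{v\in V_a} \min(1 - f_v(y), 1 - f_v(z))\right].$$

Since a(x) ⊆ a(y), for any v ∈ V_a we obtain $t_v(x) \le t_v(y)$ and $1 - f_v(x) \le 1 - f_v(y)$. Because min and sup are monotone, $\tilde a(x,z) \subseteq \tilde a(y,z)$. Finally, since $\tilde C(x,z) = \inf_{a\in C} \tilde a(x,z)$ and $\tilde C(y,z) = \inf_{a\in C} \tilde a(y,z)$, we have $\tilde C(x,z) \subseteq \tilde C(y,z)$. □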

4.2. Attribute reduction in VDIS

One fundamental aspect of rough sets for knowledge acquisition is the search for particular subsets of condition attributes that provide the same quality of classification as the original set. Such subsets are called reducts. To facilitate our discussion, we first introduce the notion of attribute reduction in a VDIS.

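Let S = (U, C ∪ {d}) be a VDIS and $U/\{d\} = \{D_1, D_2, \ldots, D_{|V_d|}\}$. Writing $\mu_{\underline{\tilde C}(D_k)}(x) = [t_{D_k}(x), 1 - f_{D_k}(x)]$ ($k = 1, 2, \ldots, |V_d|$), we denote

$$\begin{cases} \xi_C(x) = \left(\mu_{\underline{\tilde C}(D_1)}(x), \mu_{\underline{\tilde C}(D_2)}(x), \ldots, \mu_{\underline{\tilde C}(D_{|V_d|})}(x)\right),\\ \gamma_C(x) = \left\{D_k \mid t_{D_k}(x) - f_{D_k}(x) = \max_{1\le l\le |V_d|} \left(t_{D_l}(x) - f_{D_l}(x)\right),\ k = 1, 2, \ldots, |V_d|\right\}. \end{cases} \tag{21}$$

$\xi_C(x)$ and $\gamma_C(x)$ are called the lower approximation distribution and the maximum lower approximation distribution of x with respect to d of C, respectively.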
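A possible implementation of (20) and (21) is sketched below in Python, assuming $\tilde C$ has already been computed with (19) and is stored as `R[x][y] = (t, 1 - f)`. Ties in $\gamma_C$ are resolved with a small tolerance, which is our choice; the 3-object relation and labels in the usage lines are hypothetical.

```python
# R[x][y] = (t, u) with u = 1 - f is the relation induced by C (Eq. (19));
# labels[x] is the decision value of object x.


def lower_of_class(R, D):
    """Eq. (20), lower part, for a decision class D given as a set of object indices."""
    out = []
    for row in R:
        outside = [row[y] for y in range(len(R)) if y not in D]
        out.append((min((1.0 - u for _, u in outside), default=1.0),
                    min((1.0 - t for t, _ in outside), default=1.0)))
    return out


def distributions(R, labels):
    """Return (classes, xi, gamma) following Eq. (21)."""
    classes = sorted(set(labels))
    lowers = {k: lower_of_class(R, {x for x, lab in enumerate(labels) if lab == k})
              for k in classes}
    xi, gamma = [], []
    for x in range(len(R)):
        xi.append(tuple(lowers[k][x] for k in classes))
        # score = t_{D_k}(x) - f_{D_k}(x), where f = 1 - (upper end of the interval)
        score = {k: lowers[k][x][0] - (1.0 - lowers[k][x][1]) for k in classes}
        best = max(score.values())
        gamma.append({k for k in classes if abs(score[k] - best) <= 1e-12})
    return classes, xi, gamma


# Hypothetical 3-object example: objects 0 and 1 have decision 0, object 2 has decision 1.
R = [[(1.0, 1.0), (0.8, 0.9), (0.1, 0.2)],
     [(0.8, 0.9), (1.0, 1.0), (0.3, 0.4)],
     [(0.1, 0.2), (0.3, 0.4), (1.0, 1.0)]]
labels = [0, 0, 1]
classes, xi, gamma = distributions(R, labels)
print(gamma)  # prints [{0}, {0}, {1}]
```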

Definition 9 (Reductions in VDIS). Let S = (U, C ∪ {d}) be a VDIS. An attribute subset B ⊆ C is referred to as an attribute reduction in the VDIS if and only if $\xi_B(x) = \xi_C(x)$ for all x ∈ U, and for every b ∈ B there is some x ∈ U with $\xi_{B - \{b\}}(x) \ne \xi_C(x)$.

By Definition 9, a reduct B is a minimal attribute subset of C that preserves, for every x ∈ U, the lower approximation distribution of x with respect to the decision d; it thus allows the condition attributes C to be reduced without changing this distribution.

In real situations, it may be inconvenient to verify $\xi_B(x) = \xi_C(x)$ and $\xi_{B - \{b\}}(x) \ne \xi_C(x)$ directly for every x in U and every candidate subset B. Hence, we introduce the notion of a discernibility matrix as a tool for discussing and analyzing attribute reductions in a VDIS.

Definition 10 (Discernibility matrix in VDIS). Let $M_d$ be a discernibility matrix in a VDIS with U = {x1, x2, ..., xn}. The element of $M_d$ in row i and column j, denoted by $M_d(i,j)$, is defined as

$$M_d(i,j) = \begin{cases} g(x_i,x_j), & \text{if } \omega(x_i,x_j),\\ \emptyset, & \text{otherwise}, \end{cases} \tag{22}$$

where $g(x_i,x_j) = \{a \in C \mid t_{\tilde a}(x_i,x_j) - f_{\tilde a}(x_i,x_j) \le 0\}$ collects the condition attributes whose vague relation gives no more evidence for the similarity of $x_i$ and $x_j$ than against it, and $\omega(x_i,x_j)$ holds if one of the following conditions is satisfied:

(1) $|\xi_C(x_i)| \ne \emptyset$ and $|\xi_C(x_j)| = \emptyset$;

(2) $|\xi_C(x_i)| = \emptyset$ and $|\xi_C(x_j)| \ne \emptyset$;

(3) $|\xi_C(x_i)| \ne \emptyset$, $|\xi_C(x_j)| \ne \emptyset$ and $|\xi_C(x_i)| \ne |\xi_C(x_j)|$.

Here $|\xi_C(x_i)| = \{D_k \mid t_{D_k}(x_i) - f_{D_k}(x_i) > 0\}$, where $\mu_{\underline{\tilde C}(D_k)}(x_i) = [t_{D_k}(x_i), 1 - f_{D_k}(x_i)]$ ($k = 1, 2, \ldots, |V_d|$).

Obviously, the discernibility matrix is an n × n matrix, where n is the number of objects in the VDIS. Its properties are:

(1) $M_d(i,j) = M_d(j,i)$;

(2) $M_d(i,i) = \emptyset$.
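Definition 10 translates directly into code. The Python sketch assumes hypothetical inputs: per-attribute relations `rel[a]` computed with (18), and, for each object, the set `pos[i]` of classes with $t_{D_k}(x_i) - f_{D_k}(x_i) > 0$. Only the upper triangle is computed and then mirrored, which relies on property (1).

```python
from itertools import combinations

# rel[a][i][j] = (t, u) with u = 1 - f: the vague relation of attribute a (Eq. (18)).
# pos[i] = |xi_C(x_i)|: the classes D_k with t_{D_k}(x_i) - f_{D_k}(x_i) > 0.


def omega(pi: frozenset, pj: frozenset) -> bool:
    """Conditions (1)-(3) of Definition 10."""
    return ((bool(pi) and not pj) or (not pi and bool(pj))
            or (bool(pi) and bool(pj) and pi != pj))


def discernibility_matrix(rel: dict, pos: list) -> list:
    n = len(pos)
    M = [[frozenset() for _ in range(n)] for _ in range(n)]
    for i, j in combinations(range(n), 2):
        if omega(pos[i], pos[j]):
            # g(x_i, x_j): attributes with t - f <= 0 for the pair (x_i, x_j)
            g = frozenset(a for a, R in rel.items() if R[i][j][0] - (1.0 - R[i][j][1]) <= 0)
            M[i][j] = M[j][i] = g  # property (1): symmetry
    return M  # property (2): the diagonal stays empty


def symmetric(n, pairs):
    """Hypothetical helper: reflexive, symmetric relation from its upper-triangle entries."""
    R = [[(1.0, 1.0)] * n for _ in range(n)]
    for (i, j), v in pairs.items():
        R[i][j] = R[j][i] = v
    return R


# Hypothetical 3-object, 2-attribute example.
rel = {"a1": symmetric(3, {(0, 1): (0.2, 0.3), (0, 2): (0.9, 1.0), (1, 2): (0.1, 0.4)}),
       "a2": symmetric(3, {(0, 1): (0.7, 0.9), (0, 2): (0.1, 0.2), (1, 2): (0.6, 0.8)})}
pos = [frozenset({0}), frozenset({1}), frozenset()]
M = discernibility_matrix(rel, pos)
print(sorted(M[0][1]), sorted(M[0][2]), sorted(M[1][2]))  # prints ['a1'] ['a2'] ['a1']
```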

Proposition 3. Let S = (U, C ∪ {d}) be a VDIS. If the vague condition-attribute space degenerates into the crisp data space, the discernibility matrix in the VDIS is equivalent to Skowron's discernibility matrix in Pawlak decision information systems.

Proof. If the vague condition-attribute space degenerates into the crisp data space, then for each $a_i \in C$ and each object x there is a term $v_{ik} \in V_{a_i}$ with $\mu_{v_{ik}}(x) = [1,1]$ and $\mu_{v_{il}}(x) = [0,0]$ for all $v_{il} \in V_{a_i} - \{v_{ik}\}$, so the VDIS degenerates into a Pawlak decision information system. Then $\omega(x_i,x_j)$ degenerates into the following conditions:

(1) $x_i \in POS_C(\{d\})$ and $x_j \notin POS_C(\{d\})$;

(2) $x_i \notin POS_C(\{d\})$ and $x_j \in POS_C(\{d\})$;

(3) $x_i, x_j \in POS_C(\{d\})$ and $(x_i, x_j) \notin IND(\{d\})$.

According to the definition of the discernibility matrix in [27], since $\omega(x_i,x_j)$ then reduces to one of the above conditions, $M_d$ is Skowron's discernibility matrix. □

Definition 11 (Discernibility function). Let S = (U, C ∪ {d}) be a VDIS. The Boolean function $L = \bigwedge_{x_i,x_j\in U,\ M_d(i,j)\ne\emptyset} \left(\bigvee_{a\in M_d(i,j)} a\right)$ is called the discernibility function of the VDIS.

Theorem 3. Each conjunctive term of the minimal disjunctive normal form of L is a reduction set in a VDIS.

Proof. Suppose one clause of the discernibility function is $a_1 \vee a_2$, while another clause is $a_1 \vee a_2 \vee a_3$. Obviously, the second clause is satisfied whenever the first one is (by either $a_1$ or $a_2$), so $a_3$ is not required and the clause $a_1 \vee a_2 \vee a_3$ can be removed. After all such clauses are absorbed, L is reduced to a disjunction of conjunctions, i.e., a minimal disjunctive normal form, and each conjunctive term of this form corresponds to a reduct set. □

Proposition 4. Let S = (U, C ∪ {d}) be a VDIS. If the vague condition-attribute space degenerates into the crisp data space, the reducts in the VDIS are equivalent to those in Pawlak information systems.

Proof. If the vague condition-attribute space degenerates into the crisp data space, then by Proposition 3 and Definition 11 the discernibility function L is equivalent to the Skowron discernibility function in [32]. Thus, the minimal disjunctive normal form of L in the VDIS is equivalent to that in Pawlak information systems, and by Theorem 3 the conclusion holds. □

Table 1
A VDIS for the Saturday morning play tennis problem.

Column groups: Outlook (a1) = v11–v13; Temperature (a2) = v21–v23; Humidity (a3) = v31–v32; Wind (a4) = v41–v42.

| U | Sunny (v11) | Cloudy (v12) | Rain (v13) | Hot (v21) | Mild (v22) | Cool (v23) | Humid (v31) | Normal (v32) | Windy (v41) | Not-windy (v42) | Play tennis (d) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| x1 | [0.8,0.9] | [0.1,0.3] | [0,0] | [1,1] | [0,0] | [0,0] | [0.8,1] | [0.2,0.3] | [0.4,0.5] | [0.6,0.7] | No (0) |
| x2 | [0.8,1] | [0.2,0.3] | [0,0] | [0.5,0.7] | [0.3,0.4] | [0.1,0.2] | [0.8,1] | [0.3,0.4] | [0.7,0.9] | [0.4,0.5] | No (0) |
| x3 | [0.1,0.2] | [0.7,0.9] | [0.2,0.4] | [0.9,1] | [0.2,0.3] | [0,0] | [0.7,0.9] | [0.4,0.5] | [0.2,0.3] | [0.8,0.9] | Yes (1) |
| x4 | [0,0] | [0.1,0.2] | [0.9,1] | [0.2,0.4] | [0.8,0.9] | [0,0] | [0.6,0.9] | [0.2,0.5] | [0.3,0.4] | [0.7,0.9] | Yes (1) |
| x5 | [0,0] | [0.2,0.4] | [0.7,0.9] | [0,0] | [0.3,0.5] | [0.7,0.9] | [0.5,0.6] | [0.5,0.7] | [0.5,0.5] | [0.5,0.6] | Yes (1) |
| x6 | [0,0] | [0.3,0.5] | [0.7,0.8] | [0,0] | [0.3,0.4] | [0.8,0.9] | [0.3,0.5] | [0.7,0.9] | [0.6,0.7] | [0.4,0.5] | No (0) |
| x7 | [0.1,0.2] | [0.7,0.8] | [0.1,0.3] | [0,0] | [0.2,0.3] | [0.7,0.9] | [0.3,0.5] | [0.7,0.9] | [0.9,1] | [0.1,0.2] | Yes (1) |
| x8 | [0.7,0.9] | [0.2,0.3] | [0,0] | [0.1,0.3] | [0.7,0.9] | [0.1,0.2] | [0.8,1] | [0.2,0.4] | [0.2,0.4] | [0.8,0.9] | No (0) |
| x9 | [0.9,1] | [0.1,0.5] | [0,0] | [0,0] | [0.1,0.3] | [0.9,1] | [0.3,0.5] | [0.7,0.9] | [0.3,0.4] | [0.7,0.9] | Yes (1) |
| x10 | [0,0] | [0.2,0.3] | [0.7,0.9] | [0.1,0.3] | [0.7,0.9] | [0.1,0.2] | [0.4,0.6] | [0.6,0.9] | [0.3,0.5] | [0.7,0.9] | Yes (1) |
| x11 | [0.8,1] | [0.2,0.2] | [0,0] | [0.1,0.3] | [0.9,1] | [0,0] | [0.2,0.4] | [0.6,0.8] | [0.8,0.9] | [0.2,0.4] | Yes (1) |
| x12 | [0.2,0.3] | [0.6,0.9] | [0.1,0.3] | [0.2,0.5] | [0.8,0.9] | [0,0] | [0.7,0.8] | [0.3,0.4] | [0.7,0.8] | [0.3,0.6] | Yes (1) |
| x13 | [0.2,0.3] | [0.8,1] | [0.1,0.1] | [0.8,0.9] | [0.2,0.3] | [0,0] | [0.2,0.5] | [0.8,0.9] | [0.2,0.4] | [0.8,1] | Yes (1) |
| x14 | [0,0] | [0.1,0.2] | [0.9,1] | [0.5,0.5] | [0.5,0.6] | [0,0] | [0.9,1] | [0.1,0.2] | [0.8,0.9] | [0.2,0.3] | No (0) |

Algorithm 1. Attribute reductions based on the discernibility matrix in the VDIS.

Input: VDIS = (U, C ∪ {d}), where C = {a_i | i = 1, 2, ..., |C|};

Output: Attribute reductions in the VDIS.

Step 1. Compute the discernibility matrix $M_d$ in the VDIS;

Step 2. Compute $L_{ij} = \bigvee_{a_i \in M_d(i,j)} a_i$ for every $M_d(i,j) \ne \emptyset$;

Step 3. Compute the discernibility function $L = \bigwedge_{i,j} L_{ij}$;

Step 4. Convert L into its minimal disjunctive normal form $L = \bigvee_i L'_i$ (applying absorption as in the proof of Theorem 3);

Step 5. Each item $L'_i$ of L corresponds to an attribute reduction.

Through Algorithm 1, all attribute reduction sets that preserve the lower approximation distribution can be found.
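Steps 2–5 of Algorithm 1 amount to simplifying a Boolean formula in conjunctive normal form. The Python sketch below applies absorption and then expands to disjunctive normal form by brute force, which is exponential in the number of clauses and only meant for small examples. The sample entries are hypothetical and were chosen so that the simplified function is $a_1 \wedge a_4 \wedge (a_2 \vee a_3)$, the form reported in Section 5; the actual entries of Fig. 1 are not reproduced here.

```python
from itertools import product


def reducts(clauses):
    """Steps 3-5 of Algorithm 1 for L = AND of ORs.

    `clauses` are the non-empty entries M_d(i, j), each read as a disjunction of attributes.
    """
    cnf = {frozenset(c) for c in clauses if c}
    # Absorption (proof of Theorem 3): a clause that contains another clause is redundant.
    cnf = [c for c in cnf if not any(other < c for other in cnf)]
    # Step 4: distribute AND over OR; each choice of one attribute per clause is a conjunction.
    terms = {frozenset(choice) for choice in product(*cnf)}
    # Keep only minimal conjunctions; by Theorem 3 each one is a reduct (Step 5).
    minimal = [t for t in terms if not any(other < t for other in terms)]
    return sorted(minimal, key=sorted)


# Hypothetical non-empty matrix entries; {a1, a2} and {a2, a3, a4} are absorbed.
entries = [{"a1"}, {"a4"}, {"a2", "a3"}, {"a1", "a2"}, {"a2", "a3", "a4"}]
print([sorted(r) for r in reducts(entries)])  # prints [['a1', 'a2', 'a4'], ['a1', 'a3', 'a4']]
```

For larger tables, a dedicated Boolean minimization routine would be preferable to the full product expansion used here.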

4.3. Vague decision rules in a VDIS

Let S = (U, C ∪ {d}) be a VDIS and B (B ⊆ C) an attribute reduction. For any x ∈ U, assume that the maximum lower approximation distribution is $\gamma_B(x) = \{D_1, D_2, \ldots, D_r\}$ ($1 \le r \le |V_d|$). Then the knowledge hidden in the VDIS may be extracted in the form of decision rules

$$\mu \to d \quad (conf, supp), \tag{23}$$

where $\mu = \bigwedge_{a\in B} (a = \tau(v))$, $\tau(v) = \{v \in V_a \mid t_v(N_B(x)) - f_v(N_B(x)) \ge 0\}$, $N_B(x) = \{y \in U \mid t_{\tilde B}(x,y) - f_{\tilde B}(x,y) > 0\}$, and $d = \gamma_B(x)$.

conf is the confidence degree of the rule, defined as

$$conf = \left[\inf_{v\in B} t_v(N_B(x)),\ \inf_{v\in B} \left(1 - f_v(N_B(x))\right)\right], \tag{24}$$

and supp is the support degree of the rule, defined as

$$supp = \frac{|N_B(x)|}{|U|}. \tag{25}$$
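The neighborhood $N_B(x)$ and the support degree can be sketched in Python as follows, assuming $\tilde B$ is stored as `RB[x][y] = (t, 1 - f)`; the 3-object relation is hypothetical. The division by |U| matches the supports 0.14 ≈ 2/14 and 0.07 ≈ 1/14 reported for the 14 objects in Section 5. The confidence degree (24) is deliberately omitted, because it needs a convention for evaluating $t_v$ on a set of objects that the text leaves implicit.

```python
# RB[x][y] = (t, u) with u = 1 - f is the relation induced by a reduct B (Eq. (19)).


def neighborhood(RB, x):
    """N_B(x) = {y in U | t_B(x, y) - f_B(x, y) > 0}."""
    return {y for y, (t, u) in enumerate(RB[x]) if t - (1.0 - u) > 0}


def support(RB, x):
    """supp = |N_B(x)| / |U|."""
    return len(neighborhood(RB, x)) / len(RB)


# Hypothetical relation on three objects.
RB = [[(1.0, 1.0), (0.8, 0.9), (0.1, 0.2)],
      [(0.8, 0.9), (1.0, 1.0), (0.3, 0.4)],
      [(0.1, 0.2), (0.3, 0.4), (1.0, 1.0)]]
print(neighborhood(RB, 0), round(support(RB, 0), 3))  # prints {0, 1} 0.667
print(round(2 / 14, 2), round(1 / 14, 2))  # neighborhoods of 2 or 1 among 14 objects: prints 0.14 0.07
```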

Proposition 5. Let S = (U, C ∪ {d}) be a VDIS. If the vague condition-attribute space degenerates into the crisp data space, the decision rules in the VDIS are equivalent to the positive region decision rules in Pawlak information systems.

Proof. For a vague decision rule $\mu \to d$, if the vague condition-attribute space of the VDIS degenerates into the crisp data space, then for any x ∈ U, μ denotes the logic formula describing a crisp equivalence class $[x]_\mu$, and d describes a decision class $[x]_d$. Then we obtain $[x]_\mu \subseteq [x]_d$. By the basic definitions of rough decision rules in [33], $\mu \to d$ is a positive decision rule, and the conclusion holds. □

5. An illustration: the Saturday morning tennis-play problem

Using the Saturday morning play tennis problem (see Table 1), we illustrate the knowledge acquisition process of the proposed approaches and their application.

In Table 1, there are 14 instances $x_i$ (i = 1, 2, ..., 14), each described by vague values, and the decision classifies them into two classes. We now apply the proposed approaches for knowledge acquisition.

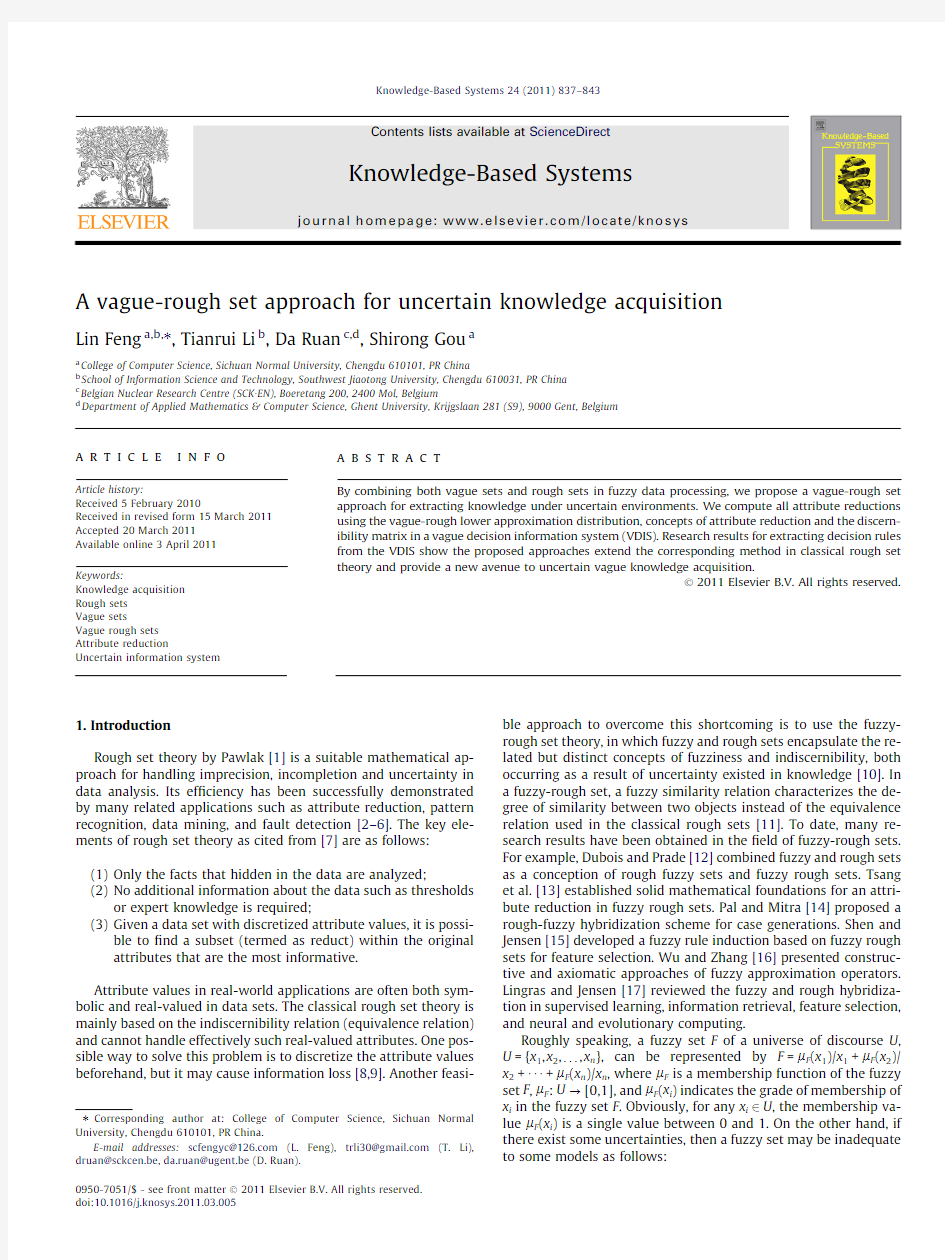

From Table 1, U/{d} = {{x1, x2, x6, x8, x14}, {x3, x4, x5, x7, x9, x10, x11, x12, x13}}. Let D1 = {x1, x2, x6, x8, x14} and D2 = {x3, x4, x5, x7, x9, x10, x11, x12, x13}. Using (21), we compute the maximum lower approximation distribution of Table 1 and list the results in Table 2. By Definition 10, we obtain the discernibility matrix in Fig. 1. We then calculate the discernibility function, which simplifies to $L = a_1 \wedge a_4 \wedge (a_2 \vee a_3)$; its disjunctive normal form is $(a_1 \wedge a_2 \wedge a_4) \vee (a_1 \wedge a_3 \wedge a_4)$. Thus, by Algorithm 1, the reducts of Table 1 are {a1, a2, a4} and {a1, a3, a4}. Let B = {a1, a2, a4} be an attribute reduction. The main decision rules induced from Table 1 are as follows:

R1: If Outlook = "Cloudy" ∧ Temperature = "Hot" ∧ Wind = "Not-windy" then Play tennis = "Yes", with conf = [0.7,0.9] and supp = 0.14;

R2: If Outlook = "Rain" ∧ Temperature = "Mild" ∧ Wind = "Not-windy" then Play tennis = "Yes", with conf = [0.7,0.9] and supp = 0.14;

R3: If Outlook = "Sunny" ∧ Temperature = "Hot" ∧ Wind = "Not-windy" then Play tennis = "No", with conf = [0.6,0.7] and supp = 0.07;

R4: If Outlook = "Sunny" ∧ Temperature = "Hot" ∧ Wind = "Windy" then Play tennis = "No", with conf = [0.5,0.7] and supp = 0.07;

R5: If Outlook = "Sunny" ∧ Temperature = "Cool" ∧ Wind = "Not-windy" then Play tennis = "Yes", with conf = [0.7,0.9] and supp = 0.07.

Each of the vague decision rules above is induced from a vague (imprecise) data set using the proposed vague-rough set theory, and can be used to describe the Saturday morning tennis-play decision policies. Moreover, there are good grounds for keeping a subinterval of [0,1] as the confidence degree of each vague decision rule. According to vague set theory [18], this subinterval keeps track of both the favoring evidence and the opposing evidence. Therefore, the lower and upper bounds of the confidence degree of each vague decision rule can be used to make constrained decisions: we not only have an estimate of how likely each vague decision rule is to hold, but also a lower and an upper bound on this likelihood.

Table 2
The maximum lower approximation distribution of Table 1.

| $x_i$ | x1 | x2 | x3 | x4 | x5 | x6 | x7 | x8 | x9 | x10 | x11 | x12 | x13 | x14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| $\gamma_C(x_i)$ | D1 | D1 | D2 | D2 | D2 | D1 | D2 | D1 | D2 | D2 | D2 | D2 | D2 | D1 |

6. Conclusions and future work

In this paper, we presented a new concept, the vague-rough set, as a generalization of rough sets obtained by combining vague sets and rough sets. Using the vague-rough approximation sets, we proposed the concept of attribute reduction and an approach for attribute reduction based on the discernibility matrix in a VDIS. Thus, the knowledge hidden in a VDIS can be unraveled in the form of decision rules. These results contribute to uncertain knowledge acquisition in vague information systems. In future work, we will focus on the simplification of vague decision rules and on applying the proposed approaches to real-life vague information systems.

Acknowledgments

The authors thank the anonymous referees for their valuable comments. This work is supported in part by the National Natural Science Foundation of PR China under Grant No. 60873108, the Scientific Research Fund of Sichuan Provincial Education Department under Grant No. 09ZC079, the Scientific Research Fund of Sichuan Key Laboratory of Visualization Computing and Virtual Reality under Grant No. J2010N01, and the Key Research Foundation of Sichuan Normal University.

References

[1] Z. Pawlak, Rough sets, International Journal of Computer and Information Sciences 11 (5) (1982) 341–356.

[2] T.R. Li, D. Ruan, W. Geert, J. Song, Y. Xu, A rough set based characteristic relation approach for dynamic attribute generalization in data mining, Knowledge-Based Systems 20 (5) (2007) 485–494.

[3] L. Feng, G.Y. Wang, T.R. Li, Knowledge acquisition from decision tables containing continuous-valued attributes, Acta Electronica Sinica 37 (11) (2009) 2432–2438.

[4] J.S. Mi, Y. Leung, W.Z. Wu, Approaches to attribute reduction in concept lattices induced by axialities, Knowledge-Based Systems 23 (6) (2010) 504–511.

[5] G.L. Liu, Rough set theory based on two universal sets and its applications, Knowledge-Based Systems 23 (2) (2010) 110–115.

[6] P. Guo, Rough set feature extraction by remarkable degrees with real world decision-making problems, Soft Computing, doi:10.1007/s00500-009-0494-1.

[7] H.Y. Wu, Y.Y. Wu, J.P. Luo, An interval type-2 fuzzy rough set model for attribute reduction, IEEE Transactions on Fuzzy Systems 17 (2) (2009) 301–315.

[8] H.S. Nguyen, Discretization problem for rough sets methods, Lecture Notes in Artificial Intelligence 1424 (1998) 545–552.

[9] X.Z. Wang, S.Y. Zhao, J.H. Wang, Simplification of information table with fuzzy-valued attributes based on rough sets, Journal of Computer Research and Development 41 (11) (2004) 1974–1981.

[10] Y.Y. Yao, A comparative study of fuzzy sets and rough sets, Information Sciences 109 (1998) 227–242.

[11] D.S. Yeung, D. Chen, E.C.C. Tsang, J.W.T. Lee, X.Z. Wang, On the generalization of fuzzy rough sets, IEEE Transactions on Fuzzy Systems 13 (3) (2005) 343–361.

[12] D. Dubois, H. Prade, Rough fuzzy sets and fuzzy rough sets, International Journal of General Systems 17 (2) (1990) 191–209.

[13] E.C.C. Tsang, D. Chen, D.S. Yeung, X.Z. Wang, J.W.T. Lee, Attributes reduction using fuzzy rough sets, IEEE Transactions on Fuzzy Systems 16 (5) (2008) 1130–1141.

[14] S.K. Pal, P. Mitra, Case generation using rough sets with fuzzy representation, IEEE Transactions on Knowledge and Data Engineering 16 (3) (2004) 292–300.

[15] Q. Shen, R. Jensen, Selecting informative features with fuzzy-rough sets and its application for complex systems monitoring, Pattern Recognition 37 (2004) 1351–1363.

[16] W.Z. Wu, W.X. Zhang, Constructive and axiomatic approaches of fuzzy approximation operators, Information Sciences 159 (2004) 233–254.

[17] P. Lingras, R. Jensen, Survey of rough and fuzzy hybridization, in: Proceedings of the 16th International Conference on Fuzzy Systems, 2007, pp. 125–130.

[18] W.L. Gau, D.J. Buehrer, Vague sets, IEEE Transactions on Systems, Man and Cybernetics 23 (2) (1993) 610–614.

[19] Y. Liu, G.Y. Wang, L. Feng, A general model for transforming vague sets into fuzzy sets, LNCS Transactions on Computational Science II 5150 (2008) 133–144.

[20] D.H. Hong, K. Chul, A note on similarity measures between vague sets and between elements, Information Sciences 115 (1999) 83–96.

[21] D.Q. Yan, Z.X. Chi, Similarity measure between vague sets, Pattern Recognition and Artificial Intelligence 17 (1) (2004) 22–26.

[22] C.Y. Xu, The universal approximation of a class of vague systems, Chinese Journal of Computers 28 (9) (2005) 1508–1513.

[23] S.M. Chen, Similarity measures between vague sets and between elements, IEEE Transactions on Systems, Man, and Cybernetics, Part B: Cybernetics 27 (1) (1997) 153–158.

[24] S.M. Chen, Measures of similarity between vague sets, Fuzzy Sets and Systems 74 (2) (1995) 217–223.

[25] L. Feng, G.Y. Wang, X.X. Li, Knowledge acquisition in vague objective information systems based on rough sets, Expert Systems 27 (2) (2010) 129–142.

[26] L. Feng, G.Y. Wang, Knowledge acquisition in vague information systems, in: Proceedings of the 2006 International Conference on Artificial Intelligence, 2006, pp. 533–537.

[27] W.X. Zhang, W.Z. Wu, J.Y. Liang, D.Y. Li, Rough Set Theory and Approach, Science Press, Beijing, 2003, pp. 158–168.

[28] M.R. Alicja, R. Leszek, Variable precision fuzzy rough sets, LNCS Transactions on Rough Sets I 3100 (2004) 144–160.

[29] S.M. Chen, S.H. Lee, C.H. Lee, A new method for generating fuzzy rules from numerical data for handling classification problems, Applied Artificial Intelligence 15 (2001) 645–664.

[30] L.Y. Chang, G.Y. Wang, Y. Wu, An approach for attribute reduction and rule generation based on rough set theory, Journal of Software 10 (11) (1999) 1206–1211.

[31] W.Z. Wu, W.X. Zhang, H.Z. Li, Knowledge acquisition in incomplete fuzzy information systems via the rough set approach, Expert Systems 20 (5) (2004) 280–286.

[32] A. Skowron, C. Rauszer, The discernibility matrices and functions in information systems, in: R. Słowiński (Ed.), Intelligent Decision Support: Handbook of Applications and Advances of the Rough Set Theory, Kluwer, Dordrecht, 1993.

[33] Y.Y. Yao, Three-way decision: an interpretation of rules in rough set theory, Lecture Notes in Computer Science 5589 (2009) 642–649, doi:10.1007/978-3-642-02962-2_81.
