Application of particle swarm optimization algorithm in bellows optimum design

Improved particle swarm algorithm in MATLAB

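Improved particle swarm algorithm in MATLAB: overview and explanation

1. Introduction

1.1 Overview

Particle swarm optimization (PSO) is a swarm-intelligence-based optimization algorithm. It searches for the optimal solution by simulating the collective behavior of groups in nature, such as flocks of birds or schools of fish.

It was first proposed by Russell Eberhart and James Kennedy in 1995 and has since been widely applied.

The core idea of PSO is to treat each candidate solution of the problem as a particle in a swarm. The solutions are improved continually through simulated communication and cooperation among the particles, so the swarm gradually converges as it searches for the optimum.

Each particle adjusts and updates itself by remembering two things: its own historical best solution and the global best solution of the whole swarm.

In each iteration, every particle updates its position from its own memory and the global information. This continues until a preset stopping condition is reached.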
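The loop just described can be sketched in a few lines of Python with NumPy. It is only an illustration; the article itself targets MATLAB.

The sketch makes several assumptions that are not part of the article:
- It uses the widely used inertia-weight velocity update v = w·v + c1·r1·(pbest − x) + c2·r2·(gbest − x), with w = 0.7 and c1 = c2 = 1.5.
- The function name `pso_minimize` is hypothetical.
- Clipping particles to the bounds is one simple boundary rule.
- The sphere function stands in as the test objective.

```python
import numpy as np

def pso_minimize(f, lower, upper, n_particles=30, n_iter=200,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimal global-best PSO (illustrative sketch, not the article's code)."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    # Random initial positions inside the box; small random velocities
    # (at most 10% of the box width in each coordinate).
    x = rng.uniform(lower, upper, size=(n_particles, dim))
    v = 0.1 * rng.uniform(-(upper - lower), upper - lower, size=(n_particles, dim))
    # Each particle remembers its own best position (pbest) ...
    pbest = x.copy()
    pbest_val = np.apply_along_axis(f, 1, x)
    # ... and the swarm shares a single global best (gbest).
    g = pbest[np.argmin(pbest_val)].copy()
    for _ in range(n_iter):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (g - x)
        x = np.clip(x + v, lower, upper)   # keep particles inside the bounds
        vals = np.apply_along_axis(f, 1, x)
        improved = vals < pbest_val        # minimization
        pbest[improved] = x[improved]
        pbest_val[improved] = vals[improved]
        g = pbest[np.argmin(pbest_val)].copy()
    return g, pbest_val.min()

sphere = lambda p: float(np.sum(p ** 2))
best_x, best_f = pso_minimize(sphere, [-5, -5, -5], [5, 5, 5])
print(best_x, best_f)
```

A fixed iteration count serves as the stopping condition here. A fitness threshold or a stall test would work equally well.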

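PSO is simple, easy to implement and fast to converge. It is widely used in function optimization, combinatorial optimization, machine learning and other fields.

However, the traditional PSO algorithm also has clear limitations. It tends to become trapped in local optima and is sensitive to parameter settings.

To overcome these limitations, researchers have proposed many improvements, studying everything from the algorithm's underlying ideas to its parameter settings in depth.

This article aims to present the implementation of improved particle swarm algorithms in the MATLAB environment.

It first introduces the traditional particle swarm algorithm in detail, including its principle, algorithm steps, advantages and disadvantages, and fields of application.

It then introduces various improvements to the particle swarm algorithm, including improvement methods 1, 2, 3 and 4.

Finally, it shows the performance and effectiveness of the improved algorithm in practice, through the MATLAB environment configuration and an analysis of experimental results.

The conclusion summarizes the main findings and the limitations of the study, and outlines future research directions.

In summary, this article gives a comprehensive introduction to the principle, algorithm steps, implementation and experimental results of improved particle swarm algorithms. It aims to give readers a detailed guide for understanding and researching the algorithm.

1.2 Article structure

This article consists of the following parts. Part 1 is the introduction. It presents the background and purpose of the article and outlines the principle, advantages and disadvantages of the improved particle swarm algorithms to be introduced.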

Particle swarm optimization: an analysis of the PSO algorithm


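• Initialization: initialize the population by randomly generating each particle's initial position and velocity.

• Evaluation: compute each particle's fitness with the fitness function to judge how good it is.

• Personal best: for each particle, find the best solution it has found so far in its search. This best solution is called Pbest.

• Global best: find the best solution in the whole population. This best solution is called Gbest.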
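The Pbest and Gbest bookkeeping in the last two steps can be illustrated on a tiny example that can be checked by hand.

The sketch rests on three assumptions:
- The problem is a minimization.
- The fitness is the sphere function sum(x²).
- The helper name `update_bests` is hypothetical.

```python
import numpy as np

def update_bests(positions, fitness, pbest, pbest_fit):
    """Update each particle's Pbest, then pick the swarm's Gbest (minimization)."""
    improved = fitness < pbest_fit                      # particles that beat their own record
    pbest = np.where(improved[:, None], positions, pbest)
    pbest_fit = np.where(improved, fitness, pbest_fit)
    g = int(np.argmin(pbest_fit))                       # best of all personal bests
    return pbest, pbest_fit, pbest[g], pbest_fit[g]

# Three 2-D particles; fitness is the sphere function sum(x**2).
positions = np.array([[1.0, 1.0], [0.5, 0.0], [3.0, 0.0]])
fitness = np.sum(positions ** 2, axis=1)                # [2.0, 0.25, 9.0]
pbest = np.array([[2.0, 0.0], [0.0, 1.0], [0.1, 0.1]])
pbest_fit = np.sum(pbest ** 2, axis=1)                  # about [4.0, 1.0, 0.02]
pbest, pbest_fit, gbest, gbest_fit = update_bests(positions, fitness, pbest, pbest_fit)
```

The first two particles improve on their records. The third keeps its old Pbest, because 9.0 is worse than 0.02, and that old Pbest is still the Gbest.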

Swarm Intelligence

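Biologists have found the following about these social species. Each individual has limited intelligence and simple behavior, and there is no centralized command. Yet the group formed by these individuals seems to follow some underlying rule and exhibits remarkably complex and orderly collective behavior.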

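A swarm can be described as a collection of interacting neighboring individuals. Bee colonies, ant colonies and bird flocks are typical examples of swarms.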

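The initial positions and velocities of the particles are generated at random. The particles are then iterated according to formulas (1) and (2) until a satisfactory solution is found.

A commonly used variant of the algorithm divides the whole swarm (global) into several neighboring subswarms that share some of their particles. Each particle then adjusts its position according to the historical best $P_l$ within its own subswarm (local). In other words, $P_{gd}$ in formula (2) is replaced by $P_{ld}$.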
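The text does not say how the overlapping subswarms are formed. One common choice, assumed here, is a ring topology. Each particle's neighborhood is the particle itself plus its k nearest indices on either side, wrapping around the ends.

The neighborhood best $P_l$ returned by the hypothetical helper `ring_local_best` would then replace the global best in the velocity update.

```python
import numpy as np

def ring_local_best(pbest, pbest_fit, k=1):
    """Return P_l for every particle: the best pbest among itself and its k
    neighbours on each side of a ring, so neighbourhoods overlap (minimization)."""
    n = len(pbest_fit)
    lbest = np.empty_like(pbest)
    for i in range(n):
        idx = [(i + j) % n for j in range(-k, k + 1)]   # wrap around the ring
        best = idx[int(np.argmin(pbest_fit[idx]))]
        lbest[i] = pbest[best]
    return lbest

# Five particles on a 1-D problem.
pbest = np.array([[0.0], [1.0], [2.0], [3.0], [4.0]])
pbest_fit = np.array([5.0, 1.0, 4.0, 3.0, 0.5])
lbest = ring_local_best(pbest, pbest_fit)
```

Particle 0 sees particles 4, 0 and 1, so its local best is particle 4's position. Particle 2 sees particles 1, 2 and 3, so its local best is particle 1's position. Because neighborhoods are small, good information spreads more slowly than with a single global best, which is the usual motivation for this variant.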

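• Each candidate solution of the optimization problem is imagined as a bird, also called a "particle". Particle $i$ has position $X_i = (x_{i1}, x_{i2}, \ldots, x_{iD})$ and velocity $V_i = (v_{i1}, v_{i2}, \ldots, v_{iD})$.

[Figure: particle update diagram (not reproduced). Recoverable labels: "x(t): Here I am!", "Study Factor", "My best position (local best) $p_i$", "$p_g$: the best position of the swarm", "inertia vector", "motion vector", and the position update $x_i(t+1) = x_i(t) + v_i(t)$.]

Position $P_g$ is the best (gbest) among all $P_i$ ($i = 1, \ldots, n$). The rate of change of position (velocity) of particle $i$ is the vector $V_i = (v_{i1}, v_{i2}, \ldots, v_{iD})$.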
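A single update with made-up numbers shows how the inertia vector and the pulls toward $p_i$ and $p_g$ add up to the motion vector.

The sketch assumes the common inertia-weight form v_new = w·v + c1·r1·(p_i − x) + c2·r2·(p_g − x). It also fixes r1 and r2 instead of drawing them at random, so the arithmetic can be checked by hand. The freshly computed velocity is then added to the position.

```python
import numpy as np

# One particle in 2-D; all numbers are made up for illustration.
x = np.array([1.0, 2.0])      # current position x_i(t)
v = np.array([0.5, -0.5])     # current velocity
p_i = np.array([2.0, 2.0])    # particle's own best position (pbest)
p_g = np.array([0.0, 4.0])    # swarm's best position (gbest)
w, c1, c2 = 0.5, 2.0, 2.0     # inertia weight and learning ("study") factors
r1, r2 = 0.5, 0.25            # fixed here instead of drawn at random

inertia = w * v                          # [0.25, -0.25]
cognitive = c1 * r1 * (p_i - x)          # [1.0, 0.0]   pull toward pbest
social = c2 * r2 * (p_g - x)             # [-0.5, 1.0]  pull toward gbest
v_new = inertia + cognitive + social     # [0.75, 0.75] the motion vector
x_new = x + v_new                        # [1.75, 2.75]
```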

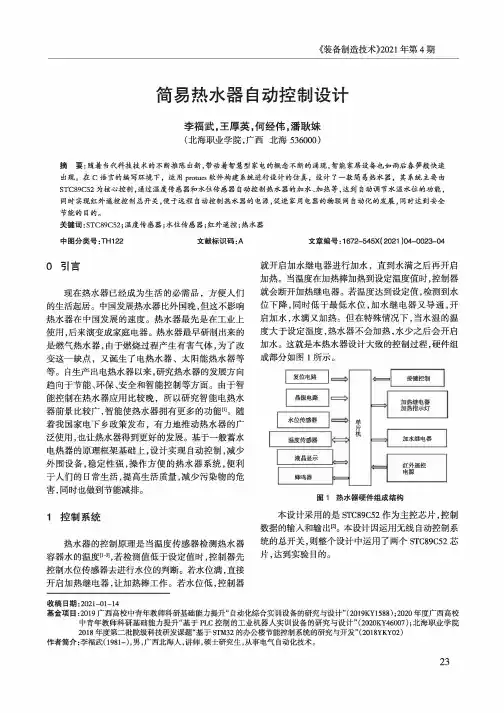

Design of a simple automatic control system for a water heater

[Figure: circuit schematic (not reproduced). Recoverable labels: LCD1 (LM016L) displaying "Temp: 42.00°C", resistor R1 (10k), DS18B20 temperature sensor, water-level indicator (L/H), crystal pins XTAL1/XTAL2, port pins P1.x, and P0.0/AD0 to P0.7/AD7.]

High

High / Low

Heating relay

Off Off On Off On Off Off On

Heating relay / Heating indicator lamp

Off Off Off On Off Off Off Off

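An infrared remote control operates the water heater's main power switch. In this simulated design, the infrared remote-controlled automatic water heater switch was tested on a breadboard circuit. In the experiment, when the infrared remote-control circuit energizes the heater's main-switch relay, the main power indicator lights up (Fig. 7); when power is cut, the main power indicator goes out. This shows that the infrared remote control works and lets users operate the heater safely.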

CLC number: TH122

Document code: A

Article ID: 1672-545X(2021)04-0023-04

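0 Introduction

Water heaters have become a daily necessity that makes life more convenient. China began developing water heaters later than other countries, but this has not slowed their development in China.

Water heaters were first used in industry and later evolved into household appliances. The earliest were gas water heaters. Because combustion produces harmful gases, electric water heaters, solar water heaters and other types were developed to overcome this drawback. Since electric water heaters appeared, research has focused on energy saving, environmental protection, safety and intelligent control.

Intelligent control came to water heaters relatively late, so research on smart electric water heaters has broad prospects; intelligence gives water heaters more functions. China's "home appliances to the countryside" policy strongly promoted the widespread use of water heaters and further advanced their development.

This work builds on the principle framework of an ordinary storage electric water heater. It designs a water heater system with automatic control, fewer peripheral devices, strong stability and easy operation. The aim is to make daily life more convenient, improve quality of life, reduce harm from pollutants, and save energy and cut emissions.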

Application of particle swarm optimization to model the phase equilibrium of

derivative-free optimization algorithms have emerged [5]. Particle swarm optimization (PSO) is a relatively recent population-based stochastic global optimization algorithm [6]. This technique is quite popular within the research community as a problem-design methodology and solver. Its appeal lies in its versatility and its ability to find optimal solutions in complex multimodal search spaces with non-differentiable cost functions [7]. As described by Eberhart and Kennedy, PSO is inspired by the ability of flocks of birds, schools of fish, and herds of animals to adapt to their environment, find rich sources of food, and avoid predators by implementing an information-sharing approach [8]. In PSO, a set of randomly generated solutions propagates in the design space toward the optimum solution over a number of iterations. This propagation is based on a large amount of information about the design space that is assimilated and shared by all members of the swarm [9]. In this work, six binary gas–solid phase systems and five binary vapor–liquid phase systems containing supercritical CO2 were evaluated using a PSO algorithm. The complete program was developed in Matlab. It was used to calculate the binary interaction parameters of complex mixtures by minimizing the difference between calculated and experimental data.

2. Particle swarm optimization
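The fitting step described above amounts to building an objective function that a PSO minimizer can then search. The target is the difference between calculated and experimental data.

The sketch is a hypothetical illustration, not the cited work's method:
- It measures the difference with the average absolute relative deviation (AARD).
- It uses a made-up two-parameter `toy_model` in place of a real phase-equilibrium model with binary interaction parameters.

```python
import numpy as np

def make_objective(model, x_exp, y_exp):
    """Return f(params) = AARD between model predictions and data.
    `model(params, x)` stands in for a phase-equilibrium model whose params
    would be binary interaction parameters (assumption)."""
    x_exp = np.asarray(x_exp, dtype=float)
    y_exp = np.asarray(y_exp, dtype=float)

    def objective(params):
        y_calc = model(params, x_exp)
        return float(np.mean(np.abs((y_calc - y_exp) / y_exp)))

    return objective

# Hypothetical two-parameter model and synthetic "experimental" data.
def toy_model(params, x):
    a, b = params
    return a * x / (1.0 + b * x)

x_exp = np.linspace(0.1, 1.0, 10)
y_exp = toy_model((2.0, 0.5), x_exp)   # data generated with a = 2, b = 0.5
obj = make_objective(toy_model, x_exp, y_exp)
```

Here `obj((2.0, 0.5))` is zero, because these are the generating parameters. `obj((1.0, 0.5))` is about 0.5, because halving `a` halves every prediction. A PSO would search the parameter box for the minimum of `obj`.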

Particle swarm optimization: Developments, applications and resources

Yuhui Shi

EDS Embedded Systems Group, 1401 E. Hoffer Street, Kokomo, IN 46982, USA, Yuhui.Shi@

obtaining free software are included. At the end of the paper, a particle swarm optimization bibliography is presented.

2 Developments

2.1 The Original Version

The particle swarm concept originated as a simulation of a simplified social system. The original intent was to graphically simulate the graceful but unpredictable choreography of a bird flock. Initial simulations were modified to incorporate nearest-neighbor velocity matching, eliminate ancillary variables, and incorporate multidimensional search and acceleration by distance (Kennedy and Eberhart 1995, Eberhart and Kennedy 1995). At some point in the evolution of the algorithm, it was realized that the conceptual model was, in fact, an optimizer. Through a process of trial and error, a number of parameters extraneous to optimization were eliminated from the algorithm, resulting in the very simple original implementation (Eberhart, Simpson and Dobbins 1996).

PSO is similar to a genetic algorithm (GA) in that the system is initialized with a population of random solutions. It is unlike a GA, however, in that each potential solution is also assigned a randomized velocity. The potential solutions, called particles, are then "flown" through the problem space. Each particle keeps track of its coordinates in the problem space which are associated with the best solution (fitness) it has achieved so far. (The fitness value is also stored.) This value is called pbest. Another "best" value that is tracked by the global version of the particle swarm optimizer is the overall best value, and its location, obtained so far by any particle in the population. This location is called gbest. The particle swarm optimization concept consists of, at each time step, changing the velocity (accelerating) each particle toward its pbest and gbest locations (global version of PSO). Acceleration is weighted by a random term, with separate random numbers being generated for acceleration toward pbest and gbest locations.
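The update described in this passage has no inertia weight and uses separate random numbers for the pulls toward pbest and gbest. It can be sketched as a single NumPy step.

Two details follow common descriptions of the original algorithm but are not stated in this excerpt, so treat them as assumptions:
- the acceleration constants c1 = c2 = 2;
- the optional clamp to a maximum speed `vmax`.

The function name `original_pso_step` is hypothetical.

```python
import numpy as np

def original_pso_step(x, v, pbest, gbest, rng, c1=2.0, c2=2.0, vmax=None):
    """One step of the original global-best PSO (no inertia weight)."""
    r1 = rng.random(x.shape)   # random weights for the pull toward pbest
    r2 = rng.random(x.shape)   # independent random weights toward gbest
    v = v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
    if vmax is not None:       # optional velocity clamp (assumption)
        v = np.clip(v, -vmax, vmax)
    return x + v, v

# Four 2-D particles at the origin, each at its own pbest; gbest at (1, 1).
rng = np.random.default_rng(1)
x = np.zeros((4, 2))
v = np.zeros((4, 2))
x_new, v_new = original_pso_step(x, v, pbest=x, gbest=np.ones(2), rng=rng, vmax=0.5)
```

Because each particle starts at its own pbest with zero velocity, only the gbest term acts. Every velocity component therefore lies between 0 and `vmax`.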

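Particle swarm optimization algorithm: program

Particle swarm optimization (PSO) is a swarm-intelligence-based optimization algorithm. It simulates the behavior of biological groups such as flocks of birds or schools of fish and is used to solve a wide range of optimization problems.

Below, the algorithm is introduced from the perspective of its program implementation.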

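Implementing the particle swarm optimization algorithm involves the following key steps:

1. Initialize the swarm: define the number of particles, the range of the search space, and each particle's initial position and velocity.

2. Compute fitness: use the problem-specific fitness function to compute each particle's fitness value. This value measures how good the particle's current position in the search space is.

3. Update velocities and positions: update each particle's velocity and position. The update uses the particle's current position and velocity, its own best position, and the best position found by the swarm.

4. Update the global best position: use the fitness values of all particles to update the global best position.

5. Terminate: set a termination condition, such as a maximum number of iterations or reaching a specific fitness threshold.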

A particle swarm optimization program can be written based on these steps.

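Below is a simple example in Python. The original sketch calls `generate_random_position`, `generate_random_velocity` and `evaluate_fitness` without defining them. The definitions below are assumptions: `search_space` is taken as a list of `(low, high)` bounds, and the sphere function stands in for the problem-specific fitness.

```python
import random

# Initialize the particle swarm (search_space: list of (low, high) pairs).
def generate_random_position(search_space):
    return [random.uniform(lo, hi) for lo, hi in search_space]

def generate_random_velocity(search_space):
    # Each velocity component lies within +/- the width of its dimension.
    return [random.uniform(-(hi - lo), hi - lo) for lo, hi in search_space]

def initialize_particles(num_particles, search_space):
    particles = []
    for _ in range(num_particles):
        particle = {
            'position': generate_random_position(search_space),
            'velocity': generate_random_velocity(search_space),
            'best_position': None,
            'fitness': None,
        }
        particles.append(particle)
    return particles

# Compute fitness.
def evaluate_fitness(position):
    # Placeholder fitness (sphere function); replace with the real problem.
    return sum(x * x for x in position)

def calculate_fitness(particle):
    # Compute the fitness value with the problem-specific fitness function.
    particle['fitness'] = evaluate_fitness(particle['position'])
```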
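The program above covers only steps 1 and 2. Steps 3 to 5 could continue in the same dictionary-based style.

This sketch makes several assumptions:
- It adds a `best_fitness` key that the original particle dictionary does not have.
- It uses an inertia-weight velocity update with w = 0.7 and c1 = c2 = 1.5.
- It treats the problem as minimization and stops after a fixed number of iterations.
- The helper names `update_particle` and `update_bests` are hypothetical.

```python
import random

def update_particle(particle, gbest_position, w=0.7, c1=1.5, c2=1.5):
    """Step 3: update one particle's velocity and position in place.
    Assumes 'best_position' was already set by update_bests."""
    new_v, new_x = [], []
    for x, v, p, g in zip(particle['position'], particle['velocity'],
                          particle['best_position'], gbest_position):
        r1, r2 = random.random(), random.random()
        v = w * v + c1 * r1 * (p - x) + c2 * r2 * (g - x)
        new_v.append(v)
        new_x.append(x + v)
    particle['velocity'] = new_v
    particle['position'] = new_x

def update_bests(particles, evaluate_fitness):
    """Steps 2 and 4: re-evaluate fitness, refresh each particle's best
    position, and return the global best position (minimization)."""
    for particle in particles:
        particle['fitness'] = evaluate_fitness(particle['position'])
        if particle.get('best_fitness') is None or particle['fitness'] < particle['best_fitness']:
            particle['best_fitness'] = particle['fitness']
            particle['best_position'] = list(particle['position'])
    best = min(particles, key=lambda q: q['best_fitness'])
    return list(best['best_position'])

random.seed(0)
sphere = lambda pos: sum(x * x for x in pos)
particles = [{'position': [random.uniform(-5, 5) for _ in range(2)],
              'velocity': [0.0, 0.0], 'best_position': None, 'fitness': None}
             for _ in range(20)]
gbest = update_bests(particles, sphere)
for _ in range(100):                 # Step 5: fixed iteration budget
    for particle in particles:
        update_particle(particle, gbest)
    gbest = update_bests(particles, sphere)
print(gbest, sphere(gbest))
```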

AIAA-2002-1235-345 Particle Swarm Optimization

AIAA 2002-1235 Particle Swarm Optimization

Gerhard Venter (gventer@)∗, Vanderplaats Research and Development, Inc., 1767 S 8th Street, Suite 100, Colorado Springs, CO 80906

Jaroslaw Sobieszczanski-Sobieski (j.sobieski@)†, NASA Langley Research Center, MS 240, Hampton, VA 23681-2199

The purpose of this paper is to show how the search algorithm known as particle swarm optimization performs. Here, particle swarm optimization is applied to structural design problems, but the method has a much wider range of possible applications. The paper's new contributions are improvements to the particle swarm optimization algorithm and conclusions and recommendations as to the utility of the algorithm. Results of numerical experiments for both continuous and discrete applications are presented in the paper. The results indicate that the particle swarm optimization algorithm does locate the constrained minimum design in continuous applications with very good precision, albeit at a much higher computational cost than that of a typical gradient-based optimizer. However, the true potential of particle swarm optimization is primarily in applications with discrete and/or discontinuous functions and variables. Additionally, particle swarm optimization has the potential of efficient computation with very large numbers of concurrently operating processors.

Introduction

Most general-purpose optimization software used in industrial applications makes use of gradient-based algorithms, mainly due to their computational efficiency. However, in recent years non-gradient-based, probabilistic search algorithms have attracted much attention from the research community. These algorithms generally mimic some natural phenomena, for example genetic algorithms and simulated annealing. Genetic algorithms model the evolution of a species, based on Darwin's principle of survival of the fittest [1]. Simulated annealing, in contrast, is based on statistical mechanics and models the equilibrium of large numbers of atoms during an annealing process [2]. Although these
probabilistic search algorithms generally require many more function evaluations to find an optimum solution, as compared to gradient-based algorithms, they do provide several advantages. These algorithms are generally easy to program, can efficiently make use of large numbers of processors, do not require continuity in the problem definition, and generally are better suited for finding a global, or near global, solution. In particular, these algorithms are ideally suited for solving discrete and/or combinatorial type optimization problems.

∗Senior R&D Engineer, AIAA Member.
†Senior Research Scientist, Analytical and Computational Methods Branch, Structures and Materials Competency, AIAA Fellow.
Copyright © 2002 by Gerhard Venter. Published by the American Institute of Aeronautics and Astronautics, Inc. with permission.
43rd AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics, and Materials Conference, 22-25 April 2002, Denver, Colorado. AIAA 2002-1235.

In this paper, a fairly recent type of probabilistic search algorithm, called Particle Swarm Optimization (PSO), is investigated. The PSO algorithm is based on a simplified social model that is closely tied to swarming theory. The algorithm was first introduced by Kennedy and Eberhart [3, 4]. A physical analogy might be a swarm of bees searching for a food source. In this analogy, each bee (referred to as a particle here) makes use of its own memory as well as knowledge gained by the swarm as a whole to find the best available food source.

Since it was originally introduced, the PSO algorithm has been studied by a number of different authors [5–8]. These authors concentrated mostly on multi-modal mathematical problems that are important in the initial research of any optimization algorithm, but are of little practical interest. Few applications of the algorithm to structural and multidisciplinary optimization are known. Two examples are by Fourie and Groenwold, who considered an application to shape and size optimization [9] and an application to topology
optimization [10].

The present paper focuses on enhancements to the basic PSO algorithm. These include the introduction of a convergence criterion and dealing with constrained and discrete problems. The enhanced version of the algorithm is applied to the design of a cantilevered beam with ten design variables. Both continuous and integer/discrete versions of the problem are studied.

Basic Particle Swarm Optimization Algorithm

Particle swarm optimization makes use of a velocity vector to update the current position of each particle in the swarm. The position of each particle is updated based on the social behavior that a population of individuals, the swarm in the case of PSO, adapts to its environment by returning to promising regions that were previously discovered [5]. The process is stochastic in nature and makes use of the memory of each particle as well as the knowledge gained by the swarm as a whole. The outline of a basic PSO algorithm is as follows:

1. Start with an initial set of particles, typically randomly distributed throughout the design space.
2. Calculate a velocity vector for each particle in the swarm.
3. Update the position of each particle, using its previous position and the updated velocity vector.
4. Go to Step 2 and repeat until convergence.

The scheme for updating the position of each particle is shown in (1):

$$x^i_{k+1} = x^i_k + v^i_{k+1}\,\Delta t \qquad (1)$$

where $x^i_{k+1}$ represents the position of particle $i$ at iteration $k+1$ and $v^i_{k+1}$ represents the corresponding velocity vector. A unit time step ($\Delta t$) is used throughout the present work.

The scheme for updating the velocity vector of each particle depends on the particular PSO algorithm under consideration. A commonly used scheme was introduced by Shi and Eberhart [6], as shown in (2):

$$v^i_{k+1} = w\,v^i_k + c_1 r_1 \frac{p^i - x^i_k}{\Delta t} + c_2 r_2 \frac{p^g_k - x^i_k}{\Delta t} \qquad (2)$$

where $r_1$ and $r_2$ are random numbers between 0 and 1, $p^i$ is the best position found by particle $i$ so far and $p^g_k$ is the best position in the swarm at time $k$. Again, a unit time step ($\Delta t$) is used throughout the present work. There are three
problem-dependent parameters: the inertia of the particle ($w$), and two "trust" parameters $c_1$ and $c_2$. The inertia controls the exploration properties of the algorithm, with larger values facilitating a more global behavior and smaller values facilitating a more local behavior. The trust parameters indicate how much confidence the current particle has in itself ($c_1$) and how much confidence it has in the swarm ($c_2$).

Fourie and Groenwold [9] proposed a slight modification to (2) for their structural design applications. They proposed using the best position in the swarm to date, $p^g$, instead of the best position in the swarm at iteration $k$, $p^g_k$. Both approaches were investigated, and it was found that (2) works slightly better for our applications. As a result, (2) is used throughout the present work.

Initial Swarm

The initial swarm is generally created such that the particles are randomly distributed throughout the design space, each with a random initial velocity vector. In the present work, (3) and (4) are used to obtain the random initial position and velocity vectors:

$$x^i_0 = x_{\min} + r_1 (x_{\max} - x_{\min}) \qquad (3)$$

$$v^i_0 = \frac{x_{\min} + r_2 (x_{\max} - x_{\min})}{\Delta t} \qquad (4)$$

In (3) and (4), $r_1$ and $r_2$ are random numbers between 0 and 1, $x_{\min}$ is the vector of lower bounds and $x_{\max}$ is the vector of upper bounds for the design variables.

The influence of the initial swarm distribution on the effectiveness of the PSO algorithm was also studied. Instead of using a random distribution, a space-filling design of experiments (DOE) was used to distribute the initial swarm in the design space. However, these numerical experiments indicate that the initial distribution of the particles (random as compared to the space-filling DOE) is not important to the overall performance of the PSO algorithm. The reason is that the swarm changes dynamically throughout the optimization process until an optimum solution is reached. As a result, the precise distribution of the initial swarm is not important, as long as it is fairly well distributed
throughout the design space. In the present work, all initial swarms are randomly distributed.

Problem Parameters

The basic PSO algorithm has three problem-dependent parameters, $w$, $c_1$ and $c_2$. The literature [3] proposes using $c_1 = c_2 = 2$, so that the mean of the stochastic multipliers of (2) is equal to 1. Additionally, Shi and Eberhart [7] suggest using $0.8 < w < 1.4$. They start with larger $w$ values (a more global search behavior) that are dynamically reduced (a more local search behavior) during the optimization.

The present work showed that having each particle put slightly more trust in the swarm (larger $c_2$ value) and slightly less trust in itself (smaller $c_1$ value) seems to work better for the structural design problems considered. Using $c_1 = 1.5$ and $c_2 = 2.5$ works well in all example problems considered. Additionally, dynamically adjusting the $w$ value has several advantages. First, it results in faster convergence to the optimum solution. Second, it makes the $w$ parameter problem independent. The scheme that dynamically adjusts the $w$ value is discussed in more detail in the next section.

Enhancements to the Basic Algorithm

The basic PSO algorithm summarized in (1) and (2) has been used in the literature to demonstrate the PSO algorithm on a number of test problems.
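Equations (1) to (4) translate almost directly into code. This Python/NumPy sketch is not the authors' implementation, and its function names are hypothetical.

The sketch makes the following assumptions:
- The problem is a minimization.
- $r_1$ and $r_2$ are drawn independently for every particle and coordinate; the text only says they are random numbers between 0 and 1.
- $p^g_k$ is the best particle of the current iteration $k$. The authors chose this option over the best-to-date variant.
- The defaults w = 1.4, c1 = 1.5 and c2 = 2.5 are the values reported in the paper.

```python
import numpy as np

def initial_swarm(x_min, x_max, n_particles, rng, dt=1.0):
    """Eqs. (3) and (4): random initial positions and velocities."""
    x_min, x_max = np.asarray(x_min, float), np.asarray(x_max, float)
    shape = (n_particles, x_min.size)
    x0 = x_min + rng.random(shape) * (x_max - x_min)
    v0 = (x_min + rng.random(shape) * (x_max - x_min)) / dt
    return x0, v0

def velocity_update(x, v, p_best, f_current, rng, w=1.4, c1=1.5, c2=2.5, dt=1.0):
    """Eq. (2), with p^g_k taken as the best particle of the current iteration."""
    p_g_k = x[np.argmin(f_current)]          # best position at iteration k
    r1 = rng.random(x.shape)
    r2 = rng.random(x.shape)
    return w * v + c1 * r1 * (p_best - x) / dt + c2 * r2 * (p_g_k - x) / dt

def position_update(x, v_new, dt=1.0):
    """Eq. (1)."""
    return x + v_new * dt

rng = np.random.default_rng(0)
# Two design variables with the height/width bounds quoted for the beam example.
x, v = initial_swarm([50.0, 0.5], [100.0, 10.0], n_particles=5, rng=rng)
f = x[:, 0] * x[:, 1]                        # hypothetical objective values
v_new = velocity_update(x, v, p_best=x, f_current=f, rng=rng)
x_new = position_update(x, v_new)
```

Note that (4) draws the initial velocities from the same range as the design variables divided by Δt, so with Δt = 1 they can be as large as the bounds themselves.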
However, the goal here is to develop an implementation of the basic algorithm that would be more general in nature, applicable to a wide range of design problems. To achieve this goal, a number of enhancements to the basic algorithm were investigated. These enhancements are discussed in more detail in this section.

Convergence Criterion

A robust convergence criterion is important for any general-purpose optimizer. Most implementations of the PSO algorithm also implement some convergence criterion. A convergence criterion is necessary to avoid any additional function evaluations after an optimum solution is found. Ideally, the convergence criterion should not have any problem-specific parameters. The convergence criterion used here is very basic. The maximum change in the objective function is monitored for a specified number of consecutive design iterations. If the maximum change in the objective function is less than a predefined allowable change, convergence is assumed.

Problem Parameters

The trust parameter values $c_1 = 1.5$ and $c_2 = 2.5$ discussed earlier are used throughout the present work. These parameters seem to be fairly problem independent, but further study is required.

The inertia weight parameter $w$ is adjusted dynamically during the optimization, as suggested by Shi and Eberhart [7], with an example implementation by Fourie and Groenwold [9]. Shi and Eberhart [7] proposed linearly decreasing $w$ during the first part of the optimization. Fourie and Groenwold [9] instead decreased the $w$ value by a fraction if no improvement had been made for a predefined number of consecutive design iterations.

In the present paper a different implementation is proposed, based on the coefficient of variation (COV) of the objective function values. The goal is to change the $w$ value in a problem-independent way, with no interaction from the designer. A starting value of $w = 1.4$ is used to initially accommodate a more global search, and $w$ is dynamically reduced to no less than $w = 0.35$. The idea is to terminate the PSO
algorithm with a more local search. The $w$ value is adjusted using (5):

$$w_{\text{new}} = w_{\text{old}}\, f_w \qquad (5)$$

where $w_{\text{new}}$ is the newly adjusted $w$ value, $w_{\text{old}}$ is the previous $w$ value and $f_w$ is a constant between 0 and 1. Smaller $f_w$ values result in a more dramatic reduction in $w$, which in turn results in a more local search. In the present work $f_w = 0.975$ is used throughout, resulting in a PSO algorithm with a fairly global search characteristic.

The $w$ value is not adjusted at each design iteration. Instead, the coefficient of variation (COV) of the objective function values for a subset of best particles is monitored. If the COV falls below a specified threshold value, it is assumed that the algorithm is converging towards an optimum solution, and (5) is applied. A general equation to calculate the COV for a set of points is provided in (6):

$$\text{COV} = \frac{\text{StdDev}}{\text{Mean}} \qquad (6)$$

where StdDev is the standard deviation and Mean is the mean value for the set of points. In the present work, a subset of the best 20% of particles from the swarm is monitored and a COV threshold of 1.0 is used.

Constrained Optimization

The basic PSO algorithm is defined for unconstrained problems only. Since most engineering problems are constrained in one way or another, it is important to add the capability of dealing with constrained optimization problems. It was decided to deal with constraints by making use of a quadratic exterior penalty function. This technique is often used to deal with constrained problems in genetic algorithms. In the current implementation, the objective function is penalized as shown in (7) when one or more of the constraints are violated:

$$\tilde f(x) = f(x) + \alpha \sum_{i=1}^{m} \max[0, g_i(x)]^2 \qquad (7)$$

In (7), $f(x)$ is the original objective function, $\alpha$ is a large penalty parameter, $g_i(x)$ is the set of all constraints (with violated constraints having values larger than zero), and $\tilde f(x)$ is the new, penalized objective function. In the present work a penalty parameter of $\alpha = 10^8$ is used.

Particles With Violated Constraints

When dealing with constrained optimization
problems, special attention needs to be paid to particles with violated constraints. This issue was not addressed in the literature, so a new enhancement to the basic PSO algorithm is proposed here.

Ideally, the velocity vector of a violated particle would be restricted to a usable, feasible direction (e.g., Vanderplaats [11]). Such a direction reduces the objective function while pointing back to the feasible region of the design space. Unfortunately, this would require gradient information for each violated particle. One of the attractive features of the PSO algorithm is that no gradient information is required, and thus calculating a usable, feasible direction is not viable. Instead, a simple modification to (2) is proposed for particles with one or more violated constraints.

The modification can be explained by considering particle $i$, which is assumed to have one or more violated constraints at iteration $k$. By resetting the velocity vector of particle $i$ at iteration $k$ to zero, the velocity vector at iteration $k+1$ is obtained as

$$v^i_{k+1} = c_1 r_1 \frac{p^i - x^i_k}{\Delta t} + c_2 r_2 \frac{p^g_k - x^i_k}{\Delta t} \qquad (8)$$

The velocity of particle $i$ at iteration $k+1$ is thus only influenced by the best point found so far for the particle itself and the current best point in the swarm. In most cases this new velocity vector will point back to a feasible region of the design space. As a result, the violated particle moves back towards the feasible design space in the next design iteration.

Discrete/Integer Design Variables

Unlike a genetic algorithm, which is inherently a discrete algorithm, the PSO algorithm is inherently a continuous algorithm. The PSO algorithm is able to find optimum solutions to continuous problems very accurately, but the associated computational cost is high compared to gradient-based algorithms.
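The constraint handling in (7) and (8) can be sketched as follows. This is not the authors' code, and the function names are hypothetical.

The sketch makes these assumptions:
- Constraints are given as callables $g_i(x)$ that are positive when violated, matching the sign convention stated for (7).
- The default α = 10^8 and the w, c1, c2 defaults are the values reported in the paper.
- A violated particle simply drops the inertia term, which is what resetting $v^i_k$ to zero amounts to in (2).

```python
import numpy as np

def penalized(f, constraints, x, alpha=1e8):
    """Eq. (7): quadratic exterior penalty; g_i(x) > 0 means violated."""
    g = np.array([gi(x) for gi in constraints], dtype=float)
    return f(x) + alpha * np.sum(np.maximum(0.0, g) ** 2)

def velocity_with_reset(x, v, p_i, p_g, violated, rng,
                        w=1.4, c1=1.5, c2=2.5, dt=1.0):
    """Eq. (2) for feasible particles; eq. (8) (v_k reset to zero, so the
    inertia term vanishes) for particles that violate any constraint."""
    r1, r2 = rng.random(x.shape), rng.random(x.shape)
    inertia = 0.0 if violated else w * v
    return inertia + c1 * r1 * (p_i - x) / dt + c2 * r2 * (p_g - x) / dt

# Hypothetical problem: minimize x0 + x1 subject to x0 >= 1 (g = 1 - x0 <= 0).
f = lambda x: x[0] + x[1]
g = [lambda x: 1.0 - x[0]]
print(penalized(f, g, np.array([0.5, 0.0])))   # violated by 0.5
print(penalized(f, g, np.array([2.0, 0.0])))   # feasible, no penalty
```

The first call returns 0.5 + 10^8 · 0.25, because the constraint is violated by 0.5. The second call returns the unpenalized value 2.0.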
However, the true potential of the PSO algorithm is in applications with discrete and/or discontinuous functions and variables. In other words, the algorithm is expected to excel in applications where a gradient-based algorithm is not a viable alternative.

In this paper, two different modifications to the basic PSO algorithm that allow the solution of problems with discrete variables are considered. The first approach is straightforward. The position of each particle is modified to represent a discrete point, by rounding each position coordinate to its closest discrete value after applying (1).

The second approach is more elaborate. The position of each particle is modified to represent a discrete point by considering a set of candidate discrete values about the new continuous point, obtained after applying (2). The candidate discrete points are obtained by rounding each continuous position coordinate to its closest upper and lower discrete values. For a problem with $n_{Dvar}$ discrete design variables, this process will result in $2^{n_{Dvar}}$ candidate discrete points. For a two-dimensional integer problem, this would produce four candidate discrete points, located at the vertices of a two-dimensional rectangle. The new position of the particle is selected from the candidate set as the point with the shortest perpendicular distance to the velocity vector. There are several enhancements to this scheme, based on the direction of the velocity vector, that substantially reduce the number of candidate discrete points, but a more detailed discussion is beyond the scope of this paper.
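Both discrete-variable approaches can be sketched briefly. The first snaps each coordinate to its closest allowed value. The second enumerates the surrounding lower/upper discrete values.

The sketch rests on a few assumptions:
- Each design variable's allowed values are given as a sorted NumPy array.
- The selection rule based on the velocity vector is left out, because the paper does not detail it.
- The function names are hypothetical.

```python
import itertools
import numpy as np

def round_to_discrete(x, allowed):
    """First approach: snap each coordinate to its closest allowed value.
    `allowed` holds one sorted 1-D array of permitted values per variable."""
    return np.array([a[np.argmin(np.abs(a - xi))] for xi, a in zip(x, allowed)])

def candidate_points(x, allowed):
    """Second approach: the closest lower and upper discrete value of every
    coordinate, giving up to 2**n vertices for n discrete variables."""
    per_coord = []
    for xi, a in zip(x, allowed):
        lower = a[a <= xi].max() if np.any(a <= xi) else a.min()
        upper = a[a >= xi].min() if np.any(a >= xi) else a.max()
        per_coord.append(sorted({float(lower), float(upper)}))
    return [np.array(p) for p in itertools.product(*per_coord)]

heights = np.arange(50, 101)   # integer heights, 50 to 100 cm
widths = np.arange(1, 11)      # integer widths, 1 to 10 cm
x = np.array([72.4, 3.6])
snapped = round_to_discrete(x, [heights, widths])   # h = 72, b = 4
corners = candidate_points(x, [heights, widths])    # 4 = 2**2 vertices
```

When a coordinate already sits on an allowed value, its lower and upper values coincide, so fewer than $2^{n_{Dvar}}$ candidates result.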
Reducing the number of candidate discrete points is especially important for problems with larger numbers of design variables.

The more elaborate approach was expected to result in a more efficient integer/discrete algorithm. Contrary to this expectation, numerical experiments indicate that there is no significant difference in the performance of the PSO algorithm between the first and the second approach. Since there is no advantage to using the more elaborate approach, it was discarded. The simpler rounding approach is used for all integer/discrete problems presented in this paper.

Additional Randomness

To avoid premature convergence of the algorithm, the literature mentions the possible use of a craziness operator [3] that adds randomness to the swarm. The craziness operator acts similarly to the mutation operator in genetic algorithms. However, there does not seem to be consensus in the literature on whether the craziness operator should be applied. After introducing the craziness operator, Kennedy and Eberhart [3] conclude that this operator may not be necessary. Fourie and Groenwold [9], on the other hand, reintroduced the craziness operator for their structural design problems. It was thus decided to implement a craziness operator here and test its effectiveness for our structural design problems.

The originally proposed craziness operator identifies a small portion of randomly selected particles at each iteration and randomly changes their velocity vectors. In the present work, the craziness operator is modified. It also identifies a small number of particles at each design iteration, but instead of changing only the velocity vector, both the position and the velocity vector are changed. The positions of the particles are changed randomly. The velocity vector of each modified particle is reset to only the second component of (2), as shown in (9):

$$v^i_{k+1} = c_1 r_1 \frac{p^i - x^i_k}{\Delta t} \qquad (9)$$

In the present implementation, the particles to modify are
identified using the COV of the objective function values of all particles, at the end of each design iteration. If the COV falls below a predefined threshold value, it is assumed that the swarm is becoming too uniform. In this case, particles that are located far from the center of the swarm are identified, using the standard deviation of the position coordinates of the particles. Particles that are located more than 2 standard deviations from the center of the swarm are subjected to the craziness operator. In the present work, a COV threshold value of 0.1 is used.

Example Problem

To study the behavior of the PSO algorithm, a cantilevered beam example similar to that considered by Vanderplaats [11] was chosen. A schematic representation of the example problem, including material properties, is shown in Fig. 1. The beam is modeled using five segments of equal length. The design problem is defined as minimizing the material volume of the beam, subject to maximum bending stress constraints for each segment. The design variables are the height ($h$) and width ($b$) of each segment, resulting in ten independent design variables. Two cases are considered.
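The COV-based inertia reduction in (5) and (6) and the modified craziness operator can be sketched together, since both are driven by the same statistic. The function names are hypothetical.

Several details are assumptions, because the text leaves them open:
- The objective values have a positive mean, as the beam volumes do, so the COV is well defined.
- The population standard deviation is used.
- "More than 2 standard deviations from the center" is taken to mean in any single coordinate.
- Eq. (9) is evaluated at the particle's position before it is moved.

```python
import numpy as np

def cov(values):
    """Eq. (6): coefficient of variation, StdDev / Mean (assumes a positive mean)."""
    values = np.asarray(values, dtype=float)
    return float(np.std(values) / np.mean(values))

def adjust_inertia(w, f_values, frac=0.2, threshold=1.0, f_w=0.975, w_min=0.35):
    """Eq. (5), applied only when the COV of the best 20% of objective values
    (minimization) falls below the threshold; w never drops below w_min."""
    f_sorted = np.sort(np.asarray(f_values, dtype=float))
    subset = f_sorted[:max(1, int(frac * f_sorted.size))]
    if cov(subset) < threshold:
        w = max(w * f_w, w_min)
    return w

def craziness(x, v, p_i, f_values, x_min, x_max, rng,
              c1=1.5, dt=1.0, threshold=0.1):
    """Modified craziness operator: when the COV of all objective values is
    below the threshold, particles more than 2 standard deviations from the
    swarm centre get a random position and the eq. (9) velocity."""
    x, v = x.copy(), v.copy()
    if cov(f_values) >= threshold:
        return x, v
    centre, spread = x.mean(axis=0), x.std(axis=0)
    far = np.any(np.abs(x - centre) > 2.0 * spread, axis=1)
    n_far = int(far.sum())
    old = x[far]                                   # positions before the move
    r1 = rng.random((n_far, x.shape[1]))
    v[far] = c1 * r1 * (p_i[far] - old) / dt       # eq. (9)
    x[far] = x_min + rng.random((n_far, x.shape[1])) * (x_max - x_min)
    return x, v

# A uniform-looking swarm with one outlier in the first coordinate.
rng = np.random.default_rng(0)
x = np.zeros((10, 2)); x[9, 0] = 10.0
v = np.ones((10, 2)); p_i = x.copy()
x_new, v_new = craziness(x, v, p_i, [5.0] * 10,
                         np.array([0.0, 0.0]), np.array([20.0, 20.0]), rng)
```

In the example, only the outlier is more than 2 standard deviations (here 6 units) from the centre, so only it is moved. Its velocity becomes zero because its pbest equals its old position.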
The first is a continuous design problem where the height of each segment is allowed to vary between 50 cm and 100 cm, while the width is allowed to vary between 0.5 cm and 10 cm. The second is an integer/discrete case where all ten design variables are restricted to integer values only. For the second case, the height of each segment is allowed to vary between 50 cm and 100 cm, while the width is allowed to vary between 1 cm and 10 cm.

[Fig. 1 Cantilevered beam example problem (schematic with cross section; not reproduced here).]

The bending stress is obtained from the well-known bending stress equation shown in (10):

$$\sigma = \frac{M y}{I} \qquad (10)$$

where $\sigma$ represents the bending stress, $M$ the applied bending moment, $I$ the moment of inertia and $y$ the vertical distance, measured from the neutral axis, at which the stress is calculated. For this problem $M = P(L - x)$ and $I = \frac{1}{12} b h^3$, while the maximum stress value occurs at $y = h/2$. The height thus has a much larger influence on the bending stress than the width of the beam. It is reasonable to assume that the optimizer would minimize the weight by keeping the width constant and equal to its lower bound, while changing the height of the beam. In this case, the theoretical solution for the height is

$$h = \sqrt{\frac{6 P (L - x)}{\sigma b}} \qquad (11)$$

where $P$ is the applied tip load, $x$ is the horizontal distance measured from the root of the beam and $\sigma$ is the allowable stress limit.

To verify the above assumptions, and to study how well a gradient-based optimizer would solve this problem, it was decided to model and solve the continuous case using the GENESIS [12] structural analysis and optimization code. Table 1 summarizes three sets of results: the theoretical optimum for a beam with uniform height and width across the span, and the GENESIS and theoretical optimum results for a beam with five segments. The case with uniform height and width was obtained by setting the width equal to its lower bound and calculating the height from (11) so that the maximum stress at the root equals the allowable stress. The case with constant height and width may be considered as a
baseline from which the optimizer makes improvement.

Table 1 Comparison between continuous GENESIS and theoretical results

| Parameter | Baseline | GENESIS | Theory |
|---|---|---|---|
| Volume (cm³) | 36683 | 27498 | 27438 |
| b1 (cm) | 0.5 | 0.5 | 0.5 |
| b2 (cm) | 0.5 | 0.5 | 0.5 |
| b3 (cm) | 0.5 | 0.5 | 0.5 |
| b4 (cm) | 0.5 | 0.5 | 0.5 |
| b5 (cm) | 0.5 | 0.5 | 0.5 |
| h1 (cm) | 146.73 | 146.73 | 146.39 |
| h2 (cm) | 146.73 | 131.16 | 130.93 |
| h3 (cm) | 146.73 | 113.16 | 113.39 |
| h4 (cm) | 146.73 | 92.78 | 92.58 |
| h5 (cm) | 146.73 | 65.61 | 65.47 |

Table 1 verifies both the assumptions that lead to (11) and the GENESIS results. GENESIS solved the problem using 12 finite element analyses. However, it should be noted that GENESIS makes use of advanced approximation techniques to reduce the required function evaluations for solving structural optimization problems. A general-purpose gradient-based optimizer would most probably require between 100 and 300 function evaluations to solve this problem.

Finding the theoretical optimum for the integer/discrete case is a more daunting task. Instead, we determine tight upper and lower bounds for the objective function value. A lower bound may be obtained from (11), using the lower-bound value of 1.0 for all $b_i$. This produces an optimum answer with half the variables being integer/discrete and the other half being continuous. An upper bound may be obtained by considering three discrete points, obtained by rounding the continuous $h_i$ values from (11). The three points are obtained by rounding all $h_i$ values up, rounding all $h_i$ values down and rounding all $h_i$ values to their closest integer value. Only the case where all $h_i$ values are rounded up produces a feasible design, and it thus provides an upper bound for the integer/discrete optimum solution.

Table 2 shows the theoretical results for a beam with uniform height and width across the span, using the root dimensions of the lower bound solution. It also shows the upper and lower bounds for the integer/discrete design problem. The difference in the objective function values of the calculated upper and lower bounds is less than 1%, and Table 2 thus provides
tight upper and lower bounds for the integer/discrete solution. Again, the beam with constant height and width may be considered as a baseline from which the optimization makes improvement.

Table 2 Upper and lower bound solutions for the integer/discrete case

| Parameter | Baseline | Lower Bound | Upper Bound |
|---|---|---|---|
| Volume (cm³) | 51755 | 38803 | 39100 |
| b1 (cm) | 1.0 | 1.0 | 1.0 |
| b2 (cm) | 1.0 | 1.0 | 1.0 |
| b3 (cm) | 1.0 | 1.0 | 1.0 |
| b4 (cm) | 1.0 | 1.0 | 1.0 |
| b5 (cm) | 1.0 | 1.0 | 1.0 |
| h1 (cm) | 103.51 | 103.51 | 104 |
| h2 (cm) | 103.51 | 92.58 | 93 |
| h3 (cm) | 103.51 | 80.18 | 81 |
| h4 (cm) | 103.51 | 65.47 | 66 |
| h5 (cm) | 103.51 | 46.29 | 47 |

Results

The PSO algorithm was used to analyze the beam example problem, employing the elementary strength-of-materials approach described in the previous section. As discussed, two design problems were considered: the first is a continuous problem and the second an integer/discrete problem. For each design problem, the influence of two enhancements to the basic algorithm is considered. The first is the proposed craziness operator. As mentioned previously, there seems to be disagreement in the literature as to the usefulness of the craziness operator. The second is resetting the velocity vectors of violated design points. Resetting the velocity vectors is a new feature that has not been studied previously.

To fully investigate the influence of these two enhancements, all four combinations of using and not using the enhancements were considered. The PSO algorithm was first run for a fixed number of function evaluations and then using the proposed convergence criterion. In all cases, a swarm size of 300 particles was used. Each run was repeated 50 times, and the best, worst, mean and standard deviation of the best objective function from each of the 50 repetitions were recorded. For the runs where the convergence criterion was used, the same statistics were also recorded for the number of function evaluations to convergence. For all runs the same PSO parameters were used, as summarized in Table 3. The $w$, $c_1$ and $c_2$ values were
determined as discussed in previous sections of this paper. The number of particles (swarm size) was selected as a tradeoff between cost and reliability: smaller swarms required fewer function evaluations to converge but decreased the reliability of the algorithm, while larger swarms required more function evaluations to converge but increased its reliability.

Table 3. PSO parameters used in the example problems

Parameter                    Value
Initial inertia weight, w    1.4
Trust parameter 1, c1        1.50
Trust parameter 2, c2        2.50

Each run is identified by the combination of enhancements used during that run, as summarized in Table 4. For example, R denotes resetting the velocities of violated particles only, while CR denotes using the craziness operator and resetting the velocities of violated particles.

Table 4. PSO enhancement summary

Option   Definition
C        Apply craziness operator
R        Reset velocities of violated particles

Fixed Number of Function Evaluations

First, each of the four combinations was evaluated using a fixed number of 50 design iterations, which with 300 particles gives a total of 15000 function evaluations. The statistical results obtained from 50 repetitions of each combination are summarized in Table 5 for the continuous design problem and in Table 6 for the integer/discrete design problem.
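The two run options in Table 4 can be illustrated with a small sketch. It assumes that "resetting" the velocity of a violated particle means discarding its previous velocity (the inertia term), so that only the Pbest and Gbest terms steer it, and that the craziness operator replaces a particle's velocity with a random one with a small probability. The values w = 1.4, c1 = 1.5 and c2 = 2.5 come from Table 3; the toy objective, constraint, penalty, bounds and inertia decay factor (0.975 per iteration) are hypothetical stand-ins for the beam problem, not the paper's setup.

```python
import random

def objective(x):
    # Hypothetical toy objective standing in for the beam volume
    return x[0] + x[1]

def violation(x):
    # Hypothetical constraint g(x) = 1 - x0*x1 <= 0; returns how far it is violated
    return max(0.0, 1.0 - x[0] * x[1])

def penalized(x):
    # Simple exterior penalty (assumed, not from the paper)
    return objective(x) + 1e3 * violation(x)

def pso(n_particles=30, n_iter=200, lo=0.1, hi=5.0, w=1.4, c1=1.5, c2=2.5,
        w_decay=0.975, reset_violated=True, craziness=0.0, seed=0):
    rng = random.Random(seed)
    dim = 2
    span = hi - lo
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.1 * rng.uniform(-span, span) for _ in range(dim)] for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    pbest_f = [penalized(x) for x in xs]
    g = min(range(n_particles), key=pbest_f.__getitem__)
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    for _ in range(n_iter):
        for i in range(n_particles):
            violated = violation(xs[i]) > 0.0
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Option R: drop the inertia term for particles that violate the constraint
                inertia = 0.0 if (reset_violated and violated) else w * vs[i][d]
                vs[i][d] = (inertia + c1 * r1 * (pbest[i][d] - xs[i][d])
                            + c2 * r2 * (gbest[d] - xs[i][d]))
            # Option C: with a small probability, replace the velocity with a random one
            if rng.random() < craziness:
                vs[i] = [rng.uniform(-span, span) for _ in range(dim)]
            # Move the particle and clip it to the side constraints
            xs[i] = [min(hi, max(lo, xs[i][d] + vs[i][d])) for d in range(dim)]
            f = penalized(xs[i])
            if f < pbest_f[i]:
                pbest[i], pbest_f[i] = xs[i][:], f
                if f < gbest_f:
                    gbest, gbest_f = xs[i][:], f
        w *= w_decay  # assumed schedule for reducing the initial inertia weight
    return gbest, gbest_f
```

Calling `pso(reset_violated=False)`, `pso(craziness=0.05)` and so on gives the other option combinations, mirroring the None, R, C and CR runs; the toy problem's optimum is x = (1, 1) with objective value 2.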
Table 5. Objective function (material volume, cm3) statistics for the continuous design problem

Option   Mean    StdDev   Best    Worst
None     41383   18548    27610   95547
R        31897   12247    27438   91809
C        43232   19866    30150   92208
CR       33534   15394    27439   110824

Table 6. Objective function (material volume, cm3) statistics for the integer design problem

Option   Mean    StdDev   Best    Worst
None     67380   20707    39900   112196
R        42822   10153    39100   89491
C        62562   17690    39100   107596
CR       42253   10234    39100   85086

Comparing the statistical data in Tables 5 and 6, especially the mean and standard deviation values, makes it clear that resetting the velocity vectors of the violated design points has a significant, positive influence on the performance of the PSO algorithm. In contrast, the craziness operator does not appear to have much influence. It is not clear whether combining the craziness operator with velocity resetting yields any additional improvement over velocity resetting alone. Finally, the standard deviation clearly shows that the algorithm is more successful in solving the discrete problem (Table 6) than the continuous problem (Table 5). This was expected, since the discrete problem has a smaller design space than the continuous problem.

For the case with a fixed number of function evaluations, the optimum results obtained by the PSO algorithm can be compared against a random search using the same number of function evaluations. We performed a random search with 15000 analyses, again repeating the process 50 times, and recorded the statistics for the best objective function value from each repetition. The results are summarized in Table 7.

Table 7. Objective function (material volume, cm3) statistics for the continuous problem using a random search

Mean   StdDev   Best   Worst

Comparing Table 7 with Table 5 shows that the random search performs far worse than the PSO algorithm for the same number of function evaluations.

Convergence Criterion

Next, the runs of Tables 5 and 6 are repeated using the proposed convergence criterion. For convergence, the objective function is required not to change by more than 0.1% over 10 consecutive design iterations.
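The convergence criterion can be expressed as a small check. The text admits more than one reading; this sketch assumes that every best objective value recorded in the last 10 iterations must lie within 0.1% of the value at the start of that window, which rules out the slow drift that a step-by-step comparison would allow. The function name and the `history` list are hypothetical.

```python
def has_converged(history, rel_tol=1e-3, window=10):
    """Return True when the best objective value has stayed within rel_tol
    (0.1% by default) of its value `window` iterations ago, at every one
    of the last `window` iterations.

    `history` holds the best objective value recorded after each design
    iteration (a hypothetical bookkeeping choice)."""
    if len(history) < window + 1:
        return False  # not enough iterations recorded yet
    ref = history[-(window + 1)]
    return all(abs(v - ref) <= rel_tol * abs(ref) for v in history[-window:])
```

Inside a PSO loop, one would append the current Gbest value after each iteration and stop once `has_converged(history)` returns True.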

What to Write in the Introduction of a Scientific Paper

Journal of Qingdao University (Engineering & Technology Edition), Vol. 33

…PSO algorithm performs better than the standard PSO algorithm.

On unimodal functions it converges faster, and on multimodal functions the ACFPSO algorithm is effective at avoiding entrapment in local optima.

The next step in this research is to apply the ACFPSO algorithm to solving complex constrained functions.

The introduction (also called the preface, foreword, or overview) usually opens a scientific paper: it poses the problem the paper studies and guides the reader in reading and understanding the full text.

Basic Principles of Particle Swarm Optimization

Particle swarm optimization (PSO) is a bio-inspired optimization algorithm first proposed by Kennedy and Eberhart in 1995.

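The algorithm simulates cooperation among the individuals of a group, searching for the optimum through continual information exchange and iterative search.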

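The basic idea of PSO is to imitate how biological groups such as flocks of birds or schools of fish move through a search space, finding the optimum through cooperation and information sharing among individuals.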

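The core of the algorithm is the repeated update of each particle's velocity and position, which drives the search toward the global optimum.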

In PSO, each particle represents a candidate solution and searches for the optimum by moving through the search space.

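Each particle has a position vector and a velocity vector: the position vector gives the particle's current location, and the velocity vector gives the direction and speed of its movement through the search space.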

Each particle also keeps track of two important positions: its personal best position (Pbest) and the global best position (Gbest).

The personal best is the best position the particle itself has visited; the global best is the best position found by the whole swarm.
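For reference, the velocity and position update is commonly written in the inertia-weight form below (Shi and Eberhart, 1998). This text does not state the formula itself, so the symbols follow common usage and are assumptions here: w is the inertia weight, c_1 and c_2 are the learning factors, r_1 and r_2 are independent uniform random numbers in [0, 1], p_{id} is particle i's personal best (Pbest) in dimension d, and p_{gd} is the global best (Gbest).

```latex
\begin{aligned}
v_{id}^{t+1} &= w\,v_{id}^{t} + c_1 r_1 \left(p_{id} - x_{id}^{t}\right) + c_2 r_2 \left(p_{gd} - x_{id}^{t}\right),\\
x_{id}^{t+1} &= x_{id}^{t} + v_{id}^{t+1}.
\end{aligned}
```

The second term pulls the particle toward its own best position and the third toward the swarm's best, which is how the Pbest and Gbest memories steer the search.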

The procedure is as follows:

1. Initialize the positions and velocities of the swarm, and set each particle's initial personal best position.

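2. Update each particle's velocity from its current velocity, its personal best position and the global best position, then update its position and compute its fitness.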

3. Update each particle's personal best position and the global best position.

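If the current fitness is better than the particle's personal best fitness, update its personal best position; if it is better than the global best fitness, update the global best position.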

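4. Check the termination condition: if it is satisfied, output the global best position as the optimal solution; otherwise, return to step 2 for the next iteration.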

5. End.
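The five steps above can be sketched in Python as follows. This is a minimal illustration rather than a reference implementation: the sphere test function, the parameter values (w = 0.7298, c1 = c2 = 1.49618, a commonly used setting), the search bounds and the optional `target` stopping rule are all assumptions chosen for the example.

```python
import random

def sphere(x):
    # Simple unimodal test function with its minimum of 0 at the origin
    return sum(v * v for v in x)

def basic_pso(f, dim, lo, hi, n_particles=20, max_iter=200,
              w=0.7298, c1=1.49618, c2=1.49618, target=None, seed=1):
    rng = random.Random(seed)
    # Step 1: random initial positions and velocities; Pbest = initial positions
    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    v = [[0.1 * rng.uniform(-(hi - lo), hi - lo) for _ in range(dim)]
         for _ in range(n_particles)]
    pbest = [xi[:] for xi in x]
    pbest_f = [f(xi) for xi in x]
    g = min(range(n_particles), key=pbest_f.__getitem__)
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    for _ in range(max_iter):
        # Step 2: update velocity (inertia + Pbest pull + Gbest pull) and position
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][d] = (w * v[i][d]
                           + c1 * r1 * (pbest[i][d] - x[i][d])
                           + c2 * r2 * (gbest[d] - x[i][d]))
                x[i][d] = min(hi, max(lo, x[i][d] + v[i][d]))
        fit = [f(xi) for xi in x]  # evaluate the fitness of every particle
        # Step 3: update personal bests, then the global best
        for i in range(n_particles):
            if fit[i] < pbest_f[i]:
                pbest[i], pbest_f[i] = x[i][:], fit[i]
                if fit[i] < gbest_f:
                    gbest, gbest_f = x[i][:], fit[i]
        # Step 4: stop early if the (optional) target fitness has been reached
        if target is not None and gbest_f <= target:
            break
    # Step 5: return the best position found and its fitness
    return gbest, gbest_f
```

For example, `basic_pso(sphere, 3, -5.0, 5.0)` should return a point close to the origin. The termination check here is simply an iteration limit plus an optional fitness target; any other stopping rule could replace step 4.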

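PSO's strengths are that it is simple to implement, requires no derivatives or other additional computation, and has global search capability.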

Because it simulates cooperative group behavior, PSO is less prone than purely local search methods to becoming trapped in local optima.

In addition, the algorithm often converges quickly, requiring comparatively few iterations.

However, PSO also has some drawbacks.

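First, the algorithm is sensitive to the structure of the solution space: if the landscape is complex or contains many local optima, the algorithm may fail to find the global optimum.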

Application of an Improved Particle Swarm Optimization Algorithm to Multi-Objective Reactive Power Optimization (Li Xinbin)

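Transactions of China Electrotechnical Society, Vol. 25, No. 7, July 2010

Li Xinbin (1, 2), Zhu Qingjun (1)
(1. School of Electrical Engineering, Yanshan University, Qinhuangdao 066004, China; 2. Hebei Mathematical Research Center, Shijiazhuang 050000, China)

Abstract: To address problems such as the tendency of particle swarm optimization to become trapped in local optima, a new fuzzy adaptive-simulated annealing particle swarm optimization algorithm is proposed.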

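The algorithm is first based on fuzzy inference: the normalized current best performance evaluation and the PSO inertia weight and learning factor are the inputs to a fuzzy controller, whose output is the percentage change in the algorithm parameters. Fuzzy control rules built from experience with parameter settings let the controller adjust the PSO parameters adaptively. The quality of each particle's new position after this adjustment is then evaluated by using simulated annealing to adjust the particle's fitness.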

Finally, the improved PSO algorithm is used to solve a multi-objective reactive power optimization model.

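Standard IEEE 30-bus and IEEE 118-bus power system test cases verify the effectiveness and feasibility of the proposed fuzzy adaptive-simulated annealing PSO algorithm.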
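The abstract does not spell out how simulated annealing judges a particle's new position. A standard building block, shown here only as an illustrative assumption and not as the authors' method, is the Metropolis acceptance rule combined with a geometric cooling schedule; the names `metropolis_accept`, `temperature`, `t0` and `alpha` are hypothetical.

```python
import math
import random

def metropolis_accept(f_old, f_new, temperature, rng=random):
    """Metropolis rule for minimization: always accept an improvement,
    and accept a worse point with probability exp(-(f_new - f_old) / T)."""
    if f_new <= f_old:
        return True
    if temperature <= 0:
        return False  # at zero temperature only improvements are accepted
    return rng.random() < math.exp(-(f_new - f_old) / temperature)

def temperature(k, t0=100.0, alpha=0.95):
    # Geometric cooling: T_k = t0 * alpha**k (assumed schedule)
    return t0 * alpha ** k
```

Early on, when the temperature is high, worse positions are often accepted, which helps particles leave local optima; as the temperature falls, the rule approaches plain greedy acceptance.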

Keywords: particle swarm optimization (PSO); multi-objective reactive power optimization; fuzzy logic; adaptive; simulated annealing (SA)

CLC number: TM714.3

1 Introduction

Reactive power optimization is an effective means of ensuring the secure and economical operation of a power system: a sensible reactive power distribution reduces network losses, improves voltage quality, and keeps the grid operating normally.