A Quantitative Analysis of Instruction Prefetching

Student Learning Analysis: A Sample Essay in English

Title: Analysis of Academic Performance: A Comprehensive Examination

In contemporary education, the analysis of academic performance stands as a pivotal tool for educators, administrators, and policymakers alike. This essay examines the multifaceted aspects of academic performance analysis, exploring its methodologies, significance, challenges, and implications for educational advancement.

1. **Introduction to Academic Performance Analysis**

Academic performance analysis encompasses the systematic evaluation of students' achievements in various academic domains. It involves the collection, interpretation, and use of data to assess students' learning outcomes, strengths, weaknesses, and overall progress.

2. **Methodologies for Academic Performance Analysis**

- **Quantitative Methods**: Quantitative analysis uses numerical data to measure academic performance. Common quantitative metrics include test scores, grades, attendance rates, and completion rates. Statistical tools such as regression analysis and correlation analysis are used to identify patterns and relationships in the data.
- **Qualitative Methods**: Qualitative analysis focuses on understanding the underlying factors that influence academic performance. It uses techniques such as interviews, focus groups, and observation to gather rich, contextual insights into students' experiences, motivations, and learning environments.

3. **Significance of Academic Performance Analysis**

- **Informing Instructional Practices**: Analysis of academic performance provides valuable feedback to educators, enabling them to tailor their teaching strategies to meet students' needs effectively.
- **Identifying At-Risk Students**: By identifying students who are struggling academically, performance analysis allows for early intervention and support, which can reduce dropout rates and improve overall student outcomes.
- **Guiding Policy Decisions**: Policymakers use academic performance data to inform decisions on curriculum development, resource allocation, and educational reform initiatives.

4. **Challenges in Academic Performance Analysis**

- **Data Quality and Accessibility**: Ensuring the accuracy and availability of academic performance data can be difficult because of data entry errors, incomplete records, and privacy concerns.
- **Interpreting Complex Factors**: Academic performance is influenced by many factors, including socioeconomic status, cultural background, and learning disabilities. Analyzing performance data requires careful consideration of these interacting variables.
- **Balancing Accountability and Support**: While academic performance analysis is essential for accountability, it is crucial to strike a balance between holding individuals accountable for outcomes and providing them with the support and resources they need to succeed.

5. **Implications for Educational Advancement**

- **Personalized Learning**: Academic performance analysis facilitates personalized learning approaches, in which instruction is tailored to individual student needs, interests, and learning styles.
- **Data-Driven Decision Making**: By drawing on data, educators and administrators can make informed decisions about curriculum design, instructional interventions, and resource allocation, leading to improved educational outcomes.
- **Continuous Improvement**: Academic performance analysis fosters a culture of continuous improvement within educational institutions, where stakeholders work together to enhance teaching and learning practices to better serve students' needs.

In conclusion, academic performance analysis serves as a cornerstone of modern education, offering valuable insights into students' learning outcomes and driving efforts to improve educational quality and equity. By using diverse methodologies, addressing the inherent challenges, and acting on its implications, stakeholders can harness the full potential of academic performance analysis to promote student success and educational advancement.
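The Quantitative Methods point above names correlation and regression analysis without showing either. The following is a minimal sketch that assumes paired numeric records per student, for example a hypothetical attendance rate and test score. It computes the Pearson correlation coefficient and a one-predictor least-squares line using only the Python standard library. The function names and the data are illustrative, not taken from the essay.

```python
from math import sqrt


def _deviation_sums(xs: list[float], ys: list[float]) -> tuple[float, float, float, float, float]:
    """Return (mean_x, mean_y, Sxy, Sxx, Syy) for two paired samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two paired samples of equal length (at least 2 pairs)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squared deviations from the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return mean_x, mean_y, sxy, sxx, syy


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient r = Sxy / sqrt(Sxx * Syy)."""
    _, _, sxy, sxx, syy = _deviation_sums(xs, ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / sqrt(sxx * syy)


def least_squares_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Intercept a and slope b of the ordinary least-squares line y = a + b * x."""
    mean_x, mean_y, sxy, sxx, _ = _deviation_sums(xs, ys)
    if sxx == 0:
        raise ValueError("slope is undefined when x is constant")
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical records: attendance rate (%) and test score for five students.
attendance = [72.0, 80.0, 88.0, 93.0, 97.0]
scores = [58.0, 66.0, 71.0, 79.0, 84.0]
print(f"r = {pearson_r(attendance, scores):.3f}")
intercept, slope = least_squares_line(attendance, scores)
print(f"score ~ {intercept:.1f} + {slope:.2f} * attendance")
```

An r close to +1 or -1 indicates a strong linear relationship. Correlation alone does not show that attendance causes higher scores, which is one reason the essay pairs quantitative methods with qualitative ones.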

POSITIVE MATERIAL IDENTIFICATION QUALITY PROCEDURE

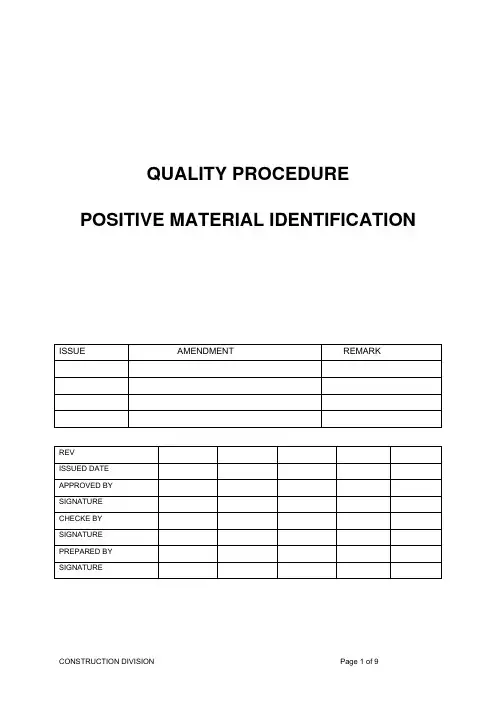

QUALITY PROCEDURE: POSITIVE MATERIAL IDENTIFICATION

CONTENTS
1.0 SCOPE
2.0 PURPOSE
3.0 DEFINITIONS
4.0 RESPONSIBILITIES
5.0 REFERENCES
6.0 QUALITY PROCEDURE
7.0 ATTACHMENT

1. SCOPE
This procedure applies to the quantitative analysis of the Cr, Ni, Mo, Ti, V, Mn, Nb, Cu, W and Fe content of all kinds of alloy materials and of pressure-containing components in shop- and field-fabricated equipment and piping (both base metal and welds). Its aim is to detect and replace incorrect materials.

2. PURPOSE
To prevent equipment/system failures caused by the installation of incorrect materials. Construction and fabrication quality control is vital, and PMI is a very important part of total quality control.

3. DEFINITIONS
PMI: Positive Material Identification
MRR: Material Receiving Report
MMIR: Manufacturer Material Identification Report

4. RESPONSIBILITIES AND QUALIFICATION OF TESTING PERSONNEL
The Quality Engineer is responsible for supervising overall NDT operations and for coordination and contact with TR and other relevant parties.
The NDE Project Manager is responsible for the following:
- directing construction
- coordinating all work related to this project
- monitoring and checking the work of every department on the project
- assigning and coordinating project resources such as personnel, equipment and materials
- participating in working meetings organized by the owner and supervisor
The NDE HSE Manager is responsible for ensuring that all testing is carried out safely, in an orderly manner and under normal conditions.
The NDE Quality Control Manager is responsible for the following:
- compiling, revising and approving every technical document related to this project
- organizing supervision, checking and instruction
- the final assessment of testing results
- organizing the preparation and submission of project completion documents
Testing personnel are responsible for the following:
- carrying out testing according to the requirements of standards, regulations and operating instructions
- carefully filling in records and feeding back quality information promptly
- keeping the facility environment clean
- maintaining equipment and testing apparatus and ensuring that they run normally
Prior to commencing PMI testing, instrument operators shall be qualified to operate approved equipment on a representative sample of the alloy materials, with 100% correct assessment as the performance criterion. The instrument operator shall work to a written procedure and shall have been trained to use the instrument in accordance with that procedure. Training shall be documented.
The person(s) performing PMI testing shall calibrate and/or verify the performance of the test equipment as specified by the equipment manufacturer.

5. REFERENCES
SAES-A-206: Positive Material Identification
ASME B31.3: Chemical Plant and Petroleum Refinery Piping
ASME B31.4: Pipeline Transportation Systems for Liquid Hydrocarbons and Other Liquids
ASME B31.8: Gas Transmission and Distribution Piping Systems
ASTM A193: Standard Specification for Alloy-Steel and Stainless Steel Bolting Materials for High-Temperature Service
ASTM A751: Standard Test Methods, Practices, and Terminology for Chemical Analysis of Steel Products

6. QUALITY PROCEDURE

6.1 Extent of Verification
A. The PMI program covers alloy materials, pressure-containing components in shop- and field-fabricated equipment and piping (both base metal and welds), and materials used to repair or replace pressure-containing components.
B. One hundred percent PMI testing (each component and weld is tested) shall be performed on all pressure components and welds of alloy materials.
C. PMI testing of weld material shall be performed in the same manner as for the adjacent base metal.
D. PMI testing shall also be performed on welding consumables (welding rod or welding wire).

6.2 Elements to Be Verified
The elements of the basic alloy materials to be verified shall be as follows:
A. For carbon-molybdenum, manganese-molybdenum and chromium-molybdenum steels: chromium and molybdenum.
B. For nickel steels: nickel.
C. For regular carbon grade stainless steels: chromium, nickel and molybdenum.
D. For low-carbon stainless steels: chromium, nickel, molybdenum and carbon.
E. For stabilized stainless steels: chromium, nickel, molybdenum, titanium and niobium.
F. For nickel-based alloys: nickel, iron, copper, chromium and molybdenum.
G. For copper-based alloys: copper, zinc and other elements.

6.3 Testing Requirements
A. The subcontractor shall ensure that the required PMI program has been carried out at any off-site fabrication facilities where applicable. Items requiring PMI that were not tested before shipment and receipt on site shall be tested at site.
B. Before performing any PMI inspection on bulk or loose material received at site, the subcontractor shall trace the material to the MMIR and MRR and determine what percentage of the material requires PMI verification based on the project specification.
C. Before performing any PMI inspection at the warehouse or stockyard, the subcontractor's PMI inspector shall visually inspect the material for defects, correct material, manufacturer's markings, heat number verification and code identification markings.
D. If no MMIR was shipped with the alloy welding filler metal, each lot of that alloy filler metal shall be tested. As a minimum, one sample from each lot shall be measured in the chemical mode for compliance and recorded on a PMI report log.
E. The subcontractor shall keep dissimilar alloy materials segregated in accordance with the material management procedure.
F. The quality inspector shall assign NDT personnel to perform PMI testing of alloy materials according to the required percentage and elements.
G. When analyzing welds (butt or groove), the subcontractor's PMI inspector shall, as a minimum, perform a three-point check using the ID mode, covering the base metal, the heat-affected zone and the weld seam. If the base metal has already been identified at the warehouse and documented as such, a two-point check may be performed on the weld and the remaining base metal. The results shall be documented for the two points, and a comment shall be added on the PMI report form for the previously inspected fitting.
H. Both inside and outside weld surfaces shall be tested where accessible. PMI testing of welds shall be done after slag and oxide have been removed from the weld surface.
I. The surface to be analyzed shall be clean bare metal, free of grease or oil, with a surface finish as specified by the instrument manufacturer.

6.4 Inspection Equipment and Instruments
A. A portable optical emission spectrometer may be used to check for all the required elements, including carbon, chromium, molybdenum, nickel, titanium, iron, copper, etc.
B. PMI inspection equipment and instruments shall be calibrated, reconditioned and leak tested by the manufacturer to confirm the continued effectiveness of the instrument's radiation shielding.
C. As with all electrical instruments, care should be taken in handling analyzers. Avoid dropping or striking the instrument on hard surfaces at all costs, unless the safety of the operator is at risk.

6.5 Methods of Testing
A. The instruments and methods used shall be suitable for identifying the material by quantitative measurement of the major alloying elements required in the applicable material specification or welding procedure specification.
B. Check the battery capacity and ensure that the instrument is operating normally.
C. Clean the surface of the material.
D. Test a certified standard steel sample to confirm that the analysis process is working normally and that the analysis data are correct.
E. Select the measurement (radiating) time according to the range of element compositions. It shall be not less than 15 seconds.
F. According to the test piece material and jobsite conditions, select the optimum analysis conditions, such as operating mode, program, analysis time and number of analyses.
G. Analyze and record the results according to the requirements in the instrument instructions.

6.6 Criteria of Acceptance
A. For acceptance, it must be demonstrated that materials contain the amounts of alloying elements shown in the material specification. Alloys shall be acceptable if each alloying element is within the stated tolerance range for the instrument. In no case shall an element be more than 10% below the minimum specified value or more than 10% above the maximum specified value in the material specification.
B. For deposited weld metal between similar base materials using matching consumables, the recorded concentration shall be within the stated tolerance range for the instrument. In no case shall it be more than 12.5% below the minimum specified value or more than 12.5% above the maximum specified value in the material specification.
C. Acceptance criteria for dissimilar metal alloy welds and weld overlays shall be in accordance with the welding consumable specified in the approved welding procedure. The effects of dilution between the different base metals and the filler metal shall be taken into account when determining the nominal as-deposited weld metal composition.
D. If any component, base material or weld is found unacceptable, it shall be replaced, and the replacement shall be alloy-verified in accordance with the relevant standard.
E. All rejected alloy materials, components or welds shall be replaced, and 100% of the replacement alloy materials, components or welds shall be subjected to PMI testing in accordance with the relevant standard.

6.7 Testing Inspection
The responsible inspector shall ensure and verify that alloy materials have been verified by PMI testing as required and that the elements of the alloy steel are within the stated tolerance range.

6.8 Verification Marking
A. After testing, the tester should mark the material with a water-insoluble marker that contains no substances that harmfully affect the metal at ambient or elevated temperatures. In particular, the marking material shall be free of lead, sulfur, zinc, cadmium, mercury, chlorine and other halogens.
B. All verified materials with an acceptable analysis shall be marked with the letters "PMI" using a certified low-stress stamp. The marking shall be placed as follows:
   a. For pipe, one mark 75 mm from one end on the outer surface of the pipe.
   b. For welds, adjacent to the welder's mark on the weld.
   c. For fittings and valves, adjacent to the manufacturer's markings.
   d. For valves, adjacent to the valve manufacturer's markings on bodies and other pressure parts.
   e. For steel plates, 75 mm from one edge, adjacent to the manufacturer's markings.
C. PMI markings shall be transferred when a plate or pipe is cut.
D. All rejected components and welds shall be marked with a red "×".

6.9 Colour Code
Each component that is not permanently marked or tagged according to the applicable material specification shall be colour coded and painted with characters indicating the specification number of the material, as follows:
A. For piping and fittings, each length of pipe and each fitting shall have a stripe 5 mm or wider running its full length. Pipe of one inch and smaller may have a 3 mm stripe running its full length.
B. For valves, when required, a stripe across the body from flange to flange or end to end.
C. For flanges, when required, a stripe across the edge up to the hub.
D. Spiral-wound gaskets shall be colour coded in accordance with ASME B16.20.
E. For bolts, a stripe around the midpoint of each bolt or stud.

6.10 Records and Reports
A. When operators finish PMI testing, they shall keep all records of the testing and submit the report to TR's quality control team.
B. Reports should include the following:
- Weld, base material or area examined
- Filler metal designation or part identification
- Equipment piece or tag number
- Examination procedure (standard), identification and revision number
- Date of examination
- PMI inspector or operator name
- Examination results
- The type of testing performed and the type and model of analyzer used
- Third-party PMI agency name and authentication result

7. ATTACHMENT
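Sections 6.2 and 6.6 together define which elements to check and how far a reading may stray from the specification. The Python sketch below encodes them under three stated assumptions:

- The "10%" and "12.5%" margins are relative to the specified minimum and maximum, so the outer bounds for base metal are 90% of the minimum and 110% of the maximum.
- The instrument's own stated tolerance range is not modeled.
- The dilution-based criteria for dissimilar welds in 6.6 C are not modeled.

The dictionary keys, the function names and the 316L-type limits are illustrative only. Take real limits from the applicable material specification.

```python
from typing import Optional

# Hypothetical encoding of section 6.2: elements to verify per material class.
ELEMENTS_TO_VERIFY: dict[str, tuple[str, ...]] = {
    "C-Mo / Mn-Mo / Cr-Mo steel": ("Cr", "Mo"),
    "nickel steel": ("Ni",),
    "regular carbon grade stainless steel": ("Cr", "Ni", "Mo"),
    "low-carbon stainless steel": ("Cr", "Ni", "Mo", "C"),
    "stabilized stainless steel": ("Cr", "Ni", "Mo", "Ti", "Nb"),
    "nickel-based alloy": ("Ni", "Fe", "Cu", "Cr", "Mo"),
    # 6.2 G also requires "other elements"; add them per the material specification.
    "copper-based alloy": ("Cu", "Zn"),
}

BASE_METAL_MARGIN = 0.10   # 6.6 A: base material
WELD_METAL_MARGIN = 0.125  # 6.6 B: weld metal, similar base metals, matching consumables

# (specified minimum, specified maximum) in wt%; None means no limit on that side.
Limits = tuple[Optional[float], Optional[float]]


def within_outer_limits(measured: float, limits: Limits, margin: float) -> bool:
    """True unless the reading is more than `margin` below the min or above the max."""
    spec_min, spec_max = limits
    if spec_min is not None and measured < spec_min * (1 - margin):
        return False
    if spec_max is not None and measured > spec_max * (1 + margin):
        return False
    return True


def pmi_failures(material_class: str, readings: dict[str, float],
                 spec: dict[str, Limits], margin: float = BASE_METAL_MARGIN) -> list[str]:
    """List required elements that are unmeasured or outside the outer limits (empty = accept)."""
    failures = []
    for element in ELEMENTS_TO_VERIFY[material_class]:
        if element not in readings:
            failures.append(f"{element}: not measured")
        elif not within_outer_limits(readings[element], spec[element], margin):
            failures.append(f"{element}: {readings[element]} wt% outside limits")
    return failures


# Illustrative limits for a 316L-type low-carbon stainless steel.
SPEC_316L: dict[str, Limits] = {
    "Cr": (16.0, 18.0), "Ni": (10.0, 14.0), "Mo": (2.0, 3.0), "C": (None, 0.030),
}

print(pmi_failures("low-carbon stainless steel",
                   {"Cr": 16.9, "Ni": 10.2, "Mo": 1.7, "C": 0.02}, SPEC_316L))
# → ['Mo: 1.7 wt% outside limits']
```

Passing `WELD_METAL_MARGIN` applies the wider 12.5% band of 6.6 B to deposited weld metal. A reading of 1.77 wt% Mo, for example, fails as base metal but passes as matching weld metal.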

Doctoral Research Project Report: A Sample in English

Title: Progress Report on My Doctoral Research Project

Abstract:
This report outlines the progress and findings of my doctoral research project, which explores the intersection of artificial intelligence (AI) and education. Specifically, my study focuses on developing an AI-based adaptive learning system that tailors educational content to individual student needs. This report presents the theoretical framework, methodological approach, key findings, and implications of my research.

Introduction:
The integration of AI in education has the potential to transform teaching and learning. Adaptive learning systems, in particular, can personalize the learning experience by adjusting content, pace, and difficulty based on student performance and preferences. My research aims to contribute to this field by developing an AI-based adaptive learning system that is both effective and efficient.

Theoretical Framework:
My research is grounded in cognitive psychology and educational technology. I draw on theories of cognitive load, differentiated instruction, and personalized learning to guide the development of my adaptive learning system. Specifically, I aim to create a system that:
- minimizes cognitive load by providing relevant and engaging content
- caters to student preferences and learning styles
- adapts to individual differences in cognitive abilities

Methodological Approach:
To achieve these goals, I have employed a mixed-methods approach that combines quantitative and qualitative methodologies. My research design involves the following steps:
1. Literature Review: I conducted a thorough review of existing literature to identify gaps in knowledge and to understand the current state of AI in education.
2. Needs Analysis: I conducted interviews and surveys with educators and students to gather insights into their needs and preferences regarding adaptive learning systems.
3. System Development: Based on the findings of the needs analysis, I developed a prototype of my adaptive learning system using machine learning algorithms and natural language processing techniques.
4. Pilot Study: I conducted a pilot study with a small group of students to test the usability and effectiveness of my system. Quantitative data were collected through pre- and post-tests, surveys, and usage logs. Qualitative data were gathered through interviews and observations.

Key Findings:
Preliminary results from my pilot study are promising. Students reported a higher level of engagement and satisfaction with the adaptive learning system than with traditional methods. Quantitative analysis revealed significant improvements in student performance and a decrease in cognitive load. Qualitative data further supported these findings, with students expressing a preference for the personalized learning experience the system provided.

Implications:
These findings have important implications for both theory and practice. Theoretically, my research contributes to the understanding of how AI can enhance personalized learning and differentiate instruction. Practically, my adaptive learning system has the potential to improve student outcomes and enhance the teaching-learning process. Future research could explore the scalability and sustainability of such systems, as well as their impact on educator roles and practices.

Conclusion:
The progress and findings reported here demonstrate the promise of AI in education, particularly in the development of adaptive learning systems. My work contributes to the field by providing a theoretical and empirical foundation for personalized learning experiences that cater to individual student needs. Future research will further examine the impact and implications of these systems for teaching and learning practices.

The Analysis of Scaffolding Instruction Application in Senior High School English Writing

zwwx@ September 2019

Tel:+86-551-65690811 65690812

The Analysis of Scaffolding Instruction Application in Senior High School English Writing Teaching: Based on Students of Zuoyun High School in Datong

LEI Ye-hua

(Bohai University, Jinzhou 121000, China)

Abstract: This study uses a quantitative method to investigate the relationship between English writing achievement and scaffolding instruction, with 100 students from Zuoyun High School as subjects. The results show that:
1) On the whole, high school students make good use of scaffolding in English writing.
2) The application of scaffolding instruction in high school English writing teaching has a significant positive correlation with English writing performance.
3) On the whole, there is a significant difference in the application of scaffolds in English writing between the high-score and low-score groups of students.

Key words: the application of scaffolding instruction; English writing scores; students in Grade One of high school

CLC number: H319; Document code: A; Article ID: 1009-5039(2019)18-0270-02
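Result 3) reports a significant difference between the high-score and low-score groups, but the abstract does not name the test used. A common choice for two independent groups is an independent-samples t-test. The sketch below is an assumption, not the authors' procedure. It computes Welch's version of the statistic, which does not assume equal group variances, together with the Welch-Satterthwaite degrees of freedom. Turning t into a p-value requires the t distribution, for example through SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)`. The group scores below are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a: list[float], group_b: list[float]) -> tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    Compares the means of two independent samples without assuming equal variances.
    """
    if len(group_a) < 2 or len(group_b) < 2:
        raise ValueError("each group needs at least two observations")
    n_a, n_b = len(group_a), len(group_b)
    # Squared standard error of each mean, from sample variances (n - 1 denominator).
    se2_a = variance(group_a) / n_a
    se2_b = variance(group_b) / n_b
    se2 = se2_a + se2_b
    if se2 == 0:
        raise ValueError("both groups have zero variance")
    t = (mean(group_a) - mean(group_b)) / sqrt(se2)
    df = se2 ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical scaffold-use scores (mean of 1-5 ratings) for two small groups.
high_group = [4.2, 3.9, 4.5, 4.1, 3.8, 4.4]
low_group = [3.1, 3.4, 2.9, 3.6, 3.2, 3.0]
t, df = welch_t(high_group, low_group)
print(f"t = {t:.2f}, df = {df:.1f}")
```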

Quantitative Data Analysis: Questionnaire Design

Professional and Academic Skills (PAS) 2, Week 3: Quantitative Data Analysis: Questionnaire Design and Level of Measurement
Date: 10/02/2020
- Collecting quantitative data using questionnaires
- Questionnaire design
- Pilot questionnaire
- Level of measurement: categorical variables and continuous variables
- Identify the main issues that you need to consider when preparing quantitative data for analysis

Collecting Primary Data Using Questionnaires
- Questionnaire: "is a method/technique of data collection in which each person is asked to respond to same set of questions in a predetermined order" (De Vaus, 2014)
- "It [is] also widely used as an instrument" (Ekinci, 2015)
- It provides an efficient way of collecting responses from a large sample prior to quantitative analysis

Types of Questionnaire (figure; source: Saunders, 2016)

Advantages and Disadvantages of Questionnaires
Advantages:
- Large sample size: responses can be collected relatively quickly
- Cost-effective: a large sample of the population can be contacted at relatively low cost
- Easier analysis and visualisation: closed questions are easier to analyse
- Anonymity: respondents can remain anonymous
- If questionnaires are completed anonymously and in private, personal questions may receive more valid answers
Disadvantages:
- Difficult for respondents to give in-depth reasons behind consumer behaviour
- Unanswered questions: some questions will be ignored and left unanswered
- Dishonest answers: respondents may not be 100% truthful in their answers
- Respondents are under little or no pressure to complete and return the questionnaire
- No flexibility in the questions posed and no supplementary options available

Things to Consider to Produce a Good Questionnaire
- Ensure that it will collect the precise data you require to answer your research question(s) and meet your research objectives
- You are unlikely to have more than one opportunity to collect the data
- The design of your questionnaire will affect the response rate and the reliability of the data you collect (questions should be clear, specific and unambiguous)
- Easy questions at the start and open questions at the end
- Far more closed than open questions
- The types of questions you need to ask to collect your data
- The number of questions you need to ask to collect your data
- Visual presentation of the questionnaire: colourful, artistic appearance

Validity and Reliability
Validity refers to the ability of your questionnaire to measure what you intend to measure, also known as measurement validity.
Reliability refers to the consistency of the research method used: for example, if the same method were used again, would it lead to the same data being collected?
For a questionnaire to be valid, it must be reliable.
The validity and reliability of the data you collect and the response rate you achieve depend largely on:
- the design of your questions
- the structure of your questionnaire
- the rigour of your pilot testing

Factors That Can Affect Reliability
- Participant error
- Participant bias
- Researcher error
- Researcher bias

How Many Questions Should a Questionnaire Have?
- For your MA dissertation, the time taken to complete the questionnaire should not exceed 10 to 12 minutes.
- Effective design of the questions should allow you to include all the questions your final project needs, with a maximum of 15 to 20 questions.

How Many Responses Need to Be Collected?
- A minimum of around 100 for your MA dissertation when using a mono-method design.

Constructing Your Questions: Closed and Open Questions
Open questions, also referred to as open-ended questions, allow respondents to give answers in their own way (Fink, 2013).
Closed questions, also referred to as closed-ended or forced-choice questions, provide a number of alternative answers from which the respondent is instructed to choose.
They are easier and quicker to answer and require minimal writing.
There are six types of closed questions (Saunders, 2016):
- List question: the respondent is offered a list of items, any of which may be selected
- Category question: only one response can be selected from a given set of categories
- Ranking question: the respondent is asked to place things in order
- Rating question: a rating device is used to record responses
- Quantity question: the response is a number giving the amount
- Matrix question: responses to two or more questions are collected using the same grid

Examples (figures): rating/Likert scale question; category question; quantity question
Semantic differential rating question: a series of bipolar adjectives with spaces between them in which respondents record their views or feelings
Examples (figures): ranking question; matrix question

Constructing the Questionnaire
Explaining the purpose of the questionnaire:
- Covering letter or welcome screen: self-completed questionnaires should be accompanied by a covering letter, email, text or SMS message that explains the purpose of the survey
- Introducing the questionnaire: explain clearly and concisely why you want respondents to complete the survey
Example of an introduction for an interviewer-completed questionnaire (figure; source: Lavrakas, 2016)

Closing the Questionnaire
- At the end of the questionnaire, explain clearly what you want respondents to do with their completed questionnaire (example figure; source: Saunders, 2016).

Pilot Testing and Assessing Validity
The purpose of pilot testing is to refine the questionnaire so that respondents will have no problems answering the questions and there will be no problems recording the data.
- It helps you obtain some assessment of the questions' validity and the likely reliability of the data
- It ensures that the data collected will enable your investigative questions to be answered
- For a student questionnaire, the minimum number for a pilot is 10 (Fink, 2013)
To ensure the questionnaire's face validity, as part of your pilot you should try to get additional information about (Bell and Waters, 2014):
- How long the questionnaire took to complete
- The clarity of the instructions
- Which, if any, questions were ambiguous
- Which, if any, questions made respondents feel uneasy
- Whether the layout was clear and attractive
- Any other comments

Internet Questionnaires
For both web and mobile questionnaires:
- It is important to have a clear timetable that identifies the tasks that need to be done
- A good response will depend on the recipient being motivated to answer the questionnaire and send it back
- The email or SMS message and the visual appearance will help to ensure a high level of response
- The questionnaire design must be clear across all display media

Data Collection: What to Measure
If you intend to undertake quantitative analysis, you should consider:
- The number of cases of data (sample size)
- The type or types of data (scale of measurement)
- The data layout and format required by the analysis software
- The impact of data coding on subsequent analysis (for different types of data)
- Processing the data and checking it for errors (e.g., out-of-range values)
When you collect data, you need to decide on two things:
- What to measure
- How to measure

What to Measure
- Usually we collect several measures on each person or thing of interest
- Each thing we collect data about is called an observation
- Each observation can be a person, an organisation, a product or a period in time
Variables commonly collected include:
- Factual or demographic variables: age, gender, education, occupation, income
- Attitude or opinion variables: record how respondents feel about something, or what they think is true or false
- Behaviour or event variables: contain data about what people did or what happened in the past, what is happening now, or what will happen in the future

Data Collection: What to Measure (continued)
Each row is an observation and each column is a variable:

| Observation | Age | Sex | Income | Brand preference |
|---|---|---|---|---|
| 1 | 20 | Female | 10,000 | Zara |
| 2 | 30 | Female | 35,000 | Cartier |
| 3 | 25 | Male | 25,000 | Superdry |

Level of Measurement
Level of measurement is the relationship between what is being measured and the numbers that represent what is being measured. Variables can take many forms and levels. Quantitative data can be divided into two distinct groups:
- Categorical variables
  - Descriptive or nominal data
  - Ranked data
- Numerical variables
  - Continuous data
  - Discrete data

Categorical Data
Categorical data are data whose values cannot be measured numerically but can be classified into sets (categories) according to the characteristics that identify or describe the variable, or placed in rank order (Brown and Saunders, 2008). Examples include species (human, domestic cat, fruit bat), race, sex, age group and educational level.
- Descriptive/nominal data: these data simply count the number of occurrences in each category of a variable (e.g., a car manufacturer may categorise types of car as hatchback, saloon and estate).
- Binary/dichotomous variable: a sub-type of nominal scale with only two categories. It names two distinct types of things, such as male or female, dead or alive, yes or no.

Categorical Data (continued)
- Ranked/ordinal variable: a more precise form of categorical data. When categories are ordered, the variable is known as an ordinal variable.
- Ordinal variables tell us what happened and the order in which things occurred.
- However, these data do not tell us the differences between the values/points on a scale.
Example 1: beauty pageant winners: first, second and third
Example 2: satisfaction level: 1 = Very unsatisfied, 2 = Unsatisfied, 3 = Somewhat satisfied, 4 = Satisfied, 5 = Very satisfied
Examples of nominal and ordinal data (figure)

Numerical Variables
- Numerical variables are those whose values are measured or counted numerically as quantities (Brown and Saunders, 2008).
- These data are more precise than categorical data, as you can assign each data value a position on a numerical scale.
Types of numerical variable:
- Continuous variable: a variable that can take infinitely many, uncountable values within a range, e.g. time, a person's weight, age
- Interval data: for data to be interval, we must be certain that equal distances on the scale represent equal differences in the property being measured (e.g., average daytime temperature during summer in London: 60 to 70 degrees Fahrenheit, 80 to 90...)

Numerical Data (continued)
- Ratio data: a ratio variable has all the properties of an interval variable and also has an absolute (true) zero.
- Discrete variable: can take only certain values, usually whole numbers on the scale. Examples: rating your confidence level on a 5-point scale; number of customers: 12; number of shops: 10; number of friends: 8; stress level on a 0 to 10 scale

Seminar Activity
Activity 1: Demonstrate your choice of the data collection method(s) that you plan to adopt in your study.
Activity 2: In small groups, discuss the different types of questionnaire and which one you are likely to use.
- What are the main attributes of questionnaires discussed in this presentation?
- Which scales of measurement are you likely to use in your questionnaire? Provide at least three examples of rating scale questions.
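The slides list data coding and checks for out-of-range values among the preparation steps but do not show either. The Python sketch below rests on three assumptions:

- The five satisfaction labels are ordered from Very unsatisfied (1) to Very satisfied (5).
- Any answer not on the scale is treated as missing.
- The 0 to 10 stress scale is the valid range.

The names `SATISFACTION_CODES`, `code_response` and `out_of_range` are hypothetical. Because ordinal codes say nothing about the distance between points, the sketch summarises them with the median rather than the mean.

```python
from statistics import median
from typing import Optional

# Ordinal coding for the 5-point satisfaction scale (1 = lowest, 5 = highest).
SATISFACTION_CODES = {
    "very unsatisfied": 1,
    "unsatisfied": 2,
    "somewhat satisfied": 3,
    "satisfied": 4,
    "very satisfied": 5,
}


def code_response(label: str) -> Optional[int]:
    """Return the ordinal code for a label, or None (missing) if it is not on the scale."""
    return SATISFACTION_CODES.get(label.strip().lower())


def out_of_range(values: list[Optional[float]], low: float, high: float) -> list[tuple[int, float]]:
    """Return (row index, value) pairs outside [low, high]; missing values (None) are skipped."""
    return [(i, v) for i, v in enumerate(values)
            if v is not None and not (low <= v <= high)]


# Hypothetical raw answers, including one that is not on the scale.
raw = ["Satisfied", "Very satisfied", "unsure", "Unsatisfied", "Satisfied"]
codes = [code_response(r) for r in raw]
valid = [c for c in codes if c is not None]
print(codes)          # [4, 5, None, 2, 4]
print(median(valid))  # 4.0

# Stress level recorded on a 0 to 10 scale: flag entry errors such as 11 or -1.
stress = [3, 11, None, 0, 7, -1]
print(out_of_range(stress, 0, 10))  # [(1, 11), (5, -1)]
```

Flagged rows should be checked against the original questionnaires rather than silently deleted. A value of 11 may be a typing error for 1 or for 10.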


Is Inquiry Possible in Light of Accountability?: A Quantitative Comparison of the Relative Effectiveness of Guided Inquiry and Verification Laboratory Instruction

MARGARET R. BLANCHARD, Department of Mathematics, Science and Technology Education, College of Education, North Carolina State University, Raleigh, NC 27695, USA
SHERRY A. SOUTHERLAND, School of Teacher Education, College of Education, Florida State University, Tallahassee, FL 32306, USA
JASON W. OSBORNE, Department of Curriculum and Instruction and Counselor Education, College of Education, North Carolina State University, Raleigh, NC 27695, USA
VICTOR D. SAMPSON, School of Teacher Education, College of Education, Florida State University, Tallahassee, FL 32306, USA
LEONARD A. ANNETTA, Department of Mathematics, Science and Technology Education, College of Education, North Carolina State University, Raleigh, NC 27695, USA
ELLEN M. GRANGER, Department of Biological Science, College of Arts and Sciences, Florida State University, Tallahassee, FL 32306, USA

Received 4 July 2008; revised 20 November 2009, 18 December 2009; accepted 4 January 2010
DOI 10.1002/sce.20390
Published online 11 March 2010 in Wiley InterScience.
© 2010 Wiley Periodicals, Inc.

ABSTRACT: In this quantitative study, we compare the efficacy of Level 2, guided inquiry-based instruction to more traditional, verification laboratory instruction in supporting student performance on a standardized measure of knowledge of content, procedure, and nature of science. Our sample included 1,700 students placed in the classrooms of 12 middle school and 12 high school science teachers. The instruction for both groups included a week-long, laboratory-based, forensics unit. Students were given pre-, post-, and delayed posttests, the results of which were analyzed through a Hierarchical Linear Model (HLM) using students' scores, teacher, level of school, Reformed Teaching Observation Protocol (RTOP) scores, and school socioeconomic status. Overall, compared to students in traditional sections, students who participated in an inquiry-based
laboratory unit showed significantly higher posttest scores; had higher scores, more growth, and long-term retention at both the high school and middle school levels if their teacher had stronger implementation of inquiry methods (as measured by RTOP scores); and tended to have better outcomes than those who learned through traditional methods, regardless of the level of poverty in the school. Our findings suggest that Level 2 inquiry can be an effective teaching approach to support student learning as measured through standardized assessments.

Sci Ed 94:577–616, 2010

INTRODUCTION

Overwhelmingly, the states' responses to No Child Left Behind (NCLB) legislation have been to develop and use standardized summative assessments to document student achievement and to evaluate teaching effectiveness (Jones, Jones, & Hargrove, 2003). Such emphasis on standardized testing is but one part of a national movement toward accountability that was spurred, in part, by the 1999 Third International Mathematics and Science Study (TIMSS), in which U.S. students were found to lag behind in science and mathematics test scores when compared to students in other developed countries, many of which had high-stakes national tests (Abrams, 2007). While the American Association for the Advancement of Science (AAAS) and the National Research Council (NRC) have developed guidelines for what ought to be taught in science classrooms, all 50 states have developed state curricula that have varying degrees of resemblance to these national efforts.
However, most states did not adopt or attempt to emulate the standards for instruction, assessment, and program development that were also included in these national guidelines (Collins, 1998). Most states, in other words, have developed new standards for student achievement and implemented a standardized statewide summative assessment to ensure that all students will reach these benchmarks. They do not, however, focus on changing the nature of classroom instruction or on how assessment can be used to foster learning as part of this process. Many states also use a high-stakes assessment based on state standards, and student performance levels on these examinations, as a way to evaluate schools and/or to determine funding. In rapid response to this relationship, many teachers began shaping their instruction to "teach to the test" (Barksdale-Ladd & Thomas, 2000; Hamilton, Stecher, & Klein, 2002; Passman, 2001; Whitford & Jones, 2000; Yore et al., 2008).

Correspondence to: Margaret R. Blanchard; e-mail: Meg Blanchard@
Contract grant sponsor: Multi-University Reading, Mathematics and Science Initiative (MURMSI), from a grant awarded by the U.S. Department of Education to the Learning Systems Institute, Office of the Provost, Florida State University (FY04 award number U215K040242). MURMSI is a statewide, multiyear research and development initiative designed to measurably improve teaching and learning in reading, mathematics, and science in Florida's K-12 schools, with a special emphasis on students considered at risk due to economic or other conditions.

According to Shaver, Cuevas, Lee, and Avalos (2007) and Pringle and Carrier Martin (2005), this push for accountability encourages teachers to adopt teaching practices that they perceive as the most effective for "raising test scores" rather than practices that focus on student understanding. Indeed, many teachers indicate that the high-stakes nature of standardized summative assessments impacts the quality of their
science teaching (Saka, Southerland, & Brooks, 2009; Settlage & Meadows, 2002; Shaver et al., 2007; Southerland, Abrams, & Hutner, 2008; Wideen, O'Shea, Pye, & Ivany, 1997). As DeBoer (2002) writes,

There is considerable evidence that, although well-intentioned, standards-based education has created impediments to student-centered teaching and learning while at the same time it has reduced the autonomy and creativity of classroom teachers. (p. 415)

Ironically, the negative impact of high-stakes summative assessment on teaching practices may be greater in high-poverty districts. Jones et al. (2003) found that teachers at lower-performing and/or higher-poverty schools were more likely to change their practices in response to high-stakes testing. The reason is that teachers and principals at these schools need to raise achievement scores rapidly in response to the increased scrutiny of county and state officials or the public. Compounding the situation, socioeconomic status (SES), race, and ethnicity are associated with educational achievement (Hurn, 1992). Several studies indicate that schools with high minority populations and schools with high poverty levels are more likely to employ test preparation practices (Firestone, Monfils, & Camilli, 2001; Rothman, 1996; Soloman, 1998). Perrault (2000), for example, found that teacher-centered instructional approaches that focus on basic skill development are often reinforced at lower-performing schools. Jones and Johnston (2002) suggest that these approaches are common at lower-performing schools because they can be an effective way to prepare students for high-stakes assessment. Yet, as Amrein and Berliner (2002) point out, although high-stakes tests may improve test scores in the short term, they do little to improve student learning. Kohn (2000) and Hilliard (2000) echo this concern and assert that high-stakes tests undermine true school reform (as cited in Pringle & Carrier Martin, 2005).
Although current research indicates that assessment needs to play a central role in the teaching and learning of science (NRC, 1996), not all assessments in education should be summative in nature. Assessments can also be formative, evaluative, or educative. Formative assessments are used to shape the nature of instruction to better meet the needs of students (Black & Wiliam, 1998). Evaluative assessments, by contrast, are used to determine what students have learned during a unit or a lesson. They support judgments about the effectiveness of the instruction so that it can be improved in the future. Finally, educative assessments, such as the feedback students receive from a teacher, are used to foster learning or improve performance (Wiggins, 1998). Many argue that educators need to use all four types of assessment consistently to improve the teaching and learning of science (Gallager, 2006).

The notion that assessment can play a role in shaping instruction and fostering student learning, however, is very distinct from the intent of summative, statewide, standardized assessments. These assessments, which are used as a measure of student achievement, are often used as a means of holding school districts, administrators, and the teachers who work within those districts accountable. As noted earlier, the scores from these assessments are commonly used to rank or evaluate schools and to inform decisions about funding. In many U.S. states, these statewide assessments are used as a deciding factor in student promotion. The primary goal of these assessments, in other words, is not to shape student learning or the nature of instruction directly. Instead, they are part of a system of incentives and consequences. Schools whose children score well and show continued growth on these measures are rewarded, whereas schools whose children fail to do well or to show adequate progress in achievement will lose funding. This system is designed to serve as a strong message
to change or improve the quality of teaching and learning, so that children in those schools will show improvement on those measures.

Concurrent with the movement toward high-stakes testing at the district and school level is the promotion of inquiry-based science teaching by science educators. National reform documents promote inquiry-based instruction as an effective way to help students learn science content, comprehend the nature of scientific inquiry, and understand how to engage in the inquiry process (AAAS, 1993, 2000; NRC, 1996, 2000). Inquiry-based instruction also serves as the basis for the pedagogical standards proposed in these documents (Collins, 1998). This literature promotes the use of inquiry-based instruction inside the classroom, arguing that it is better aligned with how people learn. On this view, it should give more students, and a greater diversity of students, a better understanding of scientific content and processes. In seeming juxtaposition to the accountability movement, which focuses on measuring achievement but not instruction, these reform documents indicate that teachers need to spend more time using inquiry-based instructional strategies in problem-solving contexts and less time in didactic presentations of facts (Gess-Newsome, Southerland, Johnston, & Woodbury, 2003). Many science teachers and administrators, however, believe that inquiry-based instruction is not possible given the current accountability movement, arguing that this type of instruction will not lead to higher test scores (Jones et al., 2003). Some science educators suggest that inquiry-based instruction often results in a better understanding of the content. They also argue that the choice between teaching to raise test scores and teaching with inquiry-based instruction is a false dichotomy (Shymansky, Hedges, & Woodworth, 1990). However, the empirical support for this claim is weak. The goal of this study, therefore, is to take a critical look at the benefits of using inquiry in the classroom, focusing on the central question: If teachers employ
effective inquiry-based methods of instruction, is the learning of science content and process sacrificed or enhanced? To answer this question, we developed a forensics unit designed to be of high interest to all students. In the unit, students' laboratory findings could implicate characters in "foul play," much as in popular crime programs. We then developed an assessment that reflected the nature and format of the summative standardized assessment given to schools by the state to meet legislative mandates. We used it to compare student learning in middle schools and high schools with low, middle, and high SES under two instructional methods: traditional verification laboratory instruction and guided (Level 2) inquiry-based laboratory instruction (Settlage & Southerland, 2007). We were cognizant of the strong potential for differing results based on SES and of the critical role of the teacher, and the data were nested. For these reasons, we gathered data on the percentage of students who received free or reduced-price lunch at each school and collected video data to capture the nature of each teacher's enactment of the lessons. We then employed hierarchical linear modeling (HLM) to examine change in student scores over time. This analysis enabled us to model these data appropriately while taking higher-level variables, such as school poverty and teacher implementation, into account (Raudenbush & Bryk, 2002).

REVIEW OF THE LITERATURE

Discussions of the effectiveness of inquiry-based science teaching are rich but inconclusive. The results of some studies indicate that inquiry-based instruction is less effective than direct instruction (e.g., Klahr & Nigam, 2004). A much larger body of research, however, suggests that student learning with inquiry-based instruction is greater than or equal to the learning that results from other approaches (see Colburn, 2000a). These conflicting findings seem to stem from the various ways the term "inquiry" has
been defined by researchers in various investigations. Given this confusion, we will first outline a comprehensive definition of inquiry-based instruction that we have used to frame our research. We will then use this definition to discuss the major findings of this literature.

A Comprehensive Definition of Inquiry

There is much confusion in general about what constitutes inquiry and its role in science teaching (Abrams, Southerland, & Evans, 2007). Anderson (2007, p. 808) explains that "if [inquiry] is to continue to be useful we will have to press for clarity when the word enters a conversation and not assume we know the intended meaning." Abrams et al. (2007) suggest that to guide a useful discussion of classroom inquiry, one must describe both the goal one has for the inquiry and the instructional approach one uses to engage students in inquiry. Educators typically employ inquiry in the classroom (as opposed to scientific inquiry) in the service of one or more of the following goals: (1) understanding how scientific inquiry proceeds and how that shapes the knowledge it produces (learning about the nature of science (NOS) and scientific inquiry), (2) being able to perform some semblance of scientific inquiry successfully (learning to inquire), and (3) constructing an understanding of the knowledge of science (learning scientific knowledge; NRC, 2000). Once the primary goal or goals for the classroom inquiry are decided, it is useful to describe the instructional approach used. Useful descriptions of inquiry come from Schwab (1962) and Colburn (2000b), who focus on three key activities: asking questions, collecting data, and interpreting those data (see Table 1). The degree of inquiry depends on who is responsible for each activity. Settlage and Southerland (2007) explain,

In Level 0 inquiry, the teacher provides the students with the question to be investigated and the methods of gathering data. The conclusions are not immediately obvious to the students during the activities, but the teacher is there to guide them
toward an expected conclusion. Despite any variety in the students' data, the teacher will help them to interpret those so everyone understands the importance of the results.

In Level 1 or "structured" inquiry, students are provided with a question and a method but are responsible for interpreting the result. In Level 2 or "guided" inquiry, students are responsible for determining both the method of investigation and how to interpret the results. In Level 3 or "open" inquiry, students generate the question as well, and therefore take responsibility for all major aspects of the investigation. Under this framework, any science investigation is a form of inquiry, albeit at differing levels, and this includes traditional (verification) laboratory instruction.

TABLE 1
Levels of Inquiry (Abrams et al., 2007), adapted from Schwab (1962) and Colburn (2000b)

| Level | Source of the Question | Data Collection Methods | Interpretation of Results |
|---|---|---|---|
| Level 0: Verification | Given by teacher | Given by teacher | Given by teacher |
| Level 1: Structured | Given by teacher | Given by teacher | Open to student |
| Level 2: Guided | Given by teacher | Open to student | Open to student |
| Level 3: Open | Open to student | Open to student | Open to student |

Therefore, within this framework, even "cookbook" laboratories are a form of inquiry (i.e., Level 0 or "Verification"). We use these labels mindful of the fact that in dynamic classrooms, these designations may not always be clear-cut. Teachers often need to help students generate questions or research designs that might be fruitful, or to answer the question the student is trying to answer, even when the initial intention was student autonomy in those tasks (Blanchard, 2006). Hmelo-Silver, Duncan, and Chinn (2007) describe these steps as key to helping students make sense of these complex tasks and thus to enhancing learning. By these steps, they mean the ways in which teachers attempt to provide scaffolding. It is important to recognize, however, that there is no optimal form of inquiry that extends across all
content or context. Instead, the optimal level of inquiry is shaped by the goal a teacher has for inquiry, the characteristics of her teaching context, the skill level of the students, and the materials available. Thus, we must move away from viewing or describing Level 3 inquiry or "open inquiry" as the "ideal" way to teach science (Settlage, 2007). Instead, the optimal level of inquiry will vary according to the classroom context and the demands of the material.

Inquiry-Based Instruction Found Less Effective

A study by Klahr and Nigam (2004) is often cited as evidence that inquiry-based instruction is less effective than direct instruction. In this study, Klahr and Nigam compared the impact of direct instruction and discovery learning using a sample of 112 third- and fourth-grade students. The authors tried to magnify the differences between the two instructional methods. Students in the discovery learning group received no teacher intervention beyond the suggestion of a learning goal. In the direct instruction group, by contrast, the materials, goals, examples, explanations, and pace were all teacher controlled. On Day 1 of the study, students learned an assessment technique (called CVS); one week later, they assessed posters using these techniques. The researchers found that more students in the direct instruction group than in the discovery learning group mastered the CVS technique. It is important to note, however, that the discovery method employed in this research was carried out with no teacher intervention.

Kirschner, Sweller, and Clark (2006) also argue that inquiry-based instruction is less effective than more guided forms of instruction. These authors lump inquiry, discovery learning, problem-based learning, and experiential learning approaches together in their article and then characterize them as minimally guided forms of instruction. Kirschner, Sweller, and Clark suggest that all of these methods are "pedagogically equivalent approaches [that] include science instruction in which students are placed in inquiry learning contexts and asked to discover the fundamental and well-known principles of science by modeling the investigatory activities of researchers" (pp. 75–76, italics added). They also suggest that these "approaches ignore both the structure that constitute human cognitive architecture and evidence from empirical studies over the last decade that consistently indicate that minimally guided instruction is less effective and less efficient than instructional approaches that place a strong emphasis on guidance in student learning" (p. 75). The point they stress in this article is that teacher guidance plays an important role in student learning. Methods that do not provide students with the guidance they need are therefore likely to be ineffective.

The discovery approach of Klahr and Nigam (2004) and the inquiry described by Kirschner et al. (2006) seem most closely to resemble Level 3 or open inquiry (Settlage & Southerland, 2007). Open inquiries typically require prior experience with inquiry as well as some prior knowledge and skills. They are therefore appropriate only for teaching and learning certain types of content (Abrams et al., 2007). The National Science Education Standards (NSES) describe inquiry in terms of five essential features. They suggest that "the type and amount of structure [by the teacher] can vary depending on what is needed to keep students productively engaged in pursuit of a learning outcome" and therefore "what the nature of that inquiry should be" (NRC, 2000, p. 135). In addition, the direct instruction group of Klahr and Nigam (2004) was told the result of the investigation. This is atypical of most laboratory settings, in which the final outcome is left to the student to discover and then often confirmed by the teacher afterward (Colburn, 2000b).
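Table 1 amounts to a lookup from who controls each of the three key activities (question, data collection methods, interpretation) to a level of inquiry. The minimal Python sketch below encodes that lookup. The names `TEACHER`, `STUDENT`, `LEVELS`, and `inquiry_level` are hypothetical and do not come from the paper. The lookup returns `None` for any combination that is not one of the four table rows, which fits the authors' caution that real classroom designations are not always clear-cut.

```python
# Hypothetical encoding of Table 1 (Levels of Inquiry, Abrams et al., 2007).
TEACHER = "teacher"   # "Given by teacher"
STUDENT = "student"   # "Open to student"

# Key: who controls (question, data collection methods, interpretation of results).
LEVELS = {
    (TEACHER, TEACHER, TEACHER): "Level 0: Verification",
    (TEACHER, TEACHER, STUDENT): "Level 1: Structured",
    (TEACHER, STUDENT, STUDENT): "Level 2: Guided",
    (STUDENT, STUDENT, STUDENT): "Level 3: Open",
}

def inquiry_level(question, methods, interpretation):
    """Return the Table 1 level, or None for a combination the table does not list."""
    return LEVELS.get((question, methods, interpretation))

if __name__ == "__main__":
    # The study's treatment condition: the teacher poses the question,
    # and students choose the method and interpret the results.
    print(inquiry_level(TEACHER, STUDENT, STUDENT))
    # A "cookbook" laboratory in which the teacher supplies everything.
    print(inquiry_level(TEACHER, TEACHER, TEACHER))
```

Leaving unlisted combinations unclassified, rather than forcing them into the nearest level, keeps the sketch faithful to the four rows the table actually defines.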
Thus, Klahr and Nigam's small-scale, short-term study with elementary students used a model of discovery that lacked the teacher interaction recommended by Hmelo-Silver et al. (2007), by the NSES (NRC, 2000), and by science education researchers working in classrooms with teachers attempting inquiry-based instructional methods (e.g., Blanchard, Southerland, & Granger, 2009; Crawford, 2007). Klahr and Nigam's study also used students' judgment of posters as the outcome measure, not students' ability to show gains on standardized tests.

Inquiry-Based Instruction Found to be Equivalent or More Effective

Colburn (2000a), arguably a proponent of inquiry-based instructional methods, reviewed many studies that demonstrate greater or equal student learning with inquiry-based instruction. The studies included in this review cover a broad time span (1960s–1990s), and they differ by grade level, level of inquiry, amount of teacher support, and use of the laboratory. Colburn writes,

Most studies I examined supported the collective conclusion that inquiry-based instruction was equal or superior to other instructional modes for students producing higher test scores on content achievement tests... These studies generally concluded inquiry was beneficial for achieving one or more non-content goals, while simultaneously not hurting students' content achievement. (p. 3)

In one such study, Leonard (1983) compared a Biological Sciences Curriculum Study in a college biology laboratory to a well-established commercial program that was highly structured. On a posttest, the students who had experienced the inquiry-based approach scored significantly higher than students who experienced a more traditional verification method. Similar studies (Hall & McCurdy, 1990; Leonard, Cavana, & Lowery, 1981) used straightforward designs to look at achievement in high school and college classrooms. They found that students achieved similar or higher test scores when taught using guided or Level 2 inquiry. These studies, however, did not
study achievement at the middle school level and did not account for any additional factors, such as student SES.

In a follow-up to the study by Klahr and Nigam (2004), Dean and Kuhn (2006) focused on students of the same age as those studied by Klahr and Nigam but followed them over 10 weeks. Three groups of fourth-grade students (15 students in each group) of diverse socioeconomic status worked on problems that required them to control variables to reach an effective solution for forecasting an earthquake. The first group conducted only the discovery (Level 3 or open inquiry) work. A second group received direct instruction on concepts before conducting the earthquake forecasting investigation. The third group received only the direct instruction, without the engagement and practice. Dean and Kuhn found that direct instruction was neither necessary nor sufficient for students to acquire and maintain the knowledge, as measured on an immediate posttest and on a second test given 5 weeks later. The researchers were not concerned with efficiency, and they did not control for the amount of time students spent on the tasks. Indeed, students in the discovery conditions spent more "time on task" than those in the direct-instruction-only group. This feature makes the study methodologically suboptimal. It may also point to a pedagogically valuable aspect of inquiry methods: students may spend more time on task overall (and be more engaged), rather than spending a significant portion of lesson time off task and unengaged.

A number of studies report gains in student learning under three conditions: when multiple approaches to science instruction, one of which is inquiry, are used with participants; when teachers receive extensive professional development; and when engagement lasts a prolonged period of time. In one such study, Marx et al. (2004) worked with middle school teachers, students, and district personnel in an inner-city environment in Detroit, Michigan. This large-scale, 3-year program engaged
approximately 8,000 students in inquiry-based and technology-infused curriculum units that were collaboratively developed by district personnel and staff. Results showed statistically significant gains on students' posttests, and the strength of the effects grew over the 3 years of the study. The authors describe the effort as an example of how students who historically have been low achievers in science can succeed in inquiry-based and standards-linked science when there is careful development and alignment with district policies and personnel. This study is an excellent example of a promising professional development model coupled with inquiry-based science. However, it is not a comparative, controlled study of the effects of inquiry-based instruction versus didactic instruction.

In a follow-up to this study, Geier et al. (2008) examined the impact of these units on student performance on the high-stakes state standardized test in science. Geier et al. compared the performance of two cohorts of seventh and eighth graders who participated in the project with that of the remainder of the district population. Their analysis indicates that the cohorts of students who participated in the project had significantly higher scores and pass rates on the statewide test. It is important to note, however, that the pooled comparison used in this study did not constitute a true control study. Many of the students in the district also experienced other science improvement efforts, and some received no intervention at all. Thus, the results of this study, although informative, cannot be considered definitive.

Inconclusive Findings Regarding Inquiry in the Classroom

Another recent study had inconclusive findings about student learning with inquiry-based instruction versus other methods. N. Lederman, Lederman, Wickman, and Lager-Nyqvist (2007) conducted a study with three Grade 8 teachers in Chicago and three Grade 6–7 teachers in Stockholm, all of whom participated
in 2 weeks of professional development. Each day, the teachers taught two 2-week-long science units using three methods, each with a different class period: guided (Level 2) inquiry-based instruction, direct instruction, and a hybrid method between inquiry and direct instruction. Approximately 500 students participated in the project. Trained observers observed randomly selected lessons to verify the validity of the three instructional treatments. No significant differences were found in students' posttest scores on subject matter or on attitudes toward science. In a follow-up, the authors replicated the study with the same group of teachers and had similar findings: there were no significant differences on posttests based on teaching method (J. S. Lederman, Lederman, & Wickman, 2008). The J. S. Lederman et al. findings refute the work of Chen and Klahr (1999) and Klahr (2000), but they are consistent with those of Von Secker and Lissitz (1999), Von Secker (2002), Shymansky et al. (1990), and Shymansky, Kyle, and Alport (1983). The Lederman et al. studies employed only six teachers, all at the middle school level. Each of them implemented three different instructional strategies on the same day with different students.
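The abstract and introduction describe a hierarchical linear model in which students are nested within teachers' classrooms and teacher-level variables such as RTOP scores and school poverty are taken into account. This excerpt does not give the authors' exact specification. What follows is therefore only a generic two-level random-intercept sketch in the style of Raudenbush and Bryk (2002), and every symbol in it is an illustrative assumption. It treats the pretest as a student-level covariate. It also assumes that the inquiry condition ($\mathrm{Inquiry}_j$), the RTOP score, and the school's percentage of students receiving free or reduced-price lunch ($\mathrm{FRL}_j$) enter at the classroom level.

```latex
\begin{aligned}
\text{Level 1 (student } i \text{ in classroom } j\text{):}\quad
  & Y_{ij} = \beta_{0j} + \beta_{1j}\,\mathrm{Pre}_{ij} + r_{ij},
  && r_{ij} \sim N(0, \sigma^{2}) \\
\text{Level 2 (classroom } j\text{):}\quad
  & \beta_{0j} = \gamma_{00} + \gamma_{01}\,\mathrm{Inquiry}_{j}
      + \gamma_{02}\,\mathrm{RTOP}_{j} + \gamma_{03}\,\mathrm{FRL}_{j} + u_{0j},
  && u_{0j} \sim N(0, \tau_{00}) \\
  & \beta_{1j} = \gamma_{10} \\
\text{Intraclass correlation:}\quad
  & \rho = \frac{\tau_{00}}{\tau_{00} + \sigma^{2}}
\end{aligned}
```

In this sketch, $\gamma_{01}$ is the adjusted difference between inquiry and verification classrooms, and $\rho$ is the share of outcome variance that lies between classrooms. A nonzero $\rho$ is why ignoring the nesting would understate standard errors. Modeling growth across the pre-, post-, and delayed posttests, as the study describes, would add measurement occasion as a further level.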

Analytical Chemistry (edited by Huang Chengzhi), Chapter 1: Introduction

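Chapter 1 Introduction

We know that the world is material and that the material world is constantly moving, developing, and changing.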

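However, many questions about the material world are like a black box, and people have been exploring them since ancient times. What is it made of? How much of each component does it contain? How do the components relate to one another, and how do they change over time?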

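The simplest way to understand a black box is to split it open, or to drill a small hole in it and look inside with the naked eye or a probe.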

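It is precisely through such methods that people have gradually come to understand the objective material world on which we depend. In doing so, they have developed scientific methods and theories for understanding that world and invented a wide range of practical technologies.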

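To research and develop drugs, workers in pharmacy and pharmaceutical science need to master the scientific methods, scientific theories, and practical techniques related to disease. Among these, analytical chemistry supplies the methods and techniques for determining the composition and content of drugs, and every pharmaceutical scientist must master it.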

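1.1 What Is Analytical Chemistry

1.1.1 Definition of Analytical Chemistry

Analytical chemistry is the science of the quality and quantity of matter. It is the methodology and technology of obtaining information, by chemical, physical, or other means, on what a substance is, how much of it is present, and what its structure and form (speciation) are.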

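It deals with fundamental issues in the general chemical measurement process, such as the relevant concepts, terminology, general principles, and experimental techniques. It also requires evaluating the correctness (trueness) and accuracy of the methods it establishes.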

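The American Chemical Society (ACS) regards analytical chemistry as the science of obtaining, processing, and communicating information about the composition and structure of matter, that is, the art and science of determining what matter is and how much of it exists.¹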

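The International Union of Pure and Applied Chemistry (IUPAC), in turn, defines analytical chemistry as "a scientific discipline that develops and applies methods, instruments, and strategies to obtain information on the composition and nature of matter in space and time, as well as on the value of these measurements, i.e., their uncertainty, validation, and/or traceability to fundamental standards."²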

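The fundamental task of analytical chemistry is to reveal the truth about the composition, content, structure, and form of the material world.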

Put simply, analytical chemistry is the discipline of measuring matter. It can answer questions about a sample such as "What is it?" (qualitative analysis), "How much is there?" (quantitative analysis), "What is its structure?" (structural analysis), and "In what form does it occur?" (speciation analysis).

¹ Analytical chemistry is the science of obtaining, processing, and communicating information about the composition and structure of matter. In other words, it is the art and science of determining what matter is and how much of it exists.
² Analytical chemistry is a scientific discipline that develops and applies methods, instruments, and strategies to obtain information on the composition and nature of matter in space and time, as well as on the value of these measurements, i.e., their uncertainty, validation, and/or traceability to fundamental standards.

Mouse Insulin Kit Instructions (1)

Mouse Insulin Quantitative ELISA Kit

This kit is for research use only.

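It is intended for the in vitro quantitative determination of insulin concentration in mouse serum, plasma, or cell culture supernatant.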

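Before use, please read these instructions carefully and check that all reagent components are present. If you have any questions, please contact Shanghai Qiaoyi Biotechnology Co., Ltd. (上海巧伊生物科技有限公司), and we will help as far as we can.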

For other requirements, please visit the Shanghai Qiaoyi Biotechnology Co., Ltd. website or call the company.

About insulin: Insulin is one of the most important hormones in glucose metabolism.

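The β cells of the pancreatic islets produce an insulin precursor protein (proinsulin), which is processed into C-peptide and insulin.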

The two enter the circulation in equimolar amounts.

Mature insulin consists of two chains, A and B.

The two chains are linked by two disulfide bridges to form a functional insulin molecule.

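Changes in plasma glucose concentration are the main stimulus for insulin production and secretion, and the insulin produced has several metabolic regulatory effects.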

Its most important action is to move glucose from the peripheral blood into the liver, where it is stored (as glycogen).

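Two processes can antagonize the action of insulin: impaired hepatic glycogen formation, and the breakdown of liver glycogen promoted by hormones that raise blood glucose, such as glucagon, epinephrine, growth hormone, and cortisol.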

Assay principle: This kit uses a double-antibody sandwich ELISA to measure the insulin concentration in samples.

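An insulin capture antibody is pre-coated on the microplate. When samples or standards are added, the insulin they contain binds to the capture antibody, and other unbound components are removed by washing.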

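When the HRP-conjugated anti-mouse insulin antibody is added, it binds to the captured insulin, forming a sandwich immune complex. Other unbound components are again removed by washing.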

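Finally, the chromogenic substrate is added. If insulin is present in the sample, immune complexes will have formed, and horseradish peroxidase (HRP) catalyzes the oxidation of the colorless substrate to a blue product, which turns yellow after the stop solution is added.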

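Read the optical density (OD) at 450 nm with a microplate reader. Insulin concentration is proportional to the OD450 value. Plot a standard curve from the standards, then compare the OD of each unknown sample against the curve to calculate the insulin concentration in the specimen.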
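The final calculation step can be sketched as follows. The text states that insulin concentration is proportional to OD450, so this minimal Python sketch fits a straight line to the standards by ordinary least squares and inverts it to estimate unknown samples. The standard concentrations and OD values are hypothetical, and the units are arbitrary. Many laboratories fit a four-parameter logistic curve to ELISA standards instead, so treat the linear model as an assumption rather than the kit's prescribed method. The refusal to extrapolate beyond the standards, with its "dilute and re-assay" message, is likewise an assumed good practice, not a kit instruction.

```python
# Hypothetical standards: concentrations (arbitrary units) and their OD450 readings.
STANDARD_CONC = [0.0, 2.0, 4.0, 8.0, 16.0]
STANDARD_OD = [0.05, 0.25, 0.45, 0.85, 1.65]

def fit_standard_curve(conc, od):
    """Ordinary least-squares fit of od = slope * conc + intercept."""
    n = len(conc)
    mean_x = sum(conc) / n
    mean_y = sum(od) / n
    sxx = sum((x - mean_x) ** 2 for x in conc)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(conc, od))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def concentration_from_od(od, slope, intercept, od_range=None):
    """Invert the fitted line to estimate a sample's concentration.

    If od_range=(low, high) is given, readings outside the OD span of the
    standards raise ValueError instead of being extrapolated (assumed practice).
    """
    if od_range is not None and not (od_range[0] <= od <= od_range[1]):
        raise ValueError("OD outside the standard range; dilute and re-assay")
    return (od - intercept) / slope

if __name__ == "__main__":
    slope, intercept = fit_standard_curve(STANDARD_CONC, STANDARD_OD)
    od_range = (min(STANDARD_OD), max(STANDARD_OD))
    for sample_od in [0.30, 0.65, 1.20]:          # hypothetical unknowns
        conc = concentration_from_od(sample_od, slope, intercept, od_range)
        print(sample_od, round(conc, 2))
```

In real use, blank-corrected ODs, replicate wells, and any sample dilution factor would also need to be applied; the sketch omits them for brevity.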

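Mouse Insulin Quantitative ELISA Kit components (96T = 96 tests; 48T = 48 tests):

| Component | 96T | 48T |
|---|---|---|
| Mouse insulin pre-coated plate | 12 strips | 6 strips |
| Standard diluent | 10 ml | 5 ml |
| Mouse insulin standard (lyophilized) | 2 vials | 1 vial |
| HRP-conjugated mouse insulin antibody | 10 ml | 5 ml |
| Concentrated wash buffer (20×) | 30 ml | 15 ml |
| TMB substrate | 10 ml | 5 ml |
| Stop solution | 5 ml | 3 ml |
| Plate sealer | 3 sheets | 2 sheets |
| Instructions | 1 copy | 1 copy |

Specimen collection:
1. Collect specimens according to the following procedure:
A. Cell culture supernatant: centrifuge to remove suspended matter; the supernatant can then be used.
B. Serum: allow the blood to clot naturally, then take the supernatant. Do not let the blood clot in a refrigerator.
C. Plasma: EDTA is recommended as the anticoagulant for collection.
D. If samples cannot be tested promptly, aliquot them after collection and store them frozen at -20 °C. Avoid repeated freeze-thaw cycles.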
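The wash buffer is supplied as a 20× concentrate (30 ml in the 96T kit and 15 ml in the 48T kit). This excerpt does not give the dilution procedure. The sketch below assumes the usual reading of "20×", which is one part concentrate plus 19 parts water to give 1× working solution, and applies C1V1 = C2V2. The function names are hypothetical. If the kit's full instructions specify otherwise, follow them.

```python
# Hypothetical helpers; assumes "20x" means 1 part concentrate + 19 parts water.

def concentrate_needed(working_ml, fold=20):
    """Millilitres of concentrate for `working_ml` of 1x solution (C1*V1 = C2*V2)."""
    return working_ml / fold

def water_needed(working_ml, fold=20):
    """Millilitres of water to add to that concentrate to reach `working_ml`."""
    return working_ml - concentrate_needed(working_ml, fold)

def max_working_volume(concentrate_ml, fold=20):
    """Total 1x volume obtainable if the whole bottle of concentrate is diluted."""
    return concentrate_ml * fold

if __name__ == "__main__":
    print(max_working_volume(30))    # 96T kit bottle
    print(max_working_volume(15))    # 48T kit bottle
    print(concentrate_needed(100), water_needed(100))   # one 100 ml batch
```

Under this assumption, the 96T bottle yields 600 ml of working solution and the 48T bottle yields 300 ml. Preparing only what a run needs (for example, 5 ml of concentrate plus 95 ml of water for 100 ml) leaves the rest of the concentrate in stock.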

Specialized English Vocabulary

Sequential prefetch schemes are limited to prefetching sequential blocks and therefore suffer from a performance problem caused by taken branches. To rectify this problem, Kim et al. proposed a scheme called threaded prefetching [8]. In this scheme, each instruction block has an instruction block pointer called a thread, which indicates the instruction block to be prefetched when the processor accesses the block containing it. The thread is updated dynamically during program execution so that it points to the instruction block most likely to be accessed next. Specifically, the thread of a block is set to the block that was accessed after it the last time it was referenced, because by the principle of locality that block is the most likely to be accessed next. In [7], a clairvoyant instruction prefetch scheme was described to study the intrinsic limitations of prefetching, such as upper bounds on prefetch accuracy. The clairvoyant prefetch scheme is based on a post-mortem analysis of program execution. In this scheme, the instruction reference behavior of a block is first encoded as what is called a reference pattern, in which each instruction block is represented by a letter. For example, the first distinct instruction block in the pattern is represented by a, the second distinct instruction block by b, and so on. As a result, reference patterns such as a-a-a..., a-b-a..., a-b-b..., and a-b-c... are generated according to the reference behavior of each instruction block during program execution. Then, based on these reference patterns, a table is constructed that lists, for each possible prefix of the reference patterns, the frequencies of the letters that appear after that prefix. For example, there are three possible reference patterns of length 3 that have a-b as their prefix: a-b-a, a-b-b, and a-b-c.
If we assume that a-b-a, a-b-b, and a-b-c appeared once, twice, and once, respectively, in the reference patterns, the table has an entry for prefix a-b with frequencies of 1, 2, and 1 for a, b, and c, respectively. For each possible prefix of the reference patterns, the clairvoyant prefetch scheme uses this table to prefetch in the direction with the highest frequency (b in this example). To prefetch the instruction block corresponding to a letter in a reference pattern, the clairvoyant prefetch scheme must also have address information associated with each letter; for this purpose, it maintains, for each instruction block, address information for each letter in its reference pattern. To quantitatively analyze the performance of the three instruction prefetch schemes above, we performed simulations based on traces that reflect multiprogramming and operating system execution behavior. The traces are the SPEC SDM (Software Development Multitasking) benchmark traces gathered at BYU. The computer system used to generate the traces was a 20 MHz Intel 486 DX with 16 MB of main memory. The operat-
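
To make the contrast between sequential and threaded prefetching concrete, the following Python sketch counts how often each scheme's single prefetch would match the next block referenced in a block-address trace. It is a minimal illustration under stated assumptions, not the simulator used in this study: there is no cache model, each reference triggers exactly one prefetch, sequential prefetching is modeled as one-block lookahead (block + 1), and the function names `sequential_correct` and `threaded_correct` and the example trace are hypothetical.

```python
from typing import Dict, List


def sequential_correct(trace: List[int]) -> int:
    """Count transitions that one-block lookahead (prefetch block + 1) predicts."""
    return sum(1 for cur, nxt in zip(trace, trace[1:]) if nxt == cur + 1)


def threaded_correct(trace: List[int]) -> int:
    """Count transitions predicted by a per-block thread pointer.

    The thread of a block is the block that followed it the last time it was
    referenced; it is empty until the block has been seen once.
    """
    thread: Dict[int, int] = {}  # block -> block that followed it last time
    correct = 0
    for cur, nxt in zip(trace, trace[1:]):
        if thread.get(cur) == nxt:  # prefetch issued from the thread is useful
            correct += 1
        thread[cur] = nxt  # update the thread with the observed successor
    return correct


if __name__ == "__main__":
    # Hypothetical trace: a loop whose block 2 ends in a taken branch to block 7.
    trace = [0, 1, 2, 7, 8] * 10
    print(sequential_correct(trace), threaded_correct(trace), len(trace) - 1)
    # prints: 30 44 49
```

On this hypothetical loop, the taken branch from block 2 to block 7 and the loop-back from block 8 to block 0 defeat one-block lookahead on every iteration, whereas the thread pointers mispredict only during the first pass, while they are still being learned.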

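The table construction of the clairvoyant scheme can be sketched in a few lines of Python. This is a hedged reconstruction from the description above, not the implementation of [7]. `encode_pattern`, `build_table`, and `predict` are hypothetical names. The input to `encode_pattern` is assumed to be whatever sequence of block addresses makes up a block's reference behavior. Ties between equally frequent letters are broken by first appearance (the documented ordering of `Counter.most_common`), which the description does not specify.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Sequence, Tuple


def encode_pattern(blocks: Sequence[int]) -> Tuple[str, Dict[str, int]]:
    """Rename blocks by order of first appearance (first distinct -> 'a', ...).

    Returns the pattern string and the letter -> block-address mapping that a
    prefetcher needs to turn a predicted letter back into an address.
    """
    letter_of: Dict[int, str] = {}
    for b in blocks:
        if b not in letter_of:
            letter_of[b] = chr(ord("a") + len(letter_of))
    pattern = "".join(letter_of[b] for b in blocks)
    address_of = {letter: b for b, letter in letter_of.items()}
    return pattern, address_of


def build_table(patterns: List[str]) -> Dict[str, Counter]:
    """For every proper prefix of every pattern, count which letter follows it."""
    table: Dict[str, Counter] = defaultdict(Counter)
    for p in patterns:
        for i in range(1, len(p)):
            table[p[:i]][p[i]] += 1
    return table


def predict(table: Dict[str, Counter], prefix: str) -> Optional[str]:
    """Return the letter seen most often after `prefix`, or None if unseen."""
    counts = table.get(prefix)  # .get does not create an entry in a defaultdict
    if not counts:
        return None
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    # Worked example from the text: a-b-a once, a-b-b twice, a-b-c once.
    table = build_table(["aba", "abb", "abb", "abc"])
    print(dict(table["ab"]), predict(table, "ab"))
    # prints: {'a': 1, 'b': 2, 'c': 1} b
    pattern, address_of = encode_pattern([0x40, 0x80, 0x40])
    print(pattern, address_of)
    # prints: aba {'a': 64, 'b': 128}
```

Running it on the worked example reproduces the entry for prefix a-b and selects b. The mapping returned by `encode_pattern` plays the role of the per-block address information for each letter.
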
2 Prefetching Strategies

Recent advances in electronic technology have drastically reduced processor cycle times. In DRAM technology, however, cycle times have improved slowly even though density has grown tremendously. As a result, the disparity between processor and memory cycle times keeps increasing. One possible approach to bridging this disparity is prefetching, which aims to reduce the gap between processor and memory cycle times by fetching, in advance, instructions and data that the processor is likely to request in the near future. To improve overall system performance through prefetching, both accurate prefetch prediction and sufficient prefetch time are required. In particular, an incorrect prefetch prediction incurs prefetch overhead. Most previous studies on prefetching were limited to proposing a particular prefetch scheme and presenting its performance improvement, largely ignoring its negative aspects. However, inaccurate prefetch prediction can degrade overall performance, and a significant portion of the prefetch overhead is due to memory contention between demand and prefetch requests. In this paper, we quantitatively examine the effects of instruction prefetching for a range of conventional bus/memory system configurations. This allows us to accurately show the cross-