机器学习十大算法:AdaBoost

Chapter7

AdaBoost

Zhi-Hua Zhou and Yang Yu

Contents

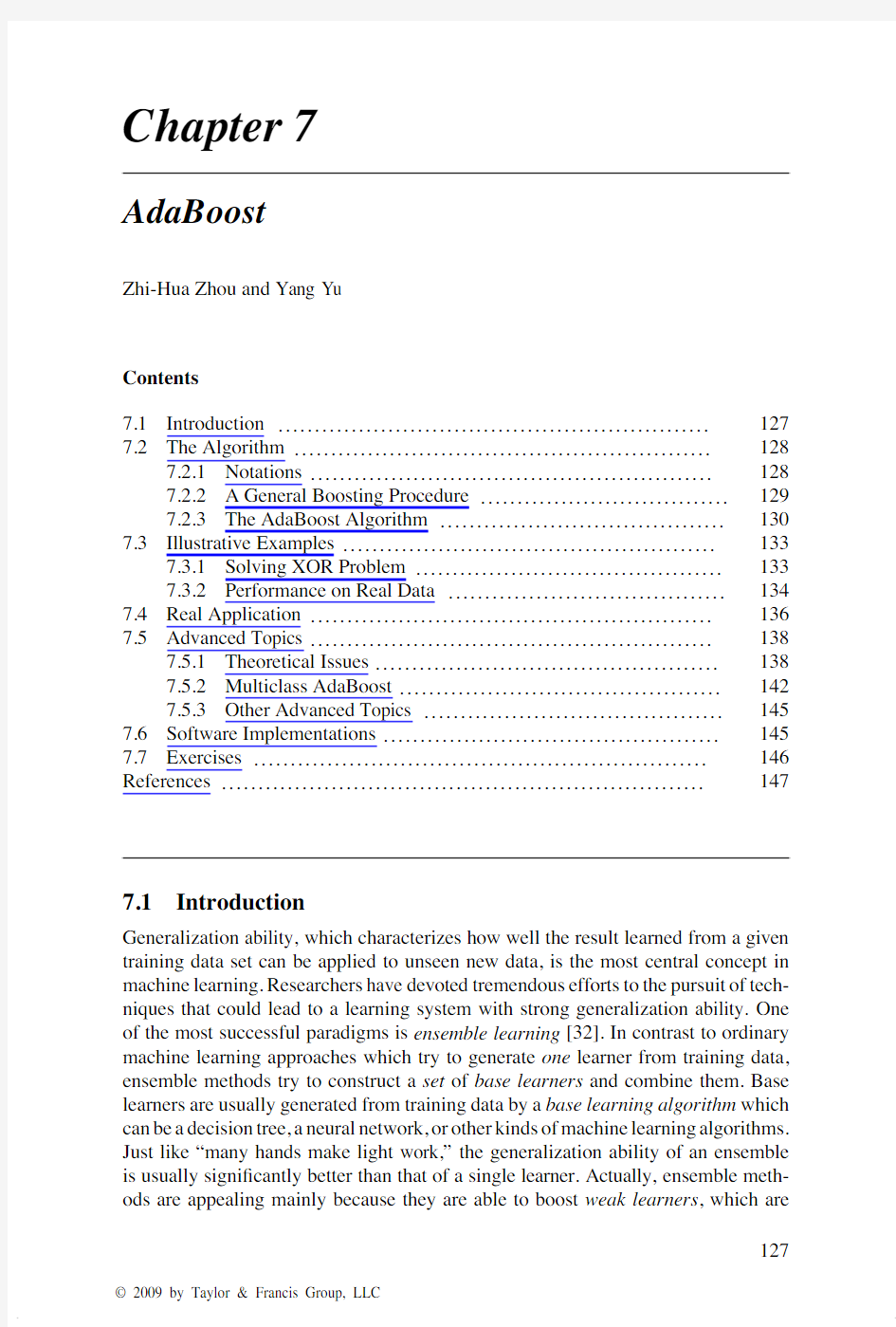

7.1Introduction (127)

7.2The Algorithm (128)

7.2.1Notations (128)

7.2.2A General Boosting Procedure (129)

7.2.3The AdaBoost Algorithm (130)

7.3Illustrative Examples (133)

7.3.1Solving XOR Problem (133)

7.3.2Performance on Real Data (134)

7.4Real Application (136)

7.5Advanced Topics (138)

7.5.1Theoretical Issues (138)

7.5.2Multiclass AdaBoost (142)

7.5.3Other Advanced Topics (145)

7.6Software Implementations (145)

7.7Exercises (146)

References (147)

7.1Introduction

Generalization ability,which characterizes how well the result learned from a given training data set can be applied to unseen new data,is the most central concept in machine learning.Researchers have devoted tremendous efforts to the pursuit of tech-niques that could lead to a learning system with strong generalization ability.One of the most successful paradigms is ensemble learning[32].In contrast to ordinary machine learning approaches which try to generate one learner from training data, ensemble methods try to construct a set of base learners and combine them.Base learners are usually generated from training data by a base learning algorithm which can be a decision tree,a neural network,or other kinds of machine learning algorithms. Just like“many hands make light work,”the generalization ability of an ensemble is usually signi?cantly better than that of a single learner.Actually,ensemble meth-ods are appealing mainly because they are able to boost weak learners,which are

127

128AdaBoost

slightly better than random guess,to strong learners,which can make very accurate predictions.So,“base learners”are also referred as“weak learners.”

AdaBoost[9,10]is one of the most in?uential ensemble methods.It took birth from the answer to an interesting question posed by Kearns and Valiant in1988.That is,whether two complexity classes,weakly learnable and strongly learnable prob-lems,are equal.If the answer to the question is positive,a weak learner that performs just slightly better than random guess can be“boosted”into an arbitrarily accurate strong learner.Obviously,such a question is of great importance to machine learning. Schapire[21]found that the answer to the question is“yes,”and gave a proof by construction,which is the?rst boosting algorithm.An important practical de?ciency of this algorithm is the requirement that the error bound of the base learners be known ahead of time,which is usually unknown in practice.Freund and Schapire[9]then pro-posed an adaptive boosting algorithm,named AdaBoost,which does not require those unavailable information.It is evident that AdaBoost was born with theoretical signif-icance,which has given rise to abundant research on theoretical aspects of ensemble methods in communities of machine learning and statistics.It is worth mentioning that for their AdaBoost paper[9],Schapire and Freund won the Godel Prize,which is one of the most prestigious awards in theoretical computer science,in the year2003. AdaBoost and its variants have been applied to diverse domains with great success, owing to their solid theoretical foundation,accurate prediction,and great simplicity (Schapire said it needs only“just10lines of code”).For example,Viola and Jones[27] combined AdaBoost with a cascade process for face detection.They regarded rectan-gular features as weak learners,and by using AdaBoost to weight the weak learners, they got very intuitive features for face detection.In order to get high accuracy as well as high ef?ciency,they used a cascade process(which is beyond the scope of this chap-ter).As a result,they reported a very strong face detector:On a466MHz machine,face detection on a384×288image costs only0.067second,which is15times faster than state-of-the-art face detectors at that time but with comparable accuracy.This face detector has been recognized as one of the most exciting breakthroughs in computer vision(in particular,face detection)during the past decade.It is not strange that“boost-ing”has become a buzzword in computer vision and many other application areas. In the rest of this chapter,we will introduce the algorithm and implementations,and give some illustrations on how the algorithm works.For readers who are eager to know more,we will introduce some theoretical results and extensions as advanced topics.

7.2The Algorithm

7.2.1Notations

We?rst introduce some notations that will be used in the rest of the chapter.Let X denote the instance space,or in other words,feature space.Let Y denote the set of labels that express the underlying concepts which are to be learned.For example,we

7.2The Algorithm129 let Y={?1,+1}for binary classi?cation.A training set D consists of m instances whose associated labels are observed,i.e.,D={(x i,y i)}(i∈{1,...,m}),while the label of a test instance is unknown and thus to be predicted.We assume both training and test instances are drawn independently and identically from an underlying distribution D.

After training on a training data set D,a learning algorithm L will output a hypoth-esis h,which is a mapping from X to Y,or called as a classi?er.The learning process can be regarded as picking the best hypothesis from a hypothesis space,where the word“best”refers to a loss function.For classi?cation,the loss function can naturally be0/1-loss,

loss0/1(h|x)=I[h(x)=y]

where I[·]is the indication function which outputs1if the inner expression is true and0otherwise,which means that one error is counted if an instance is wrongly classi?ed.In this chapter0/1-loss is used by default,but it is noteworthy that other kinds of loss functions can also be used in boosting.

7.2.2A General Boosting Procedure

Boosting is actually a family of algorithms,among which the AdaBoost algorithm is the most in?uential one.So,it may be easier by starting from a general boosting procedure.

Suppose we are dealing with a binary classi?cation problem,that is,we are trying to classify instances as positive and https://www.360docs.net/doc/9712500810.html,ually we assume that there exists an unknown target concept,which correctly assigns“positive”labels to instances belonging to the concept and“negative”labels to others.This unknown target concept is actually what we want to learn.We call this target concept ground-truth.For a binary classi?cation problem,a classi?er working by random guess will have50%0/1-loss. Suppose we are unlucky and only have a weak classi?er at hand,which is only slightly better than random guess on the underlying instance distribution D,say,it has49%0/1-loss.Let’s denote this weak classi?er as h1.It is obvious that h1is not what we want,and we will try to improve it.A natural idea is to correct the mistakes made by h1.

We can try to derive a new distribution D from D,which makes the mistakes of h1more evident,for example,it focuses more on the instances wrongly classi?ed by h1(we will explain how to generate D in the next section).We can train a classi?er h2from D .Again,suppose we are unlucky and h2is also a weak classi?er.Since D was derived from D,if D satis?es some condition,h2will be able to achieve a better performance than h1on some places in D where h1does not work well, without scarifying the places where h1performs well.Thus,by combining h1and h2in an appropriate way(we will explain how to combine them in the next section), the combined classi?er will be able to achieve less loss than that achieved by h1.By repeating the above process,we can expect to get a combined classi?er which has very small(ideally,zero)0/1-loss on D.

130AdaBoost

Input: Instance distribution D ; Base learning algorithm L ;

Number of learning rounds T .

Process:1. D 1 = D . % Initialize distribution

2. for t = 1, ··· ,T :

3. h t = L (D t ); % Train a weak learner from distribution D t

4. ?t = Pr x ~D t ,y I [h t (x )≠ y ]; % Measure the error of h t

5. D t +1 = AdjustDistribution (D t , ?t )

6. end

Output: H (x ) = CombineOutputs ({h t (x )})

Figure 7.1A general boosting procedure.

Brie?y,boosting works by training a set of classi?ers sequentially and combining them for prediction,where the later classi?ers focus more on the mistakes of the earlier classi?ers.Figure 7.1summarizes the general boosting procedure.

7.2.3The AdaBoost Algorithm

Figure 7.1is not a real algorithm since there are some undecided parts such as Ad just Distribution and CombineOutputs .The AdaBoost algorithm can be viewed as an instantiation of the general boosting procedure,which is summarized in Figure 7.2.Input: Data set D = {(x 1, y 1), (x 2, y 2), . . . , (x m , y m )};

Base learning algorithm L ;

Number of learning rounds T .

Process:1. D 1 (i ) = 1/m . % Initialize the weight distribution

2. for t = 1, ··· ,T :

3. h t = L (D , D t ); % Train a learner h t from D using distribution D t

4. ?t = Pr x ~D t ,y I [h t (x )≠ y ]; % Measure the error of h t

5. if ?t > 0.5 then break

6. αt = ? ln ( ); % Determine the weight of h t

7. D t +1 (i ) =

8. end

Output: H (x ) = sign (Σt =1αt h t (x ))

× {

exp(–αt ) if h t (x i ) = y i exp(αt ) if h t (x i ) ≠ y i % Update the distribution, where % Z t is a normalization factor which

% enables D t +1 to be distribution T 1– ?t ?t D t (i )Z t D t (i )exp(–αt y i h t (x i ))Z t Figure 7.2The AdaBoost algorithm.

7.2The Algorithm 131

Now we explain the details.1AdaBoost generates a sequence of hypotheses and combines them with weights,which can be regarded as an additive weighted combi-nation in the form of H (x )=

T t =1

αt h t (x )From this view,AdaBoost actually solves two problems,that is,how to generate the hypotheses h t ’s and how to determine the proper weights αt ’s.

In order to have a highly ef?cient error reduction process,we try to minimize an exponential loss

loss exp (h )=E x ~D ,y [e ?yh (x )]

where yh (x )is called as the classi?cation margin of the hypothesis.

Let’s consider one round in the boosting process.Suppose a set of hypotheses as well as their weights have already been obtained,and let H denote the combined hypothesis.Now,one more hypothesis h will be generated and is to be combined with H to form H +αh .The loss after the combination will be

loss exp (H +αh )=E x ~D ,y [e ?y (H (x )+αh (x ))]

The loss can be decomposed to each instance,which is called pointwise loss,as

loss exp (H +αh |x )=E y [e ?y (H (x )+αh (x ))|x ]

Since y and h (x )must be +1or ?1,we can expand the expectation as loss exp (H +αh |x )=e ?y H (x ) e ?αP (y =h (x )|x )+e αP (y =h (x )|x ) Suppose we have already generated h ,and thus the weight αthat minimizes the loss can be found when the derivative of the loss equals zero,that is,

?loss exp (H +αh |x )?α=e ?y H (x ) ?e ?αP (y =h (x )|x )+e αP (y =h (x )|x ) =0

and the solution is

α=12ln P (y =h (x )|x )P (y =h (x )|x )=12ln 1?P (y =h (x )|x )P (y =h (x )|x )

By taking an expectation over x ,that is,solving

?loss exp (H +αh )

?α=0,and denoting

=E x ~D [y =h (x )],we get

α=12ln 1? which is the way of determining αt in AdaBoost.

1Here we explain the AdaBoost algorithm from the view of [11]since it is easier to understand than the original explanation in [9].

132AdaBoost

Now let’s consider how to generate h.Given a base learning algorithm,AdaBoost invokes it to produce a hypothesis from a particular instance distribution.So,we only need to consider what hypothesis is desired for the next round,and then generate an instance distribution to achieve this hypothesis.

We can expand the pointwise loss to second order about h(x)=0,when?xing α=1,

loss exp(H+h|x)≈E y[e?y H(x)(1?yh(x)+y2h(x)2/2)|x]

=E y[e?y H(x)(1?yh(x)+1/2)|x]

since y2=1and h(x)2=1.

Then a perfect hypothesis is

h?(x)=arg min

h loss exp(H+h|x)=arg max

h

E y[e?y H(x)yh(x)|x]

=arg max

h

e?H(x)P(y=1|x)·1·h(x)+e H(x)P(y=?1|x)·(?1)·h(x) Note that e?y H(x)is a constant in terms of h(x).By normalizing the expectation as

h?(x)=arg max

h e?H(x)P(y=1|x)·1·h(x)+e H(x)P(y=?1|x)·(?1)·h(x)

e P(y=1|x)+e P(y=?1|x)

we can rewrite the expectation using a new term w(x,y),which is drawn from e?y H(x)P(y|x),as

h?(x)=arg max

h

E w(x,y)~e?y H(x)P(y|x)[yh(x)|x]

Since h?(x)must be+1or?1,the solution to the optimization is that h?(x)holds the same sign with y|x,that is,

h?(x)=E w(x,y)~e?y H(x)P(y|x)[y|x]

=P w(x,y)~e?y H(x)P(y|x)(y=1|x)?P w(x,y)~e?y H(x)P(y|x)(y=?1|x)

As can be seen,h?simply performs the optimal classi?cation of x under the distri-bution e?y H(x)P(y|x).Therefore,e?y H(x)P(y|x)is the desired distribution for a hypothesis minimizing0/1-loss.

So,when the hypothesis h(x)has been learned andα=1

2ln1?

has been deter-

mined in the current round,the distribution for the next round should be

D t+1(x)=e?y(H(x)+αh(x))P(y|x)=e?y H(x)P(y|x)·e?αyh(x)

=D t(x)·e?αyh(x)

which is the way of updating instance distribution in AdaBoost.

But,why optimizing the exponential loss works for minimizing the0/1-loss? Actually,we can see that

h?(x)=arg min

h E x~D,y[e?yh(x)|x]=1

2

ln

P(y=1|x)

P(y=?1|x)

7.3Illustrative Examples133 and therefore we have

sign(h?(x))=arg max

y

P(y|x)

which implies that the optimal solution to the exponential loss achieves the minimum Bayesian error for the classi?cation problem.Moreover,we can see that the function h?which minimizes the exponential loss is the logistic regression model up to a factor 2.So,by ignoring the factor1/2,AdaBoost can also be viewed as?tting an additive logistic regression model.

It is noteworthy that the data distribution is not known in practice,and the AdaBoost algorithm works on a given training set with?nite training examples.Therefore,all the expectations in the above derivations are taken on the training examples,and the weights are also imposed on training examples.For base learning algorithms that cannot handle weighted training examples,a resampling mechanism,which samples training examples according to desired weights,can be used instead.

7.3Illustrative Examples

In this section,we demonstrate how the AdaBoost algorithm works,from an illustra-tion on a toy problem to real data sets.

7.3.1Solving XOR Problem

We consider an arti?cial data set in a two-dimensional space,plotted in Figure7.3(a). There are only four instances,that is,

?????????(x1=(0,+1),y1=+1) (x2=(0,?1),y2=+1) (x3=(+1,0),y3=?1) (x4=(?1,0),y4=?1)

?

???

?

???

?

This is the XOR problem.The two classes cannot be separated by a linear classi?er which corresponds to a line on the?gure.

Suppose we have a base learning algorithm which tries to select the best of the fol-lowing eight functions.Note that none of them is perfect.For equally good functions, the base learning algorithm will pick one function from them randomly.

h1(x)=

+1,if(x1>?0.5)

?1,otherwise h2(x)=

?1,if(x1>?0.5)

+1,otherwise

h3(x)=

+1,if(x1>+0.5)

?1,otherwise h4(x)=

?1,if(x1>+0.5)

+1,otherwise

134

AdaBoost

(a) The XOR data(b) 1st round(c) 2nd round(d) 3rd round Figure7.3AdaBoost on the XOR problem.

h5(x)=

+1,if(x2>?0.5)

?1,otherwise h6(x)=

?1,if(x2>?0.5)

+1,otherwise

h7(x)=

+1,if(x2>+0.5)

?1,otherwise h8(x)=

?1,if(x2>+0.5)

+1,otherwise

where x1and x2are the values of x at the?rst and second dimension,respectively. Now we track how AdaBoost works:

1.The?rst step is to invoke the base learning algorithm on the original data.h2,

h3,h5,and h8all have0.25classi?cation errors.Suppose h2is picked as the?rst base learner.One instance,x1,is wrongly classi?ed,so the error is1/4=0.25.

The weight of h2is0.5ln3≈0.55.Figure7.3(b)visualizes the classi?cation, where the shadowed area is classi?ed as negative(?1)and the weights of the classi?cation,0.55and?0.55,are displayed.

2.The weight of x1is increased and the base learning algorithm is invoked again.

This time h3,h5,and h8have equal errors.Suppose h3is picked,of which the weight is0.80.Figure7.3(c)shows the combined classi?cation of h2and h3with their weights,where different gray levels are used for distinguishing negative areas according to classi?cation weights.

3.The weight of x3is increased,and this time only h5and h8equally have the

lowest errors.Suppose h5is picked,of which the weight is1.10.Figure7.3(d) shows the combined classi?cation of h2,h3,and h8.If we look at the sign of classi?cation weights in each area in Figure7.3(d),all the instances are correctly classi?ed.Thus,by combining the imperfect linear classi?ers,AdaBoost has produced a nonlinear classi?er which has zero error.

7.3.2Performance on Real Data

We evaluate the AdaBoost algorithm on56data sets from the UCI Machine Learning Repository,2which covers a broad range of real-world tasks.We use the Weka(will be introduced in Section7.6)implementation of AdaBoost.M1using reweighting with https://www.360docs.net/doc/9712500810.html,/~mlearn/MLRepository.html

7.3Illustrative Examples 135AdaBoost with decision tree (unpruned)AdaBoost with decision tree (pruned)

AdaBoost with decision stump

D e c i s i o n s t u m p D e c i s i o n t r e e (p r u n e d )1.00

0.800.600.40

0.20

0.00 1.000.800.600.400.200.000.000.200.400.600.80 1.00

0.000.200.400.600.80 1.00D e c i s i o n t r e e (u n p r u n e d )1.00

0.800.600.400.20

0.000.000.200.400.600.80 1.00

Figure 7.4Comparison of predictive errors of AdaBoost against decision stump,pruned,and unpruned single decision trees on 56UCI data sets.

50base learners.Almost all kinds of learning algorithms can be taken as base learning algorithms,such as decision trees,neural networks,and so on.Here,we have tried three base learning algorithms,including decision stump,pruned,and unpruned J4.8decision trees (Weka implementation of C4.5).

We plot the comparison results in Figure 7.4,where each circle represents a data set and locates according to the predictive errors of the two compared algorithms.In each plot of Figure 7.4,the diagonal line indicates where the two compared algorithms have identical errors.It can be observed that AdaBoost often outperforms its base learning algorithm,with a few exceptions on which it degenerates the performance.The famous bias-variance decomposition [12]has been employed to empirically study why AdaBoost achieves excellent performance [2,3,34].This powerful tool breaks the expected error of a learning approach into the sum of three nonnegative quantities,that is,the intrinsic noise,the bias,and the variance.The bias measures how closely the average estimate of the learning approach is able to approximate the target,and the variance measures how much the estimate of the learning approach ?uctuates for the different training sets of the same size.It has been observed [2,3,34]that AdaBoost primarily reduces the bias but it is also able to reduce the variance.

136AdaBoost

Figure7.5Four feature masks to be applied to each rectangle.

7.4Real Application

Viola and Jones[27]combined AdaBoost with a cascade process for face detection. As the result,they reported that on a466MHz machine,face detection on a384×288 image costs only0.067seconds,which is almost15times faster than state-of-the-art face detectors at that time but with comparable accuracy.This face detector has been recognized as one of the most exciting breakthroughs in computer vision(in particular,face detection)during the past decade.In this section,we brie?y introduce how AdaBoost works in the Viola-Jones face detector.

Here the task is to locate all possible human faces in a given image.An image is ?rst divided into subimages,say24×24squares.Each subimage is then represented by a feature vector.To make the computational process ef?cient,very simple features are used.All possible rectangles in a subimage are examined.On every rectangle, four features are extracted using the masks shown in Figure7.5.With each mask, the sum of pixels’gray level in white areas is subtracted by the sum of those in dark areas,which is regarded as a feature.Thus,by a24×24splitting,there are more than 1million features,but each of the features can be calculated very fast.

Each feature is regarded as a weak learner,that is,

h i,p,θ(x)=I[px i≤pθ](p∈{+1,?1})

where x i is the value of x at the i-th feature.

The base learning algorithm tries to?nd the best weak classi?er h i?,p?,θ?that minimizes the classi?cation error,that is,

E(x,y)I[h i,p,θ(x)=y]

(i?,p?,θ?)=arg min

i,p,θ

Face rectangles are regarded as positive examples,as shown in Figure7.6,while rectangles that do not contain any face are regarded as negative examples.Then,the AdaBoost process is applied and it will return a few weak learners,each corresponds to one of the over1million features.Actually,the AdaBoost process can be regarded as a feature selection tool here.

Figure7.7shows the?rst two selected features and their position relative to a human face.It is evident that these two features are intuitive,where the?rst feature measures how the intensity of the eye areas differ from that of the lower areas,while

7.4Real Application137

Figure7.6Positive training examples[27].

the second feature measures how the intensity of the two eye areas differ from the area between two eyes.

Using the selected features in order,an extremely imbalanced decision tree is built, which is called cascade of classi?ers,as illustrated in Figure7.8.

The parameterθis adjusted in the cascade such that,at each tree node,branching into“not a face”means that the image is really not a face.In other words,the false negative rate is minimized.This design owes to the fact that a nonface image is easier to be recognized,and it is possible to use a few features to?lter out a lot of candidate image rectangles,which endows the high ef?ciency.It was reported[27]that10 features per subimage are examined in average.Some test results of the Viola-Jones face detector are shown in Figure7.9.

138

AdaBoost

7.5Advanced Topics

7.5.1Theoretical Issues

Computational learning theory studies some fundamental theoretical issues of machine learning.First introduced by Valiant in1984[25],the Probably Approx-imately Correct(PAC)framework models learning algorithms in a distribution free manner.Roughly speaking,for binary classi?cation,a problem is learnable or strongly

time

learnable if there exists an algorithm that outputs a hypothesis h in polynomial

7.5Advanced Topics139

Figure7.9Outputs of the Viola-Jones face detector on a number of test images[27]. such that for all0<δ, ≤0.5,

P

E x~D,y[I[h(x)=y]]<

≥1?δ

and a problem is weakly learnable if the above holds for all0<δ≤0.5but only when is slightly smaller than0.5(or in other words,h is only slightly better than random guess).

In1988,Kearns and Valiant[15]posed an interesting question,that is,whether the strongly learnable problem class equals the weakly learnable problem class.This question is of fundamental importance,since if the answer is“yes,”any weak learner is potentially able to be boosted to a strong learner.In1989,Schapire[21]proved that the answer is really“yes,”and the proof he gave is a construction,which is the?rst

140AdaBoost

boosting algorithm.One year later,Freund[7]developed a more ef?cient algorithm. Both algorithms,however,suffered from the practical de?ciency that the error bound of the base learners need to be known ahead of time,which is usually unknown in https://www.360docs.net/doc/9712500810.html,ter,in1995,Freund and Schapire[9]developed the AdaBoost algorithm, which is effective and ef?cient in practice.

Freund and Schapire[9]proved that,if the base learners of AdaBoost have errors 1, 2,···, T,the error of the?nal combined learner, ,is upper bounded as

=E x~D,y I[H(x)=y]≤2T

T

t=1

t t≤e?2

T

t=1

γ2t

whereγt=0.5? t.It can be seen that AdaBoost reduces the error exponentially fast.Also,it can be derived that,to achieve an error less than ,the round T is upper

bounded as

T≤

1

2γ

ln

1

where it is assumed thatγ=γ1=γ2=···=γT.

In practice,however,all the operations of AdaBoost can only be carried out on training data D,that is,

D=E x~D,y I[H(x)=y]

and thus the errors are training errors,while the generalization error,that is,the error over instance distribution D

D=E x~D,y I[H(x)=y]

is of more interest.

The initial analysis[9]showed that the generalization error of AdaBoost is upper

bounded as

D≤ D+?O

dT

m

with probability at least1?δ,where d is the VC-dimension of base learners,m is the number of training instances,and?O(·)is used instead of O(·)to hide logarithmic terms and constant factors.

The above bound suggests that in order to achieve a good generalization ability, it is necessary to constrain the complexity of base learners as well as the number of learning rounds;otherwise AdaBoost will over?t.However,empirical studies show that AdaBoost often does not over?t,that is,its test error often tends to decrease even after the training error reaches zero,even after a large number of rounds,such as 1000.

For example,Schapire et al.[22]plotted the performance of AdaBoost on the letter data set from UCI Machine Learning Repository,as shown in Figure7.10(left), where the higher curve is test error while the lower one is training error.It can be observed that AdaBoost achieves zero training error in less than10rounds but the generalization error keeps on reducing.This phenomenon seems to counter Occam’s

7.5Advanced Topics 141

e r r o r r a t e r a t i o o

f t e s t s e t t θ

201510

5

0 1.00.5101001000-1-0.50.51

Figure 7.10Training and test error (left)and margin distribution (right)of AdaBoost on the letter data set [22].

Razor,that is,nothing more than necessary should be done,which is one of the basic principles in machine learning.

Many researchers have studied this phenomena,and several theoretical explana-tions have been given,for example,[11].Schapire et al.[22]introduced the margin -based explanation.They argued that AdaBoost is able to increase the margin even after the training error reaches zero,and thus it does not over?t even after a large number of rounds.The classi?cation margin of h on x is de?ned as yh (x ),and that of H (x )= T t =1αt h t (x )is de?ned as

y H (x )= T

t =1αt yh t (x ) T

t =1αt

Figure 7.10(right)plots the distribution of y H (x )≤θfor different values of θ.It was proved in [22]that the generalization error is upper bounded as

D ≤P x ~D ,y (y H (x )≤θ)+?O d m θ2+ln 1δ ≤2T

T t =1 1?θt (1? )1+θ+?O m θ2+ln δ with probability at least 1?δ.This bound qualitatively explains that when other variables in the bound are ?xed,the larger the margin,the smaller the generalization error.

However,this margin-based explanation was challenged by Brieman [4].Using minimum margin ,

=min x ∈D

y H (x )Breiman proved a generalization error bound is tighter than the above one using minimum margin.Motivated by the tighter bound,the arc-gv algorithm,which is a variant of AdaBoost,was proposed to maximize the minimum margin directly,by

142AdaBoost updatingαt according to

αt=1

2ln

1+γt

1?γt

?1

2

ln

1+ t

1? t

Interestingly,the minimum margin of arc-gv is uniformly better than that of AdaBoost, but the test error of arc-gv increases drastically on all tested data sets[4].Thus,the margin theory for AdaBoost was almost sentenced to death.

In2006,Reyzin and Schapire[20]reported an interesting?nding.It is well-known that the bound of the generalization error is associated with margin,the number of rounds,and the complexity of base learners.When comparing arc-gv with AdaBoost, Breiman[4]tried to control the complexity of base learners by using decision trees with the same number of leaves,but Reyzin and Schapire found that these are trees with very different shapes.The trees generated by arc-gv tend to have larger depth, while those generated by AdaBoost tend to have larger width.Figure7.11(top) shows the difference of depth of the trees generated by the two algorithms on the breast cancer data set from UCI Machine Learning Repository.Although the trees have the same number of leaves,it seems that a deeper tree makes more attribute tests than a wider tree,and therefore they are unlikely to have equal complexity. So,Reyzin and Schapire repeated Breiman’s experiments by using decision stump, which has only one leaf and therefore is with a?xed complexity,and found that the margin distribution of AdaBoost is actually better than that of arc-gv,as illustrated in Figure7.11(bottom).

Recently,Wang et al.[28]introduced equilibrium margin and proved a new bound tighter than that obtained by using minimum margin,which suggests that the mini-mum margin may not be crucial for the generalization error of AdaBoost.It will be interesting to develop an algorithm that maximizes equilibrium margin directly,and to see whether the test error of such an algorithm is smaller than that of AdaBoost, which remains an open problem.

7.5.2Multiclass AdaBoost

In the previous sections we focused on AdaBoost for binary classi?cation,that is, Y={+1,?1}.In many classi?cation tasks,however,an instance belongs to one of many instead of two classes.For example,a handwritten number belongs to1of10 classes,that is,Y={0,...,9}.There is more than one way to deal with a multiclass classi?cation problem.

AdaBoost.M1[9]is a very direct extension,which is as same as the algorithm shown in Figure7.2,except that now the base learners are multiclass learners instead of binary classi?ers.This algorithm could not use binary base classi?ers,and requires every base learner have less than1/2multiclass0/1-loss,which is an overstrong constraint. SAMME[35]is an improvement over AdaBoost.M1,which replaces Line5of AdaBoost.M1in Figure7.2by

αt=1

2ln

1? t

t

+ln(|Y|?1)

7.5Advanced Topics 143

C u m u l a t i v e a v e r a g e t r e e d e p t h C u m u l a t i v e f r e q u e n c y 109.5

98.58

7.5

7500

0.7

0.60.50.40.3

0.20.10-0.1450400350300250

20015010050Round

(a)“AdaBoost_bc”

“Arc-gv_bc”1.21

0.80.6

0.4

0.2

0“AdaBoost_bc”

“Arc-gv_bc”

Margin

(b)Figure 7.11Tree depth (top)and margin distribution (bottom)of AdaBoost against arc-gv on the breast cancer data set [20].

This modi?cation is derived from the minimization of multiclass exponential loss.It was proved that,similar to the case of binary classi?cation,optimizing the multiclass exponential loss approaches to the optimal Bayesian error,that is,

sign [h ?(x )]=arg max y ∈Y

P (y |x )

where h ?is the optimal solution to the multiclass exponential loss.

144AdaBoost

A popular solution to multiclass classi?cation problem is to decompose the task into multiple binary classi?cation problems.Direct and popular decompositions include one-vs-rest and one-vs-one .One-vs-rest decomposes a multiclass task of |Y |classes into |Y |binary classi?cation tasks,where the i -th task is to classify whether an instance belongs to the i -th class or not.One-vs-one decomposes a multiclass task of |Y |classes into |Y |(|Y |?1)binary classi?cation tasks,where each task is to classify whether an instance belongs to,say,the i -th class or the j -th class.

AdaBoost.MH [23]follows the one-vs-rest approach.After training |Y |number of (binary)AdaBoost classi?ers,the real-value output H (x )= T t =1αt h t (x )of each AdaBoost is used instead of the crisp classi?cation to ?nd the most probable class,that is,

H (x )=arg max y ∈Y

H y (x )

where H y is the AdaBoost classi?er that classi?es the y -th class from the rest.

AdaBoost.M2[9]follows the one-vs-one approach,which minimizes a pseudo-loss.This algorithm is later generalized as AdaBoost.MR [23]which minimizes a ranking loss motivated by the fact that the highest ranked class is more likely to be the correct class.Binary classi?ers obtained by one-vs-one decomposition can also be aggregated by voting or pairwise coupling [13].

Error correcting output codes (ECOCs)[6]can also be used to decompose a multiclass classi?cation problem into a series of binary classi?cation problems.For example,Figure 7.12a shows output codes for four classes using ?ve classi?ers.Each classi?er is trained to discriminate the +1and ?1classes in the corresponding column.For a test instance,by concatenating the classi?cations output by the ?ve classi?ers,a code vector of predictions is obtained.This vector will be compared with the code vector of the classes (every row in Figure 7.12(a)using Hamming distance,and the class with the shortest distance is deemed the ?nal prediction.According to infor-mation theory,when the binary classi?ers are independent,the larger the minimum Hamming distance within the code vectors,the smaller the 0/https://www.360docs.net/doc/9712500810.html,ter,a uni?ed framework was proposed for multiclass decomposition approaches [1].Figure 7.12(b)shows the output codes for one-vs-rest decomposition and Figure 7.12(c)shows the output codes for one-vs-one decomposition,where zeros mean that the classi?ers should ignore the instances of those classes.

↓↓↓↓↓

y 1 = +1 ?1 +1 ?1 +1

y 2 = +1 +1 ?1 ?1 ?1

y 3 = ?1 ?1 +1 ?1 ?1

y 4 = ?1 +1 ?1 +1 +1

(a) Original code (b) One-vs-rest code (c) One-vs-one code

H 1H 2H 3H 4H 5

↓↓↓↓y 1 = +1 ?1 ?1 ?1y 2 = ?1 +1 ?1 ?1y 3 = ?1 ?1 +1 ?1y 4 = ?1 ?1 ?1 +1H 1H 2H 3H 4↓↓↓↓↓y 1 = +1 +1 +1 0 0 0y 2 = –1 0 0 +1 +1 0y 3 = 0 ?1 0 ?1 0 +1y 4 = 0 0 ?1 0 ?1 ?1H 1H 2H 3H 4H 5↓H 6Figure 7.12ECOC output codes.

7.6Software Implementations145 7.5.3Other Advanced Topics

Comprehensibility,that is,understandability of the learned model to user,is desired in many real applications.Similar to other ensemble methods,a serious de?ciency of AdaBoost and its variants is the lack of comprehensibility.Even when the base learners are comprehensible models such as small decision trees,the combination of them will lead to a black-box model.Improving the comprehensibility of ensemble methods is an important yet largely understudied direction[33].

In most ensemble methods,all the generated base learners are used in the ensemble. However,it has been proved that stronger ensembles with smaller sizes can be ob-tained through selective ensemble,that is,ensembling some instead of all the available base learners[34].This?nding is different from previous results which suggest that ensemble pruning may sacri?ce the generalization ability[17,24],and therefore pro-vides support for better selective ensemble or ensemble pruning methods[18,31]. In many applications,training examples of one class are far more than other classes. Learning algorithms that do not consider class imbalance tend to be overwhelmed by the majority class;however,the primary interest is often on the minority class.Many variants of AdaBoost have been developed for class-imbalance learning[5,14,19,26]. Moreover,a recent study[16]suggests that the performance of AdaBoost could be used as a clue to judge whether a task suffers from class imbalance or not,based on which new powerful algorithms may be designed.

As mentioned before,in addition to the0/1-loss,boosting can also work with other kinds of loss functions.For example,by considering the ranking loss,RankBoost[8] and AdaRank[30]have been developed for information retrieval tasks.

7.6Software Implementations

As an off-the-shelf machine learning technique,AdaBoost and its variants have easily accessible codes in Java,M ATLAB ,R,and C++.

Java implementations can be found in Weka,3one of the most famous open-source packages for machine learning and data mining.Weka includes AdaBoost.M1al-gorithm[9],which provides options to choose the base learning algorithms,set the number of base learners,and switch between reweighting and resampling mech-anisms.Weka also includes other boosting algorithms,such as LogitBoost[11], MultiBoosting[29],and so on.

M ATLAB implementation can be found in Spider.4R implementation can be found in R-Project.5C++implementation can be found in Sourceforge.6There are also many other implementations that can be found on the Internet.

3https://www.360docs.net/doc/9712500810.html,/ml/weka/.

4http://www.kyb.mpg.de/bs/people/spider/.

5https://www.360docs.net/doc/9712500810.html,/web/packages/.

6https://www.360docs.net/doc/9712500810.html,/projects/multiboost.

146AdaBoost

7.7Exercises

1.What is the basic idea of Boosting?

2.In Figure7.2,why should it break when t≥0.5?

3.Given a training set

??????

?????(x1=(+1,0),y1=+1) (x2=(0,+1),y2=+1) (x3=(?1,0),y3=+1) (x4=(0,?1),y4=+1) (x5=(0,0),y5=?1)

?

???

??

???

??

is there any linear classi?er that can reach zero training error?Why/why not?

4.Given the above training set,show that AdaBoost can reach zero training error

by using?ve linear base classi?ers from the following pool.

h1(x)=2I[x1>0.5]?1h2(x)=2I[x1<0.5]?1

h3(x)=2I[x1>?0.5]?1h4(x)=2I[x1

h5(x)=2I[x2>0.5]?1h6(x)=2I[x2<0.5]?1

h7(x)=2I[x2>?0.5]?1h8(x)=2I[x2

h9(x)=+1h10(x)=?1

5.In the above exercise,will AdaBoost reach nonzero training error for any

T≥5?T is the number of base classi?ers.

6.The nearest neighbor classi?er classi?es an instance by assigning it with the

label of its nearest training example.Can AdaBoost boost the performance of such classi?er?Why/why not?

7.Plot the following functions in a graph within range z∈[?2,2],and observe

their difference.

l1(z)=

0,z≥0

1,z<0

l2(z)=

0,z≥1

1?z,z<1

l3(z)=(z?1)2l4(z)=e?z

Note that,when z=y f(x),l1,l2,l3,and l4are functions of0/1-loss,hinge loss (used by support vector machines),square loss(used by least square regression), and exponential loss(the loss function used by AdaBoost),respectively.

8.Show that the l2,l3,and l4functions in the above exercise are all convex (l is convex if?z1,z2:l(z1+z2)≥(l(z1)+l(z2))).Considering a binary classi?cation task z=y f(x)where y={?1,+1},?nd that function to which the optimal solution is the Bayesian optimal solution.

9.Can AdaBoost be extended to solve regression problems?If your answer is yes,

how?If your answer is no,why?

数学建模笔记

数学模型按照不同的分类标准有许多种类: 1。按照模型的数学方法分,有几何模型,图论模型,微分方程模型.概率模型,最优控制模型,规划论模型,马氏链模型. 2。按模型的特征分,有静态模型和动态模型,确定性模型和随机模型,离散模型和连续性模型,线性模型和非线性模型. 3.按模型的应用领域分,有人口模型,交通模型,经济模型,生态模型,资源模型。环境模型。 4.按建模的目的分,有预测模型,优化模型,决策模型,控制模型等。 5.按对模型结构的了解程度分,有白箱模型,灰箱模型,黑箱模型。 数学建模的十大算法: 1.蒙特卡洛算法(该算法又称随机性模拟算法,是通过计算机仿真来解决问题的算法,同时可以通过模拟可以来检验自己模型的正确性,比较好用的算法。) 2.数据拟合、参数估计、插值等数据处理算法(比赛中通常会遇到大量的数据需要处理,而处理数据的关键就在于这些算法,通常使用matlab作为工具。) 3.线性规划、整数规划、多元规划、二次规划等规划类问题(建模竞赛大多数问题属于最优化问题,很多时候这些问题可以用数学规划算法来描述,通常使用lingo、lingdo软件实现) 4.图论算法(这类算法可以分为很多种,包括最短路、网络流、二分图等算法,涉及到图论的问题可以用这些方法解决,需要认真准备。) 5.动态规划、回溯搜索、分治算法、分支定界等计算机算法(这些算法是算法设计中比较常用的方法,很多场合可以用到竞赛中) 6.最优化理论的三大非经典算法:模拟退火法、神经网络、遗传算法(这些问题时用来解决一些较困难的最优化问题的算法,对于有些问题非常有帮助,但是算法的实现比较困难,需谨慎使用) 7.网格算法和穷举法(当重点讨论模型本身而情史算法的时候,可以使用这种暴力方案,最好使用一些高级语言作为编程工具) 8.一些连续离散化方法(很多问题都是从实际来的,数据可以是连续的,而计算机只认得是离散的数据,因此将其离散化后进行差分代替微分、求和代替积分等思想是非常重要的。

机器学习10大算法-周辉

机器学习10大算法 什么是机器学习呢? 从广泛的概念来说,机器学习是人工智能的一个子集。人工智能旨在使计算机更智能化,而机器学习已经证明了如何做到这一点。简而言之,机器学习是人工智能的应用。通过使用从数据中反复学习到的算法,机器学习可以改进计算机的功能,而无需进行明确的编程。 机器学习中的算法有哪些? 如果你是一个数据科学家或机器学习的狂热爱好者,你可以根据机器学习算法的类别来学习。机器学习算法主要有三大类:监督学习、无监督学习和强化学习。 监督学习 使用预定义的“训练示例”集合,训练系统,便于其在新数据被馈送时也能得出结论。系统一直被训练,直到达到所需的精度水平。 无监督学习 给系统一堆无标签数据,它必须自己检测模式和关系。系统要用推断功能来描述未分类数据的模式。 强化学习 强化学习其实是一个连续决策的过程,这个过程有点像有监督学习,只是标注数据不是预先准备好的,而是通过一个过程来回调整,并给出“标注数据”。

机器学习三大类别中常用的算法如下: 1. 线性回归 工作原理:该算法可以按其权重可视化。但问题是,当你无法真正衡量它时,必须通过观察其高度和宽度来做一些猜测。通过这种可视化的分析,可以获取一个结果。 回归线,由Y = a * X + b表示。 Y =因变量;a=斜率;X =自变量;b=截距。 通过减少数据点和回归线间距离的平方差的总和,可以导出系数a和b。 2. 逻辑回归 根据一组独立变量,估计离散值。它通过将数据匹配到logit函数来帮助预测事件。 下列方法用于临时的逻辑回归模型: 添加交互项。 消除功能。 正则化技术。 使用非线性模型。 3. 决策树 利用监督学习算法对问题进行分类。决策树是一种支持工具,它使用树状图来决定决策或可能的后果、机会事件结果、资源成本和实用程序。根据独立变量,将其划分为两个或多个同构集。 决策树的基本原理:根据一些feature 进行分类,每个节点提一个问题,通过判断,将数据分为两类,再继续提问。这些问题是根据已有数据学习出来的,再投

国内外工业机器人品牌盘点

国内外工业机器人品牌盘点 自改革开放以来,全球制造业向中国的迁移造就了我国成为“世界工厂”的局面。但随着国内外经济环境的变化,我国当当前的制造业也面临着前所未有的挑战,劳动力成本的上升、供给的下降、人口红利的消失,以及制造工厂对质量、成本、效率以及安全要求的不断提高,激发着我国企业逐步向自动化的转型。 目前,在全球范围内工业机器人技术日趋成熟,机器人俨然已经成为一种标准设备而在工业自动化行业中被广泛应用,如此一来国内外也产生了一批在较有影响力的知名工业机器人企业。 1. 瑞典ABB机器人 瑞典ABB机器人集团总部位于瑞士苏黎世,是目前世界行最大的机器人制造企业。1974年,ABB机器人成功研发了全球第一台市售全电动微型处理器控制的工业机器人IRB6,主要应用于工件的取放和物料的搬运。一年后,ABB持续发力,又生产出了全球第一台焊接机器人。直至1980年兼并Trallfa喷涂机器人,ABB机器人在产品结构上趋向于完备。 二十世纪末,为了更好的扩张与发展,ABB机器人进军中国市场,于1999年成立上海ABB。上海ABB是ABB在华工业机器人以及系统业务(机器人)、仪器仪表(自动化产品)、变电站自动化系统(电力系统)和集成分析系统(工程自动化)的主要生产基地。 ABB机器人生产的工业机器人主要应用于:焊接、装配、铸造、密封涂胶、材料处理、包装、喷漆、水切割等领域。 2. 德国库卡(KUKA)机器人 德国库卡成立于于1898年,是具有百年历史的知名企业,最初主要专注于室内及城市照明。但不久之后,库卡就涉足至其它领域(焊接工具及设备,大型容器),1966年更是成为了欧洲市政车辆的市场领导者。1973年,库卡研发了名为FAMULUS第一台工业机器人,到了1995年,库卡机器人技术脱离库卡焊接及机器人独立。现今,库卡专注于向工业生产过程提供先进的自动化解决方案。 KUKA库卡机器人(上海)是库卡在德国意外开设的全球首家海外工厂,主要生产库卡工业机器人和控制台,应用于汽车焊接及组建等工序,其产量占据了库卡全球生产总量的三分之一。 库卡机器人主要产品包括:Scara及六轴工业机器人、货盘堆垛机器人、作业机器人、架装式机器人、冲压连线机器人、焊接机器人、净室机器人、机器人系统和单元。

数学建模常用的十种解题方法

数学建模常用的十种解题方法 摘要 当需要从定量的角度分析和研究一个实际问题时,人们就要在深入调查研究、了解对象信息、作出简化假设、分析内在规律等工作的基础上,用数学的符号和语言,把它表述为数学式子,也就是数学模型,然后用通过计算得到的模型结果来解释实际问题,并接受实际的检验。这个建立数学模型的全过程就称为数学建模。数学建模的十种常用方法有蒙特卡罗算法;数据拟合、参数估计、插值等数据处理算法;解决线性规划、整数规划、多元规划、二次规划等规划类问题的数学规划算法;图论算法;动态规划、回溯搜索、分治算法、分支定界等计算机算法;最优化理论的三大非经典算法:模拟退火法、神经网络、遗传算法;网格算法和穷举法;一些连续离散化方法;数值分析算法;图象处理算法。 关键词:数学建模;蒙特卡罗算法;数据处理算法;数学规划算法;图论算法 一、蒙特卡罗算法 蒙特卡罗算法又称随机性模拟算法,是通过计算机仿真来解决问题的算法,同时可以通过模拟可以来检验自己模型的正确性,是比赛时必用的方法。在工程、通讯、金融等技术问题中, 实验数据很难获取, 或实验数据的获取需耗费很多的人力、物力, 对此, 用计算机随机模拟就是最简单、经济、实用的方法; 此外, 对一些复杂的计算问题, 如非线性议程组求解、最优化、积分微分方程及一些偏微分方程的解⑿, 蒙特卡罗方法也是非常有效的。 一般情况下, 蒙特卜罗算法在二重积分中用均匀随机数计算积分比较简单, 但精度不太理想。通过方差分析, 论证了利用有利随机数, 可以使积分计算的精度达到最优。本文给出算例, 并用MA TA LA B 实现。 1蒙特卡罗计算重积分的最简算法-------均匀随机数法 二重积分的蒙特卡罗方法(均匀随机数) 实际计算中常常要遇到如()dxdy y x f D ??,的二重积分, 也常常发现许多时候被积函数的原函数很难求出, 或者原函数根本就不是初等函数, 对于这样的重积分, 可以设计一种蒙特卡罗的方法计算。 定理 1 )1( 设式()y x f ,区域 D 上的有界函数, 用均匀随机数计算()??D dxdy y x f ,的方法: (l) 取一个包含D 的矩形区域Ω,a ≦x ≦b, c ≦y ≦d , 其面积A =(b 一a) (d 一c) ; ()j i y x ,,i=1,…,n 在Ω上的均匀分布随机数列,不妨设()j i y x ,, j=1,…k 为落在D 中的k 个随机数, 则n 充分大时, 有

数据挖掘领域的十大经典算法原理及应用

数据挖掘领域的十大经典算法原理及应用 国际权威的学术组织the IEEE International Conference on Data Mining (ICDM) 2006年12月评选出了数据挖掘领域的十大经典算法:C4.5, k-Means, SVM, Apriori, EM, PageRank, AdaBoost, kNN, Naive Bayes, and CART. 不仅仅是选中的十大算法,其实参加评选的18种算法,实际上随便拿出一种来都可以称得上是经典算法,它们在数据挖掘领域都产生了极为深远的影响。 1.C4.5 C4.5算法是机器学习算法中的一种分类决策树算法,其核心算法是ID3算法.C4.5算法继承了ID3算法的优点,并在以下几方面对ID3算法进行了改进: 1)用信息增益率来选择属性,克服了用信息增益选择属性时偏向选择取值多的属性的不足; 2) 在树构造过程中进行剪枝; 3) 能够完成对连续属性的离散化处理; 4) 能够对不完整数据进行处理。

C4.5算法有如下优点:产生的分类规则易于理解,准确率较高。其缺点是:在构造树的过程中,需要对数据集进行多次的顺序扫描和排序,因而导致算法的低效。 2. The k-means algorithm即K-Means算法 k-means algorithm算法是一个聚类算法,把n的对象根据他们的属性分为k个分割,k < n。它与处理混合正态分布的最大期望算法很相似,因为他们都试图找到数据中自然聚类的中心。它假设对象属性来自于空间向量,并且目标是使各个群组内部的均方误差总和最小。 3. Support vector machines 支持向量机,英文为Support Vector Machine,简称SV 机(论文中一般简称SVM)。它是一种監督式學習的方法,它广泛的应用于统计分类以及回归分析中。支持向量机将向量映射到一个更高维的空间里,在这个空间里建立有一个最大间隔超平面。在分开数据的超平面的两边建有两个互相平行的超平面。分隔超平面使两个平行超平面的距离最大化。假定平行超平面

机器学习十大算法:CART

Chapter10 CART:Classi?cation and Regression Trees Dan Steinberg Contents 10.1Antecedents (180) 10.2Overview (181) 10.3A Running Example (181) 10.4The Algorithm Brie?y Stated (183) 10.5Splitting Rules (185) 10.6Prior Probabilities and Class Balancing (187) 10.7Missing Value Handling (189) 10.8Attribute Importance (190) 10.9Dynamic Feature Construction (191) 10.10Cost-Sensitive Learning (192) 10.11Stopping Rules,Pruning,Tree Sequences,and Tree Selection (193) 10.12Probability Trees (194) 10.13Theoretical Foundations (196) 10.14Post-CART Related Research (196) 10.15Software Availability (198) 10.16Exercises (198) References (199) The1984monograph,“CART:Classi?cation and Regression Trees,”coauthored by Leo Breiman,Jerome Friedman,Richard Olshen,and Charles Stone(BFOS),repre-sents a major milestone in the evolution of arti?cial intelligence,machine learning, nonparametric statistics,and data mining.The work is important for the compre-hensiveness of its study of decision trees,the technical innovations it introduces,its sophisticated examples of tree-structured data analysis,and its authoritative treatment of large sample theory for trees.Since its publication the CART monograph has been cited some3000times according to the science and social science citation indexes; Google Scholar reports about8,450citations.CART citations can be found in almost any domain,with many appearing in?elds such as credit risk,targeted marketing,?-nancial markets modeling,electrical engineering,quality control,biology,chemistry, and clinical medical research.CART has also strongly in?uenced image compression 179

国内外著名工业机器人厂商

国内外工业机器人厂商 在国外,工业机器人技术日趋成熟,已经成为一种标准设备而得到工业界广泛应用,从而也形成了一批在国际上较有影响力的、著名的工业机器人公司。她们包括:瑞典的ABB Robotics,瑞士Staubli公司日本的FANUC、Yaskawa,德国的KUKA Roboter,美国的Adept Technology、American Robot、Emerson Industrial Automation、S-T Robotics,意大利COMAU,英国的AutoTech Robotics,加拿大的Jcd International Robotics,以色列的Robogroup Tek公司,这些公司已经成为其所在地区的支柱性企业。在国内,工业机器人产业刚刚起步,但增长的势头非常强劲。如中国科学院沈阳自动化所投资组建的新松机器人公司,年利润增长在40%左右。 一、国外主要机器人公司 1、瑞典ABB Robotics公司 ABB公司是世界上最大的机器人制造公司。1974年,ABB公司研发了全球第一台全电控式工业机器人-IRB6,主要应用于工件的取放和物料的搬运。1975年,生产出第一台焊接机器人。到1980年兼并Trallfa喷漆机器人公司后,机器人产品趋于完备。至2002年,ABB公司销售的工业机器人已经突破10万台,是世界上第一个突破10万台的厂家。ABB公司制造的工业机器人广泛应用在焊接、装配、铸造、密封涂胶、材料处理、包装、喷漆、水切割等领域。 公司网址:https://www.360docs.net/doc/9712500810.html,/robotics 2、瑞士Staubli公司 史陶比尔将其在机械运动控制方面的经验和优势应用在工业机器人上。先成功开发并生产了以坚固、可靠和修正尺寸而著称的TX、RX系列机器人手臂后,又拥有了高速、精确、安全的新一代SCARARS系列工业机器人。史陶比尔现在的工业机器人与过去相比,具有更快的速度,更高的精度,更好的灵活性和更友好的用户环境。史陶比尔采用了创造性的专利技术,集成了无间隙的齿轮减速系统,结合了高性能的控制器,从而保证了精确的轨迹控制和最佳的过程参数管理。根据各行业的需求而设计出一系列不同应用范围的专业机器人,可以直接集成到各个生产设备中,其主要应用领域包括:镭射和水注入切割,抛光打磨,装配搬运,喷涂,精加工等。 史陶比尔公司为您提供优质的客户服务,以保证*和TX系列机器人销售和售后服务。在史陶比尔杭州公司还设立了亚太地区的配件服务中心。我们还提供包含机器人各个方面的培训:操作员的课程,编程培训,维护培训,其中有专门根据您需要开设的特殊课程。当您在应用开发,编程和系统集成时,我们将给与全部的技术支持。史陶比尔工业机器人凭借其快速、精确和灵活的特点,为您提供完美的解决方案。 公司网址:https://www.360docs.net/doc/9712500810.html,/web/robot/division.nsf 3、日本FANUC公司 FANUC公司的前身致力于数控设备和伺服系统的研制和生产。1972年,从日本富士通公司的计算机控制部门独立出来,成立了FANUC公司。FANUC公司包括两大主要业务,一是工业机器人,二是工厂自动化。2004年,FANUC公司的营业总收入为2648亿日元,其中工业机器人(包括注模机产品)销售收入为1367亿日元,占总收入的51.6%。 其最新开发的工业机器人产品有: (1)R-2000iA系列多功能智能机器人。具有独特的视觉和压力传感器功能,可以将随意堆

数学建模中常见的十大模型

数学建模常用的十大算法==转 (2011-07-24 16:13:14) 转载▼ 1. 蒙特卡罗算法。该算法又称随机性模拟算法,是通过计算机仿真来解决问题的算法,同时可以通过模拟来检验自己模型的正确性,几乎是比赛时必用的方法。 2. 数据拟合、参数估计、插值等数据处理算法。比赛中通常会遇到大量的数据需要处理,而处理数据的关键就在于这些算法,通常使用MA TLAB 作为工具。 3. 线性规划、整数规划、多元规划、二次规划等规划类算法。建模竞赛大多数问题属于最优化问题,很多时候这些问题可以用数学规划算法来描述,通常使用Lindo、Lingo 软件求解。 4. 图论算法。这类算法可以分为很多种,包括最短路、网络流、二分图等算法,涉及到图论的问题可以用这些方法解决,需要认真准备。 5. 动态规划、回溯搜索、分治算法、分支定界等计算机算法。这些算法是算法设计中比较常用的方法,竞赛中很多场合会用到。 6. 最优化理论的三大非经典算法:模拟退火算法、神经网络算法、遗传算法。这些问题是用来解决一些较困难的最优化问题的,对于有些问题非常有帮助,但是算法的实现比较困难,需慎重使用。 7. 网格算法和穷举法。两者都是暴力搜索最优点的算法,在很多竞赛题中有应用,当重点讨论模型本身而轻视算法的时候,可以使用这种暴力方案,最好使用一些高级语言作为编程工具。 8. 一些连续数据离散化方法。很多问题都是实际来的,数据可以是连续的,而计算机只能处理离散的数据,因此将其离散化后进行差分代替微分、求和代替积分等思想是非常重要的。 9. 数值分析算法。如果在比赛中采用高级语言进行编程的话,那些数值分析中常用的算法比如方程组求解、矩阵运算、函数积分等算法就需要额外编写库函数进行调用。 10. 图象处理算法。赛题中有一类问题与图形有关,即使问题与图形无关,论文中也会需要图片来说明问题,这些图形如何展示以及如何处理就是需要解决的问题,通常使用MA TLAB 进行处理。 以下将结合历年的竞赛题,对这十类算法进行详细地说明。 以下将结合历年的竞赛题,对这十类算法进行详细地说明。 2 十类算法的详细说明 2.1 蒙特卡罗算法 大多数建模赛题中都离不开计算机仿真,随机性模拟是非常常见的算法之一。 举个例子就是97 年的A 题,每个零件都有自己的标定值,也都有自己的容差等级,而求解最优的组合方案将要面对着的是一个极其复杂的公式和108 种容差选取方案,根本不可能去求解析解,那如何去找到最优的方案呢?随机性模拟搜索最优方案就是其中的一种方法,在每个零件可行的区间中按照正态分布随机的选取一个标定值和选取一个容差值作为一种方案,然后通过蒙特卡罗算法仿真出大量的方案,从中选取一个最佳的。另一个例子就是去年的彩票第二问,要求设计一种更好的方案,首先方案的优劣取决于很多复杂的因素,同样不可能刻画出一个模型进行求解,只能靠随机仿真模拟。 2.2 数据拟合、参数估计、插值等算法 数据拟合在很多赛题中有应用,与图形处理有关的问题很多与拟合有关系,一个例子就是98 年美国赛A 题,生物组织切片的三维插值处理,94 年A 题逢山开路,山体海拔高度的插值计算,还有吵的沸沸扬扬可能会考的“非典”问题也要用到数据拟合算法,观察数据的

数据挖掘十大待解决问题

数据挖掘领域10大挑战性问题与十大经典算法 2010-04-21 20:05:51| 分类:技术编程| 标签:|字号大中小订阅 作为一个数据挖掘工作者,点可以唔知呢。 数据挖掘领域10大挑战性问题: 1.Developing a Unifying Theory of Data Mining 2.Scaling Up for High Dimensional Data/High Speed Streams 3.Mining Sequence Data and Time Series Data 4.Mining Complex Knowledge from Complex Data 5.Data Mining in a Network Setting 6.Distributed Data Mining and Mining Multi-agent Data 7.Data Mining for Biological and Environmental Problems 8.Data-Mining-Process Related Problems 9.Security, Privacy and Data Integrity 10.Dealing with Non-static, Unbalanced and Cost-sensitive Data 数据挖掘十大经典算法 国际权威的学术组织the IEEE International Conference on Data Mining (ICDM) 2006年12月评选出了数据挖掘领域的十大经典算法:C4.5, k-Means, SVM, Apriori, EM, PageRank, AdaBoost, kNN, Naive Bayes, and CART. 不仅仅是选中的十大算法,其实参加评选的18种算法,实际上随便拿出一种来都可以称得上是经典算法,它们在数据挖掘领域都产生了极为深远的影响。 1. C4.5 C4.5算法是机器学习算法中的一种分类决策树算法,其核心算法是ID3算法. C4.5算法继承了ID3算法的优点,并在以下几方面对ID3算法进行了改进: 1) 用信息增益率来选择属性,克服了用信息增益选择属性时偏向选择取值多的属性的不足; 2) 在树构造过程中进行剪枝; 3) 能够完成对连续属性的离散化处理; 4) 能够对不完整数据进行处理。 C4.5算法有如下优点:产生的分类规则易于理解,准确率较高。其缺点是:在构造树的过程中,需要对数据集进行多次的顺序扫描和排序,因而导致算法的低效。 2. The k-means algorithm 即K-Means算法 k-means algorithm算法是一个聚类算法,把n的对象根据他们的属性分为k个分割,k < n。它与处理混合正态分布的最大期望算法很相似,因为他们都试图找到数据中自然聚类的中心。它假设对象属性来自于空间向量,并且目标是使各个群组内部的均方误差总和最小。 3. Support vector machines 支持向量机,英文为Support Vector Machine,简称SV机(论文中一般简称SVM)。它是一种監督式學習的方法,它广泛的应用于统计分类以及回归分析中。支持向量机将向量映射到一个更高维的空间里,在这个空间里建立有一个最大间隔超平面。在分开数据的超平面的两边建有两个互相平行的超平面。分隔超平面使两个平行超平面的距离最大化。假定平行超平面间的距离或差距越大,分类器的总误差越小。一个极好的指南是C.J.C Burges的《模式识别支持向量机指南》。van der Walt 和Barnard 将支持向量机和其他分类器进行了比较。 4. The Apriori algorithm

机器学习十大算法8:kNN

Chapter8 k NN:k-Nearest Neighbors Michael Steinbach and Pang-Ning Tan Contents 8.1Introduction (151) 8.2Description of the Algorithm (152) 8.2.1High-Level Description (152) 8.2.2Issues (153) 8.2.3Software Implementations (155) 8.3Examples (155) 8.4Advanced Topics (157) 8.5Exercises (158) Acknowledgments (159) References (159) 8.1Introduction One of the simplest and rather trivial classi?ers is the Rote classi?er,which memorizes the entire training data and performs classi?cation only if the attributes of the test object exactly match the attributes of one of the training objects.An obvious problem with this approach is that many test records will not be classi?ed because they do not exactly match any of the training records.Another issue arises when two or more training records have the same attributes but different class labels. A more sophisticated approach,k-nearest neighbor(k NN)classi?cation[10,11,21],?nds a group of k objects in the training set that are closest to the test object,and bases the assignment of a label on the predominance of a particular class in this neighborhood.This addresses the issue that,in many data sets,it is unlikely that one object will exactly match another,as well as the fact that con?icting information about the class of an object may be provided by the objects closest to it.There are several key elements of this approach:(i)the set of labeled objects to be used for evaluating a test object’s class,1(ii)a distance or similarity metric that can be used to compute This need not be the entire training set. 151

十大优秀工业机器人系统集成商分析

十大优秀工业机器人系统集成商 分析 十大优秀工业机器人系统集成商分析 工业机器人产业是一个集系统集成、先进制造和精密配套融合一体的产业,是一个需要技术、制造、研发沉淀经验的行业。从我国机器产业链发展来看,由于受核心技术限制等多方面因素影响,我国工业机器人产业目前获得突破的主要为系统集成领域。国内一些领先企业从集成应用开始,主要借助对国内市场需求、服务等优势,逐渐脱颖而出,取得了不错的市场成绩。笔者对获得2013年十大优秀工业机器人系统集成商的发展概况及主要产品进行了简单归纳分析,以飨读者。 1、佛山市利迅达机器人系统有限公司(简称:利迅达) 佛山市利迅达机器人系统有限公司是从事机器人系统自动化集成和工业智能化设备研发、生产的高科技企业。公司筹备于2008年,于2010年4月正式成立,经过数年迅猛增长,已发展成为华南地区乃至国内规模最大,实力最强的专业工业机器人应用系统集成商。

利迅达与欧州多家高技术企业的机器人系统研发生产企业战略合作,令利迅达由一开始就在一个国际级的高起点上,再根据中国市场实际,研发出一系列具自有知识产权的全新意念的金属产品表面处理综合系统。其中“机器人打磨拉丝 系统”被评为2011年广东省高新技术产品;“机器人智能化焊接系统”被评为2012年广东省高新技术产品。公司为顺德区百家智能制造工程试点示范企业,在2013年被认定为国家级高新技术企业。 2、厦门思尔特机器人系统有限公司(简称:思尔特) 思尔特创建于2004年6月,位于厦门集美灌南工业区,是厦门市高新技术企业。思尔特多年来为中联、徐工、柳工、厦工、龙工、玉柴等多家国内大中型企业服务,设计制造出技术先进的机器人系统。 2009年,思尔特在上海成立全资子公司上海思尔特机器人科技有限公司,针对冲压机、折弯机、压铸机、弯管机、热锻机等机床的自动上下料生产线的研发、设计、制造。 2010年,思尔特决定打造西南区制造基地,于2010年7月注册成立全资子公司成都思尔特机器人科技有限公司。成都思尔特是西南地区首家专业机器人系统集成商,具有年集成200套机器人系统的能力,主营方向为汽车零部件及薄板焊接的机器人应用。 3、无锡丹佛数控装备机械科技有限公司(简称:丹佛) 无锡丹佛数控装备机械科技有限公司成立于2010年,现阶段主要经营项目分别为:abb工业机器人、韩国现代工业机器人、焊接机器人、搬运机器人、涂装机器人、机床上下料机器人、码垛机器人、焊接机器人、机器人取毛刺等等,同时为客户提供夹具设计制造及交钥匙工程。 丹佛又与几家大型的融资企业签订战略合作合伙,为那些有订单有市场而没有太多

十 大 经 典 排 序 算 法 总 结 超 详 细

数据挖掘十大经典算法,你都知道哪些? 当前时代大数据炙手可热,数据挖掘也是人人有所耳闻,但是关于数据挖掘更具体的算法,外行人了解的就少之甚少了。 数据挖掘主要分为分类算法,聚类算法和关联规则三大类,这三类基本上涵盖了目前商业市场对算法的所有需求。而这三类里又包含许多经典算法。而今天,小编就给大家介绍下数据挖掘中最经典的十大算法,希望它对你有所帮助。 一、分类决策树算法C4.5 C4.5,是机器学习算法中的一种分类决策树算法,它是决策树(决策树,就是做决策的节点间的组织方式像一棵倒栽树)核心算法ID3的改进算法,C4.5相比于ID3改进的地方有: 1、用信息增益率选择属性 ID3选择属性用的是子树的信息增益,这里可以用很多方法来定义信息,ID3使用的是熵(shang),一种不纯度度量准则,也就是熵的变化值,而 C4.5用的是信息增益率。区别就在于一个是信息增益,一个是信息增益率。 2、在树构造过程中进行剪枝,在构造决策树的时候,那些挂着几个元素的节点,不考虑最好,不然容易导致过拟。 3、能对非离散数据和不完整数据进行处理。 该算法适用于临床决策、生产制造、文档分析、生物信息学、空间数据建模等领域。 二、K平均算法

K平均算法(k-means algorithm)是一个聚类算法,把n个分类对象根据它们的属性分为k类(kn)。它与处理混合正态分布的最大期望算法相似,因为他们都试图找到数据中的自然聚类中心。它假设对象属性来自于空间向量,并且目标是使各个群组内部的均方误差总和最小。 从算法的表现上来说,它并不保证一定得到全局最优解,最终解的质量很大程度上取决于初始化的分组。由于该算法的速度很快,因此常用的一种方法是多次运行k平均算法,选择最优解。 k-Means 算法常用于图片分割、归类商品和分析客户。 三、支持向量机算法 支持向量机(Support Vector Machine)算法,简记为SVM,是一种监督式学习的方法,广泛用于统计分类以及回归分析中。 SVM的主要思想可以概括为两点: (1)它是针对线性可分情况进行分析,对于线性不可分的情况,通过使用非线性映射算法将低维输入空间线性不可分的样本转化为高维特征空间使其线性可分; (2)它基于结构风险最小化理论之上,在特征空间中建构最优分割超平面,使得学习器得到全局最优化,并且在整个样本空间的期望风险以某个概率满足一定上界。 四、The Apriori algorithm Apriori算法是一种最有影响的挖掘布尔关联规则频繁项集的算法,其核心是基于两阶段“频繁项集”思想的递推算法。其涉及到的关联规则在分类上属于单维、单层、布尔关联规则。在这里,所有支持度大于最小支

人工智能之机器学习常见算法

人工智能之机器学习常见算法 摘要机器学习无疑是当前数据分析领域的一个热点内容。很多人在平时的工作中都或多或少会用到机器学习的算法。这里小编为您总结一下常见的机器学习算法,以供您在工作和学习中参考。 机器学习的算法很多。很多时候困惑人们都是,很多算法是一类算法,而有些算法又是从其他算法中延伸出来的。这里,我们从两个方面来给大家介绍,第一个方面是学习的方式,第二个方面是算法的类似性。 学习方式 根据数据类型的不同,对一个问题的建模有不同的方式。在机器学习或者人工智能领域,人们首先会考虑算法的学习方式。在机器学习领域,有几种主要的学习方式。将算法按照学习方式分类是一个不错的想法,这样可以让人们在建模和算法选择的时候考虑能根据输入数据来选择最合适的算法来获得最好的结果。 监督式学习: 在监督式学习下,输入数据被称为训练数据,每组训练数据有一个明确的标识或结果,如对防垃圾邮件系统中垃圾邮件非垃圾邮件,对手写数字识别中的1,2,3,4等。在建立预测模型的时候,监督式学习建立一个学习过程,将预测结果与训练数据的实际结果进行比较,不断的调整预测模型,直到模型的预测结果达到一个预期的准确率。监督式学习的常见应用场景如分类问题和回归问题。常见算法有逻辑回归(LogisTIc Regression)和反向传递神经网络(Back PropagaTIon Neural Network) 非监督式学习: 在非监督式学习中,数据并不被特别标识,学习模型是为了推断出数据的一些内在结构。常见的应用场景包括关联规则的学习以及聚类等。常见算法包括Apriori算法以及k-Means 算法。 半监督式学习: 在此学习方式下,输入数据部分被标识,部分没有被标识,这种学习模型可以用来进行预

数学建模十种常用算法

数学建模有下面十种常用算法, 可供参考: 1.蒙特卡罗算法(该算法又称随机性模拟算法,是通过计算机仿真来解决问 题的算法,同时可以通过模拟可以来检验自己模型的正确性,是比赛时必用的方法) 2.数据拟合、参数估计、插值等数据处理算法(比赛中通常会遇到大量的数 据需要处理,而处理数据的关键就在于这些算法,通常使用Matlab作为工具) 3.线性规划、整数规划、多元规划、二次规划等规划类问题(建模竞赛大多 数问题属于最优化问题,很多时候这些问题可以用数学规划算法来描述,通常使用Lindo、Lingo软件实现) 4.图论算法(这类算法可以分为很多种,包括最短路、网络流、二分图等算 法,涉及到图论的问题可以用这些方法解决,需要认真准备) 5.动态规划、回溯搜索、分治算法、分支定界等计算机算法(这些算法是算 法设计中比较常用的方法,很多场合可以用到竞赛中) 6.最优化理论的三大非经典算法:模拟退火法、神经网络、遗传算法(这些 问题是用来解决一些较困难的最优化问题的算法,对于有些问题非常有帮助,但是算法的实现比较困难,需慎重使用) 7.网格算法和穷举法(网格算法和穷举法都是暴力搜索最优点的算法,在很 多竞赛题中有应用,当重点讨论模型本身而轻视算法的时候,可以使用这种暴力方案,最好使用一些高级语言作为编程工具) 8.一些连续离散化方法(很多问题都是实际来的,数据可以是连续的,而计 算机只认的是离散的数据,因此将其离散化后进行差分代替微分、求和代替积分等思想是非常重要的) 9.数值分析算法(如果在比赛中采用高级语言进行编程的话,那一些数值分 析中常用的算法比如方程组求解、矩阵运算、函数积分等算法就需要额外编写库函数进行调用) 10.图象处理算法(赛题中有一类问题与图形有关,即使与图形无关,论文中 也应该要不乏图片的,这些图形如何展示以及如何处理就是需要解决的问题,通常使用Matlab 进行处理)

全球十大工业机器人品牌

全球十大工业机器人品牌 随着智能装备得发展,机器人在工业制造中得优势越来越显着,机器人企业也如雨后春笋般得出现。然而占据主导地位得还就是那些龙头企业。 1、发那科(FANUC) FANUC(发那科)就是日本一家专门研究数控系统得公司,成立于1956年。就是世界上最大得专业数控系统生产厂家,占据了全球70%得市场份额。FANUC1959年首先推出了电液步进电机,在后来得若干年中逐步发展并完善了以硬件为主得开环数控系统。进入70年代,微电子技术、功率电子技术,尤其就是计算技术得到了飞速发展,FANUC公司毅然舍弃了使其发家得电液步进电机数控产品,一方面从GETTES公司引进直流伺服电机制造技术。 1976年FANUC公司研制成功数控系统5,随后又与SIEMENS公司联合研制了具有先进水平得数控系统7,从这时起,FANUC公司逐步发展成为世界上最大得专业数控系统生产厂家。 自1974年,FANUC首台机器人问世以来,FANUC致力于机器人技术上得领先与创新,就是世界上唯一一家由机器人来做机器人得公司,就是世界上唯一提供集成视觉系统得机器人企业,就是世界上唯一一家既提供智能机器人又提供智能机器得公司。FANUC机器人产品

系列多达240种,负重从0、5公斤到1、35吨,广泛应用在装配、搬运、焊接、铸造、喷涂、码垛等不同生产环节,满足客户得不同需求。 2008年6月,FANUC成为世界第一个突破20万台机器人得厂家;2011年,FANUC全球机器人装机量已超25万台,市场份额稳居第一。 2、库卡(KUKA) 库卡(KUKA)及其德国母公司就是世界工业机器人与自动控制系统领域得顶尖制造商,它于1898年在德国奥格斯堡成立,当时称“克勒与克纳皮赫奥格斯堡(KellerundKnappichAu gsburg)”。公司得名字KUKA,就就是KellerundKnappichAugsburg得四个首字母组合。在1995年KUKA公司分为KUKA机器人公司与KUKA库卡焊接设备有限公司(即现在得K UKA制造系统),2011年3月中国公司更名为:库卡机器人(上海)有限公司。 KUKA产品广泛应用于汽车、冶金、食品与塑料成形等行业。KUKA机器人公司在全球拥有20多个子公司,其中大部分就是销售与服务中心。KUKA在全球得运营点有:美国,墨西哥,巴西,日本,韩国,台湾,印度与欧洲各国。

数学建模中常见的十大模型讲课稿

数学建模中常见的十 大模型

精品文档 数学建模常用的十大算法==转 (2011-07-24 16:13:14) 转载▼ 1. 蒙特卡罗算法。该算法又称随机性模拟算法,是通过计算机仿真来解决问题的算法,同时可以通过模拟来检验自己模型的正确性,几乎是比赛时必用的方法。 2. 数据拟合、参数估计、插值等数据处理算法。比赛中通常会遇到大量的数据需要处理,而处理数据的关键就在于这些算法,通常使用MA TLAB 作为工具。 3. 线性规划、整数规划、多元规划、二次规划等规划类算法。建模竞赛大多数问题属于最优化问题,很多时候这些问题可以用数学规划算法来描述,通常使用Lindo、Lingo 软件求解。 4. 图论算法。这类算法可以分为很多种,包括最短路、网络流、二分图等算法,涉及到图论的问题可以用这些方法解决,需要认真准备。 5. 动态规划、回溯搜索、分治算法、分支定界等计算机算法。这些算法是算法设计中比较常用的方法,竞赛中很多场合会用到。 6. 最优化理论的三大非经典算法:模拟退火算法、神经网络算法、遗传算法。这些问题是用来解决一些较困难的最优化问题的,对于有些问题非常有帮助,但是算法的实现比较困难,需慎重使用。 7. 网格算法和穷举法。两者都是暴力搜索最优点的算法,在很多竞赛题中有应用,当重点讨论模型本身而轻视算法的时候,可以使用这种暴力方案,最好使用一些高级语言作为编程工具。 8. 一些连续数据离散化方法。很多问题都是实际来的,数据可以是连续的,而计算机只能处理离散的数据,因此将其离散化后进行差分代替微分、求和代替积分等思想是非常重要的。 9. 数值分析算法。如果在比赛中采用高级语言进行编程的话,那些数值分析中常用的算法比如方程组求解、矩阵运算、函数积分等算法就需要额外编写库函数进行调用。 10. 图象处理算法。赛题中有一类问题与图形有关,即使问题与图形无关,论文中也会需要图片来说明问题,这些图形如何展示以及如何处理就是需要解决的问题,通常使用MATLAB 进行处理。 以下将结合历年的竞赛题,对这十类算法进行详细地说明。 以下将结合历年的竞赛题,对这十类算法进行详细地说明。 2 十类算法的详细说明 2.1 蒙特卡罗算法 大多数建模赛题中都离不开计算机仿真,随机性模拟是非常常见的算法之一。 举个例子就是97 年的A 题,每个零件都有自己的标定值,也都有自己的容差等级,而求解最优的组合方案将要面对着的是一个极其复杂的公式和108 种容差选取方案,根本不可能去求解析解,那如何去找到最优的方案呢?随机性模拟搜索最优方案就是其中的一种方法,在每个零件可行的区间中按照正态分布随机的选取一个标定值和选取一个容差值作为一种方案,然后通过蒙特卡罗算法仿真出大量的方案,从中选取一个最佳的。另一个例子就是去年的彩票第二问,要求设计一种更好的方案,首先方案的优劣取决于很多复杂的因素,同样不可能刻画出一个模型进行求解,只能靠随机仿真模拟。 2.2 数据拟合、参数估计、插值等算法 数据拟合在很多赛题中有应用,与图形处理有关的问题很多与拟合有关系,一个例子就是98 年美国赛A 题,生物组织切片的三维插值处理,94 年A 题逢山开路,山体海拔高度的 收集于网络,如有侵权请联系管理员删除